What Is Serverless Computing? When Should You Use It?

Learn what serverless computing is, its benefits, and when to use it. Explore AWS Lambda, Azure Functions, and when serverless architecture makes sense.

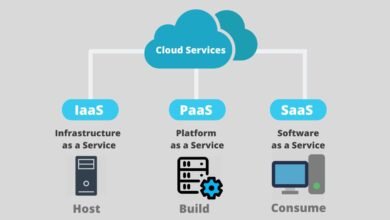

Serverless computing has revolutionized the way developers approach application development and deployment in the cloud. Despite its misleading name, serverless architecture doesn’t eliminate servers—rather, it abstracts server management away from developers, allowing them to focus exclusively on writing application code. A cloud service provider handles all backend infrastructure responsibilities, including server provisioning, scaling, patching, monitoring, and maintenance. This paradigm shift represents one of the most significant transformations in cloud computing since the advent of Infrastructure as a Service (IaaS).

In a serverless model, developers deploy code as discrete functions that remain dormant until triggered by specific events, such as HTTP requests, file uploads, database changes, or scheduled timers. When an event occurs, the cloud provider automatically allocates computing resources, executes the function, and releases resources immediately upon completion.
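As a rough illustration of this model, the sketch below shows what a single event-triggered function might look like in Python. The `handler(event, context)` signature follows the convention AWS Lambda uses for Python functions. The event shape (a JSON string under `body`, as an HTTP request arriving through an API gateway might look) and the greeting logic are assumptions made for this example.

```python
import json


def handler(event, context):
    """Entry point the platform would call once per triggering event."""
    # HTTP-style events are assumed to carry the request payload as a JSON string.
    body = json.loads(event.get("body") or "{}")
    name = body.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local simulation of one invocation; no cloud account is needed.
    fake_event = {"body": json.dumps({"name": "Ada"})}
    print(handler(fake_event, None))
```

Running the file locally simulates one invocation. On a real platform the provider calls `handler` itself each time the trigger fires.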

Users are charged only for the invocations they make and the time their functions actually run, which makes this approach cost-effective for applications with variable, unpredictable, or intermittent workloads. The serverless market continues to grow, with major cloud providers including Amazon Web Services (AWS Lambda), Microsoft Azure (Azure Functions), and Google Cloud offering mature serverless platforms. This guide explores the fundamental concepts of serverless architecture, examines practical use cases, weighs advantages and disadvantages, and offers guidance on deciding whether serverless solutions fit your organization’s needs and application requirements.

Serverless Computing: Core Concepts and Definition

Serverless computing represents a cloud-native development model where developers build and execute applications without managing underlying infrastructure or server administration. Despite the name suggesting the complete absence of servers, the term refers specifically to the developer’s perspective—physical servers exist but remain completely abstracted from the development process. In a serverless architecture, a cloud provider assumes responsibility for all infrastructure management tasks, including hardware provisioning, operating system maintenance, security patches, and automatic scaling.

The term “serverless” encompasses various cloud services beyond simple Function as a Service (FaaS). While FaaS remains the core component, serverless computing extends to serverless databases, serverless containers, backend-as-a-service (BaaS), and other managed services. The fundamental principle underlying all serverless services is event-driven execution: code runs exclusively when triggered by specific events, eliminating idle compute capacity and wasted resources.

This event-driven approach enables automatic scaling without any manual configuration, as the platform instantly provisions or releases resources based on demand. Developers write discrete, stateless functions that execute independently, each performing a specific business logic operation. The combination of event-driven execution, automatic scaling, and pay-per-use billing creates a fundamentally different operational model compared to traditional server-based architectures.

Key Advantages of Serverless Computing for Modern Applications

- Serverless computing delivers substantial benefits that make it increasingly attractive for organizations seeking to modernize their application infrastructure. The most compelling advantage is cost-efficiency through the pay-per-use model: organizations pay only for actual function execution time, measured in milliseconds, and incur no charges for idle capacity. Unlike traditional cloud infrastructure, which requires payment for reserved resources regardless of utilization, serverless platforms align costs directly with usage patterns. For applications with variable or unpredictable traffic, this can produce large savings (reductions of 30%-50% in operational expenses are commonly cited), although the actual figure depends on the workload.

- Automatic scaling represents another transformative advantage, as serverless architecture seamlessly handles traffic spikes without manual intervention. The platform instantly provisions additional resources during demand surges and scales down to zero during quiet periods, maintaining consistent performance while minimizing costs. This elastic scalability eliminates the capacity planning challenges that plague traditional approaches, where developers must estimate future traffic and reserve resources accordingly. Reduced operational complexity frees developers from infrastructure management tasks, allowing them to focus exclusively on business logic and application features.

By delegating server provisioning, monitoring, patching, and maintenance to the cloud provider, organizations significantly decrease DevOps overhead and enable development teams to concentrate on writing quality code. Faster time-to-market emerges naturally from reduced complexity, as developers avoid infrastructure setup delays and focus entirely on application development. Improved scalability handles explosive growth seamlessly—applications effortlessly accommodate millions of concurrent requests without architectural modifications. Additionally, serverless computing enhances high availability and reliability, as cloud providers manage redundancy, failover, and disaster recovery automatically.

Serverless Computing Costs and Pricing Models

Serverless pricing fundamentally differs from traditional cloud computing models, implementing a consumption-based approach that aligns expenses precisely with actual usage. AWS Lambda, the pioneering serverless platform, charges based on two primary factors: the number of function invocations (requests) and execution duration measured in milliseconds. AWS Lambda pricing includes 1 million free requests monthly, then charges $0.20 per million additional requests, while execution time costs $0.0000166667 per GB-second of consumed resources. Azure Functions offers similarly competitive pricing, granting 1 million free executions monthly with comparable per-invocation and resource consumption rates.
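To make the arithmetic concrete, here is a minimal sketch of a monthly cost estimate using the Lambda rates quoted above. The function name `monthly_cost`, the sample workload, and the `free_gb_seconds` parameter (a placeholder for any free compute allowance a provider may offer, defaulting to zero) are assumptions for illustration. The calculation relies on compute being billed as duration multiplied by configured memory.

```python
REQUEST_PRICE_PER_MILLION = 0.20   # USD, rate quoted in the text
GB_SECOND_PRICE = 0.0000166667     # USD per GB-second, rate quoted in the text
FREE_REQUESTS = 1_000_000          # free requests per month, as quoted


def monthly_cost(invocations, avg_duration_ms, memory_mb, free_gb_seconds=0.0):
    """Estimate a monthly bill in USD for one function."""
    billable_requests = max(0, invocations - FREE_REQUESTS)
    request_cost = billable_requests / 1_000_000 * REQUEST_PRICE_PER_MILLION

    # Compute is billed as seconds of execution times gigabytes of configured memory.
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    compute_cost = max(0.0, gb_seconds - free_gb_seconds) * GB_SECOND_PRICE

    return round(request_cost + compute_cost, 2)


if __name__ == "__main__":
    # Hypothetical workload: 5 million calls, 200 ms each, 512 MB configured.
    print(monthly_cost(5_000_000, 200, 512))  # 9.13
```

In this hypothetical workload, compute (about $8.33) dominates the request charge ($0.80), which is why duration and memory settings usually matter more than raw request counts.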

The pay-per-use model provides exceptional cost advantages for applications with fluctuating demand. A mobile application experiencing zero traffic at 2 AM incurs zero compute costs, while traffic spikes during peak hours are handled automatically without paying for reserved capacity. However, cost considerations require careful evaluation: applications with consistent, high-volume, predictable traffic may achieve better cost efficiency through reserved instances or dedicated servers.

Cold starts—the latency incurred when the platform provisions a new execution environment for a function—can increase costs and degrade performance for latency-sensitive applications. Organizations should monitor serverless execution metrics closely and implement usage alerts to prevent unexpected cost spikes from viral features or unanticipated traffic surges. Memory allocation significantly impacts serverless expenses, as selecting excessive memory increases per-millisecond costs unnecessarily. Proper function optimization and right-sizing memory allocation through tools like AWS Lambda Power Tuning substantially reduce overall costs.
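The right-sizing idea can be sketched as a small comparison: measure the average duration at several memory settings, then pick the setting with the lowest cost per invocation. The duration measurements below are invented for illustration, and the helper names are hypothetical. This only mirrors the concept behind tuning tools and is not how any particular tool is implemented.

```python
GB_SECOND_PRICE = 0.0000166667  # USD per GB-second, rate quoted in the text


def cost_per_million(memory_mb, duration_ms):
    """Compute cost in USD for one million invocations at a given setting."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * GB_SECOND_PRICE * 1_000_000


def cheapest_memory(measurements):
    """Return the memory size (MB) with the lowest compute cost.

    `measurements` maps memory size in MB to measured average duration in ms.
    """
    return min(measurements, key=lambda mb: cost_per_million(mb, measurements[mb]))


if __name__ == "__main__":
    # Hypothetical measurements: more memory speeds the function up, but only to a point.
    measured = {128: 900, 256: 400, 512: 210, 1024: 200}
    for mb, ms in measured.items():
        print(f"{mb:>5} MB  {ms:>4} ms  ${cost_per_million(mb, ms):.2f} per million")
    print("cheapest:", cheapest_memory(measured))  # cheapest: 256
```

In this made-up data set, doubling memory from 512 MB to 1024 MB barely shortens the run time, so the larger setting nearly doubles the cost for no real benefit.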

Serverless Architecture vs. Traditional Server-Based Models

Comparing serverless computing against traditional server-based architectures reveals fundamental operational differences. Traditional models require purchasing or reserving server capacity upfront, provisioning operating systems, managing security updates, monitoring performance, and handling scaling decisions manually. Developers must estimate anticipated traffic, reserve corresponding resources, and maintain infrastructure continuously—even during periods of minimal usage. This approach demands significant DevOps expertise, increases operational overhead, and creates rigid scaling constraints.

Serverless architecture inverts this model entirely. Infrastructure provisioning happens automatically in response to demand, with resources allocated per request and billed at millisecond granularity. There are no operating systems to manage, no patches to apply, and no servers to monitor; the cloud provider handles all these responsibilities. Developers write discrete, event-driven functions without considering infrastructure concerns. Most scaling concerns disappear as well, since serverless platforms can handle thousands or millions of requests, subject to the provider’s concurrency limits.

For applications with variable, unpredictable, or bursty traffic patterns, serverless computing provides superior cost efficiency and operational simplicity. However, traditional approaches excel for applications with sustained, predictable, high-volume traffic where reserved instances provide better economies of scale. Hybrid architectures combining both approaches prove optimal for many organizations: running steady-state workloads on traditional infrastructure while reserving serverless services for event-driven, variable-load components.
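One way to reason about this trade-off is a break-even estimate: the monthly invocation count at which pay-per-use charges equal the price of an always-on server. The sketch below uses the Lambda rates quoted earlier in this article and ignores free tiers. The $30 monthly server price and the workload profile are hypothetical, and the comparison assumes the server could actually carry that load.

```python
REQUEST_PRICE = 0.20 / 1_000_000   # USD per request, from the quoted rate
GB_SECOND_PRICE = 0.0000166667     # USD per GB-second, from the quoted rate


def cost_per_invocation(avg_duration_ms, memory_mb):
    """Pay-per-use cost in USD of a single invocation (free tier ignored)."""
    gb_seconds = (avg_duration_ms / 1000) * (memory_mb / 1024)
    return REQUEST_PRICE + gb_seconds * GB_SECOND_PRICE


def break_even_invocations(fixed_monthly_cost, avg_duration_ms, memory_mb):
    """Monthly invocations at which serverless cost equals a fixed server cost."""
    return fixed_monthly_cost / cost_per_invocation(avg_duration_ms, memory_mb)


if __name__ == "__main__":
    # Hypothetical inputs: a $30/month server versus 200 ms calls at 512 MB.
    n = break_even_invocations(30.0, 200, 512)
    print(f"Break-even at roughly {n:,.0f} invocations per month")
```

With these assumed inputs the break-even lands at roughly 16 million invocations per month. Below that volume the pay-per-use model is cheaper, and above it the fixed-price server starts to win.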

Function as a Service (FaaS): The Heart of Serverless Architecture

- Function as a Service (FaaS) is the core technology behind serverless computing, and the two terms are often used interchangeably even though serverless is the broader category. FaaS allows developers to deploy small, self-contained blocks of code (functions) that execute independently in response to specific triggers. Each function encapsulates a single business operation: it receives input data, performs processing, and returns results. The FaaS provider manages the complete execution environment, handling resource allocation, scaling, failure recovery, and monitoring automatically.

- Event-driven functions respond to diverse trigger types: HTTP requests arrive through API gateways, database changes trigger functions, file uploads initiate processing, timers schedule periodic execution, and messaging systems invoke functions when messages arrive. This flexibility enables sophisticated application architectures where discrete services communicate asynchronously through events. Stateless function design remains critical in serverless architecture, as functions must not rely on local state or assume they execute on the same physical server.

Each invocation may execute on different infrastructure, so persistent data must be stored externally in databases or object storage services. Cold starts, the latency incurred while a new execution environment is provisioned, are a notable FaaS limitation, particularly for functions invoked infrequently. This latency can reach seconds for languages with heavy runtime initialization such as Java, whereas Node.js and Python typically exhibit much shorter cold starts.
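The stateless pattern can be sketched as follows: the function keeps nothing in local variables between calls and instead reads and writes an external store. `InMemoryStore` is a hypothetical stand-in for a real database or object store so the example runs locally, and `make_handler` is likewise an illustrative helper rather than part of any platform API.

```python
class InMemoryStore:
    """Stand-in for an external database or object store (hypothetical)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=0):
        return self._data.get(key, default)

    def put(self, key, value):
        self._data[key] = value


def make_handler(store):
    """Build a handler whose only state lives in the external store."""

    def handler(event, context):
        user = event["user_id"]
        visits = store.get(user) + 1   # read state from outside the function
        store.put(user, visits)        # write it back before returning
        return {"user_id": user, "visits": visits}

    return handler


if __name__ == "__main__":
    shared = InMemoryStore()
    # Two handlers simulate two separate execution environments.
    env_a, env_b = make_handler(shared), make_handler(shared)
    print(env_a({"user_id": "u1"}, None))  # {'user_id': 'u1', 'visits': 1}
    print(env_b({"user_id": "u1"}, None))  # {'user_id': 'u1', 'visits': 2}
```

Because the count lives in the shared store, the second environment sees the first one's update. A production version would also need an atomic increment in the real datastore to stay correct under concurrent invocations.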

When to Use Serverless Computing: Ideal Use Cases

- Serverless computing excels for specific application patterns and use cases where its strengths provide clear advantages. Event-driven applications represent the quintessential serverless use case—applications responding to discrete events like file uploads, database modifications, or user actions. Image processing pipelines triggered by photo uploads to cloud storage exemplify perfect serverless scenarios. REST APIs built with serverless functions and API gateways provide cost-effective web service backends, particularly for APIs with variable traffic patterns. Data processing pipelines leveraging serverless functions process information in the background—data migrations, log analysis, and batch transformations execute efficiently without maintaining idle infrastructure.

- Microservices architectures benefit substantially from serverless platforms, as each discrete service scales independently based on its specific demand patterns. Real-time file processing handles tasks like image resizing, video transcoding, and document conversion, triggered automatically when files arrive. Scheduled jobs and background tasks such as database cleanup, log analysis, or periodic data synchronization execute on fixed schedules without requiring constantly-running servers.

- Mobile and web application backends leverage serverless platforms to handle variable request loads efficiently. Internet of Things (IoT) applications process massive volumes of sensor data generated sporadically, with serverless architecture providing natural elasticity for unpredictable traffic patterns. Machine learning and artificial intelligence workloads benefit from serverless computing, particularly for inference operations triggered by user requests, enabling event-driven AI where intelligence informs real-time decision-making.
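The image-processing scenario mentioned above might start from a handler like the one sketched here. It reads the bucket name and object key from an event shaped like an Amazon S3 upload notification, where keys arrive URL-encoded. The actual download, resize, and upload steps are left out, and `thumbnail_key` and the `thumbnails/` prefix are hypothetical names chosen for this example.

```python
from urllib.parse import unquote_plus


def thumbnail_key(key):
    """Hypothetical naming rule for where a resized copy would be written."""
    return f"thumbnails/{key}"


def handler(event, context):
    """Collect the uploads named in an S3-style event and plan their outputs."""
    processed = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 notifications are URL-encoded (spaces arrive as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        # A real function would download the object, resize it, and upload the result here.
        processed.append({"bucket": bucket, "source": key, "target": thumbnail_key(key)})
    return {"processed": processed}


if __name__ == "__main__":
    sample_event = {
        "Records": [
            {"s3": {"bucket": {"name": "photo-uploads"},
                    "object": {"key": "albums/my+cat.jpg"}}}
        ]
    }
    print(handler(sample_event, None))
```

A single event can carry several records, which is why the handler loops over `Records` rather than assuming one upload per invocation.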

When NOT to Use Serverless Computing: Limitations and Constraints

Despite significant advantages, serverless computing has notable limitations that require careful consideration. Long-running processes are a fundamental mismatch with serverless architecture: functions have execution time limits (a maximum of 15 minutes on AWS Lambda), making them unsuitable for batch operations that exceed these thresholds. Sustained, predictable workloads with constant traffic benefit more from reserved instances, which offer better cost efficiency than serverless pay-per-execution pricing.

- Cold start latency creates problems for latency-sensitive applications requiring consistent, minimal response times. Complex state management and stateful operations conflict with the stateless function design philosophy, though durable function extensions partially address this limitation. Vendor lock-in emerges as serverless platforms use proprietary technologies and APIs, making migration to alternative providers difficult and costly.

- Debugging and monitoring serverless applications present challenges compared to traditional approaches. Distributed function execution across multiple servers complicates troubleshooting and performance analysis. Cost predictability becomes problematic when serverless function invocations scale unexpectedly, creating surprise billing despite cost-efficiency benefits at normal volumes. Resource constraints on individual functions—memory limits, timeout constraints, and concurrency throttling—prevent workloads requiring extensive compute resources from utilizing serverless platforms. Organizations should evaluate these limitations carefully against their specific requirements before committing to serverless architecture exclusively.
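A common way to live within the execution time limit is to process work in chunks and stop before the deadline, returning a cursor so a later invocation can resume. The sketch below assumes the Lambda-style context method `get_remaining_time_in_millis()`. `FakeContext`, `process`, the event fields, and the 10-second safety margin are hypothetical choices made so the example runs locally.

```python
SAFETY_MARGIN_MS = 10_000  # stop early, leaving time to hand off progress


def process(item):
    """Hypothetical unit of work; replace with real processing."""
    return item.upper()


def handler(event, context):
    """Process items until done or until the time budget runs low."""
    items = event["items"]
    cursor = event.get("cursor", 0)
    results = []
    while cursor < len(items):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            break  # not enough time left; let the next invocation continue
        results.append(process(items[cursor]))
        cursor += 1
    return {"results": results, "cursor": cursor, "done": cursor >= len(items)}


class FakeContext:
    """Local stand-in that replays a scripted sequence of remaining times."""

    def __init__(self, remaining_ms):
        self._remaining = iter(remaining_ms)

    def get_remaining_time_in_millis(self):
        return next(self._remaining)


if __name__ == "__main__":
    first = handler({"items": list("abcd")}, FakeContext([60_000, 30_000, 5_000]))
    print(first)   # {'results': ['A', 'B'], 'cursor': 2, 'done': False}
    second = handler({"items": list("abcd"), "cursor": first["cursor"]},
                     FakeContext([60_000, 60_000]))
    print(second)  # {'results': ['C', 'D'], 'cursor': 4, 'done': True}
```

How the follow-up invocation gets triggered (a queue message, a workflow service, or a re-invocation) is a separate design decision that this sketch leaves open.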

AWS Lambda Leading the Serverless Computing Market

- AWS Lambda pioneered Function as a Service (FaaS) in 2014 and remains the dominant serverless platform globally. Lambda executes code in response to diverse event triggers—HTTP requests via API Gateway, data changes in DynamoDB, file uploads to S3, or events from SNS topics. The platform provides exceptional scalability, automatically scaling from zero to thousands of concurrent executions without manual configuration. AWS Lambda pricing charges $0.20 per million requests plus $0.0000166667 per GB-second of execution, with 1 million free requests monthly, making it cost-effective for variable workloads.

- Lambda strengths include deep integration with AWS services, extensive programming language support (Python, Node.js, Java, C#, Ruby, Go), and a mature ecosystem of tools and libraries. Lambda Layers enable code reuse across functions, while Lambda Extensions facilitate monitoring and security tool integration. However, Lambda limitations include cold start latency for infrequently invoked functions, execution time limits (15 minutes), and memory constraints (up to 10 GB).

Organizations heavily invested in the AWS ecosystem find Lambda particularly attractive due to seamless integration with storage services, databases, and other AWS offerings. For developers prioritizing serverless computing with maximum flexibility and AWS ecosystem integration, AWS Lambda represents the market-leading choice.

Azure Functions: Microsoft’s Serverless Computing Solution

- Azure Functions, launched by Microsoft in 2016, provides serverless computing capabilities within the Azure ecosystem. Azure Functions supports diverse hosting plans—the Consumption plan offers true serverless pay-per-use pricing, while Premium and App Service plans provide alternative deployment options. The platform charges similar rates to Lambda: 1 million free executions monthly, then $0.20 per million invocations, with execution duration costs of $0.000016 per GB-second.

- Azure Functions’ strengths include Durable Functions, an extension enabling stateful workflows within a serverless environment, a capability that AWS users typically obtain from a separate orchestration service such as AWS Step Functions rather than from Lambda itself. Triggers and bindings simplify integration with Azure services like Event Hubs, Cosmos DB, and Event Grid. The platform supports multiple programming languages, including C#, JavaScript, Python, and Java. However, Azure Functions’ limitations include higher reported cold start latency (figures of around 3,640 ms compared to Lambda’s 39 ms have been reported, though such numbers vary considerably with runtime, hosting plan, and package size), which particularly affects performance-sensitive applications.

Organizations deeply integrated with Microsoft technologies and Azure find Azure Functions particularly valuable for serverless computing needs. The platform’s emphasis on Durable Functions for complex workflows and flexible hosting options provides advantages for specific application patterns.

Serverless Computing Best Practices and Implementation Guidance

Successful serverless computing implementation requires adherence to established best practices. Right-size function memory allocation using performance tuning tools, as excessive memory increases costs without performance benefits. Design stateless functions that don’t depend on local state or server affinity, maintaining portability across execution environments. Implement comprehensive monitoring using CloudWatch, Application Insights, or third-party tools to track function performance, error rates, and cost drivers. Optimize code execution time by eliminating unnecessary operations, using connection pooling for database access, and avoiding redundant computations.
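The advice about database access is often implemented by creating expensive resources once per execution environment and reusing them across invocations, since platforms commonly keep an environment warm for subsequent calls. The sketch below illustrates the idea with a lazily created, cached connection. `connect` is a hypothetical stand-in for a real database client, and `CONNECT_LOG` exists only to show how often a connection is opened.

```python
CONNECT_LOG = []      # records each time a connection is opened (demo only)
_connection = None    # cached for the lifetime of this execution environment


def connect():
    """Hypothetical stand-in for opening a costly database connection."""
    CONNECT_LOG.append("opened")
    return {"connected": True}


def get_connection():
    """Open the connection on first use, then reuse it on later invocations."""
    global _connection
    if _connection is None:
        _connection = connect()
    return _connection


def handler(event, context):
    conn = get_connection()
    return {"ok": conn["connected"], "connections_opened": len(CONNECT_LOG)}


if __name__ == "__main__":
    print(handler({}, None))  # first call opens the connection
    print(handler({}, None))  # second call reuses it
```

This cached handle is an optimization, not application state. The function must still work correctly when a fresh environment starts with no connection at all.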

- Manage concurrency carefully to prevent resource exhaustion and unexpected costs from uncontrolled scaling. Use provisioned concurrency for latency-sensitive functions that can’t tolerate cold start latency. Implement proper error handling, including retries with exponential backoff and dead-letter queues for failed function executions.

- Secure functions using identity and access management (IAM) policies, encrypting sensitive data, and validating inputs rigorously. Set up cost alerts and budgets to prevent surprise expenses from traffic spikes or poorly optimized functions. Decompose applications into smaller, single-responsibility functions rather than monolithic functions handling multiple concerns. Test thoroughly, including local testing with runtime simulators and comprehensive integration testing in staging environments.
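The retry guidance above can be sketched as a small helper that retries a failing call with exponentially growing, randomized delays. The helper name, its default values, and the use of "full jitter" (sleeping a random amount up to the computed delay) are choices made for this example and not a platform-provided API. The `sleep` parameter is injectable so the behavior can be tested without waiting.

```python
import random
import time


def retry_with_backoff(operation, max_attempts=5, base_delay=0.1,
                       max_delay=5.0, sleep=time.sleep):
    """Call `operation` until it succeeds or attempts run out."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except Exception:
            if attempt == max_attempts:
                raise  # give up; the caller or platform can route this to a dead-letter queue
            # Delay ceiling doubles each attempt: base, 2x base, 4x base, ... capped at max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, ceiling))


if __name__ == "__main__":
    calls = {"count": 0}

    def flaky():
        # Fails twice, then succeeds, to simulate a transient downstream error.
        calls["count"] += 1
        if calls["count"] < 3:
            raise ConnectionError("temporary failure")
        return "ok"

    print(retry_with_backoff(flaky), "after", calls["count"], "attempts")
```

Catching every `Exception` keeps the sketch short. Real code would usually retry only errors known to be transient and let permanent failures surface immediately.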


Conclusion

Serverless computing is a transformative cloud paradigm that removes infrastructure management overhead, enabling developers to focus on application logic and business value. Platforms such as AWS Lambda, Azure Functions, and Google Cloud Functions provide strong cost efficiency for variable workloads, automatic scaling without manual intervention, reduced operational complexity, and faster time-to-market for new applications.

However, serverless architecture isn’t universally optimal—applications with sustained, predictable traffic, long-running processes, strict latency requirements, or complex stateful operations may benefit more from traditional or hybrid approaches. Organizations should evaluate their specific requirements, traffic patterns, cost sensitivity, and architectural constraints before adopting serverless computing.

For event-driven applications, variable-load services, microservices architectures, and modern cloud-native development, serverless solutions offer compelling advantages that accelerate development cycles and optimize costs. By aligning serverless computing with your needs and implementing best practices around function design, monitoring, cost management, and security, organizations can leverage this paradigm to build scalable, cost-effective, and maintainable modern applications.