How Much Data Do You Need for Machine Learning?

Discover how much training data you need for machine learning. Learn the 10x rule, data requirements by model type, and strategies for limited data.

The question haunts virtually every machine learning project: “Do we have enough training data?” This fundamental concern represents one of the most critical decision points in machine learning development, yet the answer remains frustratingly elusive. Unlike cooking recipes that specify exact measurements, data requirements for machine learning models resist one-size-fits-all answers. A successful machine learning implementation for predicting customer churn might require 5,000 labeled examples, while training an image recognition system demands tens of thousands or even millions. The difference isn’t random—it reflects the intersection of problem complexity, algorithm type, data quality, and acceptable performance thresholds.

Data represents the lifeblood of modern machine learning systems. Without adequate training data, even sophisticated algorithms collapse into statistical noise, generating predictions barely better than random guessing. Yet acquiring vast datasets proves expensive, time-consuming, and sometimes practically impossible due to privacy regulations, data scarcity, or labeling costs. This paradox creates genuine tension for organizations launching machine learning initiatives: invest in expensive data collection upfront, or risk project failure due to insufficient training examples? Understanding the actual data volume requirements for your specific use case turns this dilemma from overwhelming uncertainty into informed decision-making.

This comprehensive guide moves beyond vague recommendations to provide practical frameworks for determining how much data your machine learning project actually requires. We’ll explore the widely-cited 10x rule, examine how different learning approaches demand varying dataset sizes, and investigate critical factors influencing data needs. Additionally, we’ll discuss proven strategies for succeeding with limited data through transfer learning, data augmentation, and synthetic data generation. Whether you’re developing your first machine learning model or optimizing a mature production system, these principles enable confident decisions about training data investment and resource allocation.

The 10x Rule: Starting Point for Data Estimation

The 10x Rule Fundamentals

The 10x rule represents the most widely cited heuristic for estimating training data requirements in machine learning. This guideline suggests that datasets should contain at least ten times more observations than your model has parameters or degrees of freedom. For example, if your algorithm distinguishes dog photos from cat photos using 1,000 parameters, the 10x rule recommends at least 10,000 images for training.

The mathematical logic underlying the 10x rule addresses a fundamental machine learning principle: models with many parameters require proportionally more observations to learn reliable patterns. Each parameter represents a potential “degree of freedom”—a dimension the model must learn through training data exposure. Without sufficient examples per parameter, the algorithm risks memorizing noise rather than capturing genuine underlying patterns.
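As a rough sketch, the rule can be applied by counting a model's trainable parameters and multiplying by ten. The helper names below (`dense_param_count`, `ten_x_minimum`) are hypothetical, and the parameter count assumes plain fully connected layers with one weight per input-output pair plus one bias per output unit.

```python
import math


def dense_param_count(layer_sizes):
    """Count weights and biases in a stack of fully connected layers.

    layer_sizes lists the width of each layer, input first, e.g. [20, 1].
    Each layer contributes in_features * out_features weights plus
    out_features biases.
    """
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))


def ten_x_minimum(n_params, factor=10):
    """Apply the 10x heuristic: at least `factor` examples per parameter."""
    return math.ceil(factor * n_params)


# Binary logistic regression on 20 features: 20 weights + 1 bias.
logreg_params = dense_param_count([20, 1])
# A small multilayer perceptron for 28x28 images with 10 output classes.
mlp_params = dense_param_count([784, 128, 10])

print(logreg_params, ten_x_minimum(logreg_params))  # 21 210
print(mlp_params, ten_x_minimum(mlp_params))        # 101770 1017700
```

Even this small network pushes the 10x estimate past a million examples, which hints at why the rule struggles with deep learning.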

Limitations of the 10x Rule for Modern Machine Learning

While simple and memorable, the 10x rule carries substantial limitations for contemporary machine learning work. The rule originated with traditional algorithms such as linear and logistic regression, which typically have dozens or hundreds of parameters. Modern deep learning models such as convolutional neural networks and transformers have millions or billions of parameters, so applying the 10x rule literally would call for impractically large datasets. In practice, these architectures are routinely trained on fewer examples than they have parameters, which makes the rule inadequate as a guide for neural networks.

Additionally, the 10x rule ignores problem complexity and task diversity. Large-scale natural language processing systems are trained on billions of words to capture linguistic variation, while anomaly detection tasks might function effectively with datasets containing only a few unusual examples. Classification problems distinguishing between two categories differ fundamentally from multi-class scenarios with hundreds of categories.

The 10x rule also assumes uniform data—all examples equally informative and noise-free. Real-world training datasets rarely meet this assumption. High-quality, representative data enables models to learn effectively with fewer examples, while poor-quality or biased datasets demand larger volumes to achieve equivalent performance.

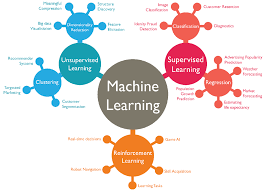

Data Requirements By Machine Learning Approach

Supervised Learning and Labeled Data Demands

Supervised learning approaches, which rely on labeled training data pairing inputs with correct outputs, typically demand more training examples than unsupervised alternatives. During supervised training, the algorithm learns mappings between input features and labeled outputs through iterative error correction. The more complex the mapping—more features, more classes to predict, more subtle distinctions—the more labeled examples the algorithm requires to learn reliable patterns.

For straightforward classification tasks like binary problems (cat versus dog), traditional algorithms might achieve acceptable results with several hundred labeled examples per class. However, multi-class classification with dozens of categories demands thousands or tens of thousands of labeled examples for robust performance. Image classification using deep learning typically requires tens of thousands of labeled images, though specific requirements depend on image complexity and category distinctiveness.

Regression problems (predicting continuous values rather than discrete categories) generally demand fewer examples than classification of equivalent complexity. A model predicting house prices from structured features might succeed with 1,000-3,000 examples, whereas an image classification task with similar conceptual complexity might require 10,000+ examples.

Unsupervised Learning with Unlabeled Data

Unsupervised learning approaches that discover patterns within unlabeled data have different data requirements than supervised learning. Since unsupervised algorithms don’t require manual labeling (the primary cost driver in machine learning), they can leverage larger volumes of readily available unlabeled data.

However, unsupervised tasks still require substantial datasets to identify meaningful patterns and structures. Clustering algorithms discovering natural groupings within data need sufficient volume and diversity to reveal genuine clusters rather than noise-driven separations. The larger and more diverse the dataset, the more robust the discovered patterns become.

The advantage of unsupervised learning lies in availability rather than quantity reduction—you can more easily accumulate 100,000 unlabeled examples than 100,000 carefully annotated, labeled examples. This enables unsupervised approaches to leverage massive datasets while remaining economically feasible.

Critical Factors Influencing Data Requirements

Model Complexity and Architecture

The complexity of your chosen algorithm fundamentally drives data requirements. Simple models like logistic regression with modest parameterization converge quickly and achieve reasonable results with limited training data—potentially hundreds of examples. More complex traditional algorithms like random forests demand more training data to avoid overfitting, but remain orders of magnitude more efficient than deep learning.

Deep neural networks with hundreds of layers and millions of weights represent the most data-hungry machine learning architectures. Convolutional neural networks (CNNs) for computer vision typically require tens of thousands of examples. Large transformer-based natural language processing models are pre-trained on billions of words to capture linguistic complexity adequately.

The general pattern is consistent: more complex models need more data. Adding hidden layers, expanding layer width, or increasing parameter count all tend to demand larger datasets to train effectively without overfitting.

Problem Complexity and Feature Space

Beyond model architecture, the inherent complexity of your prediction task influences dataset sizing. Binary classification (two categories) requires less training data than multi-class classification with hundreds of categories. The more potential output classes, the more training examples needed to represent each class adequately.

Similarly, feature complexity and dimensionality directly impact data needs. Problems with a few input features require less training data than high-dimensional problems with hundreds of features. Image and video data represent high-dimensional inputs requiring more training examples than structured data with dozens of features.

Environmental variability further increases data requirements. Machine learning models for outdoor object detection need examples capturing different lighting conditions, weather, seasons, and camera angles. Controlled environments with consistent conditions enable smaller datasets to achieve equivalent performance.

Data Quality and Labeling Accuracy

High-quality training data dramatically reduces quantity requirements. Datasets featuring accurate labels, representative coverage of real-world scenarios, and minimal noise enable models to learn efficiently. Conversely, poor-quality training data with mislabeled examples, inconsistent definitions, or narrow representation demands substantially larger volumes to achieve equivalent performance.

The cost of label errors compounds across training data. A dataset with 10% labeling errors loses more than 10% of its useful signal: during training, the algorithm learns from both correct and incorrect labels, so mislabeled examples not only contribute nothing but actively pull the model toward wrong patterns, reducing overall learning efficiency.
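To see how label errors affect a particular dataset, one option is to inject controlled noise and measure the damage. The NumPy sketch below flips a chosen fraction of labels to a different, randomly chosen class. The function name `flip_labels` is hypothetical, and labels are assumed to be integers from 0 to `n_classes - 1`.

```python
import numpy as np


def flip_labels(y, rate, n_classes, seed=0):
    """Return a copy of y where a fraction `rate` of labels is corrupted.

    Each selected label is replaced by a different class, so exactly
    round(rate * len(y)) labels end up wrong.
    """
    rng = np.random.default_rng(seed)
    y_noisy = np.array(y, copy=True)
    n_flip = int(round(rate * len(y_noisy)))
    idx = rng.choice(len(y_noisy), size=n_flip, replace=False)
    # An offset in [1, n_classes - 1] taken modulo n_classes guarantees
    # that the new label differs from the original one.
    offsets = rng.integers(1, n_classes, size=n_flip)
    y_noisy[idx] = (y_noisy[idx] + offsets) % n_classes
    return y_noisy


y_clean = np.repeat(np.arange(4), 250)   # 1,000 labels across 4 classes
y_noisy = flip_labels(y_clean, rate=0.10, n_classes=4)
print((y_noisy != y_clean).mean())       # 0.1
```

Training the same model on `y_clean` and `y_noisy` and comparing held-out scores shows what a given error rate costs on your own data.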

Data preprocessing and cleaning substantially influence the effective dataset size. A well-cleaned, properly normalized dataset enables models to learn patterns from smaller samples. Conversely, noisy, inconsistently formatted data requires larger volumes to overcome quality deficiencies.

Available Resources and Project Constraints

Practical constraints frequently override theoretical optima. If acquiring additional training data proves prohibitively expensive, project budgets determine practical maximum dataset sizes. Organizations must balance data quality investments against quantity, often choosing 1,000 carefully curated examples over 100,000 hastily assembled ones.

Timeline constraints similarly shape data requirement decisions. Aggressive project schedules may necessitate simpler algorithms or transfer learning rather than waiting for a massive dataset to be assembled. Time-to-value considerations sometimes favor starting with a smaller dataset and expanding it iteratively as the business case justifies continued investment.

Data Requirements Across Common Machine Learning Problems

Time Series Forecasting Requirements

Time series problems present unique data requirements differing from static classification or regression. The fundamental rule: training data must exceed the longest seasonality pattern in your data. If predicting daily sales with annual seasonality, your dataset requires at least 365 observations for one complete annual cycle plus additional examples for testing.

A practical formula suggests minimum dataset size equals: (Longest Seasonality Period) × 1.5-2 to provide adequate training and test set separation. For hourly data with weekly patterns, you’d need approximately 168×1.5 = 252 observations minimum, ideally 350-500 examples for reliable model development.
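The heuristic is simple enough to encode directly. In this sketch the helper names are hypothetical, the multiplier defaults to the low end of the 1.5-2 range, and the series is assumed to be ordered oldest to newest so that the split never leaks future values into training.

```python
import math


def min_series_length(season_length, multiplier=1.5):
    """Minimum observations under the seasonality heuristic: the longest
    seasonal period times a safety multiplier (typically 1.5 to 2)."""
    return math.ceil(season_length * multiplier)


def seasonal_split(series, season_length, multiplier=1.5):
    """Chronological split: the first full seasonal cycle is used for
    training and everything after it for testing. No shuffling, so the
    test set always lies in the model's future."""
    if len(series) < min_series_length(season_length, multiplier):
        raise ValueError("series is shorter than the seasonality heuristic allows")
    return series[:season_length], series[season_length:]


HOURS_PER_WEEK = 24 * 7  # 168 hourly observations per weekly cycle

print(min_series_length(HOURS_PER_WEEK))       # 252
print(min_series_length(HOURS_PER_WEEK, 2.0))  # 336
print(min_series_length(365))                  # 548 daily observations

train, test = seasonal_split(list(range(252)), HOURS_PER_WEEK)
print(len(train), len(test))                   # 168 84
```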

Image Classification Deep Learning Models

Image classification using deep learning demands substantial datasets due to neural network parameter counts and high-dimensional image data. Simple computer vision tasks—classifying apples versus oranges in controlled lighting—might succeed with 500-2,000 images per category. More complex scenarios—identifying hundreds of object types across varying conditions—require tens of thousands or hundreds of thousands of images.

A common rule of thumb among practitioners holds that reaching 95%+ accuracy in image classification typically takes 10,000 to 100,000 or more examples, depending on the number of categories, task complexity, and acceptable error rates.

Natural Language Processing and Text Analysis

NLP models exhibit voracious data appetites reflecting language complexity. Text classification tasks identifying spam emails or sentiment might succeed with 1,000-5,000 labeled examples for binary classification, or 5,000-10,000 for multi-class problems.

Conversely, large language models require training text on the scale of billions of words. BERT, a foundational NLP model, was pre-trained on roughly 3.3 billion words drawn from BooksCorpus and English Wikipedia. GPT models were trained on comparably massive or larger corpora, as this scale proves necessary for capturing linguistic nuance and general knowledge.

Strategies for Succeeding With Limited Data

Transfer Learning and Pre-trained Models

Transfer learning enables effective machine learning with smaller datasets by leveraging models trained on massive data beforehand. Instead of training neural networks from scratch, practitioners fine-tune pre-trained models like ResNet for images or BERT for text using their specific labeled dataset.

This approach dramatically reduces data requirements. An image classification task normally requiring 50,000 examples might achieve equivalent results by fine-tuning a pre-trained model with 5,000 examples. The pre-trained model already learned fundamental features—edge detection, shape recognition—applicable across domains. Your training data focuses on task-specific refinement rather than foundational pattern learning.

Data Augmentation Techniques

Data augmentation synthetically increases the training dataset size through transformations and variations of existing examples. For images, this includes rotation, cropping, brightness adjustment, and flipping, each creating a new example while preserving its label. For text, synonym replacement, sentence shuffling, or back-translation generate variations.

Effective data augmentation can reduce actual training data requirements by 30-50% through intelligently expanded datasets. A dataset of 5,000 images might become effectively 10,000-15,000 through augmentation, potentially sufficient for problems requiring 10,000 examples.
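The image transformations listed above can be sketched with NumPy alone. This version assumes square float images of shape (H, W, C) scaled to [0, 1] (so a 90-degree rotation keeps the shape), uses a nearest-neighbour resize after cropping, and the function names and parameter values are hypothetical. Note that the raw count overstates the effective dataset size, since variants of one image are highly correlated.

```python
import numpy as np


def random_crop_resize(image, rng, crop_frac=0.9):
    """Crop a random window covering crop_frac of each side, then resize it
    back to the original height and width with nearest-neighbour sampling."""
    h, w = image.shape[:2]
    ch, cw = max(1, int(h * crop_frac)), max(1, int(w * crop_frac))
    top = rng.integers(0, h - ch + 1)
    left = rng.integers(0, w - cw + 1)
    crop = image[top:top + ch, left:left + cw]
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return crop[rows][:, cols]


def augment(image, rng):
    """Return label-preserving variants of one (H, W, C) image in [0, 1]."""
    shift = rng.uniform(-0.2, 0.2)
    return [
        np.fliplr(image),                  # horizontal flip
        np.rot90(image),                   # 90-degree rotation (square images)
        random_crop_resize(image, rng),    # random crop, resized back
        np.clip(image + shift, 0.0, 1.0),  # brightness adjustment
    ]


def augment_dataset(images, labels, seed=0):
    """Expand a dataset: every variant inherits its source image's label."""
    rng = np.random.default_rng(seed)
    out_images, out_labels = [], []
    for image, label in zip(images, labels):
        for variant in [image, *augment(image, rng)]:
            out_images.append(variant)
            out_labels.append(label)
    return np.stack(out_images), np.array(out_labels)


images = np.random.default_rng(1).random((10, 32, 32, 3))
labels = np.arange(10) % 2
aug_x, aug_y = augment_dataset(images, labels)
print(aug_x.shape, aug_y.shape)  # (50, 32, 32, 3) (50,)
```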

Synthetic Data Generation

When real-world training data is scarce or expensive, synthetic data generation creates artificial examples matching the statistical properties of real data. Self-driving systems, for example, are often trained partly on simulated scenes, some rendered with video game engines, before graduating to real-world data. Medical imaging models can be trained on synthetically generated images when actual patient data is limited by privacy regulations.

Advanced techniques like generative adversarial networks (GANs) create highly realistic synthetic examples suitable for training. This approach proves particularly valuable when data collection involves privacy concerns, high costs, or safety risks.
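A GAN is too involved for a short example, so the sketch below swaps in a much simpler technique: it fits one multivariate Gaussian per class to numeric tabular data and samples new labeled rows sharing each class's mean and covariance. It captures only first- and second-order statistics, so treat it as an illustration of "matching statistical properties" rather than a substitute for a GAN; the function names and toy data are hypothetical.

```python
import numpy as np


def gaussian_synthetic(real, n_samples, rng):
    """Sample rows from a multivariate normal fitted to `real`
    (an (n_rows, n_features) array of numeric features)."""
    mean = real.mean(axis=0)
    cov = np.cov(real, rowvar=False)
    return rng.multivariate_normal(mean, cov, size=n_samples)


def synthetic_per_class(X, y, n_per_class, seed=0):
    """Fit one Gaussian per class so every synthetic row keeps a valid label."""
    rng = np.random.default_rng(seed)
    xs, ys = [], []
    for cls in np.unique(y):
        xs.append(gaussian_synthetic(X[y == cls], n_per_class, rng))
        ys.append(np.full(n_per_class, cls))
    return np.vstack(xs), np.concatenate(ys)


# Toy "real" data: two classes with different feature means.
rng = np.random.default_rng(42)
X_real = np.vstack([rng.normal(0.0, 1.0, size=(100, 3)),
                    rng.normal(3.0, 1.0, size=(100, 3))])
y_real = np.array([0] * 100 + [1] * 100)

X_syn, y_syn = synthetic_per_class(X_real, y_real, n_per_class=1000)
print(X_syn.shape, np.bincount(y_syn))
print(X_real[y_real == 1].mean(axis=0), X_syn[y_syn == 1].mean(axis=0))
```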

Determining Your Specific Data Requirements

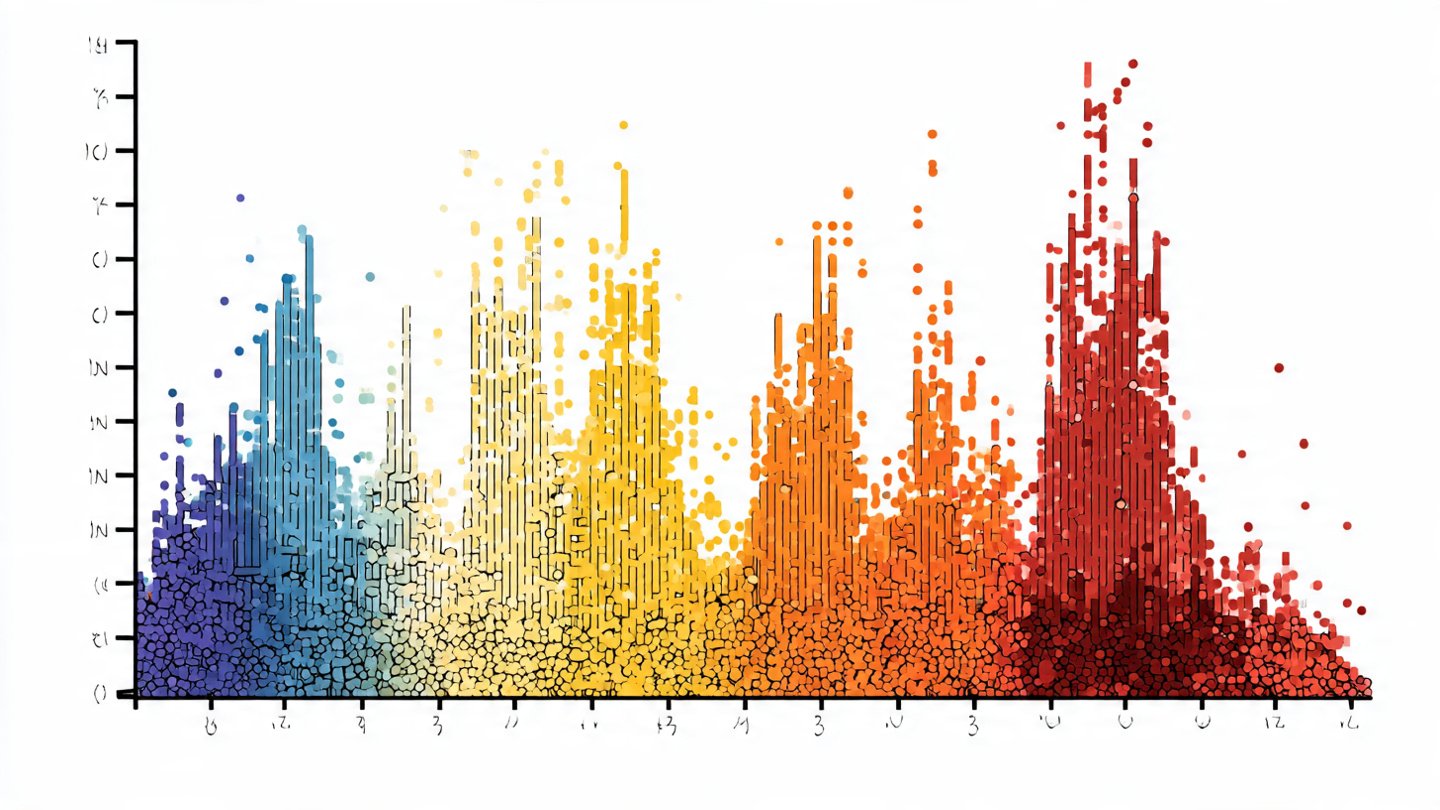

Conducting Iterative Testing and Learning Curves

Rather than attempting precise calculation, many practitioners determine data requirements empirically through learning curves—plotting model performance against training set size. You gradually increase data volume while monitoring performance improvement, identifying the point where additional data yields diminishing returns.

This approach reveals your model’s specific data appetite. Some problems plateau at 2,000 examples, while others show continuous improvement through 100,000+. Empirical testing provides more reliable guidance than theoretical heuristics.
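A learning curve takes only a few lines with scikit-learn's `learning_curve`. The sketch below uses the bundled digits dataset and a scaled logistic regression as stand-ins for your own data and model; the `find_plateau` helper and its one-percentage-point threshold are hypothetical choices for deciding where extra data stops paying off.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import learning_curve
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def find_plateau(train_sizes, mean_scores, min_gain=0.01):
    """Return the first training size after which the next step improves the
    mean validation score by less than `min_gain`, or None if it never does."""
    gains = np.diff(mean_scores)
    for size, gain in zip(train_sizes[:-1], gains):
        if gain < min_gain:
            return int(size)
    return None


X, y = load_digits(return_X_y=True)
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# Train on growing fractions of each CV training split; score on held-out folds.
sizes, train_scores, val_scores = learning_curve(
    model, X, y, train_sizes=np.linspace(0.1, 1.0, 6), cv=5
)
mean_val = val_scores.mean(axis=1)
for n, score in zip(sizes, mean_val):
    print(f"{n:5d} examples -> validation accuracy {score:.3f}")
print("Plateau near", find_plateau(sizes, mean_val), "examples")
```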

Applying Statistical Power Analysis

Statistical power analysis offers a principled framework for sample size estimation. Specify your desired performance (accuracy, precision, recall), acceptable error probability, and data variability characteristics. Statistical formulas then calculate the minimum samples required.

While more rigorous than arbitrary heuristics, power analysis requires familiarity with statistical concepts and subjective assumptions about acceptable error rates and expected effect sizes.
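One concrete case where power analysis applies directly is sizing an evaluation set: how many held-out examples are needed to show that a model's accuracy p1 genuinely differs from a baseline p0? The sketch uses the standard normal-approximation sample size for a one-sample test of a proportion, n = ((z(1-α/2)·√(p0(1-p0)) + z(power)·√(p1(1-p1))) / (p1 - p0))². It sizes the test set, not the training set, and the function name and default α and power are assumptions.

```python
import math
from statistics import NormalDist


def samples_to_beat_baseline(p0, p1, alpha=0.05, power=0.80):
    """Held-out examples needed to detect accuracy p1 versus baseline p0.

    Normal-approximation sample size for a two-sided one-sample test of a
    proportion at significance level alpha with the given power.
    """
    z = NormalDist().inv_cdf
    z_alpha = z(1 - alpha / 2)
    z_beta = z(power)
    numerator = (z_alpha * math.sqrt(p0 * (1 - p0))
                 + z_beta * math.sqrt(p1 * (1 - p1)))
    return math.ceil((numerator / (p1 - p0)) ** 2)


# Test examples needed to confirm 85% accuracy against an 80% baseline.
print(samples_to_beat_baseline(0.80, 0.85))
```

Smaller expected improvements drive the required sample size up quadratically, which is why detecting a one-point accuracy gain takes far more evaluation data than detecting a ten-point gain.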

Consulting Domain Experts and Historical Data

Domain expertise proves invaluable for realistic data requirement estimation. Experienced practitioners in your problem domain often possess intuition about required dataset sizes developed through previous projects. Academic literature and published research frequently report dataset sizes and performance for comparable problems, providing empirical benchmarks.

Warning Signs of Insufficient Data

Overfitting and Generalization Failure

Overfitting, where a model memorizes training examples rather than learning generalizable patterns, often indicates insufficient training data relative to model complexity. Training accuracy becomes extremely high while test set performance plummets: the model has learned noise rather than signal.

Overfitting manifests as dramatically different performance on training data versus held-out test data. If your model achieves 99% accuracy on training data but 60% on test data, insufficient training examples likely caused memorization of specific examples rather than learning general patterns.
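A quick way to check for this symptom is to compare training and held-out accuracy directly. This scikit-learn sketch deliberately uses a small, noisy synthetic dataset and an unpruned decision tree so that memorization is easy to see; `generalization_gap` is a hypothetical helper, not a library function.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier


def generalization_gap(model, X_train, y_train, X_test, y_test):
    """Fit `model` and return (train accuracy, test accuracy, gap)."""
    model.fit(X_train, y_train)
    train_acc = model.score(X_train, y_train)
    test_acc = model.score(X_test, y_test)
    return train_acc, test_acc, train_acc - test_acc


# Small, noisy dataset: flip_y randomly reassigns 20% of the labels.
X, y = make_classification(n_samples=200, n_features=20, flip_y=0.2, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# An unconstrained tree can memorize every training example.
train_acc, test_acc, gap = generalization_gap(
    DecisionTreeClassifier(random_state=0), X_train, y_train, X_test, y_test
)
print(f"train={train_acc:.2f} test={test_acc:.2f} gap={gap:.2f}")
```

Rerunning with a larger `n_samples`, or constraining the tree with `max_depth`, typically narrows the gap.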

Poor Minority Class Representation

Classification problems with imbalanced classes—one category vastly outnumbering others—require special handling. If 99% of examples represent normal behavior and 1% represent fraud, your model might classify everything as normal and achieve 99% accuracy while missing fraud entirely.

Insufficient examples from minority classes prevent models from learning distinguishing characteristics. Addressing this requires either data augmentation of minority classes, class weighting during training, or collecting additional minority examples.
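The fraud example can be reproduced in a few lines of NumPy: a "model" that always predicts the majority class scores 99% accuracy yet catches no fraud. The class-weighting helper below implements n_samples / (n_classes × class_count), the same formula scikit-learn documents for `class_weight="balanced"`; the helper names themselves are hypothetical.

```python
import numpy as np


def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * class_count)."""
    classes, counts = np.unique(y, return_counts=True)
    weights = len(y) / (len(classes) * counts)
    return dict(zip(classes.tolist(), weights.tolist()))


def recall(y_true, y_pred, positive=1):
    """Fraction of actual positives that the model caught."""
    positives = y_true == positive
    return float((y_pred[positives] == positive).mean())


# 990 normal transactions (0) and 10 fraudulent ones (1).
y = np.array([0] * 990 + [1] * 10)
always_normal = np.zeros_like(y)  # predicts "normal" every time

print("accuracy:", (always_normal == y).mean())   # 0.99
print("fraud recall:", recall(y, always_normal))  # 0.0
print(balanced_class_weights(y))
```

With these weights, each fraud example counts roughly 100 times as much as a normal one during training, which offsets the imbalance without collecting new data.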

High Variance in Cross-Validation Results

When cross-validation scores fluctuate dramatically (for example, 80% accuracy on fold one, 55% on fold two, 75% on fold three), the instability often indicates insufficient training data for stable model learning. Larger, more representative datasets stabilize learning and produce consistent performance across different data splits.
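Measuring that spread is straightforward with scikit-learn's `cross_val_score`. The sketch compares a deliberately data-starved 60-example subset against the full synthetic dataset; the `cv_stability` helper and its 0.05 standard-deviation threshold are arbitrary assumptions to adapt to your own tolerance.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import StratifiedKFold, cross_val_score, train_test_split
from sklearn.tree import DecisionTreeClassifier


def cv_stability(model, X, y, n_splits=5, max_std=0.05, seed=0):
    """Cross-validate `model`; return fold scores, their standard deviation,
    and whether that spread is within `max_std`."""
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    scores = cross_val_score(model, X, y, cv=cv)
    spread = float(scores.std())
    return scores, spread, spread <= max_std


X_full, y_full = make_classification(n_samples=2000, n_features=20, random_state=0)
# A stratified 60-example subset simulates a data-starved project.
X_small, _, y_small, _ = train_test_split(
    X_full, y_full, train_size=60, stratify=y_full, random_state=0
)

for name, (X, y) in {"60 examples": (X_small, y_small),
                     "2000 examples": (X_full, y_full)}.items():
    scores, spread, stable = cv_stability(DecisionTreeClassifier(random_state=0), X, y)
    print(f"{name}: folds={np.round(scores, 2)} std={spread:.3f} stable={stable}")
```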


Conclusion

Determining how much data your machine learning project requires resists simple formulas, instead demanding thoughtful consideration of problem complexity, algorithm choice, data quality, and practical constraints. The widely-cited 10x rule provides a useful starting point for traditional machine learning, yet proves inadequate for modern deep learning, and data needs vary substantially across problem domains. Supervised learning typically demands more labeled examples than unsupervised approaches, while image classification and natural language processing prove more data-intensive than structured data problems.

Rather than pursuing perfectly-sized datasets upfront, embrace iterative approaches: use learning curves to identify performance plateaus, leverage transfer learning and pre-trained models to minimize requirements, and employ data augmentation and synthetic data to supplement limited training examples. No fixed answer exists for every scenario, but armed with an understanding of the influencing factors, empirical testing methodologies, and proven strategies for limited data, you’ll make confident data requirement decisions that enable successful machine learning implementation regardless of your starting position.