Supervised vs Unsupervised Machine Learning: A Complete Comparison

Discover the key differences between supervised and unsupervised machine learning. A complete guide with algorithms, applications, and examples.

The landscape of machine learning has revolutionized how businesses process data and make intelligent decisions. At the core of this technological evolution lie two fundamental approaches: supervised learning and unsupervised learning. Understanding the distinctions between these methodologies is crucial for data scientists, AI engineers, and business leaders who want to leverage artificial intelligence effectively.

Supervised machine learning operates like a student learning with a teacher’s guidance, where algorithms are trained on labeled datasets with predefined outcomes. In contrast, unsupervised machine learning functions independently, discovering hidden patterns and structures within unlabeled data without explicit instructions. These two paradigms represent fundamentally different approaches to data analysis and predictive modeling.

The choice between supervised and unsupervised learning significantly impacts project outcomes, computational resources, and business results. The primary distinction centers on whether labeled data is used to predict outcomes or not, but the differences extend far beyond this basic classification. Each approach offers unique advantages for specific use cases, from classification tasks and regression analysis to clustering algorithms and anomaly detection.

In today’s data-driven environment, organizations generate massive volumes of information daily. The ability to extract meaningful insights from this data determines competitive advantage. Machine learning algorithms have become indispensable tools for automating decision-making processes, identifying customer behavior patterns, optimizing operations, and predicting future trends. However, selecting the wrong approach can lead to wasted resources, inaccurate predictions, and missed opportunities.

This comprehensive guide explores the complete comparison between supervised and unsupervised learning, examining their definitions, methodologies, algorithms, real-world applications, advantages, limitations, and implementation considerations. Whether you’re a beginner exploring artificial intelligence concepts or an experienced practitioner seeking to optimize your machine learning models, this article provides actionable insights to inform your decision-making process. We’ll analyze when to use each approach, how they complement each other, and what factors should guide your selection in different scenarios.

By the end of this article, you’ll possess a thorough understanding of both learning paradigms, enabling you to choose the most appropriate methodology for your specific data science projects and organizational objectives.

What is Supervised Machine Learning

Supervised learning represents a machine learning approach where algorithms learn from labeled training data to make predictions or classifications. This method excels in predictive tasks with known outcomes, making it the preferred choice for scenarios where historical data with clear labels exists.

The fundamental concept behind supervised machine learning involves training a model using input-output pairs. Each training example consists of an input feature set and a corresponding correct output label. The algorithm analyzes these relationships, identifying patterns that connect inputs to outputs. Once trained, the model can predict outputs for new, unseen data based on the learned patterns.

How Supervised Learning Works

The supervised learning process follows a structured workflow. First, data scientists collect and prepare a labeled dataset where each data point has a known outcome. These labels serve as ground truth for training, requiring significant upfront investment in data annotation and quality control. The dataset is then divided into training, validation, and test sets.

During training, the algorithm receives input features and uses the corresponding labels to adjust its internal parameters. Through iterative processing, the model minimizes prediction errors by optimizing a loss function. This optimization process continues until the model achieves acceptable accuracy on the validation set.
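The training loop described above can be sketched in a few lines. This is a minimal illustration, assuming a one-feature linear model, mean squared error as the loss function, and plain gradient descent as the optimizer. The function names, learning rate, epoch count, and toy data are all hypothetical choices made for the example.

```python
def train_linear_model(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors under the current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mean_squared_error(xs, ys, w, b):
    """Average squared difference between predictions and labels."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy labeled data generated from y = 2x + 1 (illustrative values only).
train_x = [0.0, 1.0, 2.0, 3.0, 4.0]
train_y = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = train_linear_model(train_x, train_y)
loss = mean_squared_error(train_x, train_y, w, b)
```

On this toy data the learned parameters approach w = 2 and b = 1. A real project would monitor the loss on a separate validation set rather than on the training data.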

Model evaluation occurs using the test set, which contains labeled data that the algorithm hasn’t seen during training. Performance metrics such as accuracy, precision, recall, F1-score, and mean squared error determine the model’s effectiveness. If performance is unsatisfactory, data scientists adjust hyperparameters, engineer new features, or try different algorithms.
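To make the classification metrics concrete, here is a small sketch that computes accuracy, precision, recall, and F1-score from true and predicted labels. The function name and the example label lists are assumptions for illustration, and the sketch covers only the binary case.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    # Guard against division by zero when a class is never predicted or present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Hypothetical test-set labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(y_true, y_pred)
```

In this example the model makes one false positive and one false negative out of eight predictions, so all four metrics come out to 0.75.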

Types of Supervised Learning Algorithms

Supervised learning algorithms fall into two primary categories: classification and regression.

Classification algorithms predict discrete categorical outputs. Popular techniques include:

- Decision Trees: Hierarchical models that split data based on feature values

- Random Forests: Ensemble methods combining multiple decision trees

- Support Vector Machines (SVM): Algorithms that find optimal boundaries between classes

- Logistic Regression: Statistical models for binary classification, extendable to multiple classes

- Neural Networks: Deep learning architectures for complex pattern recognition

- K-Nearest Neighbors (KNN): Instance-based learning using proximity measures

- Naive Bayes: Probabilistic classifiers based on Bayes’ theorem
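As an illustration of one classifier from the list above, the following is a minimal K-Nearest Neighbors sketch. It assumes Euclidean distance and a simple majority vote. The function name, the toy points, and the labels are hypothetical.

```python
import math
from collections import Counter


def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote of its k nearest neighbors."""
    # Pair every training point's distance to the query with its label.
    distances = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]


# Toy labeled dataset with two well-separated groups (illustrative values).
points = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1),
          (5.0, 5.0), (5.2, 4.9), (4.8, 5.1)]
labels = ["low", "low", "low", "high", "high", "high"]

prediction_a = knn_predict(points, labels, (1.1, 1.0))
prediction_b = knn_predict(points, labels, (5.1, 5.0))
```

KNN has no training phase beyond storing the labeled examples, which is why it is described as instance-based.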

Regression algorithms predict continuous numerical values. Common approaches include:

- Linear Regression: Models linear relationships between variables

- Polynomial Regression: Captures non-linear patterns using polynomial features

- Ridge and Lasso Regression: Regularized versions preventing overfitting

- Gradient Boosting: Ensemble techniques like XGBoost and LightGBM

- Neural Networks: Deep learning for complex numerical predictions

What is Unsupervised Machine Learning

Unsupervised learning represents a machine learning paradigm where algorithms discover patterns and structures in unlabeled data without predefined outcomes. This approach works differently because there is no teacher involved to guide the machine, making it particularly valuable for exploratory data analysis and discovering hidden insights.

Unlike supervised learning, which requires extensive labeling efforts, unsupervised machine learning processes raw data autonomously. The algorithm identifies inherent structures, relationships, and groupings based on statistical properties and feature similarities. This independence makes unsupervised learning ideal for scenarios where obtaining labeled data is expensive, impractical, or impossible.

How Unsupervised Learning Works

The unsupervised learning process begins with collecting unlabeled data containing various features but no predetermined categories or target values. This method is ideal for discovering relationships and trends in raw data, particularly when the underlying structure is unknown.

The algorithm analyzes the data’s intrinsic characteristics, calculating distances, similarities, and statistical distributions. Through iterative processing, it identifies natural groupings, reduces dimensionality, or detects outliers. Unlike supervised models, there’s no explicit correctness measure since no ground truth labels exist.

Model validation in unsupervised learning relies on internal metrics like silhouette scores, within-cluster sum of squares, or reconstruction error. Domain expertise often plays a crucial role in interpreting results and determining whether discovered patterns are meaningful and actionable.
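One of the internal metrics mentioned above, the silhouette score, can be computed directly from the data and the cluster assignments. The sketch below assumes Euclidean distance and at least two clusters. The function name and sample points are illustrative.

```python
import math


def silhouette_score(points, labels):
    """Mean silhouette coefficient; assumes at least two distinct clusters."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)

    scores = []
    for point, label in zip(points, labels):
        own = clusters[label]
        if len(own) == 1:
            scores.append(0.0)  # common convention for singleton clusters
            continue
        # a: mean distance to the other members of the same cluster
        # (the point's zero distance to itself adds nothing to the sum).
        a = sum(math.dist(point, other) for other in own) / (len(own) - 1)
        # b: smallest mean distance to the members of any other cluster.
        b = min(
            sum(math.dist(point, other) for other in members) / len(members)
            for other_label, members in clusters.items()
            if other_label != label
        )
        denom = max(a, b)
        scores.append((b - a) / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Two tight, well-separated groups (illustrative values).
sample = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
good_score = silhouette_score(sample, [0, 0, 0, 1, 1, 1])
bad_score = silhouette_score(sample, [0, 1, 0, 1, 0, 1])
```

Scores close to 1 indicate compact, well-separated clusters. An assignment that mixes the two groups scores much lower, which gives a way to compare clusterings without ground truth labels.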

Types of Unsupervised Learning Algorithms

Unsupervised learning algorithms encompass several categories, each serving distinct purposes:

Clustering algorithms group similar data points together:

- K-Means Clustering: Partitions data into K distinct clusters

- Hierarchical Clustering: Creates nested cluster structures

- DBSCAN: Density-based clustering identifying arbitrary-shaped clusters

- Gaussian Mixture Models: Probabilistic clustering using statistical distributions

- Mean Shift: Non-parametric clustering without specifying cluster numbers
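To show how a clustering algorithm from this list proceeds, here is a bare-bones K-Means sketch. It assumes Euclidean distance and takes the starting centroids as an argument so the result is deterministic. Practical implementations usually pick starting centroids randomly or with a seeding heuristic. All names and data are hypothetical.

```python
import math


def k_means(points, initial_centroids, max_iterations=100):
    """Alternate assignment and update steps until assignments stop changing."""
    centroids = [tuple(c) for c in initial_centroids]
    assignments = []
    for _ in range(max_iterations):
        # Assignment step: attach each point to its nearest centroid.
        new_assignments = [
            min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            for p in points
        ]
        if new_assignments == assignments:
            break  # converged
        assignments = new_assignments
        # Update step: move each centroid to the mean of its members.
        for i in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == i]
            if members:  # keep the old centroid if a cluster is empty
                centroids[i] = tuple(
                    sum(dim) / len(members) for dim in zip(*members)
                )
    return centroids, assignments


# Unlabeled toy data with two visible groups (illustrative values).
data = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5),
        (8.0, 8.0), (9.0, 9.5), (8.5, 9.0)]
centroids, assignments = k_means(data, initial_centroids=[data[0], data[3]])
```

No labels are supplied at any point. The grouping emerges purely from distances between the points.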

Dimensionality reduction techniques simplify high-dimensional data:

- Principal Component Analysis (PCA): Linear transformation preserving maximum variance

- t-SNE: Non-linear technique for visualization

- Autoencoders: Neural networks learning compressed representations

- Independent Component Analysis (ICA): Separates multivariate signals

Association rule learning discovers relationships between variables:

- Apriori Algorithm: Identifies frequent itemsets in transactional data

- FP-Growth: Efficient pattern mining without candidate generation

Anomaly detection identifies unusual patterns:

- Isolation Forest: Tree-based outlier detection

- One-Class SVM: Boundary-based anomaly identification

- Local Outlier Factor: Density-based anomaly detection

Key Differences Between Supervised and Unsupervised Learning

Understanding the fundamental distinctions between supervised and unsupervised learning is essential for selecting the appropriate approach for specific data science projects. These differences span multiple dimensions, including data requirements, objectives, complexity, and applications.

Data Requirements

Supervised learning needs labeled datasets, while unsupervised learning works with raw data. This represents the most significant practical difference. Labeled data requires human annotation, domain expertise, and quality assurance processes, making it expensive and time-consuming to produce. Supervised machine learning projects often allocate substantial resources to data labeling before model development begins.

Unsupervised learning eliminates this barrier, processing unlabeled information directly. This advantage becomes critical when dealing with massive datasets where manual labeling is prohibitively expensive or when exploring domains where ground truth is subjective or unknown.

Learning Objectives

Supervised learning focuses on prediction accuracy, training models to replicate known input-output relationships. The goal is to create systems that generalize well to new data, making accurate predictions or classifications. Performance is measurable against actual labels, providing clear success metrics.

Unsupervised learning pursues discovery rather than prediction. Objectives include identifying natural groupings, reducing complexity, finding associations, or detecting anomalies. Success is often evaluated subjectively based on whether discovered patterns provide actionable insights or business value.

Complexity and Interpretability

Supervised models, particularly simpler ones such as linear models and decision trees, generally offer greater interpretability. The relationship between inputs and outputs is explicit, and model decisions can be traced back to specific features and learned parameters. Techniques like feature importance analysis, SHAP values, and decision tree visualization enhance transparency.

Unsupervised algorithms present interpretation challenges. Without predefined categories, understanding what clusters represent or why patterns emerge requires domain knowledge. Results may be mathematically sound but semantically ambiguous, requiring human judgment to assess relevance and meaning.

Computational Considerations

For simple models on moderately sized datasets, supervised training is often computationally cheap. Deep learning approaches to supervised tasks, however, can demand significant resources. Training time depends on dataset size, model complexity, and optimization requirements.

Unsupervised learning can be computationally intensive, particularly for large-scale clustering or dimensionality reduction. Algorithms must process entire datasets to identify structures, often requiring multiple iterations. However, once trained, unsupervised models like autoencoders can efficiently process new data.

Accuracy and Performance

Supervised learning is best for tasks where you have labeled data and need to make predictions or classifications. When properly trained with representative data, supervised models achieve high accuracy on specific tasks. Performance metrics provide a quantitative assessment of prediction quality.

Unsupervised learning lacks direct accuracy measures since there’s no ground truth for comparison. Evaluation relies on internal consistency metrics and domain expertise. Results may vary based on algorithm parameters and initialization, requiring careful validation.

Applications of Supervised Learning

Supervised machine learning powers numerous real-world applications across industries, leveraging labeled data to automate decision-making and prediction tasks. These applications demonstrate the practical value of supervised algorithms in solving business challenges and enhancing operational efficiency.

Image and Object Recognition

Computer vision applications extensively use supervised learning for identifying and classifying visual content. Training datasets with labeled images enable models to recognize faces, detect objects, classify scenes, and interpret complex visual information. Applications include facial recognition security systems, autonomous vehicle perception, medical image diagnosis, and quality control in manufacturing.

Convolutional Neural Networks (CNNs) have revolutionized image classification, achieving human-level or superior performance on various benchmarks. Transfer learning allows practitioners to leverage pre-trained models, reducing the labeled data requirements for specific applications.

Natural Language Processing

Text classification, sentiment analysis, named entity recognition, and language translation rely heavily on supervised learning. Organizations analyze customer reviews, categorize support tickets, extract information from documents, and automate content moderation using labeled text datasets.

Modern transformer architectures like BERT and GPT use supervised fine-tuning to adapt pre-trained models for specific NLP tasks. These approaches combine the benefits of unsupervised pre-training on massive text corpora with supervised learning on task-specific labeled data.

Fraud Detection and Credit Scoring

Supervised learning models can be trained on historical transaction data labeled as “fraudulent” or “legitimate”. Financial institutions use classification algorithms to identify suspicious transactions, detect credit card fraud, and assess loan default risks in real time.

Features like transaction amount, location, time, and user behavior patterns feed into models such as decision trees, support vector machines, and neural networks. These systems continuously learn from new labeled data, adapting to evolving fraud patterns.

Medical Diagnosis and Healthcare

Supervised learning assists healthcare professionals in disease diagnosis, treatment recommendation, and patient outcome prediction. Models trained on medical imaging data identify tumors, detect diabetic retinopathy, and analyze X-rays with remarkable accuracy.

Clinical decision support systems use patient records, symptoms, and test results to predict disease progression, recommend treatments, and identify at-risk populations. These applications improve diagnostic accuracy while reducing healthcare costs.

Recommendation Systems

Recommendation engines, including many collaborative filtering and content-based systems, often use supervised learning to predict user preferences. Streaming services recommend movies, e-commerce platforms suggest products, and social media platforms personalize content feeds based on historical user interactions and explicit ratings. Hybrid approaches combine supervised and unsupervised techniques to handle cold-start problems and improve recommendation diversity while maintaining relevance.

Predictive Maintenance

Equipment failure prediction is a natural fit for supervised learning. Manufacturing and industrial operations use sensor data labeled with equipment failure events to train models that predict maintenance needs. This proactive approach reduces downtime, optimizes maintenance schedules, and extends equipment lifespan. Regression models predict remaining useful life, while classification algorithms identify failure modes.

Applications of Unsupervised Learning

Unsupervised machine learning enables organizations to extract value from unlabeled data, discovering hidden patterns and structures that inform strategic decisions. These applications showcase the exploratory power of unsupervised algorithms across diverse domains.

Customer Segmentation

Unsupervised learning excels when exploring data structures without predefined labels, like customer segmentation. Marketing teams use clustering algorithms to group customers based on purchasing behavior, demographics, and engagement patterns without predetermined categories.

These segments enable personalized marketing campaigns, targeted product development, and optimized resource allocation. K-means clustering and hierarchical methods identify natural customer groups, revealing insights that manual analysis might miss.

Anomaly Detection

Unsupervised learning excels at identifying unusual patterns in data without requiring labeled examples of anomalies. Applications span cybersecurity, network monitoring, and quality assurance.

Intrusion detection systems identify suspicious network traffic, fraud detection identifies unusual transaction patterns, and manufacturing systems detect defective products. These applications benefit from unsupervised approaches because anomalies are rare, making labeled datasets difficult to obtain.
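The idea of flagging rare events without labels can be illustrated with a simple statistical stand-in. The sketch below is not one of the algorithms named in this article (Isolation Forest, One-Class SVM, Local Outlier Factor). It uses the modified z-score based on the median and the median absolute deviation, with the conventional 3.5 cutoff. The function name and transaction amounts are hypothetical.

```python
import statistics


def flag_anomalies(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread to measure against
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - median) / mad > threshold
    ]


# Unlabeled transaction amounts with one unusually large entry (illustrative).
amounts = [42.0, 38.5, 45.2, 40.1, 39.9, 41.7, 980.0, 43.3]
anomalies = flag_anomalies(amounts)
```

Only the index of the 980.0 transaction is flagged. No example of a "fraudulent" transaction was ever provided, so the method relies entirely on how far a value sits from the bulk of the data.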

Market Basket Analysis

Retail organizations use association rule learning to discover product relationships and purchasing patterns. The Apriori algorithm identifies items frequently bought together, informing store layout optimization, cross-selling strategies, and inventory management. These insights drive promotional campaigns, bundle offers, and recommendation systems. Unsupervised learning reveals non-obvious associations that impact purchasing decisions.
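Association rules are usually judged by support, confidence, and lift. The following sketch computes these three measures for a single candidate rule. It does not implement the full Apriori search over itemsets, and the function name and shopping baskets are invented for illustration.

```python
def rule_metrics(transactions, antecedent, consequent):
    """Support, confidence, and lift for the rule antecedent -> consequent."""
    antecedent, consequent = set(antecedent), set(consequent)
    n = len(transactions)
    count_a = sum(1 for t in transactions if antecedent <= t)
    count_c = sum(1 for t in transactions if consequent <= t)
    count_both = sum(1 for t in transactions if (antecedent | consequent) <= t)

    # Support: share of all baskets containing both sides of the rule.
    support = count_both / n
    # Confidence: share of antecedent baskets that also contain the consequent.
    confidence = count_both / count_a if count_a else 0.0
    # Lift: confidence relative to the consequent's baseline frequency.
    lift = confidence / (count_c / n) if count_c else 0.0
    return support, confidence, lift


# Hypothetical transactional data: each basket is a set of items.
baskets = [
    {"bread", "butter", "milk"},
    {"bread", "butter"},
    {"bread", "jam"},
    {"milk", "eggs"},
    {"bread", "butter", "eggs"},
]
support, confidence, lift = rule_metrics(baskets, {"bread"}, {"butter"})
```

Here the rule "bread implies butter" has support 0.6, confidence 0.75, and lift 1.25. A lift above 1 suggests the items appear together more often than their individual frequencies would predict.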

Data Compression and Feature Extraction

Dimensionality reduction techniques compress high-dimensional data while preserving essential information. Principal Component Analysis transforms hundreds or thousands of features into a smaller set of principal components, reducing storage requirements and improving computational efficiency.

Applications include image compression, genetic data analysis, and preprocessing for supervised learning models. Reducing dimensionality mitigates overfitting and speeds up training while maintaining model performance.
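As a rough sketch of what PCA does, the code below finds the first principal component of a small dataset and projects the data onto it. It assumes power iteration on the sample covariance matrix, which recovers only the leading component, and a starting vector that is not orthogonal to that component. Production code would normally rely on a linear algebra library instead. All names and values are hypothetical.

```python
import math


def first_principal_component(rows, iterations=200):
    """Return the leading principal direction and the 1-D projections."""
    n, d = len(rows), len(rows[0])
    # Center each feature on its mean.
    means = [sum(row[j] for row in rows) / n for j in range(d)]
    centered = [[row[j] - means[j] for j in range(d)] for row in rows]
    # Sample covariance matrix (d x d).
    cov = [
        [sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]
    # Power iteration converges to the eigenvector with the largest eigenvalue.
    vec = [1.0] * d
    for _ in range(iterations):
        vec = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(v * v for v in vec))
        vec = [v / norm for v in vec]
    # Compress each row to a single coordinate along that direction.
    projections = [sum(c * v for c, v in zip(row, vec)) for row in centered]
    return vec, projections


# Two correlated features lying roughly along y = 2x (illustrative values).
rows = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8), (5.0, 10.1)]
component, projections = first_principal_component(rows)
```

The two original features collapse into one coordinate per row while keeping most of the variance, because the points lie close to a single line.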

Document Organization and Topic Modeling

Unsupervised learning automatically organizes large document collections, identifying themes and topics without manual categorization. News organizations cluster articles, research databases organize papers, and content platforms categorize user-generated content.

Latent Dirichlet Allocation (LDA) and other topic modeling techniques discover semantic themes, enabling better search functionality and content discovery. These approaches scale to millions of documents, providing structure to otherwise overwhelming information volumes.

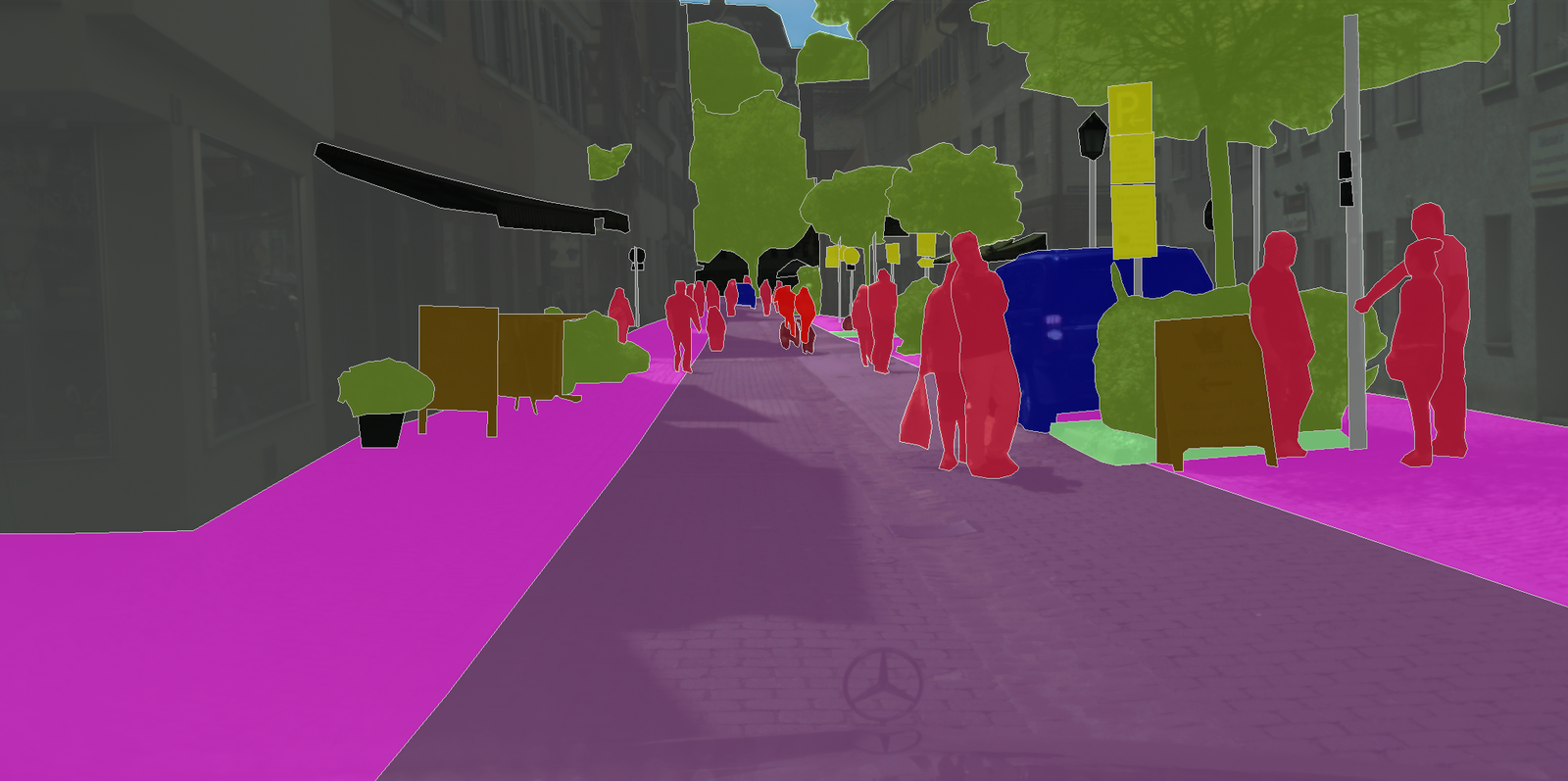

Image Segmentation

Clustering algorithms partition images into regions sharing similar characteristics, facilitating object detection and image analysis. Medical imaging uses segmentation to identify anatomical structures, satellite imagery analysis classifies land usage, and autonomous systems parse visual scenes. Unsupervised segmentation operates without labeled training examples, adapting to diverse image types and content.

Advantages and Disadvantages

Both supervised and unsupervised learning offer distinct benefits and face specific challenges. Understanding these trade-offs guides appropriate methodology selection for different scenarios.

Advantages of Supervised Learning

High accuracy and reliability: When trained on sufficient representative data, supervised models achieve excellent predictive performance. Clear objectives and measurable outcomes enable systematic optimization.

Well-defined problems: Explicit input-output relationships provide clarity in model development. Success criteria are objective and quantifiable, facilitating project management and stakeholder communication.

Mature ecosystem: Extensive literature, established best practices, and robust tools support supervised learning implementation. Practitioners access proven algorithms and comprehensive resources.

Interpretable results: Many supervised algorithms offer transparency in decision-making. Feature importance analysis reveals which inputs drive predictions, supporting regulatory compliance and building user trust.

Disadvantages of Supervised Learning

Data labeling costs: Creating and maintaining high-quality labeled datasets requires significant resources. Models also often need regular updating with new labeled data to stay accurate as real-world data changes over time.

Limited generalization: Models perform well only on patterns represented in training data. Distribution shifts and novel scenarios degrade performance, requiring retraining.

Scalability challenges: Labeling large datasets becomes prohibitively expensive. This limitation restricts applications in domains with abundant data but scarce labels.

Bias amplification: Models learn and perpetuate biases present in training labels. Historical discrimination encoded in labeled data propagates through predictions, raising ethical concerns.

Advantages of Unsupervised Learning

No labeling required: Processing unlabeled data eliminates expensive annotation processes. This advantage enables exploration of massive datasets and rapid prototype development.

Discovery of hidden patterns: Unsupervised algorithms reveal structures and relationships humans might not anticipate. These insights drive innovation and inform strategic decisions.

Scalability: Because no annotation step is needed, unsupervised methods can be applied to datasets containing millions or billions of examples, typically with the help of cloud computing and distributed algorithms. Compute cost, rather than labeling cost, becomes the limiting factor.

Adaptability: Models discover structures specific to available data without assuming predetermined categories. This flexibility supports exploration in novel domains.

Disadvantages of Unsupervised Learning

Lack of accuracy measures: Without ground truth, evaluating quality becomes subjective. Results may be mathematically sound but practically meaningless.

Interpretation challenges: Understanding what discovered patterns represent requires domain expertise. Clusters or components may lack clear semantic meaning.

Unpredictable results: Algorithm outcomes depend heavily on parameters, initialization, and data characteristics. Different runs may produce varying results, complicating reproducibility.

Limited actionability: Discovered patterns don’t automatically translate to specific actions or predictions. Additional analysis or supervised learning may be necessary to operationalize insights.

Choosing Between Supervised and Unsupervised Learning

Selecting the appropriate machine learning approach depends on multiple factors, including data availability, project objectives, resources, and business context. This decision significantly impacts project success and return on investment.

When to Use Supervised Learning

Choose supervised learning when:

Labeled data exists: If historical data with known outcomes is available, supervised models leverage this information effectively. Past customer churn events, historical sales figures, or medical diagnoses provide valuable training signals.

Prediction is the goal: When the objective involves forecasting future outcomes, classifying new instances, or automating decision-making, supervised learning excels. Applications requiring specific outputs benefit from this approach.

Clear success metrics matter: Projects requiring quantifiable performance metrics favor supervised methods. Accuracy, precision, recall, and error rates provide objective evaluation criteria.

Real-time decisions are needed: Trained supervised models make predictions rapidly, enabling real-time applications like fraud detection, recommendation systems, and autonomous systems.

Regulatory requirements exist: Industries requiring explainable AI for compliance benefit from interpretable supervised algorithms like decision trees and linear models.

When to Use Unsupervised Learning

Choose unsupervised learning when:

No labeled data is available: If obtaining labels is impossible, impractical, or prohibitively expensive, unsupervised approaches extract value from raw data. Exploratory phases often begin with unsupervised analysis.

Discovery is the objective: When goals involve understanding data structure, identifying patterns, or generating hypotheses, unsupervised learning reveals insights. Market research, customer analysis, and scientific exploration benefit from this approach.

Preprocessing is required: Dimensionality reduction and feature engineering using unsupervised techniques improve subsequent supervised learning performance. Compression reduces computational costs while maintaining information.

Anomaly detection is critical: Identifying rare events without labeled examples suits unsupervised methods. Cybersecurity, fraud detection, and quality control leverage these capabilities.

Data exploration is needed: Understanding unfamiliar datasets benefits from clustering, visualization, and pattern discovery. Initial exploration informs subsequent analysis strategies.

Hybrid Approaches

Many practical applications combine both paradigms. Semi-supervised learning uses small labeled datasets with large unlabeled collections, reducing annotation costs while maintaining prediction accuracy. Pre-training models using unsupervised learning on massive unlabeled data, then fine-tuning with supervised learning on task-specific labels, powers modern deep learning breakthroughs.

Active learning iteratively selects the most informative examples for labeling, optimizing the balance between annotation costs and model performance. These hybrid strategies leverage the strengths of both approaches while mitigating their individual weaknesses.
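The selection step in active learning can be sketched with uncertainty sampling, one common strategy among several. The example assumes a binary classifier that outputs a positive-class probability for each unlabeled example and picks those closest to 0.5. The function name and probabilities are hypothetical.

```python
def select_most_uncertain(probabilities, batch_size=2):
    """Return indices of the examples the model is least sure about."""
    # A probability near 0.5 means the binary classifier is nearly undecided.
    ranked = sorted(
        range(len(probabilities)),
        key=lambda i: abs(probabilities[i] - 0.5),
    )
    return ranked[:batch_size]


# Hypothetical model outputs for six unlabeled examples.
predicted = [0.97, 0.52, 0.08, 0.41, 0.88, 0.63]
to_label = select_most_uncertain(predicted)
```

The examples at indices 1 and 3 would be sent to human annotators first. The model would then be retrained with the new labels and the selection repeated.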

Real-World Examples and Case Studies

Practical implementations of supervised and unsupervised learning demonstrate their transformative impact across industries. These examples illustrate how organizations leverage machine learning to solve complex challenges and create competitive advantages.

Netflix Recommendation System

Netflix combines supervised and unsupervised learning to personalize content recommendations for millions of subscribers. Supervised algorithms predict user ratings based on historical viewing patterns and explicit ratings. Unsupervised clustering groups similar content and identifies user segments with comparable preferences. This hybrid approach delivers personalized recommendations that drive engagement and retention. The system continuously learns from user interactions, adapting to changing preferences and new content releases.

Google Photos Image Organization

Google Photos uses supervised learning for object recognition and unsupervised learning for automatic photo organization. Convolutional Neural Networks trained on massive labeled image datasets identify faces, objects, and scenes with impressive accuracy. Unsupervised clustering automatically groups photos by events, locations, and visual similarity without manual categorization. This combination creates intuitive organization and powerful search capabilities.

Amazon Customer Segmentation

Amazon employs unsupervised learning to segment customers into distinct groups based on browsing behavior, purchasing patterns, and demographic characteristics. K-means clustering and hierarchical methods identify customer segments for targeted marketing campaigns. These insights inform personalized product recommendations, email marketing strategies, and dynamic pricing. Supervised models predict purchase probability and customer lifetime value for each segment.

Credit Card Fraud Detection

Financial institutions use supervised learning to detect fraudulent transactions. Models trained on millions of historical transactions labeled as legitimate or fraudulent identify suspicious patterns in real time. Unsupervised anomaly detection complements supervised approaches by identifying novel fraud patterns not represented in training data. This layered defense adapts to evolving criminal tactics.

Medical Image Analysis

Healthcare providers use supervised learning for diagnostic support. Models trained on labeled medical images detect diabetic retinopathy, identify pneumonia in chest X-rays, and analyze MRI scans for tumors. Unsupervised learning assists in image preprocessing, artifact removal, and feature extraction. Dimensionality reduction techniques compress complex medical imaging data while preserving diagnostic information.

Future Trends and Developments

The field of machine learning continues evolving rapidly, with emerging trends reshaping how organizations implement supervised and unsupervised learning. Understanding these developments helps practitioners prepare for future opportunities and challenges.

Self-Supervised Learning

Self-supervised learning represents a paradigm shift, creating labeled data from unlabeled inputs through clever problem formulation. Models learn representations by predicting masked portions of inputs or solving auxiliary tasks, then transferring this knowledge to downstream applications.

This approach combines the benefits of supervised and unsupervised learning, achieving strong performance with minimal manual labeling. Language models and vision systems increasingly adopt self-supervised pre-training.
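The way self-supervised learning manufactures labels from raw data can be shown with a toy masked-word task. The sketch assumes whitespace tokenization and masks every position in turn. Real systems typically mask only a random subset of tokens and use learned tokenizers. The function name and the mask token string are illustrative.

```python
def make_masked_examples(sentence, mask_token="[MASK]"):
    """Turn one unlabeled sentence into (masked input, target word) pairs."""
    tokens = sentence.split()
    examples = []
    for i, target in enumerate(tokens):
        # Hide one token; the hidden token itself becomes the training label.
        masked = tokens[:i] + [mask_token] + tokens[i + 1:]
        examples.append((" ".join(masked), target))
    return examples


pairs = make_masked_examples("models learn from raw text")
```

Each pair looks like an ordinary supervised training example, yet no human annotation was involved. The label comes from the data itself.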

Few-Shot and Zero-Shot Learning

Advances in transfer learning enable models to perform tasks with minimal or no task-specific training examples. Few-shot learning adapts pre-trained models using tiny labeled datasets, while zero-shot learning performs tasks without any task-specific labeled examples, for instance from a natural language description of the task alone. These capabilities reduce data labeling requirements dramatically, democratizing machine learning for resource-constrained organizations and niche applications.

Explainable AI

Growing regulatory requirements and ethical concerns drive demand for interpretable machine learning models. New techniques provide transparency into complex neural networks, revealing how models make decisions. Explainable AI enhances trust, enables debugging, and supports compliance. Both supervised and unsupervised models increasingly incorporate interpretability features.

AutoML and Neural Architecture Search

Automated machine learning tools democratize supervised and unsupervised learning by automating algorithm selection, hyperparameter tuning, and model optimization. These systems enable non-experts to develop high-performing models efficiently. Neural Architecture Search automatically designs optimal neural network architectures for specific tasks, reducing manual engineering effort and accelerating innovation.

Edge Computing and TinyML

Deploying machine learning models on resource-constrained edge devices enables real-time processing without cloud connectivity. TinyML brings supervised and unsupervised learning to sensors, IoT devices, and embedded systems. This trend reduces latency, enhances privacy, and enables applications in environments with limited connectivity.


Conclusion

The comparison between supervised and unsupervised machine learning reveals two complementary approaches serving distinct purposes in the data science ecosystem. Supervised learning excels in predictive tasks requiring high accuracy when labeled data is available, powering applications from fraud detection to medical diagnosis. Unsupervised learning discovers hidden patterns and structures in unlabeled data, enabling customer segmentation, anomaly detection, and exploratory analysis.

The choice between these paradigms depends on data availability, project objectives, computational resources, and business requirements. Modern machine learning solutions increasingly combine both approaches through hybrid techniques like semi-supervised learning and self-supervised learning, leveraging their complementary strengths. As the field evolves with emerging trends like few-shot learning, explainable AI, and edge computing, practitioners must understand both paradigms to build effective solutions.

Whether implementing classification algorithms, clustering techniques, or hybrid systems, success requires matching the methodology to the specific problem context, available resources, and desired outcomes. By mastering both supervised and unsupervised learning, data scientists and organizations position themselves to extract maximum value from their data assets and drive innovation in an increasingly AI-powered world.