The revolution of autonomous robots has transformed industries ranging from manufacturing and logistics to healthcare and agriculture. These intelligent machines can perceive their environment, make informed decisions, and execute complex tasks without human intervention. At the core of this technology lie three fundamental capabilities: navigation, mapping, and decision making. Together, these pillars enable robots to traverse unknown territories, construct accurate representations of their surroundings, and choose optimal actions in dynamic environments.

Autonomous robot navigation is one of the most challenging and critical aspects of robotics engineering. Whether it’s a delivery drone navigating urban landscapes, a warehouse robot transporting goods, or a Mars rover exploring extraterrestrial terrain, the ability to move safely and efficiently is paramount. Modern navigation systems integrate sophisticated sensors, powerful processors, and advanced algorithms to achieve high levels of autonomy and reliability.

Mapping technology allows robots to create detailed spatial representations of their environment, transforming raw sensor data into actionable intelligence. Through techniques like SLAM (Simultaneous Localization and Mapping), robots can build maps while simultaneously determining their position within those maps—a computational feat that mirrors human spatial awareness. This dual capability forms the foundation for intelligent exploration and interaction with the physical world.

Decision-making algorithms empower robots to process vast amounts of information and select appropriate actions in real-time. From simple rule-based systems to complex artificial intelligence and machine learning frameworks, these decision mechanisms enable robots to adapt to changing conditions, avoid obstacles, optimize paths, and accomplish mission objectives with minimal human supervision. The integration of deep reinforcement learning and neural networks has particularly revolutionized how robots learn from experience and improve their performance over time.

As we delve deeper into each of these critical components, we’ll explore the technologies, algorithms, and methodologies that enable autonomous robots to navigate complex environments, create accurate maps, and make intelligent decisions.

Autonomous Robot Navigation

Autonomous robot navigation encompasses the technologies and methodologies that enable robots to move from one location to another without human guidance. This process involves perception, localization, path planning, and motion control working together to ensure safe and efficient movement.

Core Components of Navigation Systems

The foundation of any robot navigation system consists of several interconnected components. Sensor fusion plays a crucial role by combining data from multiple sources, including LiDAR, cameras, ultrasonic sensors, and inertial measurement units (IMUs). This integrated approach gives robots a comprehensive understanding of their surroundings, compensating for individual sensor limitations and enhancing overall reliability.

Localization represents another critical aspect, enabling robots to determine their precise position and orientation within an environment. Modern systems employ probabilistic approaches like Adaptive Monte Carlo Localization (AMCL) and Kalman filtering to estimate position with high accuracy despite sensor noise and environmental uncertainties. These algorithms continuously update the robot’s belief about its location by comparing sensor observations with known map data.
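To make the belief-update idea concrete, here is a minimal sketch of a discrete (histogram) Bayes filter on a five-cell circular corridor. It is a simplified stand-in for AMCL or Kalman filtering, not an implementation of either. The corridor layout, the `sense` and `move` helpers, and all probabilities are illustrative assumptions.

```python
def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]

def sense(belief, world, measurement, p_hit=0.8, p_miss=0.2):
    """Measurement update: weight each cell by how well it explains the reading."""
    weighted = [b * (p_hit if cell == measurement else p_miss)
                for b, cell in zip(belief, world)]
    return normalize(weighted)

def move(belief, step, p_exact=0.8, p_slip=0.1):
    """Motion update on a circular corridor; the robot may under- or overshoot by one cell."""
    n = len(belief)
    return [p_exact * belief[(i - step) % n]
            + p_slip * belief[(i - step - 1) % n]
            + p_slip * belief[(i - step + 1) % n]
            for i in range(n)]

# Known map (assumed): which landmark the robot would see in each cell.
world = ["door", "wall", "wall", "door", "wall"]
belief = [1.0 / len(world)] * len(world)  # start fully uncertain

# The robot senses, moves one cell to the right, senses again, and so on.
measurements = ["door", "wall", "wall"]
for i, measurement in enumerate(measurements):
    if i > 0:
        belief = move(belief, 1)
    belief = sense(belief, world, measurement)

best_cell = max(range(len(belief)), key=belief.__getitem__)
print(best_cell, [round(b, 3) for b in belief])
```

Only a start in cell 0 is consistent with seeing a door followed by two walls, so the belief concentrates on cell 2 after the third reading.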

Global vs. Local Navigation Strategies

Navigation systems typically operate on two distinct levels: global and local. Global path planning involves computing an optimal route from the starting position to the destination based on a complete map of the environment. Algorithms like A-star (A*), Dijkstra’s algorithm, and Rapidly-exploring Random Trees (RRT) are commonly employed for this purpose, each offering different trade-offs between computational efficiency and path optimality.

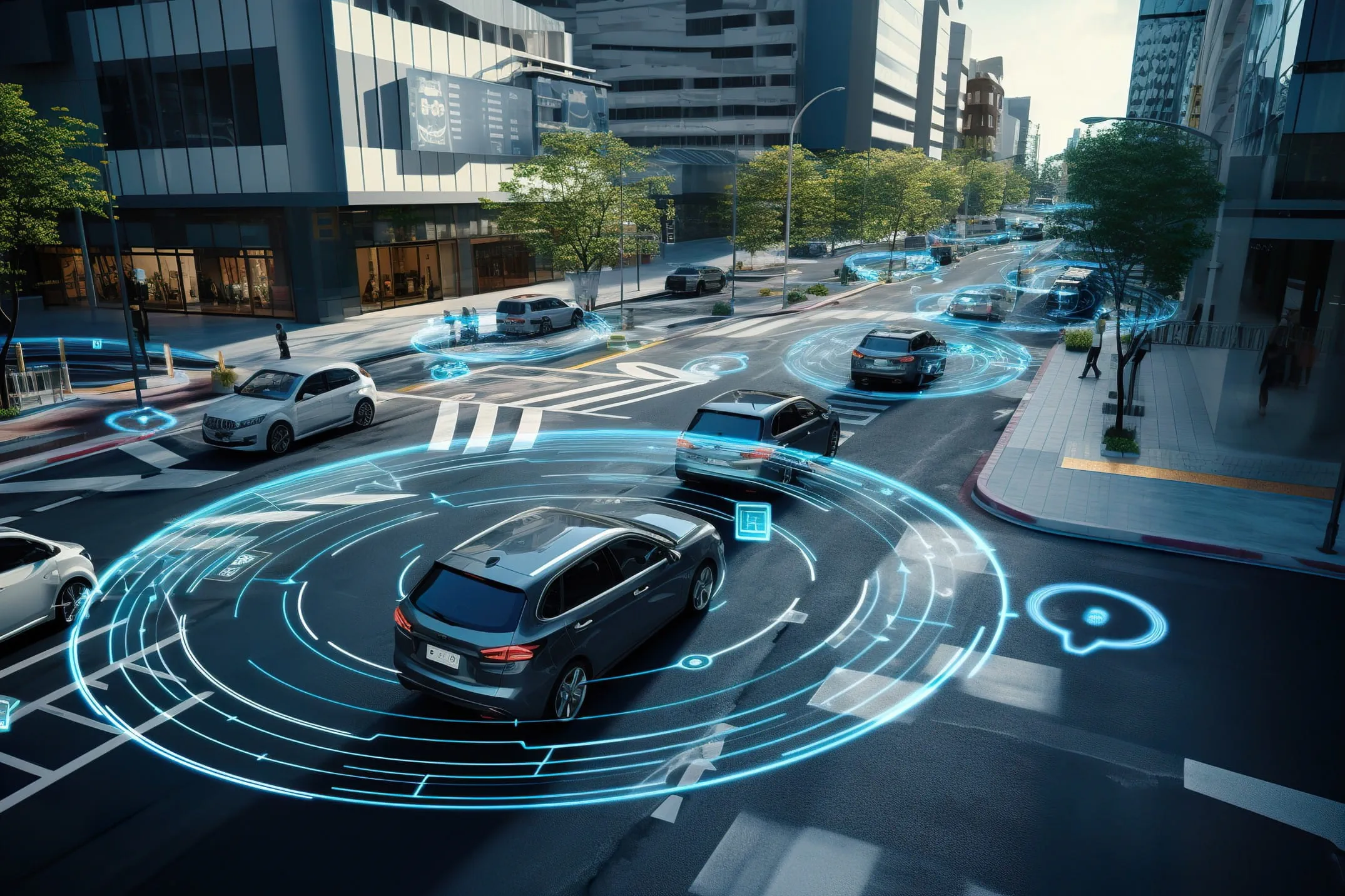

Local navigation, conversely, handles real-time obstacle avoidance and trajectory adjustment. The Dynamic Window Approach (DWA) has emerged as a popular technique for local planning, evaluating possible velocity commands within a dynamic window to select the safest and most efficient motion while considering the robot’s kinematic constraints. This approach enables autonomous robots to react quickly to unexpected obstacles and dynamic elements in their environment.
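The following is a rough sketch of one DWA control step for a unicycle-style robot, under several assumptions: obstacles are points, the scoring weights are arbitrary, and the function names (`simulate`, `clearance`, `dwa_step`) and all limits are hypothetical rather than taken from any particular library.

```python
import math

def simulate(x, y, theta, v, w, dt=0.1, steps=20):
    """Roll a unicycle model forward; return the (x, y) points visited."""
    points = []
    for _ in range(steps):
        theta += w * dt
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        points.append((x, y))
    return points

def clearance(points, obstacles):
    """Smallest distance from any trajectory point to any obstacle point."""
    return min((math.hypot(px - ox, py - oy)
                for px, py in points for ox, oy in obstacles),
               default=math.inf)

def dwa_step(state, goal, obstacles, v_max=1.0, w_max=1.5,
             accel=0.5, ang_accel=2.0, dt=0.1, radius=0.3, samples=7):
    x, y, theta, v, w = state
    # Dynamic window: velocities reachable within one control period.
    v_lo, v_hi = max(0.0, v - accel * dt), min(v_max, v + accel * dt)
    w_lo, w_hi = max(-w_max, w - ang_accel * dt), min(w_max, w + ang_accel * dt)
    # Simplification: if every candidate collides, command a stop.
    best_cmd, best_score = (0.0, 0.0), -math.inf
    for i in range(samples):
        cand_v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            cand_w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            points = simulate(x, y, theta, cand_v, cand_w, dt)
            gap = clearance(points, obstacles)
            if gap <= radius:
                continue  # trajectory would hit an obstacle
            end_x, end_y = points[-1]
            # Assumed weights: progress to goal, clearance, forward speed.
            score = (-math.hypot(goal[0] - end_x, goal[1] - end_y)
                     + 0.2 * min(gap, 2.0) + 0.1 * cand_v)
            if score > best_score:
                best_cmd, best_score = (cand_v, cand_w), score
    return best_cmd

# Robot at the origin facing +x, moving at 0.5 m/s, goal straight ahead.
print(dwa_step((0.0, 0.0, 0.0, 0.5, 0.0), (5.0, 0.0), []))
```

A production planner would also check the robot footprint against a costmap and confirm that it can brake before the nearest obstacle; both are omitted here to keep the window-sample-score loop visible.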

Vision-Based Navigation Technologies

Visual navigation has gained tremendous traction with advances in computer vision and deep learning. Robots equipped with cameras can extract rich environmental information, recognize objects, and interpret semantic meaning from their surroundings. Visual odometry estimates motion by analyzing sequential camera frames, providing position estimates even when GPS signals are unavailable or unreliable.

The integration of GPS navigation with visual systems creates robust hybrid approaches, particularly effective for outdoor applications. This combination allows robots to leverage global positioning for coarse localization while employing vision-based methods for fine-grained navigation and obstacle detection in environments like agricultural fields or urban streets.

SLAM: The Foundation of Robot Mapping

Simultaneous Localization and Mapping (SLAM) represents one of the most significant breakthroughs in autonomous robotics. This technology enables autonomous robots to construct maps of unknown environments while concurrently tracking their location within those maps, a chicken-and-egg problem that has captivated researchers for decades.

Types of SLAM Algorithms

SLAM algorithms can be broadly categorized based on the sensors they utilize and the mathematical frameworks they employ. Laser SLAM uses LiDAR sensors to create highly accurate 2D or 3D maps by measuring distances to surrounding objects. These systems excel in structured environments and provide consistent performance across varying lighting conditions.

Visual SLAM (V-SLAM) leverages camera data to build maps and track position simultaneously. Recent advances in V-SLAM have incorporated deep learning techniques, enabling robots to understand semantic information beyond simple geometric features. Feature-based systems like ORB-SLAM track distinctive image features such as corners for robust localization, while neural systems like NICE-SLAM learn an implicit scene representation; both have demonstrated strong performance in complex environments.

Graph-based SLAM approaches represent the environment and robot trajectory as a graph where nodes correspond to robot poses and edges represent spatial constraints between them. These methods optimize the entire graph simultaneously, correcting accumulated errors through loop closure detection—the process of recognizing previously visited locations.
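As a toy illustration of how a loop closure redistributes accumulated error, the sketch below optimizes a one-dimensional pose graph by coordinate descent on the least-squares cost. Real systems work in 2D or 3D with weighted, nonlinear constraints and sparse solvers; the measurements here are invented, and `optimize_pose_graph` is a hypothetical helper.

```python
def optimize_pose_graph(num_poses, constraints, iterations=200):
    """Least-squares poses for a 1D graph; pose 0 is fixed at 0 as the anchor.

    Each constraint (a, b, z) says "pose b minus pose a was measured as z".
    With equal weights, the best value for one pose given its neighbours is
    the average of what each attached constraint predicts for it.
    """
    poses = [0.0] * num_poses
    for _ in range(iterations):
        for i in range(1, num_poses):
            predictions = []
            for a, b, z in constraints:
                if b == i:
                    predictions.append(poses[a] + z)
                elif a == i:
                    predictions.append(poses[b] - z)
            poses[i] = sum(predictions) / len(predictions)
    return poses

constraints = [
    (0, 1, 1.1),  # odometry, assumed to over-read each 1.0 m step
    (1, 2, 1.1),
    (2, 3, 1.1),
    (0, 3, 3.0),  # loop closure: pose 3 recognised as 3.0 m from pose 0
]
poses = optimize_pose_graph(4, constraints)
print([round(p, 3) for p in poses])
```

Dead reckoning alone would place the last pose at 3.3 m. With the loop closure added, the optimizer settles on 3.075 m and spreads the correction evenly over the three odometry edges.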

Challenges in SLAM Implementation

Despite significant progress, SLAM technology faces several persistent challenges. Error accumulation remains a fundamental issue, as small uncertainties in sensor measurements compound over time, causing maps to distort and position estimates to drift. Loop closure techniques help mitigate this problem by recognizing when a robot returns to a previously mapped area and adjusting the map accordingly.

Dynamic environments pose another significant challenge. Traditional SLAM algorithms assume static surroundings, but real-world scenarios often include moving objects like people, vehicles, or other robots. Modern approaches incorporate dynamic object detection and tracking to filter out transient elements and maintain consistent map representations.

Computational efficiency presents practical limitations, especially for resource-constrained platforms. Processing high-resolution sensor data in real-time demands substantial computational power. Researchers have developed optimized algorithms and selective processing techniques to balance accuracy with computational feasibility, enabling SLAM deployment on embedded systems and mobile platforms.

Advanced SLAM Techniques

Neural implicit SLAM represents the cutting edge of mapping technology, using neural networks to encode environmental representations implicitly. These approaches can capture complex geometric details and handle sensor noise more gracefully than traditional methods. Semantic SLAM extends beyond geometric mapping to include object recognition and scene understanding, enabling autonomous robots to build maps that capture what objects are present, not just where surfaces exist.

Multi-robot collaborative mapping leverages multiple robots simultaneously exploring an environment, sharing information to build comprehensive maps more quickly than individual agents. This approach requires sophisticated communication protocols and data fusion techniques to maintain consistency across distributed observations.

Path Planning Algorithms and Strategies

Path planning constitutes the computational process of determining an optimal or feasible route between a start location and a goal position. This critical capability enables autonomous robots to navigate efficiently while avoiding obstacles and satisfying various constraints like energy consumption, time, and safety margins.

Classical Path Planning Methods

Graph-based search algorithms remain foundational in path planning. Dijkstra’s algorithm guarantees finding the shortest path on a graph with non-negative edge costs by expanding nodes in order of increasing distance from the start node. While computationally intensive for large environments, its completeness and optimality make it valuable for offline planning scenarios.

The A-star (A*) algorithm improves upon Dijkstra’s approach by incorporating heuristic guidance toward the goal, dramatically reducing computational requirements. By prioritizing paths that appear promising based on estimated remaining distance, A* pathfinding efficiently navigates complex environments while maintaining optimality guarantees when using admissible heuristics.
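A compact sketch of A* on a 4-connected occupancy grid follows. It assumes unit step costs and uses the Manhattan distance as the heuristic, which is admissible under those assumptions. The grid and the `a_star` function name are illustrative.

```python
import heapq

def a_star(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (blocked), or None."""
    def heuristic(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]  # entries are (f, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, current = heapq.heappop(open_heap)
        if g > g_cost[current]:
            continue  # stale heap entry; a cheaper route was found later
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < len(grid) and 0 <= nc < len(grid[0])):
                continue
            if grid[nr][nc] == 1:
                continue
            new_g = g + 1
            if new_g < g_cost.get(nxt, float("inf")):
                g_cost[nxt] = new_g
                came_from[nxt] = current
                heapq.heappush(open_heap, (new_g + heuristic(nxt), new_g, nxt))
    return None

grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
path = a_star(grid, (0, 0), (3, 0))
print(path)
```

Setting the heuristic to zero turns this into Dijkstra’s algorithm, which is a convenient way to compare how many nodes each variant expands.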

Visibility graphs and Voronoi diagrams offer geometric approaches to path planning. Visibility graphs connect visible vertices in the environment, while Voronoi diagrams maximize clearance from obstacles. These methods excel in static environments with polygonal obstacles but require significant preprocessing for complex spaces.

Sampling-Based Planning Techniques

Rapidly-exploring Random Trees (RRT) and its variants have revolutionized path planning in high-dimensional spaces. RRT probabilistically samples the configuration space, growing a tree structure that explores the environment efficiently. RRT-star (RRT*) extends this approach by continuously refining the tree to improve path quality as more samples are added.
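Below is a minimal 2D sketch of basic RRT (not RRT*), assuming circular obstacles, a square workspace, and a small goal bias. The parameter values, the fixed random seed, and the helper names are illustrative choices, and the linear nearest-neighbour search is only reasonable for small trees.

```python
import math
import random

def segment_hits_circle(p, q, center, radius):
    """True if the segment p-q passes within `radius` of `center`."""
    dx, dy = q[0] - p[0], q[1] - p[1]
    length_sq = dx * dx + dy * dy
    t = 0.0
    if length_sq > 0.0:
        t = ((center[0] - p[0]) * dx + (center[1] - p[1]) * dy) / length_sq
        t = max(0.0, min(1.0, t))
    return math.hypot(p[0] + t * dx - center[0], p[1] + t * dy - center[1]) <= radius

def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tolerance=0.5, goal_bias=0.1, max_iters=3000, seed=0):
    rng = random.Random(seed)
    nodes, parent = [start], {0: None}
    for _ in range(max_iters):
        # Occasionally sample the goal itself to pull the tree toward it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        nearest = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        dist = math.dist(nodes[nearest], sample)
        if dist == 0.0:
            continue
        # Steer from the nearest node toward the sample by at most `step`.
        scale = min(step, dist) / dist
        new = (nodes[nearest][0] + (sample[0] - nodes[nearest][0]) * scale,
               nodes[nearest][1] + (sample[1] - nodes[nearest][1]) * scale)
        if any(segment_hits_circle(nodes[nearest], new, c, r) for c, r in obstacles):
            continue
        nodes.append(new)
        parent[len(nodes) - 1] = nearest
        if math.dist(new, goal) <= goal_tolerance:
            path, i = [], len(nodes) - 1
            while i is not None:
                path.append(nodes[i])
                i = parent[i]
            return path[::-1]
    return None

obstacles = [((5.0, 5.0), 1.5)]  # (center, radius)
path = rrt((1.0, 1.0), (9.0, 9.0), obstacles)
print(len(path) if path else "no path found")
```

The returned path is feasible but usually jagged; RRT* or a post-processing smoother would be the natural next step.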

Probabilistic Roadmaps (PRM) create a network of collision-free configurations connected by local paths. This preprocessing phase enables rapid query responses for multiple path planning requests. PRM methods prove particularly effective in complex environments where multiple navigation queries will be executed using the same map.

Intelligent Path Planning Approaches

Reinforcement learning has emerged as a powerful paradigm for path planning, enabling autonomous robots to learn optimal navigation policies through trial and error. Deep Q-Networks (DQN) and other deep reinforcement learning methods allow robots to handle high-dimensional sensor inputs and discover sophisticated navigation strategies that traditional algorithms might miss.

Genetic algorithms and other evolutionary approaches optimize paths by treating potential routes as individuals in a population, applying selection, crossover, and mutation operations to evolve better solutions. These methods excel at multi-objective optimization, simultaneously considering factors like path length, smoothness, energy efficiency, and safety.

Neural network-based planning employs trained models to generate paths directly from sensor data. End-to-end learning approaches can bypass explicit map representations, learning implicit world models that support navigation. This paradigm shows particular promise for handling uncertain or partially observable environments.

Decision Making in Autonomous Robots

Decision-making represents the cognitive capability that enables robots to select appropriate actions based on environmental perception, internal goals, and learned experiences. This process transforms sensor data and mission objectives into concrete robot behaviors that accomplish desired tasks.

Rule-Based Decision Systems

Traditional robot decision-making employed rule-based systems where engineers explicitly programmed if-then logic to handle various scenarios. Finite State Machines (FSMs) structure decision-making as transitions between discrete states triggered by specific conditions. While transparent and predictable, these systems struggle with complexity and lack adaptability to unforeseen situations.
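A finite state machine of this kind can be sketched as a transition table. The states and events below are hypothetical examples for a delivery robot, not a standard set.

```python
# (current state, event) -> next state; unlisted pairs leave the state unchanged.
TRANSITIONS = {
    ("idle", "task_received"): "navigating",
    ("navigating", "obstacle_detected"): "avoiding",
    ("avoiding", "path_clear"): "navigating",
    ("navigating", "goal_reached"): "idle",
    ("navigating", "battery_low"): "charging",
    ("idle", "battery_low"): "charging",
    ("charging", "battery_full"): "idle",
}

def step(state, event):
    """Apply one event; unknown events are ignored."""
    return TRANSITIONS.get((state, event), state)

def run(events, state="idle"):
    for event in events:
        state = step(state, event)
    return state

print(run(["task_received", "obstacle_detected", "path_clear", "goal_reached"]))
```

The table makes the predictability and the brittleness both visible: every behaviour is listed explicitly, and any situation that is not listed is silently ignored.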

Behavior trees offer a more flexible hierarchical framework for decision making. Originally developed for video game AI, behavior trees organize actions and conditions in a tree structure that supports modular design and easier debugging. Robots can execute complex sequences of behaviors while responding dynamically to environmental changes.
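The sketch below shows the two core composite nodes, sequence and selector (fallback), in a deliberately reduced form. It omits the RUNNING status that most behavior tree libraries use for long-lived actions, and the node classes, blackboard keys, and action names are all assumptions made for illustration.

```python
SUCCESS, FAILURE = "success", "failure"

class Leaf:
    """Wraps a function of the blackboard; a truthy return means success."""
    def __init__(self, fn):
        self.fn = fn

    def tick(self, blackboard):
        return SUCCESS if self.fn(blackboard) else FAILURE

class Sequence:
    """Succeeds only if every child succeeds, evaluated left to right."""
    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) == FAILURE:
                return FAILURE
        return SUCCESS

class Selector:
    """Succeeds as soon as one child succeeds, evaluated left to right."""
    def __init__(self, *children):
        self.children = children

    def tick(self, blackboard):
        for child in self.children:
            if child.tick(blackboard) == SUCCESS:
                return SUCCESS
        return FAILURE

def act(name):
    """Stand-in action that records its name instead of driving hardware."""
    def run(blackboard):
        blackboard["log"].append(name)
        return True
    return Leaf(run)

tree = Selector(
    Sequence(Leaf(lambda bb: bb["battery"] < 0.2), act("dock")),
    Sequence(Leaf(lambda bb: bb["has_package"]), act("deliver")),
    act("patrol"),
)

def decide(battery, has_package):
    blackboard = {"battery": battery, "has_package": has_package, "log": []}
    tree.tick(blackboard)
    return blackboard["log"]

print(decide(0.1, True), decide(0.9, True), decide(0.9, False))
```

Priorities are encoded by child order under the selector, so adding a new behavior means inserting one subtree rather than rewriting a transition table.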

Probabilistic Decision Making

Probabilistic approaches acknowledge the uncertainty inherent in sensor measurements and environmental dynamics. Markov Decision Processes (MDPs) model decision making as selecting actions that maximize expected cumulative reward over time. Partially Observable MDPs (POMDPs) extend this framework to situations where the robot cannot directly observe its complete state, maintaining probability distributions over possible states.
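As a small worked example of the MDP formulation, the sketch below runs value iteration on a five-cell corridor where moves succeed with probability 0.8 and otherwise leave the robot in place. The rewards, discount factor, and the `value_iteration` function are illustrative assumptions.

```python
def value_iteration(n_states=5, goal=4, gamma=0.95, p_success=0.8, tol=1e-9):
    actions = {"left": -1, "right": +1}
    values = [0.0] * n_states  # the goal is terminal, so its value stays 0

    def q_value(state, move):
        """Expected return of taking `move` in `state` under current values."""
        target = min(max(state + move, 0), n_states - 1)  # walls clamp motion
        total = 0.0
        for nxt, prob in ((target, p_success), (state, 1.0 - p_success)):
            reward = -1.0 + (10.0 if nxt == goal else 0.0)  # step cost, goal bonus
            total += prob * (reward + gamma * values[nxt])
        return total

    while True:
        delta = 0.0
        for s in range(n_states):
            if s == goal:
                continue
            best = max(q_value(s, m) for m in actions.values())
            delta = max(delta, abs(best - values[s]))
            values[s] = best
        if delta < tol:
            break

    policy = {s: max(actions, key=lambda a: q_value(s, actions[a]))
              for s in range(n_states) if s != goal}
    return values, policy

values, policy = value_iteration()
print([round(v, 3) for v in values], policy)
```

The values rise toward the goal and the greedy policy is "right" in every non-terminal cell. A POMDP would replace the known state with a belief distribution, which is considerably more expensive to solve.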

Bayesian networks provide a structured representation of probabilistic relationships between variables, enabling robots to reason under uncertainty. These graphical models support both forward prediction and backward inference, allowing robots to estimate hidden state variables from observations and make informed decisions based on incomplete information.

Fuzzy Logic Decision Systems

Fuzzy logic enables robots to handle imprecise and ambiguous information, mimicking human reasoning patterns. Rather than binary true/false evaluations, fuzzy systems operate with degrees of truth, allowing smooth transitions between different behaviors. This approach proves particularly valuable for obstacle avoidance and navigation in cluttered environments where crisp boundaries don’t exist.

Fuzzy controllers evaluate multiple linguistic rules simultaneously, combining their outputs through defuzzification processes to produce concrete control commands. The interpretability of fuzzy rules facilitates human understanding and system tuning, making them popular in applications requiring transparency and safety verification.
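A minimal fuzzy speed controller might look like the sketch below. It uses three linguistic rules (near: stop, medium: half speed, far: full speed) and a weighted-average, Sugeno-style defuzzification rather than a centroid over output sets. The membership breakpoints and output speeds are assumptions chosen for readability.

```python
def falling(x, lo, hi):
    """Membership that is 1 below `lo`, 0 above `hi`, linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)

def rising(x, lo, hi):
    return 1.0 - falling(x, lo, hi)

def triangle(x, a, b, c):
    """Membership that peaks at `b` and is 0 outside (a, c)."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)

def fuzzy_speed(distance_m):
    """Map obstacle distance (metres) to a normalised speed command in [0, 1]."""
    near = falling(distance_m, 0.5, 1.5)
    medium = triangle(distance_m, 0.5, 1.5, 3.0)
    far = rising(distance_m, 1.5, 3.0)
    # Each rule pairs a firing strength with a crisp output speed.
    rules = [(near, 0.0), (medium, 0.5), (far, 1.0)]
    total = sum(weight for weight, _ in rules)
    return sum(weight * speed for weight, speed in rules) / total

for d in (0.2, 1.0, 1.5, 2.25, 5.0):
    print(d, fuzzy_speed(d))
```

At 1.0 m the "near" and "medium" rules each fire at 0.5, so the command blends to a quarter of full speed instead of switching abruptly.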

AI and Machine Learning for Decision Making

Artificial intelligence and machine learning have revolutionized the decision-making capabilities of autonomous robots. Deep neural networks can learn complex decision policies from data, discovering patterns and strategies that surpass hand-engineered approaches. Convolutional Neural Networks (CNNs) excel at processing visual information for object recognition and scene understanding that inform navigation decisions.

Deep reinforcement learning combines neural networks with reinforcement learning principles, enabling robots to learn sophisticated behaviors through interaction with their environment. Algorithms like Deep Deterministic Policy Gradient (DDPG) and Proximal Policy Optimization (PPO) have achieved remarkable success in continuous control tasks, allowing robots to learn nuanced motor skills and navigation strategies.

Transfer learning allows robots to leverage knowledge acquired in one domain to accelerate learning in related domains. Pre-trained models can be fine-tuned for specific tasks, dramatically reducing the data requirements and training time needed to achieve competent performance.

Sensor Technologies for Autonomous Navigation

Sensors serve as the eyes and ears of autonomous robots, gathering critical information about the environment that enables perception, localization, and decision making. Modern robots typically employ multiple sensor types, leveraging their complementary strengths through sensor fusion techniques.

LiDAR and Range Sensors

LiDAR (Light Detection and Ranging) has become ubiquitous in autonomous robotics, providing precise distance measurements by timing how long a laser pulse takes to reach a surface and return. These sensors generate detailed point clouds representing the 3D structure of the surroundings, typically with centimeter-level accuracy or better depending on the sensor and range. 2D LiDAR scans a single plane, ideal for ground-level obstacle detection, while 3D LiDAR captures volumetric data for comprehensive environmental modeling.
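The time-of-flight relationship is simple enough to write down directly: range is half the round-trip time multiplied by the speed of light. The helper names below are hypothetical, and the conversion to Cartesian points assumes a 2D scanner reporting a bearing in the sensor frame.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum

def tof_to_range(round_trip_seconds):
    """Range in metres from a round-trip time; the pulse travels out and back."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0

def polar_to_point(range_m, bearing_rad):
    """Convert one 2D scan return to (x, y) in the sensor frame."""
    return (range_m * math.cos(bearing_rad), range_m * math.sin(bearing_rad))

print(tof_to_range(66.7e-9))   # a return after about 66.7 ns is roughly 10 m away
print(tof_to_range(1e-9))      # 1 ns of round-trip time is roughly 0.15 m of range
print(polar_to_point(2.0, math.pi / 2))
```

Because one nanosecond of round-trip time corresponds to roughly 15 cm of range, timing precision is what ultimately drives range accuracy.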

Ultrasonic sensors offer cost-effective proximity detection for close-range obstacle avoidance. While limited in range and resolution compared to LiDAR, their reliability and low cost make them valuable supplements in multi-sensor systems. Infrared range finders provide similar functionality with different environmental sensitivities.

Vision Sensors and Cameras

Camera systems provide rich visual information, enabling object recognition, texture analysis, and semantic analysis. Monocular cameras offer simplicity and low cost but require sophisticated algorithms to estimate depth. Stereo camera pairs mimic human binocular vision, computing depth through triangulation between corresponding features in left and right images.
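For a rectified stereo pair, triangulation reduces to depth = focal length × baseline / disparity. The sketch below applies that relation; the camera parameters in the example are made-up values, and `stereo_depth` is a hypothetical helper name.

```python
def stereo_depth(disparity_px, focal_length_px, baseline_m):
    """Depth in metres for a rectified stereo pair.

    disparity_px: horizontal pixel shift of a feature between left and right images.
    focal_length_px: focal length expressed in pixels.
    baseline_m: distance between the two camera centres in metres.
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a finite depth")
    return focal_length_px * baseline_m / disparity_px

# Assumed rig: 700 px focal length, 12 cm baseline.
print(stereo_depth(35.0, 700.0, 0.12))  # larger disparity means a closer point
print(stereo_depth(7.0, 700.0, 0.12))   # small disparity means a distant point
```

Since depth is inversely proportional to disparity, a one-pixel matching error matters far more for distant points than for nearby ones.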

RGB-D cameras combine color imaging with depth sensing, directly providing 3D structural information. These sensors enable rapid scene understanding and facilitate visual SLAM implementation. Thermal cameras extend perception beyond visible wavelengths, capturing heat signatures useful for detecting people or equipment in low-visibility conditions.

Inertial and Position Sensors

Inertial Measurement Units (IMUs) combine accelerometers, gyroscopes, and sometimes magnetometers to measure acceleration, rotation, and orientation. These sensors provide high-frequency motion data essential for smooth control and can complement other position estimation methods. However, IMU measurements drift over time, requiring periodic correction from absolute position references.
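A short worked equation shows why this drift grows so quickly. Assume, as a simplification, that the only error is a constant accelerometer bias $b$ and that position is obtained by integrating acceleration twice. The resulting position error $e_p$ after time $t$ is:

```latex
e_p(t) = \int_0^t \!\! \int_0^{\tau} b \, ds \, d\tau = \tfrac{1}{2}\, b\, t^2
```

For an illustrative bias of $b = 0.01\ \text{m/s}^2$, the error after 60 seconds is $\tfrac{1}{2} \times 0.01 \times 60^2 = 18$ m. The quadratic growth is the reason inertial estimates are fused with absolute references rather than used alone for long periods.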

GPS receivers provide global position estimates invaluable for outdoor navigation. While standard GPS accuracy of several meters suffices for coarse localization, Real-Time Kinematic (RTK) GPS achieves centimeter-level precision through differential corrections. However, GPS signals become unreliable or unavailable in indoor environments, dense urban areas, or underground locations.

Sensor Fusion Methodologies

Sensor fusion combines data from multiple sensors to achieve superior performance compared to individual sensors. Kalman filters remain the classical approach, recursively estimating system state by weighting measurements according to their uncertainty. Extended Kalman Filters (EKF) and Unscented Kalman Filters (UKF) extend this framework to nonlinear systems common in robotics.
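The predict-update cycle can be sketched with a one-dimensional linear Kalman filter that fuses odometry increments (the control input) with noisy position fixes. The noise variances and sample data are invented for illustration, and `kalman_1d` is a hypothetical helper rather than a library function.

```python
def kalman_1d(controls, measurements, x0=0.0, p0=1.0,
              process_var=0.01, meas_var=0.25):
    """Track a 1D position; returns a list of (estimate, variance) per step."""
    x, p = x0, p0
    estimates = []
    for u, z in zip(controls, measurements):
        # Predict: apply the commanded displacement and grow the uncertainty.
        x = x + u
        p = p + process_var
        # Update: blend prediction and measurement according to their variances.
        gain = p / (p + meas_var)
        x = x + gain * (z - x)
        p = (1.0 - gain) * p
        estimates.append((x, p))
    return estimates

controls = [1.0, 1.0, 1.0]        # odometry says "moved 1 m" each step
measurements = [1.2, 1.9, 3.1]    # noisy absolute position fixes
estimates = kalman_1d(controls, measurements)
for x, p in estimates:
    print(round(x, 3), round(p, 4))
```

The variance shrinks at every step and ends below the variance of either source alone, which is the practical payoff of fusing the two.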

Particle filters offer an alternative probabilistic approach, representing state distributions with discrete samples. These methods handle multimodal distributions and nonlinear dynamics more naturally than Kalman-based approaches, though at a higher computational cost.
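For comparison, here is a minimal particle filter for one-dimensional tracking, assuming the robot moves along a line and receives noisy position fixes. The particle count, noise levels, fixed seed, and sample data are illustrative assumptions.

```python
import math
import random

def likelihood(measurement, particle, sigma):
    """Unnormalised Gaussian likelihood of a measurement given a particle."""
    return math.exp(-0.5 * ((measurement - particle) / sigma) ** 2)

def particle_filter(controls, measurements, n=500,
                    motion_sigma=0.1, meas_sigma=0.5, seed=0):
    rng = random.Random(seed)
    # Start with no idea where the robot is along a 10 m track.
    particles = [rng.uniform(0.0, 10.0) for _ in range(n)]
    for u, z in zip(controls, measurements):
        # Predict: move every particle, adding motion noise.
        particles = [p + u + rng.gauss(0.0, motion_sigma) for p in particles]
        # Weight: particles that explain the measurement well get more weight.
        weights = [likelihood(z, p, meas_sigma) for p in particles]
        # Resample: draw a new set in proportion to the weights.
        particles = rng.choices(particles, weights=weights, k=n)
    return sum(particles) / n

controls = [1.0] * 5                          # robot moves 1 m per step
measurements = [3.1, 3.9, 5.2, 5.9, 7.1]      # fixes near the true 3, 4, 5, 6, 7 m
estimate = particle_filter(controls, measurements)
print(round(estimate, 2))
```

Every step touches all 500 particles, which illustrates the higher computational cost compared with the handful of arithmetic operations in a scalar Kalman update.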

Modern deep learning approaches can learn sensor fusion directly from data, discovering optimal ways to combine heterogeneous inputs. These learned fusion strategies often outperform hand-engineered approaches in complex scenarios with intricate sensor interactions.

Real-World Applications of Autonomous Robots

Autonomous robots have transcended research laboratories to become valuable tools across diverse industries. Their navigation, mapping, and decision-making capabilities enable applications that were previously impossible or impractical.

Warehouse and Logistics Automation

Autonomous mobile robots have revolutionized warehouse operations, transporting goods, organizing inventory, and optimizing storage layouts. These systems navigate crowded facilities while coordinating with human workers and other robots. Advanced fleet management systems orchestrate hundreds of robots simultaneously, optimizing traffic flow and preventing deadlocks.

Companies have deployed sophisticated autonomous robot navigation systems that adapt to dynamic warehouse environments where layouts change frequently. These robots handle unexpected obstacles, navigate around humans safely, and integrate with existing warehouse management software.

Agricultural Robotics

Autonomous robots in agriculture perform tasks like crop monitoring, precision spraying, harvesting, and field mapping. GPS navigation combined with visual systems enables centimeter-level accuracy for row following and targeted interventions. Robots navigate complex plantation environments with obstacles like tree trunks while executing precision agricultural operations.

Advanced decision-making algorithms analyze crop health data, optimizing resource application and identifying areas requiring attention. Multi-robot systems can cover large fields efficiently, coordinating their activities to maximize productivity while minimizing resource consumption.

Service and Delivery Robots

Delivery robots navigate sidewalks and urban environments, transporting food, packages, and goods autonomously. These systems must handle unpredictable pedestrian behavior, varying terrain, and dynamic obstacles. Obstacle avoidance capabilities ensure safe operation in crowded spaces while maintaining delivery schedules.

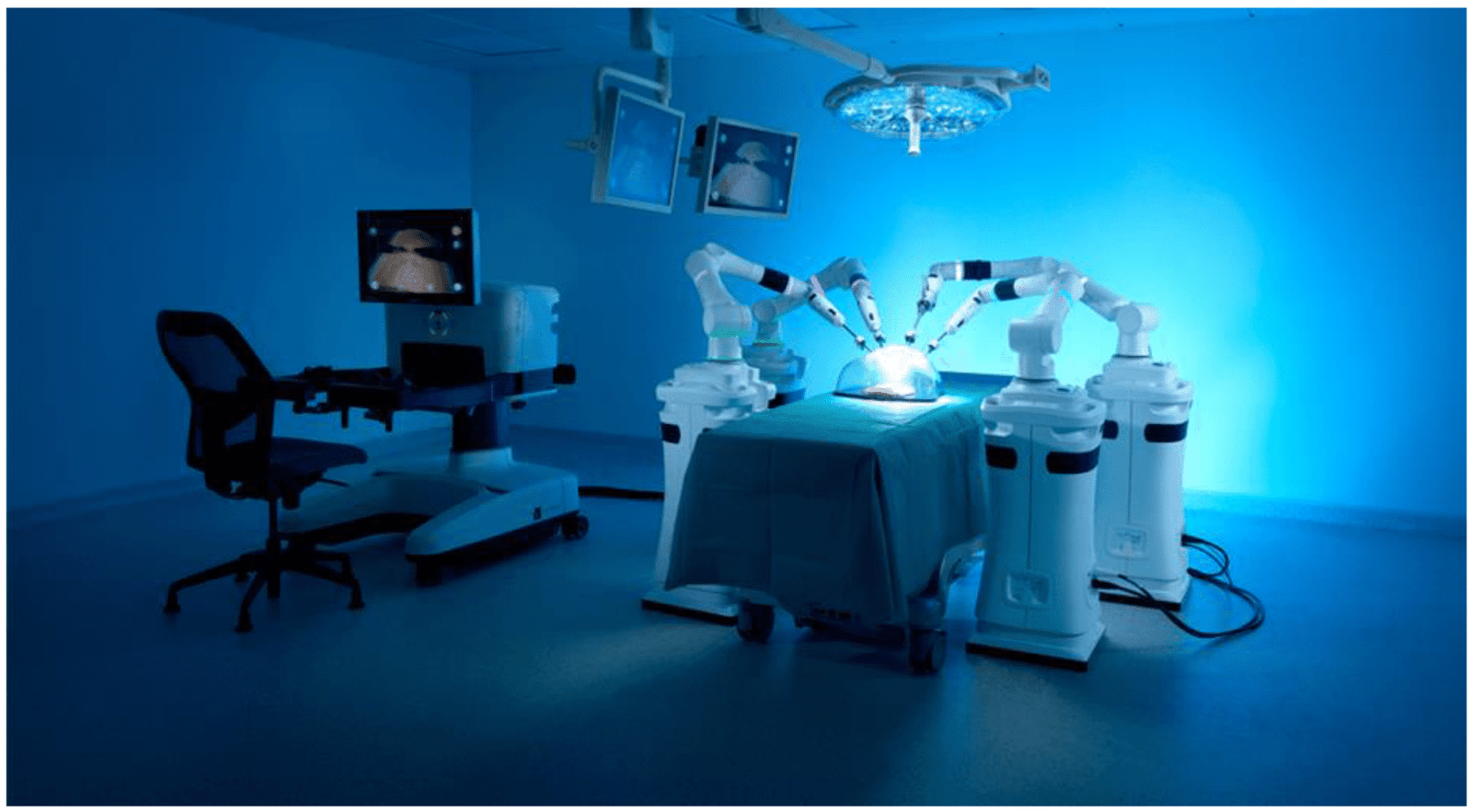

Service robots in hospitals, hotels, and public spaces navigate complex indoor environments, providing assistance, transportation, and information. Advanced path planning ensures efficient routes while maintaining comfortable social distances and respecting human preferences.

Exploration and Inspection

Autonomous robots excel at exploring dangerous or inaccessible environments. Mars rovers employ sophisticated navigation and mapping capabilities to traverse alien terrain millions of miles from Earth. Underwater robots map ocean floors and inspect underwater infrastructure where human access is limited or hazardous.

Inspection robots navigate industrial facilities, detecting equipment failures, gas leaks, or structural damage. Flying drones equipped with advanced sensors inspect bridges, power lines, and buildings, capturing detailed data while avoiding obstacles and managing limited battery capacity.

Challenges and Future Directions

Despite remarkable progress, autonomous robot technology continues to face significant challenges that researchers are actively addressing to enable more capable and reliable systems.

Technical Challenges

Computational limitations constrain the sophistication of algorithms deployable on mobile platforms with limited power budgets. While cloud robotics can offload processing, network latency and connectivity issues limit this approach. Developing efficient algorithms that balance performance with resource requirements remains an active research area.

Dynamic and unstructured environments present ongoing challenges for navigation systems. Handling crowds, unpredictable obstacles, and changing environmental conditions requires robust perception and adaptive decision-making. Adverse weather conditions like rain, fog, or snow can degrade sensor performance, necessitating multi-modal sensing strategies.

Safety and Reliability

Ensuring autonomous robot safety in human-populated environments demands rigorous verification and validation. Robots must exhibit predictable behavior, fail gracefully when encountering anomalies, and never endanger humans even under fault conditions. Developing formal verification methods for learning-based systems poses particular challenges given their complexity and data-driven nature.

Decision-making transparency becomes critical as robots take on more consequential tasks. Understanding why a robot made particular choices enables debugging, builds user trust, and facilitates legal and ethical accountability. Explainable AI research aims to make complex decision processes interpretable without sacrificing performance.

Future Research Directions

Multi-robot systems represent a frontier area where teams of robots coordinate to accomplish tasks beyond individual capabilities. Distributed decision making, communication protocols, and emergent collective behaviors offer exciting possibilities but introduce complexity in coordination and control.

Human-robot collaboration requires robots that understand human intentions, communicate naturally, and adapt to human preferences. Social navigation that respects personal space and cultural norms, combined with natural language interaction, will enable seamless human-robot teaming.

Lifelong learning capabilities would allow robots to continuously improve from experience, adapting to changing environments and accumulating knowledge over extended deployments. Transfer learning and meta-learning approaches show promise for enabling robots to generalize across tasks and environments.

Conclusion

Autonomous robots have emerged as transformative technology through the integration of sophisticated navigation systems, advanced mapping algorithms, and intelligent decision-making capabilities. From SLAM-based localization to deep reinforcement learning for adaptive behavior, these systems demonstrate remarkable abilities to perceive, understand, and interact with complex environments. The convergence of sensor fusion, path planning algorithms, and artificial intelligence has enabled applications spanning warehouses, agriculture, healthcare, and exploration.

While challenges in computational efficiency, safety verification, and dynamic environment handling persist, ongoing research in multi-robot coordination, explainable AI, and lifelong learning promises even more capable systems. As autonomous mobile robots continue evolving, they will increasingly augment human capabilities, performing tasks in environments too dangerous, distant, or demanding for direct human intervention, ultimately reshaping industries and expanding the boundaries of what machines can accomplish independently.