The artificial intelligence revolution is transforming how organizations approach cloud infrastructure. As businesses increasingly rely on AI and machine learning workloads, the limitations of single-cloud deployments have become apparent. A multi-cloud strategy for AI changes how enterprises architect their AI infrastructure: workloads are distributed across multiple cloud service providers to gain flexibility, run each workload where it performs best, and reach a wider range of AI services.

Industry surveys report that a large majority of enterprises (by some counts 87%) now use more than one cloud provider, with the desire to avoid vendor lock-in and to reach specialized AI services among the commonly cited reasons. This approach lets organizations use the AI platforms each provider offers, such as Google Cloud’s Vertex AI, Amazon SageMaker, or Microsoft’s Azure AI services (formerly Cognitive Services), without being confined to a single provider’s ecosystem.

The complexity of modern artificial intelligence applications demands infrastructure that can adapt to rapidly evolving technology. Different cloud providers excel in different aspects of AI implementation: some offer stronger natural language processing capabilities, while others provide more mature computer vision tools or specialized machine learning frameworks. By adopting a multi-cloud approach, organizations can harness these diverse strengths simultaneously, an advantage that is hard to replicate when every workload must run on one provider’s tooling.

However, implementing a successful multi-cloud AI strategy requires careful planning, robust governance frameworks, and sophisticated orchestration tools. Organizations must navigate challenges related to data portability, security consistency, cost management, and technical integration. The complexity is significant, but the rewards—enhanced resilience, optimized costs, accelerated innovation, and strategic flexibility—make it an increasingly essential approach for enterprises serious about AI-driven transformation.

This guide explores the fundamental benefits of multi-cloud strategies for AI workloads, practical implementation frameworks, and best practices that help organizations get the most from their AI investments while maintaining operational excellence. Whether you’re beginning your AI journey or optimizing existing deployments, it covers both the advantages and the costs to weigh before committing to a multi-cloud architecture.

Multi-Cloud Strategy in AI Context

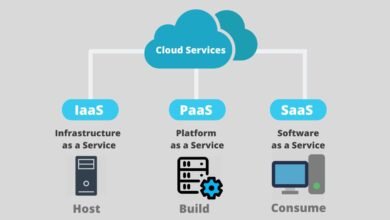

A multi-cloud strategy involves deliberately using services from multiple cloud providers to support an organization’s computing needs. In the context of AI and machine learning, this approach becomes particularly powerful because different cloud platforms offer distinct advantages for various AI workloads. Unlike hybrid cloud, which typically combines on-premises infrastructure with public cloud services, multi-cloud environments specifically leverage multiple public cloud providers simultaneously.

The strategic rationale for multi-cloud AI extends beyond simple risk diversification. Each major cloud provider has invested billions in developing proprietary AI services and machine learning platforms. AWS offers a broad machine learning platform in SageMaker alongside prebuilt services such as Rekognition for image and video analysis. Google Cloud provides strong capabilities in natural language processing and TensorFlow-based solutions. Microsoft Azure excels in enterprise AI integration and prebuilt cognitive services. By adopting a multi-cloud approach, organizations can choose the best tool for each use case.

Cloud-agnostic architectures form the foundation of successful multi-cloud strategies. These architectures rely on containers: Docker packages AI models together with their dependencies, and Kubernetes orchestrates those containers, so the same model image runs consistently across different cloud platforms. This portability is crucial for AI workloads because it allows data scientists to develop models in one environment and deploy them wherever they’re most cost-effective or performant, without extensive reconfiguration.

The multi-cloud infrastructure for AI typically involves several key components: unified data management systems that ensure consistent access to training datasets across clouds, orchestration platforms that automate deployment and scaling, standardized monitoring and observability tools, and centralized security and governance frameworks. These elements work together to create a cohesive operational environment despite the underlying platform diversity.

Key Benefits of Multi-Cloud Strategy for AI Workloads

Avoiding Vendor Lock-In and Ensuring Flexibility

Vendor lock-in represents one of the most significant risks in cloud computing, particularly for AI workloads that require substantial infrastructure investments. When organizations commit entirely to a single cloud provider, they become dependent on that provider’s pricing structures, service availability, and technological roadmap. A multi-cloud strategy reduces this dependency by distributing workloads across multiple platforms.

The flexibility offered by multi-cloud deployments manifests in several critical ways. Organizations can negotiate more favorable pricing terms when they’re not entirely dependent on one provider. They can migrate workloads between clouds based on performance requirements or cost considerations. If one provider experiences service degradation or increases prices unexpectedly, workloads can be shifted to alternative platforms with minimal disruption. This negotiating leverage and operational flexibility represent substantial competitive advantages.

For AI and machine learning specifically, avoiding vendor lock-in enables access to cutting-edge innovations as they emerge. When a new AI service or capability launches on one platform, organizations with multi-cloud architecture can immediately begin testing and integration without wholesale infrastructure changes. This agility accelerates innovation cycles and ensures that AI initiatives can leverage the latest technological advancements regardless of their origin.

Cost Optimization and Resource Efficiency

Cost optimization stands as a primary driver for multi-cloud adoption in AI contexts. Different cloud providers offer varying pricing models for compute resources, storage, and specialized AI services. By strategically distributing AI workloads across multiple platforms, organizations can select the most cost-effective option for each specific use case. Training large language models might be more economical on one platform, while inference workloads might cost less elsewhere.
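To make per-workload placement concrete, here is a minimal sketch that picks the cheapest provider for each workload from a price table. The provider names, the hourly prices, and the `cheapest_provider` helper are hypothetical placeholders, not real published rates; a real system would pull current prices from each provider’s pricing or billing APIs.

```python
from dataclasses import dataclass

# Hypothetical hourly prices (USD) per provider and workload type.
# Illustrative placeholders only, not real published rates.
PRICE_PER_HOUR = {
    "provider_a": {"training": 32.0, "inference": 1.20},
    "provider_b": {"training": 28.5, "inference": 1.45},
    "provider_c": {"training": 30.0, "inference": 1.05},
}


@dataclass
class Workload:
    name: str
    kind: str      # "training" or "inference"
    hours: float   # expected runtime in hours


def cheapest_provider(workload: Workload, prices: dict) -> tuple[str, float]:
    """Return (provider, estimated_cost) with the lowest compute cost for this workload."""
    candidates = {
        provider: rates[workload.kind] * workload.hours
        for provider, rates in prices.items()
        if workload.kind in rates
    }
    if not candidates:
        raise ValueError(f"no provider prices {workload.kind!r} workloads")
    best = min(candidates, key=candidates.get)
    return best, candidates[best]


for job in [Workload("llm-finetune", "training", 100), Workload("chat-api", "inference", 720)]:
    provider, cost = cheapest_provider(job, PRICE_PER_HOUR)
    print(f"{job.name}: {provider} (~${cost:,.2f})")
```

With these made-up numbers, training and inference land on different providers, which is exactly the split the paragraph describes. The sketch deliberately ignores data-transfer (egress) costs, which can outweigh compute savings when the training data lives on a different cloud.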

The dynamic nature of cloud pricing creates additional optimization opportunities. Cloud providers regularly adjust their pricing and offer promotional credits for specific services. Organizations with multi-cloud strategies can take advantage of these changes by shifting workloads to wherever pricing is most favorable, provided the savings exceed the cost of moving the associated data. This approach requires sophisticated cost monitoring and workload orchestration, but the savings can be substantial; figures of 20-30% compared to single-cloud deployments are sometimes cited, though actual results depend heavily on workload mix and data-transfer costs.

Resource efficiency extends beyond direct cost savings. Multi-cloud environments enable more precise matching of workload characteristics to platform capabilities. GPU-intensive machine learning training might run most efficiently on platforms with superior GPU availability and performance. Data preprocessing tasks might be handled more cost-effectively on platforms with robust data pipeline services. This granular optimization ensures that every component of the AI workflow operates in its ideal environment.

Enhanced Reliability and Disaster Recovery

Resilience and business continuity represent critical considerations for production AI systems. A multi-cloud strategy improves reliability by ensuring that no single provider is a single point of failure. When AI applications are distributed across multiple cloud providers, an outage affecting one platform doesn’t bring down the entire system. Critical workloads can automatically fail over to alternative clouds, keeping services running even during major provider disruptions.

For AI workloads that support business-critical operations—such as fraud detection, customer service automation, or predictive maintenance—this reliability becomes non-negotiable. Organizations can architect active-active configurations where identical AI services run simultaneously on multiple clouds, with load balancing distributing requests based on performance and availability. This approach not only prevents downtime but also optimizes performance by routing requests to the fastest-responding platform at any moment.
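In code, an active-active setup reduces to a per-request routing decision. The minimal sketch below assumes each cloud’s endpoint exposes a health flag and a recent latency measurement; the `Endpoint` type, the provider names, and `route_request` are hypothetical, and in production this logic usually lives in a global load balancer or DNS-based traffic manager rather than in application code.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    provider: str
    healthy: bool             # result of the latest health check (assumed available)
    recent_latency_ms: float  # recent observed response time


def route_request(endpoints: list[Endpoint]) -> Endpoint:
    """Send the request to the fastest healthy endpoint on any cloud."""
    healthy = [e for e in endpoints if e.healthy]
    if not healthy:
        raise RuntimeError("no healthy endpoint available on any cloud")
    return min(healthy, key=lambda e: e.recent_latency_ms)


endpoints = [
    Endpoint("cloud_a", healthy=True, recent_latency_ms=42.0),
    Endpoint("cloud_b", healthy=True, recent_latency_ms=35.0),
    Endpoint("cloud_c", healthy=False, recent_latency_ms=20.0),  # outage: excluded
]
print(route_request(endpoints).provider)  # cloud_b
```

Because unhealthy endpoints are filtered out before the latency comparison, failover and fastest-platform routing fall out of the same rule.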

Disaster recovery capabilities are significantly enhanced in multi-cloud architectures. AI models, training data, and application configurations can be replicated across multiple cloud regions and providers. In the event of a catastrophic failure, systems can be restored from backups stored on alternative platforms. This geographic and platform diversity makes it far less likely that a single incident, whether a technical failure, a cyberattack, or a natural disaster, can permanently compromise AI infrastructure.

Access to Best-of-Breed AI Services

Each major cloud provider has developed specialized AI services based on their unique strengths and strategic priorities. Google Cloud leads in natural language and neural network research, offering services like Vertex AI and advanced AutoML capabilities. AWS provides the broadest range of AI services with deep integration into its comprehensive cloud ecosystem. Azure excels in enterprise AI with strong integration to Microsoft’s productivity tools and specialized industry solutions.

A multi-cloud approach for AI enables organizations to leverage these specialized capabilities simultaneously. An enterprise might use Google Cloud’s natural language processing for customer sentiment analysis, AWS SageMaker for predictive modeling, and Azure Cognitive Services for computer vision applications. This best-of-breed selection ensures that each AI use case is powered by the most advanced, suitable technology available rather than being constrained by a single provider’s offerings.

Innovation acceleration represents another significant benefit. Cloud providers continuously release new AI capabilities and improve existing services. Organizations with multi-cloud strategies can immediately begin experimenting with these innovations without waiting for equivalent capabilities to appear on their primary cloud platform. This parallel access to multiple innovation streams creates competitive advantages by enabling faster adoption of breakthrough technologies.

Improved Data Sovereignty and Compliance

Data sovereignty requirements increasingly shape cloud strategy decisions, particularly for organizations operating across multiple jurisdictions. Different countries impose varying regulations about where data can be stored and processed. A multi-cloud strategy provides flexibility to address these requirements by enabling data and AI workloads to be placed in specific geographic regions or on platforms that meet particular compliance standards.

For organizations handling sensitive data—healthcare, financial services, government—multi-cloud architectures enable sophisticated data governance strategies. Different data classifications can be processed on different cloud platforms based on their security certifications and compliance attestations. High-sensitivity AI training data might be processed on platforms with specific certifications, while less sensitive inference workloads run on platforms optimized for performance and cost.

Regulatory compliance becomes more manageable when workloads can be distributed strategically. GDPR restricts transfers of personal data outside the EU and EEA unless appropriate safeguards are in place, so many organizations keep such data in European regions. HIPAA requires a business associate agreement with any cloud provider that handles protected health information, and each provider covers only certain services under that agreement. By utilizing multiple cloud providers, organizations can ensure that each workload operates in an environment that meets its specific regulatory requirements without forcing all workloads into the most restrictive environment.
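Residency rules like these can be encoded as a placement constraint that is checked before any workload is scheduled. The sketch below assumes a hypothetical mapping from data classifications to permitted geographic zones and a hypothetical list of regions per provider; the region names are placeholders, and deciding what a regulation actually requires remains a legal question that code cannot settle.

```python
# Hypothetical policy: geographic zones where each data classification may be processed.
ALLOWED_ZONES = {
    "eu_personal_data": {"eu"},
    "us_health_data": {"us"},
    "public": {"eu", "us", "apac"},
}

# Hypothetical provider regions, each tagged with its geographic zone.
PROVIDER_REGIONS = {
    "cloud_a": {"a-eu-west": "eu", "a-us-east": "us"},
    "cloud_b": {"b-eu-central": "eu", "b-apac-south": "apac"},
}


def eligible_regions(classification: str) -> list[tuple[str, str]]:
    """List (provider, region) pairs where data of this classification may be processed."""
    zones = ALLOWED_ZONES.get(classification)
    if zones is None:
        raise KeyError(f"unknown data classification: {classification}")
    return sorted(
        (provider, region)
        for provider, regions in PROVIDER_REGIONS.items()
        for region, zone in regions.items()
        if zone in zones
    )


print(eligible_regions("eu_personal_data"))
# [('cloud_a', 'a-eu-west'), ('cloud_b', 'b-eu-central')]
```

Failing loudly on an unknown classification is a deliberate choice: silently treating unlabeled data as unrestricted is the kind of gap audits tend to find.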

Implementation Framework for Multi-Cloud AI Strategy

Assessment and Planning Phase

Successful multi-cloud implementation begins with a comprehensive assessment of existing AI workloads, organizational capabilities, and strategic objectives. Organizations must inventory their current AI and machine learning applications, identifying dependencies, performance requirements, data sources, and integration points. This assessment reveals which workloads are suitable candidates for multi-cloud deployment and which might need to remain on single platforms due to technical constraints.

Workload characterization forms a critical component of planning. Different AI applications have vastly different requirements. Real-time inference workloads demand low latency and high availability. Model training workloads require massive compute resources but can tolerate longer completion times. Data preprocessing might need proximity to large datasets. These characteristics enable intelligent placement decisions across the multi-cloud infrastructure.
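One lightweight way to capture this characterization is a small profile per workload plus explicit placement rules. The fields, the 10 TB threshold, and the `placement_hint` function below are hypothetical; they only illustrate how the characteristics listed above can drive a placement decision.

```python
from dataclasses import dataclass


@dataclass
class WorkloadProfile:
    name: str
    latency_sensitive: bool  # real-time inference?
    gpu_hours: float         # expected accelerator usage
    dataset_tb: float        # size of the data the workload reads
    interruptible: bool      # can it resume from a checkpoint?


def placement_hint(p: WorkloadProfile) -> str:
    """Map workload characteristics to a coarse placement strategy (illustrative rules)."""
    if p.latency_sensitive:
        return "multi-region active-active, close to users"
    if p.dataset_tb >= 10:  # hypothetical threshold for "large dataset"
        return "same cloud and region as the dataset (avoid egress)"
    if p.gpu_hours > 0 and p.interruptible:
        return "spot/preemptible GPUs on the cheapest cloud"
    return "on-demand capacity on the primary cloud"


for profile in [
    WorkloadProfile("fraud-scoring", True, 0, 0.1, False),
    WorkloadProfile("feature-etl", False, 0, 50, True),
    WorkloadProfile("nightly-retrain", False, 400, 2, True),
]:
    print(profile.name, "->", placement_hint(profile))
```

The rule order encodes priorities: latency requirements win over data gravity, which in turn wins over price.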

Organizations must also assess their technical capabilities and skills gaps. Multi-cloud management requires expertise in multiple platforms, sophisticated orchestration tools, and advanced networking. Teams may need training on cloud-agnostic technologies like Kubernetes, infrastructure-as-code tools, and multi-cloud management platforms. Building or acquiring this expertise before deployment prevents costly mistakes and accelerates time-to-value.

Selecting the Right Cloud Providers

Cloud provider selection should align with specific workload requirements and organizational priorities rather than following a one-size-fits-all approach. Organizations typically anchor their multi-cloud strategy around two or three primary providers, avoiding the complexity of managing too many platforms. The selection criteria should include AI service capabilities, pricing models, geographic coverage, compliance certifications, and integration with existing tools.
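A common way to make these selection criteria explicit is a weighted scoring matrix. The weights and 1-5 scores below are hypothetical inputs a team would fill in from its own evaluation and proof-of-concept results; they are not ratings of any real provider.

```python
# Hypothetical weights (summing to 1.0) reflecting organizational priorities.
WEIGHTS = {
    "ai_services": 0.35,
    "pricing": 0.25,
    "geo_coverage": 0.15,
    "compliance": 0.15,
    "tool_integration": 0.10,
}

# Hypothetical 1-5 scores from an internal evaluation.
SCORES = {
    "provider_a": {"ai_services": 5, "pricing": 3, "geo_coverage": 4, "compliance": 4, "tool_integration": 3},
    "provider_b": {"ai_services": 4, "pricing": 4, "geo_coverage": 5, "compliance": 5, "tool_integration": 4},
    "provider_c": {"ai_services": 4, "pricing": 5, "geo_coverage": 3, "compliance": 3, "tool_integration": 5},
}


def weighted_score(scores: dict[str, int], weights: dict[str, float]) -> float:
    """Sum of weight x score over all criteria."""
    return sum(weights[criterion] * scores[criterion] for criterion in weights)


def shortlist(n: int = 2) -> list[str]:
    """Rank providers by weighted score and keep the top n (two or three is typical)."""
    ranked = sorted(SCORES, key=lambda p: weighted_score(SCORES[p], WEIGHTS), reverse=True)
    return ranked[:n]


for provider in SCORES:
    print(provider, round(weighted_score(SCORES[provider], WEIGHTS), 2))
print("shortlist:", shortlist())
```

Note that with these weights the provider with the best AI-services score ends up last, because it trails on every other criterion; the weights, not any single score, decide the shortlist.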

For AI workloads, evaluating each provider’s machine learning platforms is essential. Organizations should compare factors like supported frameworks (TensorFlow, PyTorch, scikit-learn), AutoML capabilities, model deployment options, GPU and TPU availability, data labeling services, and MLOps tooling. Hands-on proof-of-concept projects help validate that theoretical capabilities translate into practical value for specific use cases.

Partnership and support considerations also matter significantly. Different cloud providers offer varying levels of technical support, training resources, and professional services. For organizations with limited cloud expertise, providers with robust support ecosystems may deliver better outcomes even if their raw capabilities are slightly behind competitors. Long-term vendor relationships, strategic alignment, and cultural fit should factor into selection decisions.

Designing Cloud-Agnostic Architecture

Cloud-agnostic design principles enable AI applications to run consistently across multiple platforms, maximizing flexibility and portability. Containerization using Docker forms the foundation, packaging AI models, dependencies, and runtime environments into portable units. Kubernetes provides orchestration capabilities, managing container deployment, scaling, and operations consistently across different cloud platforms.

Microservices architecture complements containerization by decomposing AI applications into small, independent services. Each microservice handles a specific function—data preprocessing, model serving, results post-processing—and can be deployed independently on the most suitable cloud platform. This modularity enables granular optimization and easier troubleshooting compared to monolithic architectures.

Implementing abstraction layers prevents direct dependencies on cloud-specific services. Rather than calling AWS-specific APIs directly, applications interact through abstraction interfaces that can be implemented across multiple platforms. Infrastructure-as-code tools like Terraform enable multi-cloud infrastructure management through unified configuration files, maintaining consistency while supporting platform-specific optimizations where beneficial.
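The abstraction-layer idea can be sketched with a Python `Protocol`: application code depends only on a small storage interface, and each cloud gets its own adapter. The `ModelStore` interface, the in-memory adapter, and the object key layout below are hypothetical; real adapters would wrap each provider’s SDK (for example boto3 for Amazon S3 or the google-cloud-storage client), which is omitted here to keep the sketch self-contained.

```python
from typing import Protocol


class ModelStore(Protocol):
    """Provider-neutral interface the application codes against (hypothetical)."""

    def put(self, key: str, data: bytes) -> None: ...
    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in adapter; a real one would wrap a specific cloud's storage SDK."""

    def __init__(self, provider: str) -> None:
        self.provider = provider
        self._objects: dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def publish_model(store: ModelStore, version: str, weights: bytes) -> str:
    """Application logic: unaware of which cloud backs the store."""
    key = f"models/fraud/{version}.bin"
    store.put(key, weights)
    return key


# Moving to another cloud means swapping the adapter, not rewriting publish_model.
primary, secondary = InMemoryStore("cloud_a"), InMemoryStore("cloud_b")
for store in (primary, secondary):
    key = publish_model(store, "v3", b"\x00\x01")
print(secondary.get(key))
```

Using a structural `Protocol` rather than a base class means existing adapter classes satisfy the interface simply by having matching methods.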

Data Management and Integration

Data strategy represents perhaps the most complex aspect of multi-cloud AI implementation. Training datasets must be accessible across multiple cloud environments, requiring sophisticated data replication and synchronization strategies. Organizations must balance data locality for performance with the costs and complexity of maintaining multiple data copies across clouds.

Data lakes and lakehouses provide architectural patterns for multi-cloud data management. These systems can span multiple cloud platforms, presenting a unified view of data to AI applications regardless of where data physically resides. Technologies like Apache Spark and Delta Lake enable consistent data processing across clouds, while data catalogs provide metadata management and governance.

Implementing data pipelines that work across multiple clouds requires careful orchestration. Extract-transform-load (ETL) processes must move data between platforms efficiently, accounting for bandwidth costs and latency. Cloud-agnostic workflow orchestrators such as Apache Airflow can schedule and monitor pipeline tasks across diverse environments, helping data flow reliably through complex multi-cloud pipelines.

Security and Governance Implementation

Security in multi-cloud environments requires consistent policy enforcement across diverse platforms, each with unique security models and capabilities. Organizations must implement unified identity and access management, ensuring that authentication, authorization, and audit controls work seamlessly across all clouds. Federated identity systems enable single sign-on while respecting platform-specific security features.

Encryption strategies must address data at rest, data in transit, and data in use across multiple platforms. Organizations should implement encryption key management systems that work across clouds, preventing vendor-specific dependencies while maintaining strong security. For highly sensitive AI workloads, confidential computing technologies provide additional protection by encrypting data even during processing.

Governance frameworks ensure consistent operations and compliance across the multi-cloud infrastructure. Policy-as-code approaches enable automated enforcement of security standards, cost controls, and operational requirements. Regular security audits across all platforms identify vulnerabilities and ensure configuration consistency. Centralized logging and monitoring provide visibility into activities across the entire multi-cloud estate, enabling rapid incident detection and response.
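Policy-as-code is usually implemented with a dedicated engine (Open Policy Agent is a common choice), but the core idea fits in a few lines: rules are functions evaluated against every resource description, whichever cloud it comes from. The normalized resource schema and the three rules below are hypothetical examples.

```python
from typing import Callable, Optional

# A resource is a normalized dict describing infrastructure from any cloud (hypothetical schema).
Resource = dict
# Each rule returns an error message, or None if the resource complies.
Rule = Callable[[Resource], Optional[str]]


def require_encryption(r: Resource) -> Optional[str]:
    return None if r.get("encrypted_at_rest") else "not encrypted at rest"


def require_cost_tags(r: Resource) -> Optional[str]:
    missing = {"team", "project"} - set(r.get("tags", {}))
    return f"missing tags: {sorted(missing)}" if missing else None


def forbid_public_access(r: Resource) -> Optional[str]:
    return "publicly accessible" if r.get("public") else None


RULES: list[Rule] = [require_encryption, require_cost_tags, forbid_public_access]


def evaluate(resources: list[Resource]) -> dict[str, list[str]]:
    """Return violations per resource id; an empty dict means everything complies."""
    violations = {}
    for r in resources:
        errors = [msg for rule in RULES if (msg := rule(r)) is not None]
        if errors:
            violations[r["id"]] = errors
    return violations


inventory = [
    {"id": "a:training-data", "cloud": "cloud_a", "encrypted_at_rest": True,
     "tags": {"team": "ml", "project": "fraud"}, "public": False},
    {"id": "b:scratch", "cloud": "cloud_b", "encrypted_at_rest": False,
     "tags": {"team": "ml"}, "public": True},
]
print(evaluate(inventory))
```

The hard part in practice is the normalization step that turns each provider’s resource inventory into one schema; once that exists, the same rules apply everywhere.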

Best Practices for Multi-Cloud AI Operations

Implementing Effective Monitoring and Observability

Observability across multi-cloud AI systems requires unified monitoring that provides visibility into application performance, infrastructure health, and cost consumption across all platforms. Organizations should implement centralized monitoring tools that aggregate metrics, logs, and traces from multiple clouds into a single pane of glass. This consolidated view enables operators to understand system behavior and troubleshoot issues regardless of where workloads are running.

Performance monitoring for AI workloads must track both infrastructure metrics (CPU and GPU utilization, memory, network) and application-specific metrics (model accuracy, inference latency, training progress). Each cloud platform provides native monitoring tools, but relying solely on these creates management complexity. Commercial platforms such as Datadog or New Relic, or open-source tools such as Prometheus, can aggregate data from multiple sources and provide consistent visibility.
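Aggregating metrics from several clouds is largely a normalization problem: each source reports similar measurements under different names and units. The sketch below maps a few provider-specific metric names onto one schema and computes a combined latency percentile; the metric names and the mapping table are hypothetical and do not correspond to any provider’s actual monitoring service.

```python
from statistics import quantiles

# Hypothetical provider-specific metric names -> (common name, multiplier to milliseconds).
METRIC_MAP = {
    ("cloud_a", "InferenceLatencySeconds"): ("inference_latency_ms", 1000.0),
    ("cloud_b", "model/latency_ms"): ("inference_latency_ms", 1.0),
    ("cloud_c", "latency_us"): ("inference_latency_ms", 0.001),
}


def normalize(samples):
    """Convert (provider, metric, value) tuples into the common schema, in milliseconds."""
    for provider, metric, value in samples:
        mapped = METRIC_MAP.get((provider, metric))
        if mapped is None:
            continue  # skipped here; a real pipeline would flag unmapped metrics
        common_name, factor = mapped
        yield provider, common_name, value * factor


raw = [
    ("cloud_a", "InferenceLatencySeconds", 0.040),
    ("cloud_b", "model/latency_ms", 35.0),
    ("cloud_c", "latency_us", 52000.0),
    ("cloud_a", "InferenceLatencySeconds", 0.120),
]
latencies = sorted(v for _, name, v in normalize(raw) if name == "inference_latency_ms")
# quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
p95 = quantiles(latencies, n=20, method="inclusive")[-1]
print(latencies, round(p95, 1))
```

Computing the percentile over the merged, unit-consistent samples is what gives one cross-cloud number; averaging per-provider percentiles would not produce a correct overall percentile.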

Implementing distributed tracing becomes critical in multi-cloud architectures where a single AI workflow might span multiple platforms. Tracing tools track requests as they flow through various microservices across different clouds, identifying performance bottlenecks and dependency failures. This capability is essential for maintaining service-level objectives in complex distributed systems.
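Cross-cloud tracing only works if every hop forwards the same trace context. The W3C Trace Context specification standardizes this as the `traceparent` HTTP header (version, trace ID, parent span ID, flags). In practice a library such as OpenTelemetry generates and propagates it; the sketch below builds and continues the header by hand purely to show what travels between services on different clouds.

```python
import re
import secrets

# traceparent layout per W3C Trace Context: version-traceid(32 hex)-parentid(16 hex)-flags(2 hex)
TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


def new_traceparent(sampled: bool = True) -> str:
    """Start a new trace at the edge (e.g. an API gateway on the first cloud).

    All-zero IDs are invalid per the spec; the chance of generating one here is negligible.
    """
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 hex chars
    span_id = secrets.token_hex(8)    # 8 random bytes -> 16 hex chars
    return f"00-{trace_id}-{span_id}-{'01' if sampled else '00'}"


def child_traceparent(incoming: str) -> str:
    """Continue the same trace in a downstream service, possibly on another cloud."""
    match = TRACEPARENT_RE.match(incoming)
    if not match:
        raise ValueError(f"malformed traceparent: {incoming!r}")
    trace_id, _parent_span, flags = match.groups()
    return f"00-{trace_id}-{secrets.token_hex(8)}-{flags}"


edge = new_traceparent()
downstream = child_traceparent(edge)  # sent as the 'traceparent' header to the next hop
print(edge)
print(downstream)
```

The trace ID stays constant across every cloud the request touches while each hop gets a fresh span ID, which is what lets a tracing backend stitch the spans into one end-to-end view.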

Cost Management and FinOps Practices

Cost management in multi-cloud environments presents unique challenges because different platforms use varying pricing models and billing structures. Organizations must implement robust FinOps practices that provide visibility into spending across all clouds, allocate costs to appropriate business units or projects, and identify optimization opportunities. Cloud-agnostic cost management tools aggregate billing data from multiple providers, enabling comprehensive financial analysis.

Resource rightsizing requires continuous monitoring and adjustment. AI workloads have varying resource requirements throughout their lifecycle: training requires massive compute resources, while inference might need less. Multi-cloud strategies enable dynamic resource allocation, using spot instances or preemptible VMs for training workloads on whichever cloud offers the best pricing at any moment. Because providers can reclaim this discounted capacity at short notice, training jobs that use it should checkpoint regularly so they can resume rather than restart. Automated policies can shut down unused resources, preventing waste.
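Automated shutdown policies usually reduce to a rule over utilization history. This sketch flags GPU instances whose utilization stayed below a threshold for an entire lookback window; the threshold, the window length, and the `GpuInstance` record are hypothetical, and the actual stop call would go through each provider’s own API.

```python
from dataclasses import dataclass


@dataclass
class GpuInstance:
    instance_id: str
    cloud: str
    hourly_cost: float
    gpu_util_last_hours: list[float]  # most recent hourly averages, 0-100 %


def idle_instances(fleet: list[GpuInstance], threshold_pct: float = 5.0,
                   window_hours: int = 6) -> list[GpuInstance]:
    """Instances whose GPU utilization stayed below the threshold for the whole window."""
    flagged = []
    for inst in fleet:
        recent = inst.gpu_util_last_hours[-window_hours:]
        # Require a full window of data so brand-new instances are not stopped prematurely.
        if len(recent) == window_hours and max(recent) < threshold_pct:
            flagged.append(inst)
    return flagged


fleet = [
    GpuInstance("a-gpu-1", "cloud_a", 12.0, [80, 75, 90, 85, 70, 88]),
    GpuInstance("b-gpu-7", "cloud_b", 9.5, [2, 1, 0, 0, 3, 1]),
    GpuInstance("c-gpu-2", "cloud_c", 11.0, [0, 0, 1]),  # too little history to judge
]
for inst in idle_instances(fleet):
    # A real policy would call the owning provider's stop/terminate API here.
    print(f"stop {inst.instance_id} on {inst.cloud}, saving ~${inst.hourly_cost * 24:.2f}/day")
```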

Implementing chargeback or showback models helps organizations understand the true cost of AI initiatives. By accurately attributing cloud spending to specific projects, teams, or products, companies can make informed decisions about resource allocation and identify opportunities for consolidation or optimization. This financial transparency drives accountability and encourages efficient resource utilization across the organization.
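At its core, showback is a group-by over normalized billing records. The sketch assumes billing exports from each provider have already been converted into one hypothetical record format carrying a `team` tag. Untagged spend is reported as "unallocated" rather than silently dropped, since unallocated cost is usually the first thing a FinOps review asks about.

```python
from collections import defaultdict

# Hypothetical normalized billing records built from several providers' exports.
billing = [
    {"cloud": "cloud_a", "service": "gpu-compute", "cost": 1200.00, "tags": {"team": "fraud"}},
    {"cloud": "cloud_b", "service": "object-storage", "cost": 310.50, "tags": {"team": "fraud"}},
    {"cloud": "cloud_b", "service": "inference", "cost": 845.25, "tags": {"team": "search"}},
    {"cloud": "cloud_c", "service": "gpu-compute", "cost": 99.99, "tags": {}},
]


def showback(records: list[dict]) -> dict[str, dict[str, float]]:
    """Total cost per team, broken down by cloud; untagged spend goes to 'unallocated'."""
    report = defaultdict(lambda: defaultdict(float))
    for rec in records:
        team = rec["tags"].get("team", "unallocated")
        report[team][rec["cloud"]] += rec["cost"]
    # Convert nested defaultdicts to plain dicts for clean output.
    return {team: dict(by_cloud) for team, by_cloud in report.items()}


for team, by_cloud in showback(billing).items():
    print(f"{team:12} total=${sum(by_cloud.values()):>9,.2f}  {by_cloud}")
```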

Automation and DevOps Integration

Automation is non-negotiable for managing the complexity of multi-cloud AI environments. Infrastructure-as-code practices enable consistent, repeatable deployments across multiple platforms. Organizations should standardize on tools that support multi-cloud deployment—Terraform for infrastructure provisioning, Ansible for configuration management, and Kubernetes for container orchestration.

CI/CD pipelines for AI workloads must handle the unique requirements of machine learning applications. Beyond traditional software deployment, MLOps pipelines automate model training, validation, versioning, and deployment across multiple clouds. Tools like MLflow, Kubeflow, and similar cloud-agnostic platforms enable consistent workflows regardless of where models are trained or deployed.

Implementing GitOps practices provides version control and audit trails for infrastructure and application configurations. All changes to multi-cloud environments should flow through Git repositories, enabling rollback capabilities, change tracking, and collaborative reviews. This approach reduces errors, improves consistency, and enables faster troubleshooting when issues arise.

Overcoming Multi-Cloud Challenges

Managing Complexity and Technical Debt

The inherent complexity of multi-cloud architectures represents the most significant challenge organizations face. Each cloud platform has unique APIs, management consoles, and operational models. Multiplying this complexity across several providers can overwhelm operations teams if not properly managed. Organizations must invest in standardization, automation, and training to prevent complexity from eroding the benefits of multi-cloud adoption.

Technical debt accumulates when quick solutions take precedence over architectural consistency. Allowing platform-specific implementations creates fragmentation that becomes increasingly expensive to maintain. Organizations should establish architectural standards that apply across all clouds, with exceptions granted only when compelling business cases justify platform-specific approaches. Regular technical debt remediation sprints help prevent accumulation.

Skill development requires ongoing investment. Teams must maintain expertise across multiple platforms, cloud-agnostic tools, and integration technologies. Cross-training programs help ensure that knowledge isn’t siloed with specific individuals. Partnerships with managed service providers or consultants can supplement internal capabilities during initial implementation or for specialized expertise.

Addressing Network and Data Transfer Costs

Network costs between clouds can become substantial, particularly for data-intensive AI workloads. Egress fees—charges for data leaving one cloud platform—add up quickly when applications span multiple providers. Organizations must architect systems to minimize unnecessary data movement, using data locality principles to process data near where it’s stored whenever possible.

Data transfer optimization strategies include compressing data before transmission, implementing caching layers to reduce redundant transfers, and batching operations to minimize API calls across clouds. For training workloads, organizations might replicate datasets to multiple clouds rather than repeatedly transferring them. For inference workloads, edge caching or content delivery networks can reduce bandwidth costs.
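Whether to replicate a dataset or pull it across clouds on demand is simple arithmetic once the rates are known. The per-GB egress and storage prices below are hypothetical placeholders (real rates vary by provider, region, volume tier, and negotiated agreement), so treat the output as a way to structure the comparison, not as an estimate.

```python
def on_demand_transfer_cost(dataset_gb: float, reads_per_month: int, egress_per_gb: float) -> float:
    """Pull the full dataset across clouds for every training run."""
    return dataset_gb * reads_per_month * egress_per_gb


def replication_cost(dataset_gb: float, changed_fraction: float, egress_per_gb: float,
                     storage_per_gb_month: float) -> float:
    """Keep a replica: pay to store the copy plus egress for the data that changes each month."""
    return dataset_gb * storage_per_gb_month + dataset_gb * changed_fraction * egress_per_gb


# Hypothetical inputs: 5 TB dataset, 8 training runs a month, 10% of the data changes monthly.
DATASET_GB = 5_000
EGRESS = 0.09   # USD per GB, placeholder
STORAGE = 0.02  # USD per GB-month, placeholder

pull = on_demand_transfer_cost(DATASET_GB, reads_per_month=8, egress_per_gb=EGRESS)
replica = replication_cost(DATASET_GB, changed_fraction=0.10, egress_per_gb=EGRESS,
                           storage_per_gb_month=STORAGE)
print(f"on-demand: ${pull:,.0f}/month, replica: ${replica:,.0f}/month")
```

Under these assumptions pulling the data costs about $3,600 a month versus about $145 for a replica. The replica figure ignores the one-time cost of the initial copy (5,000 GB at $0.09, about $450), which the monthly difference recovers within the first month.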

Negotiating with cloud providers can yield significant cost reductions. Enterprise agreements might include data transfer credits or reduced egress fees. Some providers offer direct interconnection options with lower costs than standard internet-based transfers. Organizations should evaluate these options as part of their overall multi-cloud cost management strategy.

Ensuring Consistent Security Posture

Maintaining consistent security across diverse cloud platforms requires disciplined governance and automated enforcement. Security misconfigurations represent a primary source of breaches, and the risk multiplies in multi-cloud environments where teams must manage different security models. Implementing infrastructure-as-code with built-in security controls helps ensure that deployments meet security standards regardless of platform.

Identity and access management complexity increases substantially in multi-cloud environments. Organizations must implement robust identity federation that works seamlessly across platforms while enforcing least-privilege access principles. Regular access reviews, automated credential rotation, and just-in-time access provisioning help maintain security hygiene across the multi-cloud estate.

Compliance verification requires continuous monitoring across all clouds. Automated compliance scanning tools assess configurations against security benchmarks and regulatory requirements, flagging deviations for remediation. Regular penetration testing and security audits across the entire multi-cloud infrastructure identify vulnerabilities before attackers can exploit them.

Future Trends in Multi-Cloud AI

Edge Computing Integration

The convergence of multi-cloud strategies with edge computing creates powerful new architectures for AI applications. Edge deployments enable ultra-low-latency inference by processing data near where it’s generated—in IoT devices, retail locations, or manufacturing facilities. Multi-cloud infrastructure can orchestrate and manage these distributed edge deployments, with centralized clouds handling model training and edge nodes performing inference.

Federated learning represents an emerging pattern where AI models are trained across distributed data sources without centralizing data. This approach addresses privacy concerns and reduces data transfer costs while enabling collaboration. Multi-cloud architectures can coordinate federated learning across geographically dispersed environments, aggregating model improvements without exposing sensitive data.
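A widely used aggregation step in federated learning is federated averaging (FedAvg): each site trains locally and sends only its updated weights, which the coordinator averages in proportion to each site’s number of training examples. The sketch below shows only that weighted-averaging step on plain Python lists; the site names and numbers are made up, and a production system would use a federated learning framework and typically add secure aggregation.

```python
def federated_average(updates: dict[str, tuple[list[float], int]]) -> list[float]:
    """Weighted average of model weights; each site is weighted by its example count.

    `updates` maps site name -> (weights, num_examples). Raw training data never
    leaves a site; only these weight vectors reach the coordinator.
    """
    total_examples = sum(n for _, n in updates.values())
    if total_examples == 0:
        raise ValueError("no training examples reported")
    dim = len(next(iter(updates.values()))[0])
    averaged = [0.0] * dim
    for weights, n in updates.values():
        if len(weights) != dim:
            raise ValueError("all sites must report weights of the same shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_examples
    return averaged


# Hypothetical round: three sites on different clouds report local updates.
round_updates = {
    "eu_site_cloud_a": ([0.2, -1.0], 1000),
    "us_site_cloud_b": ([0.4, -0.5], 3000),
    "apac_site_cloud_c": ([0.1, -0.8], 1000),
}
print(federated_average(round_updates))
```

Weighting by example count keeps a small site from pulling the global model as hard as a site with three times the data, while still letting every site contribute.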

Serverless and Function-Based Architectures

Serverless computing is increasingly relevant for AI workloads, particularly inference applications. Functions-as-a-service offerings from multiple cloud providers enable event-driven AI processing with automatic scaling and consumption-based pricing. Multi-cloud strategies can leverage serverless across platforms, routing inference requests to whichever cloud offers the best performance or cost at any moment.

The rise of specialized AI chips and accelerators across different cloud platforms drives further multi-cloud adoption. As providers develop custom silicon optimized for AI workloads—like Google’s TPUs, AWS Inferentia, or Azure’s custom AI hardware—organizations gain advantages by accessing these specialized resources through multi-cloud strategies rather than being limited to one provider’s offerings.

Sustainability and Green AI

Environmental sustainability is becoming a critical factor in cloud strategy decisions. Different cloud providers achieve varying levels of renewable energy usage across their data centers. Organizations committed to sustainability can use multi-cloud approaches to preferentially route workloads to clouds and regions with the highest renewable energy percentages, reducing the carbon footprint of AI operations.
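Carbon-aware placement can be treated as one more step in the placement logic: among regions that already satisfy latency and compliance requirements, prefer the one with the lowest grid carbon intensity. The region names and gCO2e/kWh figures below are hypothetical; real values would come from provider sustainability data or a grid carbon-intensity data source.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    carbon_g_per_kwh: float  # grid carbon intensity (hypothetical values)
    meets_constraints: bool  # already passed latency and compliance checks


def greenest_region(regions: list[Region]) -> Region:
    """Lowest-carbon region among those that satisfy the workload's other constraints."""
    eligible = [r for r in regions if r.meets_constraints]
    if not eligible:
        raise ValueError("no region satisfies the workload's constraints")
    return min(eligible, key=lambda r: r.carbon_g_per_kwh)


def job_emissions_kg(energy_kwh: float, region: Region) -> float:
    """Estimated operational emissions = energy used x grid carbon intensity."""
    return energy_kwh * region.carbon_g_per_kwh / 1000.0


regions = [
    Region("cloud_a/north", 30.0, True),
    Region("cloud_b/central", 350.0, True),
    Region("cloud_c/west", 20.0, False),  # greener, but fails a residency rule
]
best = greenest_region(regions)
print(best.name, job_emissions_kg(2_000, best))  # cloud_a/north 60.0
```

Filtering on hard constraints before optimizing for carbon keeps sustainability from overriding compliance, as the excluded `cloud_c/west` region illustrates.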

Green AI practices focus on efficiency optimization to minimize energy consumption. Multi-cloud architectures enable workload placement strategies that prioritize energy-efficient clouds for appropriate workloads. As carbon accounting and sustainability reporting become standard business practices, the ability to track and optimize the environmental impact of AI across multiple clouds will become increasingly valuable.


Conclusion

A multi-cloud strategy for AI represents a sophisticated approach to modern enterprise computing that delivers substantial benefits in flexibility, cost optimization, resilience, and innovation velocity. By distributing AI workloads across multiple cloud providers, organizations avoid vendor lock-in, access best-of-breed AI services, and create resilient architectures capable of supporting mission-critical applications. However, successful implementation requires careful planning, robust governance frameworks, and significant investment in cloud-agnostic technologies and automation.

Organizations must balance the compelling benefits against the inherent complexity, implementing standardization and automation to manage multi-cloud environments effectively. As AI continues transforming business operations and cloud providers invest billions in specialized AI capabilities, multi-cloud strategies will become increasingly essential for enterprises seeking a competitive advantage. The future of AI infrastructure lies not in choosing a single cloud provider, but in architecting sophisticated multi-cloud environments that leverage the unique strengths of each platform while maintaining operational excellence across the entire ecosystem.