AI Hardware Technology: GPUs, TPUs, and Beyond

AI hardware technology innovations: GPUs vs TPUs, neuromorphic chips, quantum computing. Complete 2025 guide to AI accelerators

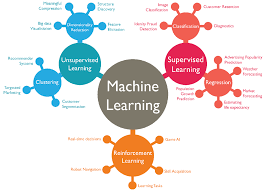

The artificial intelligence revolution has fundamentally transformed how we approach computing, demanding unprecedented computational power and efficiency. As AI hardware technology continues to evolve at breakneck speed, the landscape of specialized processors has become crucial for businesses, researchers, and technology enthusiasts alike. The traditional paradigm of relying solely on general-purpose CPUs has given way to a diverse ecosystem of AI accelerators specifically designed to handle the complex mathematical operations that power modern machine learning models.

From the ubiquitous Graphics Processing Units (GPUs) that initially drove the AI boom to Google’s purpose-built Tensor Processing Units (TPUs), the hardware landscape has undergone a dramatic expansion. Today’s AI practitioners must navigate an increasingly complex array of options, each optimized for specific workloads and use cases. Neural Processing Units (NPUs), neuromorphic chips, and emerging quantum computing solutions represent the cutting edge of this technological evolution, promising to unlock new possibilities in artificial intelligence applications.

By 2025, the AI hardware market is expected to reach $150 billion, driven by the increasing complexity of machine learning models and the need for efficient computation. This explosive growth reflects not just the commercial potential of AI technologies but also the fundamental shift toward specialized AI processors that can deliver the performance, efficiency, and scalability required for next-generation applications. For organizations seeking to leverage AI effectively, adopting these technologies is no longer optional; it has become a competitive necessity.

The journey from traditional computing architectures to AI-optimized hardware represents one of the most significant technological transitions of our time. As we explore the current state and prospects of AI hardware, we’ll examine how different processor types stack up against each other, their unique advantages and limitations, and the emerging technologies that promise to reshape the landscape once again.

The Evolution of AI Hardware

The transformation of AI Hardware Technology represents a paradigm shift from general-purpose computing to specialized AI acceleration. Initially, artificial intelligence applications relied heavily on traditional Central Processing Units (CPUs), which, while versatile, struggled with the parallel processing demands of modern machine learning algorithms. This limitation became increasingly apparent as deep learning models grew in complexity and size, requiring massive computational resources for both training and inference tasks.

The breakthrough came with the adaptation of Graphics Processing Units for AI workloads. Originally designed for rendering graphics in gaming and professional applications, GPUs possessed the inherent parallel processing capabilities that aligned perfectly with the mathematical operations fundamental to neural networks. This serendipitous match between existing hardware and emerging AI needs catalyzed the first wave of practical deep learning applications, from image recognition to natural language processing.

However, as AI models became more sophisticated, the limitations of repurposed graphics hardware became evident. The need for purpose-built AI processors led to the development of specialized architectures designed specifically for artificial intelligence workloads. These AI-specific chips incorporated optimizations for tensor operations, reduced precision arithmetic, and memory architectures tailored to the data flow patterns typical in machine learning applications.

The evolution continues today with next-generation AI hardware that pushes beyond traditional silicon-based architectures. Neuromorphic computing, photonic processors, and quantum AI accelerators represent the frontier of this technological progression, each offering unique approaches to solving the computational challenges posed by increasingly complex AI applications.

Graphics Processing Units (GPUs): The Foundation of Modern AI

Graphics Processing Units have become the backbone of modern AI infrastructure, powering everything from research breakthroughs to commercial AI deployments. The parallel architecture that made GPUs excellent for rendering graphics translates remarkably well to the matrix operations that form the core of machine learning computations. Unlike CPUs, which excel at sequential processing with a few powerful cores, GPUs feature thousands of smaller, more efficient cores designed to handle multiple tasks simultaneously.

NVIDIA GPUs like the A100 and H100 are now AI workhorses, supported by a mature software stack (CUDA, cuDNN) and frameworks like PyTorch and TensorFlow. This ecosystem advantage has proven crucial in GPU adoption, as developers can leverage extensive libraries, tools, and frameworks specifically optimized for GPU acceleration. The CUDA programming model has become synonymous with GPU computing in AI, providing a comprehensive platform for developing and deploying machine learning applications.

GPU Architecture for AI Workloads

Modern AI-optimized GPUs incorporate several architectural innovations specifically designed for machine learning tasks. Tensor cores, specialized processing units within the GPU, are engineered to accelerate the mixed-precision operations common in deep learning. These cores can perform multiple matrix operations simultaneously, dramatically improving throughput for neural network training and inference tasks.
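To make the idea of mixed precision more concrete, here is a minimal sketch in plain Python. It rounds inputs to half precision with the standard `struct` module and compares two ways of accumulating a dot product. The function names are invented for this example, and a Python float (64-bit) stands in for the wider accumulator that real hardware would provide, so treat it as an illustration of the numerical idea rather than a model of any particular tensor core.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot_mixed_precision(a, b):
    """Dot product with FP16 inputs and a wider accumulator.

    Assumption: a Python float stands in for the hardware's wide accumulator.
    """
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(x) * to_fp16(y)
    return acc


def dot_fp16_only(a, b):
    """Same dot product, but the running sum is also rounded to FP16."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x) * to_fp16(y))
    return acc


ones = [1.0] * 4096
wide = dot_mixed_precision(ones, ones)   # 4096.0
narrow = dot_fp16_only(ones, ones)       # 2048.0: FP16 cannot represent 2049
```

The FP16-only sum stalls at 2048 because half precision has too few significand bits to represent the next integer, which is one reason low-precision multiplies are usually paired with a higher-precision accumulator.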

Memory architecture represents another crucial aspect of GPU design for AI workloads. High-bandwidth memory (HBM) provides the rapid data access necessary for large-scale model training, while advanced memory hierarchies help manage the data flow between different levels of the system. The ability to efficiently move data between memory and processing units often determines the overall performance of AI workloads more than raw computational power.
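One common way to reason about whether memory or compute is the limit is the roofline model, which caps attainable throughput at the smaller of peak compute and memory bandwidth multiplied by arithmetic intensity (FLOPs per byte moved). The sketch below is a simplified version of that idea; the device figures are hypothetical placeholders, not the specifications of any real GPU, and the matmul intensity formula assumes each matrix is read or written exactly once.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Roofline bound: min(peak compute, bandwidth * arithmetic intensity)."""
    return min(peak_flops, mem_bandwidth * intensity)


def matmul_intensity(n: int, bytes_per_element: int = 2) -> float:
    """FLOPs per byte for an n x n matmul that reads A and B and writes C once."""
    flops = 2 * n**3
    bytes_moved = 3 * n**2 * bytes_per_element
    return flops / bytes_moved


# Hypothetical device: 100 TFLOP/s peak compute, 2 TB/s memory bandwidth.
PEAK = 100e12
BANDWIDTH = 2e12

# Elementwise FP16 add: 1 FLOP per 6 bytes moved, so bandwidth is the limit.
elementwise = attainable_flops(PEAK, BANDWIDTH, 1 / 6)

# Large matmul: high intensity, so peak compute is the limit.
big_matmul = attainable_flops(PEAK, BANDWIDTH, matmul_intensity(4096))
```

Under these assumed numbers the elementwise operation reaches well under one percent of peak compute, while the large matrix multiplication is compute-bound, which matches the point that data movement often matters more than raw arithmetic capability.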

The GPU programming ecosystem extends beyond hardware to encompass a comprehensive software stack. Libraries like cuDNN provide optimized implementations of common deep learning operations, while frameworks such as TensorFlow and PyTorch offer high-level interfaces for model development. This mature ecosystem gives GPUs a significant advantage in terms of ease of development and deployment.

Performance Characteristics and Use Cases

GPU performance for AI applications depends on several factors, including memory bandwidth, core count, and architectural optimizations. Modern high-end GPUs can deliver impressive throughput for both training large language models and serving inference requests at scale. The parallel nature of GPU architecture makes them particularly well-suited for batch processing, where multiple inputs can be processed simultaneously.

Training large-scale AI models often requires multiple GPUs working in concert, leveraging technologies like NVIDIA’s NVLink for high-speed inter-GPU communication. This multi-GPU scaling capability has enabled the training of increasingly large models, from GPT-style language models to diffusion models for image generation. The ability to scale across multiple devices and even multiple nodes in a cluster has made GPUs the de facto standard for AI research and development.
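A common pattern behind multi-GPU training is data parallelism: each device computes a gradient on its own shard of the batch, and the gradients are then averaged across devices (the step a fast interconnect speeds up). The sketch below simulates that on a single machine with a one-parameter model. The function names are hypothetical, and it assumes the batch splits evenly across workers; it is not the API of any real training framework.

```python
def grad_mse(w, xs, ys):
    """Gradient of mean squared error for the 1-D model y_hat = w * x."""
    n = len(xs)
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n


def data_parallel_grad(w, xs, ys, num_workers):
    """Simulate data-parallel training: per-shard gradients, then an average.

    Assumes len(xs) is divisible by num_workers so every shard is the same size.
    """
    shard = len(xs) // num_workers
    local_grads = [
        grad_mse(w, xs[i * shard:(i + 1) * shard], ys[i * shard:(i + 1) * shard])
        for i in range(num_workers)
    ]
    # Stand-in for the all-reduce (average) performed over the interconnect.
    return sum(local_grads) / num_workers


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]
full = grad_mse(1.0, xs, ys)                 # gradient on the whole batch
sharded = data_parallel_grad(1.0, xs, ys, 2)  # same value from two "devices"
```

With equal-sized shards, the averaged gradient matches the full-batch gradient, which is why adding devices can speed up training without changing the result, as long as the averaging step does not become the bottleneck.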

GPU inference performance varies significantly depending on the specific use case. For applications requiring low latency, such as real-time computer vision or interactive AI assistants, the parallel processing capability of GPUs can provide significant advantages. However, for applications with sporadic usage patterns, the power consumption and cost of maintaining GPU infrastructure may outweigh the performance benefits.

Tensor Processing Units (TPUs): Google’s AI-Specific Innovation

Tensor Processing Units represent Google’s bold bet on purpose-built AI Hardware Technology, designed specifically to accelerate the tensor operations that form the foundation of modern machine learning. Unlike GPUs, which evolved from graphics rendering to AI applications, TPUs were built for neural network operations. This ground-up approach allowed Google to optimize every aspect of the architecture for AI workloads, from the instruction set to the memory hierarchy.

The TPU architecture centers around a systolic array design that efficiently performs the matrix multiplications central to neural network operations. This approach differs fundamentally from the GPU’s parallel processing model, instead using a more structured data flow that minimizes energy consumption and maximizes throughput for specific types of computations. The result is hardware that can achieve exceptional performance-per-watt for supported operations while consuming significantly less power than equivalent GPU solutions.

Over the years, Google has released several generations of TPUs and, in May 2024, announced Trillium, the sixth-generation TPU. Each generation has brought improvements in performance, efficiency, and capabilities, with the latest Trillium TPUs offering substantial advances in both training and inference performance. These evolutionary improvements reflect Google’s commitment to maintaining its competitive edge in AI infrastructure.

TPU Architecture and Design Philosophy

The systolic array architecture at the heart of TPUs processes data in a highly structured, predictable pattern that maximizes efficiency for matrix operations. Data flows through the array in a coordinated manner, with each processing element performing computations on the data stream as it passes through. This design eliminates many of the inefficiencies associated with more general-purpose architectures, particularly the overhead of coordinating thousands of independent cores.
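The data flow described above can be sketched with a small timing model. The version below simulates an output-stationary systolic array, where each processing element keeps one output value and operands arrive one cycle later for each step away from the array's edge. This variant was chosen for simplicity; it is an assumption for illustration and not necessarily the dataflow used in any specific TPU generation. It tracks which operands meet at which cycle rather than modelling individual registers.

```python
def systolic_matmul(a, b):
    """Multiply a (m x k) by b (k x n) using a cycle-by-cycle systolic schedule.

    Processing element (i, j) accumulates output[i][j]. Row i of `a` enters from
    the left delayed by i cycles, and column j of `b` enters from the top delayed
    by j cycles, so the pair a[i][s], b[s][j] meets at PE (i, j) on cycle s + i + j.
    """
    m, k, n = len(a), len(b), len(b[0])
    acc = [[0] * n for _ in range(m)]
    cycles = m + n + k - 2  # cycles until the last operands reach the far corner
    for t in range(cycles):
        for i in range(m):
            for j in range(n):
                s = t - i - j  # which operand pair reaches PE (i, j) this cycle
                if 0 <= s < k:
                    acc[i][j] += a[i][s] * b[s][j]
    return acc, cycles


result, cycles = systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])
# result == [[19, 22], [43, 50]], cycles == 4
```

Every processing element does the same multiply-accumulate on whatever arrives, with no per-element scheduling decisions, which is the source of the efficiency the structured data flow provides.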

Memory architecture in TPUs is specifically designed to support the data access patterns typical in neural network operations. High-bandwidth memory provides rapid access to model parameters and activations, while on-chip memory hierarchies help minimize data movement. The unified memory architecture allows for efficient sharing of data between different parts of the computation, reducing bottlenecks that can limit performance in other architectures.

The TPU instruction set is optimized for the specific operations used in machine learning, with native support for reduced-precision arithmetic and specialized tensor operations. This specialization allows TPUs to achieve higher efficiency than more general-purpose processors, but it also limits their applicability to non-AI workloads. The trade-off between specialization and flexibility represents a fundamental design decision that distinguishes TPUs from more versatile alternatives.

Performance and Efficiency Advantages

TPU performance characteristics reflect their specialized design, with exceptional efficiency for supported operations and workloads. TPUs generally trade some numerical precision for speed compared with GPUs, a trade that is acceptable for most machine learning math. This performance-precision trade-off is carefully calibrated to match the requirements of most machine learning applications, where slight reductions in numerical precision rarely impact final model accuracy.

Energy efficiency represents one of the most significant advantages of TPUs over general-purpose alternatives. The specialized architecture and optimized data paths result in significantly lower power consumption per operation, making TPUs attractive for large-scale deployments where energy costs represent a significant portion of operating expenses. This efficiency advantage compounds when considering the cooling and infrastructure requirements of data center deployments.

Scalability characteristics of TPUs support both single-device and distributed training scenarios. TPU pods can scale to hundreds or thousands of individual processing units, providing the computational resources necessary for training the largest AI models. The high-speed interconnect between TPUs in a pod enables efficient distributed training, with specialized software stacks handling the complexity of coordinating computation across multiple devices.

Neural Processing Units (NPUs): Edge AI Acceleration

Neural Processing Units represent the next evolution in AI Hardware Technology, designed specifically to bring artificial intelligence capabilities to edge devices and mobile platforms. Unlike their data center counterparts, NPUs prioritize energy efficiency and compact form factors over raw computational power, enabling AI functionality in smartphones, IoT devices, and autonomous systems where power consumption and physical constraints are critical considerations.

NPUs use a different architecture designed to optimize for the specific requirements of edge AI applications. This architecture typically incorporates dedicated hardware for common neural network operations while maintaining the flexibility to support various AI models and frameworks. The design philosophy emphasizes inference optimization rather than training capabilities, reflecting the primary use case of running pre-trained models on edge devices.

The mobile AI revolution has been largely enabled by the integration of NPUs into system-on-chip (SoC) designs used in smartphones and tablets. Major chipmakers like Qualcomm, Apple, and MediaTek have incorporated increasingly sophisticated NPUs into their flagship processors, enabling features like real-time photo enhancement, voice recognition, and augmented reality applications without relying on cloud connectivity.

NPU Architecture and Optimization Strategies

NPU design prioritizes efficiency over flexibility, incorporating architectural elements specifically chosen to minimize power consumption while maximizing performance for common AI operations. Dedicated multiply-accumulate units handle the core mathematical operations of neural networks, while specialized memory hierarchies reduce data movement and associated energy costs. The overall architecture represents a careful balance between computational capability and power efficiency.

Quantization support is a fundamental feature of most NPU designs, enabling models to run with reduced-precision arithmetic that significantly decreases computational requirements. 8-bit and 16-bit integer operations replace the floating-point arithmetic common in training scenarios, providing substantial energy savings with minimal impact on model accuracy. This quantization capability is essential for making complex AI models practical on resource-constrained devices.
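As a rough illustration of what 8-bit quantization does to a set of weights, the sketch below applies symmetric per-tensor quantization: values are divided by a single scale factor, rounded to integers in the range -127 to 127, and later multiplied back. This is one simple scheme among several, and the function names are invented for the example; production toolchains typically add calibration, per-channel scales, or zero points.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the integers back to approximate floating-point values."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
# Each restored value differs from the original by at most about scale / 2.
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The rounding error is bounded by half the scale factor, which is why accuracy loss tends to be small when the values in a tensor are spread reasonably evenly across its range.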

On-device AI processing enabled by NPUs provides several advantages beyond energy efficiency. Reduced latency results from eliminating network round-trips to cloud services, while improved privacy comes from processing sensitive data locally rather than transmitting it to remote servers. These benefits have made NPUs increasingly important for applications where real-time response or data privacy are critical requirements.

Applications and Market Impact

The edge AI ecosystem powered by NPUs encompasses a diverse range of applications, from consumer electronics to industrial automation. Smartphone AI features like computational photography, real-time translation, and voice assistants rely heavily on NPU acceleration to provide responsive, always-available functionality. The ability to perform these computations locally has transformed user expectations for AI-powered mobile applications.

Autonomous systems represent another significant application area for NPU technology. Self-driving vehicles, drones, and robotic systems require real-time AI processing capabilities that can operate reliably without constant cloud connectivity. NPUs provide the computational foundation for these applications, enabling complex perception and decision-making algorithms to run locally with low latency and high reliability.

Industrial IoT applications benefit from NPU-enabled edge intelligence, allowing smart sensors and monitoring systems to perform local analysis and decision-making. This capability reduces bandwidth requirements, improves system responsiveness, and enables operation in environments where network connectivity may be limited or unreliable. The distributed intelligence enabled by NPUs is transforming industrial automation and monitoring applications.

Beyond Traditional Silicon: Emerging AI Hardware Technology

The future of AI hardware technology extends far beyond incremental improvements to existing silicon-based processors. Neuromorphic computing, photonic processors, and quantum AI accelerators represent fundamentally different approaches to computation that could revolutionize how we build and deploy artificial intelligence systems. These emerging technologies address the growing limitations of traditional architectures while opening new possibilities for AI applications.

Instead of relying on traditional CPUs and GPUs, which process information linearly, neuromorphic systems mimic the structure and function of biological neural networks. This biological inspiration offers the potential for dramatically improved energy efficiency and novel computational capabilities that more closely match the operating principles of the neural networks they’re designed to accelerate.

The convergence of advanced materials science, quantum physics, and computer architecture is creating opportunities for breakthrough innovations in AI hardware. By 2025, even policymakers took note – the U.S. Department of Energy started funding photonic computing, highlighting the strategic importance of these next-generation technologies for maintaining technological competitiveness.

Neuromorphic Computing: Brain-Inspired Architecture

Neuromorphic chips attempt to replicate the fundamental operating principles of biological neural networks, using spiking neural networks and event-driven processing to achieve unprecedented energy efficiency. Unlike traditional processors that operate on fixed clock cycles, neuromorphic systems process information only when events occur, dramatically reducing power consumption for applications that involve sparse or intermittent data.
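Event-driven processing can be illustrated with a leaky integrate-and-fire neuron, a standard simplified model used in spiking neural networks. In the sketch below, work happens only when an input spike arrives; the decay over any silent interval is applied in one step when the next event shows up. The parameter values and function name are arbitrary choices for the example, and this is a software illustration rather than a description of how any particular neuromorphic chip is built.

```python
def run_lif(events, leak=0.9, threshold=1.0):
    """Leaky integrate-and-fire neuron driven by input events.

    `events` is a list of (timestep, weight) input spikes sorted by time.
    Returns the timesteps at which the neuron fires.
    """
    potential = 0.0
    last_t = 0
    out_spikes = []
    for t, weight in events:
        # Apply the leak for the whole silent interval at once; nothing was
        # computed while no events arrived.
        potential *= leak ** (t - last_t)
        potential += weight
        last_t = t
        if potential >= threshold:
            out_spikes.append(t)
            potential = 0.0  # reset after firing
    return out_spikes


# Two closely spaced inputs push the neuron over threshold; isolated ones do not.
bursts = run_lif([(0, 0.6), (1, 0.6), (50, 0.6), (51, 0.6)])   # [1, 51]
sparse = run_lif([(0, 0.6), (30, 0.6)])                        # []
```

The loop runs four times for the first input even though it spans more than fifty timesteps, which is the property that makes sparse or intermittent workloads cheap on event-driven hardware.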

The brain-inspired architecture of neuromorphic processors incorporates several key innovations borrowed from neuroscience. Synaptic plasticity allows these systems to learn and adapt in real-time, while distributed memory and processing eliminate the traditional separation between computation and storage. These characteristics make neuromorphic systems particularly well-suited for applications involving continuous learning, adaptation, and real-time sensory processing.

Intel’s Loihi and IBM’s TrueNorth represent pioneering efforts in neuromorphic computing, demonstrating the potential for orders-of-magnitude improvements in energy efficiency for certain types of AI workloads. While still in early stages of development, these platforms have shown promising results in applications ranging from robotics to sensor processing, suggesting significant potential for future commercial applications.

Photonic AI Accelerators: Computing at Light Speed

Photonic computing leverages the properties of light to perform computations, offering the potential for dramatically higher speeds and lower energy consumption compared to electronic alternatives. Optical neural networks can perform matrix operations using interference patterns and optical modulators, enabling massively parallel computations at the speed of light.

In April 2025, a new startup, Lumai (Oxford spin-out), announced $10M funding to develop an optical AI accelerator for transformer models, demonstrating growing commercial interest in photonic AI acceleration. The ability to perform certain types of neural network operations optically could provide significant advantages for applications requiring extremely high throughput or energy efficiency.

The technical challenges of photonic computing include the need for optical-to-electronic conversion at interfaces with traditional systems and the current limitations of optical memory and storage technologies. However, ongoing research in integrated photonics and silicon photonics is addressing many of these challenges, making photonic AI accelerators increasingly viable for specialized applications.

Quantum AI Computing: The Ultimate Frontier

Quantum computing represents perhaps the most ambitious frontier in next-generation AI hardware, offering the theoretical potential to solve certain classes of problems exponentially faster than classical computers. Quantum machine learning algorithms could revolutionize optimization problems, cryptography, and complex simulation tasks that are fundamental to many AI applications.

Emerging hardware such as neuromorphic and quantum processors may allow companies to build AI solutions that are extremely fast and can encapsulate more data and knowledge. While still in early stages of development, quantum computers have demonstrated quantum advantage for specific problem types, suggesting significant potential for future AI applications.

The current limitations of quantum computing include issues with quantum decoherence, error rates, and the need for extreme operating conditions such as near-absolute-zero temperatures. However, advances in quantum error correction and fault-tolerant quantum computing are gradually addressing these challenges, bringing practical quantum AI applications closer to reality.

Comparative Analysis: Choosing the Right AI Hardware

Selecting appropriate AI hardware requires careful consideration of multiple factors, including performance requirements, cost constraints, energy efficiency needs, and application-specific characteristics. While TPUs excel in specific AI tasks, particularly those involving large-scale tensor operations and deep learning models, GPUs offer greater versatility and are compatible with a wider range of machine learning frameworks. Understanding these trade-offs is essential for making informed decisions about AI infrastructure investments.

Performance characteristics vary significantly between different types of AI processors, with each architecture optimizing for different aspects of the computational workload. Training performance typically favors high-memory-bandwidth solutions like high-end GPUs or TPUs, while inference performance may benefit from more specialized solutions like NPUs or custom ASICs, depending on the specific requirements of the application.

Cost considerations extend beyond initial hardware acquisition to encompass operational expenses, including power consumption, cooling requirements, and maintenance costs. Total cost of ownership calculations must account for the entire lifecycle of the hardware, including software development costs, integration complexity, and the availability of skilled personnel to manage and optimize the systems.

Performance Benchmarking and Metrics

AI hardware benchmarking requires careful consideration of relevant metrics that accurately reflect real-world application requirements. Raw computational throughput measured in operations per second provides one perspective, but energy efficiency measured in operations per watt often proves more relevant for practical deployments. Memory bandwidth and latency characteristics can significantly impact performance for memory-intensive AI workloads.

Application-specific benchmarks provide more meaningful comparisons than synthetic performance tests. Natural language processing tasks, computer vision workloads, and recommendation system performance can vary dramatically between different hardware architectures, making it essential to benchmark using workloads representative of intended applications. The choice of software frameworks and optimization levels can also significantly impact comparative performance results.

Scalability characteristics become increasingly important for large-scale AI deployments. The ability to efficiently distribute training across multiple devices, handle batch processing workloads, and maintain performance as system size increases varies significantly between hardware architectures. These scaling properties are crucial for organizations planning to deploy AI at scale.
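Scaling behavior is often summarized with two numbers: speedup (how much faster the job runs on N devices than on one) and scaling efficiency (speedup divided by N). The helper below computes both from measured run times. The function name and the timings in the example are hypothetical and only show how the metric is calculated.

```python
def scaling_efficiency(time_one_device: float, time_n_devices: float, n: int):
    """Return (speedup, efficiency) for a job run on 1 device versus n devices."""
    speedup = time_one_device / time_n_devices
    return speedup, speedup / n


# Hypothetical timings: 100 s on one device, 16 s on eight devices.
speedup, efficiency = scaling_efficiency(100.0, 16.0, 8)
# speedup == 6.25, efficiency == 0.78125
```

An efficiency well below 1.0 suggests that communication or other overhead is absorbing part of the added hardware, which is worth knowing before committing to a larger cluster.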

Cost-Benefit Analysis Framework

A comprehensive cost-benefit analysis for AI Hardware Technology selection must consider both quantitative and qualitative factors. Capital expenditure for hardware acquisition represents only one component of the total economic equation, with operational costs often dominating over the system lifecycle. Power consumption, cooling requirements, and facility costs can significantly impact the economic viability of different hardware choices.
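The quantitative side of this analysis can be sketched as a simple total-cost-of-ownership calculation. The version below adds purchase price, energy cost, and other yearly operating costs. It uses power usage effectiveness (PUE) as a multiplier to approximate cooling and facility overhead, which is an assumption introduced for this example, and every input value shown is a placeholder rather than real pricing.

```python
def total_cost_of_ownership(
    capex: float,
    avg_power_kw: float,
    price_per_kwh: float,
    pue: float,
    years: float,
    annual_other_opex: float = 0.0,
) -> float:
    """Estimate lifecycle cost: purchase price + energy + other yearly costs.

    `pue` (power usage effectiveness) scales device power to include cooling
    and facility overhead; 1.0 would mean no overhead at all.
    """
    hours = years * 365 * 24
    energy_cost = avg_power_kw * pue * hours * price_per_kwh
    return capex + energy_cost + annual_other_opex * years


# Placeholder inputs: a 10,000 accelerator drawing 1 kW for one year at 0.10 per
# kWh with a PUE of 1.5 costs roughly 11,314 in total.
one_year = total_cost_of_ownership(10_000, 1.0, 0.10, 1.5, 1)
```

Running the same function over a multi-year horizon for each candidate platform makes it easier to see when a cheaper but less efficient device ends up costing more over its lifetime.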

Development and deployment costs associated with different hardware platforms can vary substantially. Software ecosystem maturity, availability of development tools, and developer expertise requirements all influence the total cost of implementing AI solutions on different hardware platforms. The time-to-market implications of hardware choices can also have significant economic consequences for competitive AI applications.

Future-proofing considerations involve evaluating the likely evolution of AI algorithms and applications to ensure hardware investments remain viable over their intended lifecycle. The rapid pace of AI development makes this particularly challenging, but technology roadmaps and ecosystem trends can help inform more robust investment decisions.

Future Trends and Implications

The future of AI hardware will be shaped by several converging trends, including the continued growth in model size and complexity, increasing emphasis on energy efficiency and sustainability, and the proliferation of AI applications across diverse domains. Future AI systems are likely to combine TPUs, IPUs (Intelligence Processing Units), and neuromorphic processors, dynamically selecting the best hardware for different tasks. This heterogeneous computing approach represents a fundamental shift toward adaptive AI infrastructure that can optimize performance and efficiency across diverse workloads.

Sustainability concerns are driving increased focus on energy-efficient AI hardware as the environmental impact of large-scale AI deployments becomes more apparent. Neuromorphic chips are being promoted as a leading green technology in 2025, with claims that they could cut AI power use by as much as 90%. An improvement in energy efficiency of that size could make previously impractical AI applications viable while reducing the carbon footprint of existing deployments.

The democratization of AI enabled by more efficient and accessible hardware will likely accelerate innovation across industries and applications. As AI acceleration becomes more affordable and easier to deploy, we can expect to see artificial intelligence capabilities embedded in an increasingly diverse range of products and services, from consumer electronics to industrial systems.

Technology Roadmaps and Market Projections

Industry roadmaps suggest continued rapid evolution in AI hardware capabilities, with major improvements expected in both performance and efficiency over the next five years. Moore’s Law scaling continues to provide benefits for AI applications, while architectural innovations and specialized design approaches offer additional performance improvements beyond those achievable through process technology alone.

Market projections indicate substantial continued growth in AI hardware spending, driven by both expanding applications and increasing computational requirements of advanced AI models. The enterprise AI market, cloud AI services, and edge AI applications all represent significant growth opportunities for hardware vendors, though the competitive landscape is likely to remain highly dynamic.

Emerging applications in areas such as autonomous systems, scientific computing, and creative AI will drive demand for new types of hardware capabilities. The requirements for real-time AI processing, distributed intelligence, and ultra-low-power operation will continue to push hardware design in new directions, creating opportunities for innovative architectural approaches.

Strategic Implications for Organizations

Organizations developing AI strategies must carefully consider the evolving hardware landscape and its implications for their technology investments and competitive positioning. AI Hardware Technology choices made today will influence AI capabilities and cost structures for years to come, making it essential to understand both current options and likely future developments.

Skills and expertise requirements are evolving along with the hardware landscape, creating new challenges and opportunities for organizations building AI capabilities. The programming models, optimization techniques, and deployment strategies for different hardware platforms will become increasingly important for maximizing the value of AI investments.

Partnership and ecosystem considerations play an increasingly important role in AI hardware decisions. The availability of cloud services, software support, and development communities around different hardware platforms can significantly impact the success of AI initiatives. Organizations must evaluate not just hardware capabilities but also the broader ecosystem supporting their chosen platforms.

Conclusion

The evolution of AI Hardware Technology from general-purpose CPUs to specialized AI accelerators represents one of the most significant technological transformations of our time. GPUs established the foundation for practical deep learning applications, while TPUs demonstrated the power of purpose-built AI hardware. NPUs are bringing AI capabilities to edge devices and mobile platforms, enabling new categories of applications with improved privacy and responsiveness.

Looking beyond traditional silicon, neuromorphic chips, photonic processors, and quantum computing promise to unlock even greater capabilities while addressing the growing energy and scalability challenges of AI deployments. As we move forward, the heterogeneous computing approach that combines multiple specialized processors will likely become the norm, enabling AI systems to dynamically optimize performance and efficiency across diverse workloads. Organizations must carefully evaluate their AI Hardware Technology choices, not just based on current capabilities, but also considering future trends, ecosystem support, and total cost of ownership to remain competitive in the rapidly evolving AI landscape.