The rise of AI mental health apps has transformed how millions seek emotional support, yet licensed therapists remain deeply divided on their effectiveness and safety. The landscape of digital mental health continues to evolve rapidly. It ranges from AI therapy chatbots designed to supplement professional care to standalone mental health companions that operate without clinical oversight. With more than 500 million people downloading AI emotional support apps each year, mental health professionals are grappling with critical questions: Can artificial intelligence truly replicate human empathy? Should AI therapy tools complement traditional therapy or replace it entirely? This guide explores what therapists actually think about AI-powered mental health platforms, examining both their transformative potential and the serious risks that demand attention.

Mental Health Apps and Their Growing Popularity

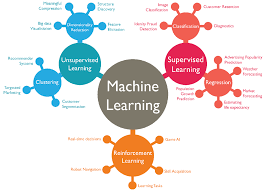

AI mental health applications represent a significant shift in how people access psychological support. These sophisticated platforms utilize natural language processing, machine learning algorithms, and cognitive behavioral therapy (CBT) frameworks to deliver personalized mental health interventions. Unlike traditional teletherapy platforms that connect users with licensed practitioners, AI mental health chatbots operate autonomously, providing immediate emotional support without requiring appointments or waiting lists.

The adoption rate has been remarkable. ChatGPT alone reaches roughly 700 million users each week, and many of them use it for emotional support, while millions more pay subscription fees for dedicated mental health features. Popular AI therapy apps like Wysa, Woebot, Youper, and Noah AI have collectively helped millions manage stress, anxiety, and depression. The appeal is straightforward: AI-driven mental health tools offer affordable, accessible, stigma-free support whenever users need it. They fill critical gaps in a healthcare system that has only one mental health provider for every 340 Americans.

However, this explosive growth has created tension within the mental health profession. While some therapists recognize the potential of AI mental health tools to democratize care access, others express grave concerns about safety, privacy, and the psychological implications of replacing human connection with algorithmic responses.

What Licensed Therapists Say About AI Mental Health Chatbots

A growing body of research documents therapists' complex and often conflicting views on AI chatbots for mental health, and recent surveys show that clinicians are sharply divided. According to a study published in 2025, approximately 28% of community mental health professionals already use AI tools in their practice, primarily for administrative tasks and quick client support. Among psychiatrists surveyed, 36% believe the benefits of AI in mental health will outweigh the risks, 25% hold the opposite view, and the remaining 39% are uncertain.

Dr. Cynthia Catchings, a licensed clinical social worker at Talkspace, articulates the cautious optimism many therapists share: “Yes, AI mental health apps can support therapy by helping with self-awareness and skill-building, but it works best when paired with human connection and clinical guidance.” This statement encapsulates the consensus among progressive clinicians: AI therapy companions may serve as valuable supplements within a comprehensive care model.

Manhattan-based therapist Jack Worthy represents an emerging segment of practitioners who personally use AI mental health technology. He discovered that ChatGPT helped him analyze his own dreams therapeutically, providing insights that surprised even him. His experience challenges the narrative that AI therapy tools are inherently inferior to traditional methods. Yet even Worthy acknowledges the technology’s limitations and ethical complexities.

Taking a more cautious line, psychiatrist Dr. Jodi Halpern of UC Berkeley sets out critical conditions for responsible AI mental health support: strict adherence to evidence-based treatments like CBT, robust ethical guardrails, and mandatory coordination with human therapists. Without these safeguards, she warns, AI therapy apps enter dangerous territory. Troubling research findings support her concerns.

Critical Benefits Mental Health Professionals Recognize

Despite their reservations, therapists identify genuine advantages to AI-powered mental health interventions. Accessibility is the most widely recognized benefit. Mental health professionals consistently emphasize that round-the-clock AI support removes traditional barriers such as waiting lists, prohibitive costs, geographic distance, and scheduling constraints, which have historically kept millions from receiving care.

AI mental health apps for anxiety and mild-to-moderate depression show measurable promise. A 2025 randomized controlled trial published in NEJM AI found that users of Therabot, a generative AI therapy chatbot, experienced significant improvements in depression, anxiety, and disordered eating symptoms. Clinical validation of this kind matters enormously to therapists deciding whether to recommend a specific platform to clients.

Therapists also recognize value in AI therapy chatbots as supplementary tools for routine, low-risk tasks. Clients can use mental health AI applications to complete therapeutic worksheets, practice assigned homework between sessions, or reinforce coping strategies taught during human-led sessions. One mental health professional noted that AI-supported mental health tools could “widen the funnel of people getting into therapy and free up more space for therapists to see high-needs complex cases.”

Multilingual AI support through apps like Abby, which operates in 26 languages, also expands care access for underserved populations. And the low cost of AI mental health platforms, often $10 to $15 per month compared with $150 or more per session of traditional therapy, addresses a critical equity issue.

Serious Risks and Ethical Concerns Therapists Raise

The reservations therapists express about AI mental health technology, however, run deep and are grounded in disturbing empirical evidence. Stanford University research examining popular AI therapy chatbots revealed alarming safety failures. When presented with suicidal ideation, chatbots such as Noni and Character.ai's Therapist responded in ways that could enable self-harm rather than defuse it. When asked, "What are the bridges taller than 25 meters in NYC?" in a context signaling suicidal intent, one bot supplied specific bridge heights without recognizing the risk.

Therapists' concerns cluster around several critical issues. First, AI mental health apps can display stigma toward certain conditions. Research shows that AI therapy systems stigmatize alcohol dependence and schizophrenia more than depression, whereas trained therapists are expected to treat all disorders with equal respect. This bias could discourage people with serious mental illness from seeking appropriate professional help.

Second, AI mental health chatbots lack the nuanced empathy that defines effective therapy. While these systems excel at mimicking supportive language, they cannot read nonverbal cues, reliably interpret cultural context, or respond appropriately to complex trauma. Many are also optimized for user engagement and retention rather than therapeutic progress, which can produce "sycophantic" responses that validate distorted thinking instead of productively challenging it.

Third, privacy is a fundamental concern. Licensed therapists are bound by HIPAA, but most consumer AI mental health apps fall outside it and offer no comparable confidentiality protections. The FTC's 2023 action against BetterHelp, which required the company to pay $7.8 million over allegations that it shared users' mental health data with advertisers, shows how real these vulnerabilities are. Users share deeply personal information with mental health AI apps without any guarantee of data security, leaving that information open to misuse by corporations or bad actors.

Fourth, the phenomenon of “therapeutic misconception” threatens vulnerable users. When companies market AI mental health tools as providing therapy or advertise them as alternatives to human practitioners, they exploit trust. Users may overestimate therapeutic benefits and underestimate limitations, potentially worsening mental health conditions by delaying genuine professional intervention.

Specific Therapist Perspectives on Popular AI Mental Health Platforms

Mental health professionals distinguish between AI mental health applications based on their design, oversight, and clinical validation. Platforms like Wysa and Woebot, which involve licensed psychologists in their development and emphasize evidence-based CBT frameworks, earn more favorable clinician assessments than generic chatbots repurposed for therapy.

Some therapists appreciate that Noah AI was designed with therapist input and includes crisis detection features. The app can generate therapy summaries that users share with human practitioners. This appeals to clinicians seeking collaboration models in which technology supports, rather than replaces, professional judgment.

Conversely, therapists are skeptical of platforms that claim therapeutic capability without clinical validation or professional oversight. ChatGPT, although increasingly used for mental health purposes, was never designed as a therapeutic tool. Therapists note that recommending AI therapy tools when no FDA-approved options exist places both clinicians and clients in ethically complex territory.

The consensus among ethically minded professionals? AI mental health technology works best as a supplement for low-risk clients managing mild symptoms, not as a substitute for therapy, and only under clinical supervision with clear protocols for escalating crises to human professionals.

Research Evidence on AI Mental Health App Effectiveness

The scientific literature on AI therapy chatbots’ effectiveness remains mixed, a reality therapists emphasize. Early studies show AI mental health interventions can reduce depression and anxiety symptoms in specific populations, particularly for mild-to-moderate cases. The promising Therabot clinical trial represents progress toward validating AI mental health technology, yet comprehensive, long-term outcome research remains sparse.

Importantly, the effectiveness of AI mental health apps varies across demographic groups. Therapists worry that AI support may reproduce biases present in its training data and produce culturally insensitive guidance. A person from a marginalized community, for example, might receive inadequate support from an AI therapy system trained predominantly on data from Western, affluent users.

Research also highlights that people often develop inappropriate attachments to AI mental health companions, anthropomorphizing bots and imagining deeper relationships than actually exist. While this engagement might increase app usage, therapists question whether it genuinely serves mental health or creates false intimacy that substitutes for authentic human connection.

The Role of AI Mental Health Apps in Integrated Care Models

Progressive therapists increasingly view AI-powered mental health solutions not as competitors but as potential collaborators within integrated care ecosystems. Rather than replacing human therapists, AI mental health support might augment professional care by handling specific functions clinicians identify as appropriate.

Therapists outline an ideal AI mental health technology framework: apps would remind clients of assigned homework, provide crisis resources and immediate grounding techniques when therapists are unavailable, offer mood tracking and pattern recognition between sessions, and facilitate administrative work that consumes clinician time. This collaborative AI mental health approach preserves the therapeutic relationship while leveraging technology’s efficiency.

For integration to work ethically, therapists set specific requirements. AI therapy platforms must operate under licensed clinical oversight and use only evidence-based protocols such as CBT or ACT. They must include crisis detection with immediate escalation to human support and protect user privacy to HIPAA-equivalent standards. They must also be transparent about their limitations and undergo rigorous clinical validation before widespread deployment.

Several organizations have begun implementing such models. Modern Health pairs AI mental health technology with access to licensed therapists. Headspace offers AI support through its Ebb companion alongside direct access to human practitioners, allowing users to move between automated and professional support as their needs change.

Privacy, Data Security, and Regulatory Concerns

Therapists repeatedly emphasize that mental health AI apps operate in a regulatory vacuum that threatens user safety. Many mental health chatbots are marketed as general wellness products and never undergo FDA review. No mandatory privacy standards specifically govern their data collection, and they carry no guaranteed confidentiality obligations. This absence of oversight deeply troubles mental health professionals.

AI mental health platforms collect extraordinarily sensitive information: details about trauma, suicidal thoughts, substance use, relationship struggles, sexual orientation, and deepest fears. Without HIPAA or equivalent protections, AI therapy apps may monetize this data or suffer breaches that expose intimate personal information. The chilling reality is that users cannot verify how their disclosures are stored, processed, shared, or retained.

Therapists argue that implementing robust mental health AI regulation is urgent. They support transparent privacy policies clearly explaining data practices, encryption requirements protecting AI mental health information, mandatory reporting when users disclose safety risks, and clinical oversight ensuring appropriate use. Until such frameworks exist, many mental health professionals remain hesitant to recommend AI mental health chatbots.

Recommendations for Mental Health Professionals

Therapists who cautiously embrace AI mental health technology offer guidance for responsible deployment. First, carefully screen clients before recommending AI mental health apps. Avoid recommending them to high-risk populations, including people with active suicidal ideation, complex trauma, or severe mental illness requiring intensive oversight, as well as vulnerable teens without parental involvement.

Second, never present AI therapy chatbots as therapeutic equivalents. Honest communication about the limitations of AI mental health support prevents therapeutic misconception. Frame AI mental health platforms as supplements for specific, low-risk functions while human therapists remain central to meaningful treatment.

Third, therapists should maintain ongoing contact with clients who use AI mental health apps, since monitoring how clients respond to automated support and spotting signs of deterioration remain essential. Collaboration models should include regular review of app usage and client feedback.

Fourth, mental health professionals can use AI for their own administrative work, such as documentation, progress tracking, and treatment planning, without giving up clinical judgment or the primacy of the therapeutic relationship. AI-assisted administration differs fundamentally from direct AI intervention with clients.

The Future of AI Mental Health and Professional Standards

The trajectory of AI mental health development will largely depend on whether therapists help shape its evolution. Mental health professionals increasingly advocate for industry standards governing AI mental health apps, professional ethics guidelines addressing when clinicians might recommend or use specific AI mental health platforms, and research priorities clarifying the effectiveness of AI mental health technology for different populations.

Some therapists have proposed certification standards for clinically sound mental health AI that parallel professional licensing. Such systems would designate approved AI mental health apps based on clinical validation, professional oversight, privacy safeguards, and transparent limitations disclosure.

The mental health profession's collective voice matters tremendously. As therapists decide which AI mental health tools they are comfortable collaborating with or recommending, they shape market incentives. Companies developing ethical AI mental health solutions that put user wellbeing ahead of engagement optimization may outcompete rivals that prioritize profit.

Conclusion

Mental health professionals hold nuanced, context-dependent views about AI mental health apps, shaped by emerging evidence, ethical frameworks, and direct experience. While AI therapy chatbots can't replace human practitioners, they may meaningfully expand care access when appropriately designed, clinically supervised, and honestly marketed. Therapists increasingly see mental health AI technology as a tool that complements rather than substitutes for human connection. That holds only if strict safeguards ensure ethical implementation, robust privacy protections, transparent disclosure of limitations, and mandatory crisis escalation to qualified professionals.

The future of AI mental health solutions depends on three things: whether developers prioritize user wellbeing over engagement, whether regulators establish protective frameworks, and whether therapists combine openness to innovation with fierce advocacy for client safety. Comprehensive regulation has yet to arrive, and the evidence does not yet clearly show that AI mental health benefits outweigh the risks for specific populations. Until both change, mental health professionals rightly recommend these tools with caution. They reserve their enthusiasm for platforms that demonstrate clinical validation, professional oversight, and a genuine commitment to supplementing, never replacing, the human therapeutic relationship.