Cloud AI Services Comparison

The rapid evolution of artificial intelligence and machine learning has transformed how businesses operate, innovate, and compete in today’s digital landscape. As organizations increasingly seek to harness the power of cloud AI services, three major players dominate the market: Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP). Each cloud service provider offers a comprehensive suite of AI and ML capabilities, but determining which platform aligns best with your specific requirements can be challenging.

The global cloud computing market continues to expand exponentially, with enterprises investing billions in AI infrastructure and machine learning platforms. AWS currently maintains the largest market share in cloud services, while Azure offers seamless integration with Microsoft’s enterprise ecosystem, and Google Cloud leverages its deep expertise in artificial intelligence and data analytics. Choosing the right cloud AI platform involves evaluating multiple factors including pricing structures, available AI services, scalability options, compliance certifications, and ease of implementation.

This comprehensive comparison examines the AI and ML services offered by these three industry giants, providing detailed insights into their strengths, weaknesses, and ideal use cases. Whether you’re a startup exploring natural language processing capabilities, an enterprise requiring sophisticated computer vision solutions, or a data scientist building custom machine learning models, understanding the nuances of each platform is crucial for making informed decisions.

Throughout this analysis, we’ll explore critical aspects such as pre-trained AI models, development frameworks, pricing strategies, performance benchmarks, and integration capabilities. We’ll also examine specialized services including speech recognition, document analysis, predictive analytics, and generative AI offerings. By the end of this guide, you’ll have a clear understanding of which cloud AI provider best matches your organizational objectives, technical requirements, and budget constraints, enabling you to leverage artificial intelligence effectively and drive meaningful business outcomes.

Cloud AI Services

Cloud AI services represent a transformative approach to implementing artificial intelligence solutions without the need for extensive on-premises infrastructure. These platforms provide managed AI services that allow developers, data scientists, and businesses to build, train, and deploy machine learning models at scale. The fundamental advantage of cloud-based AI lies in its accessibility—organizations can leverage sophisticated algorithms and powerful computing resources on a pay-as-you-go basis.

The three major cloud providers—AWS, Azure, and Google Cloud—offer comprehensive AI and ML ecosystems that include pre-trained models, custom model development tools, and specialized services for various applications. These cloud AI platforms handle the complex infrastructure management, automatic scaling, and resource optimization, allowing teams to focus on solving business problems rather than maintaining hardware and software stacks.

Cloud AI services typically fall into several categories: vision and image recognition, natural language processing, speech services, recommendation engines, forecasting, and document intelligence. Additionally, these platforms provide robust development environments, including Jupyter notebooks, integrated development environments (IDEs), and MLOps tools for streamlining the machine learning lifecycle from experimentation to production deployment.

Key Components of Cloud AI Infrastructure

Modern cloud AI infrastructure comprises multiple layers that work together to deliver comprehensive artificial intelligence capabilities. The foundation includes scalable compute resources, such as GPU and TPU instances optimized for deep learning workloads. Storage solutions are specifically designed to handle large datasets efficiently, with options for both structured and unstructured data.

Machine learning frameworks form the next layer, with support for popular tools like TensorFlow, PyTorch, scikit-learn, and proprietary frameworks developed by each cloud provider. These frameworks enable data scientists to build everything from simple regression models to complex neural networks. The platforms also include AutoML capabilities that automate model selection, hyperparameter tuning, and feature engineering processes.

The top layer consists of pre-built cloud AI services accessible through APIs, requiring minimal machine learning expertise to implement. These services cover computer vision, natural language understanding, speech-to-text, text-to-speech, translation, and sentiment analysis. Organizations can integrate these capabilities into their applications within hours rather than months, dramatically accelerating time-to-market for AI-powered features.

AWS AI and Machine Learning Services

Amazon Web Services pioneered many cloud computing innovations and has built an extensive portfolio of AI and machine learning services that cater to diverse use cases. AWS offers solutions ranging from fully managed ML platforms to specialized AI services designed for specific applications. The platform’s maturity and breadth make it a popular choice for enterprises with complex requirements.

Amazon SageMaker serves as AWS’s flagship machine learning platform, providing a comprehensive environment for building, training, and deploying models at scale. SageMaker includes features like SageMaker Studio for collaborative development, SageMaker Autopilot for automated machine learning, and SageMaker Ground Truth for data labeling. The platform supports all major machine learning frameworks and offers optimized algorithms for common tasks.

AWS also provides numerous specialized AI services that don’t require machine learning expertise. Amazon Rekognition delivers powerful image and video analysis capabilities, while Amazon Comprehend offers natural language processing for text analysis. Amazon Transcribe converts speech to text, and Amazon Polly generates natural-sounding speech from text. For business document processing, Amazon Textract extracts text and data from scanned documents with high accuracy.

AWS AI Service Pricing and Performance

AWS pricing for AI services follows a pay-per-use model with costs varying based on the specific service and usage volume. Amazon SageMaker charges for notebook instances, training hours, and endpoint hosting separately. For example, training instances range from affordable CPU-based options to high-performance GPU and custom silicon instances optimized for deep learning workloads.
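To make the separate billing of notebooks, training, and endpoint hosting concrete, here is a minimal sketch of a monthly estimate. The hourly rates and hours are placeholder assumptions chosen for easy arithmetic, not published AWS prices, and the `UsageLine` and `monthly_estimate` names are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass
class UsageLine:
    """One billable component: an hourly rate multiplied by hours used."""
    name: str
    hourly_rate_usd: float  # hypothetical rate, not a published price
    hours: float

    @property
    def cost(self) -> float:
        return self.hourly_rate_usd * self.hours


def monthly_estimate(lines: list[UsageLine]) -> dict[str, float]:
    """Return the cost per component plus a 'total' entry."""
    breakdown = {line.name: round(line.cost, 2) for line in lines}
    breakdown["total"] = round(sum(line.cost for line in lines), 2)
    return breakdown


# Placeholder figures: a notebook used during working hours, a short
# training run on an accelerator, and an endpoint that is always on.
estimate = monthly_estimate([
    UsageLine("notebook", hourly_rate_usd=0.10, hours=160),
    UsageLine("training", hourly_rate_usd=4.00, hours=20),
    UsageLine("endpoint", hourly_rate_usd=0.25, hours=720),
])
print(estimate)
```

With these made-up numbers the always-on endpoint is the largest line item even though its hourly rate is far lower than the training rate, which is why hosting costs deserve as much attention as training costs.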

Pre-trained AI services on AWS typically charge based on the number of API requests or the volume of data processed. Amazon Rekognition prices vary depending on whether you’re analyzing images or videos, with volume discounts available for large-scale operations. Amazon Comprehend charges per unit of text analyzed, with custom entity recognition and classification incurring additional costs.

Performance-wise, AWS leverages its global infrastructure with data centers across multiple regions, ensuring low latency and high availability. The platform’s custom AI chips, including AWS Inferentia for inference and AWS Trainium for training, provide cost-effective alternatives to traditional GPU instances while delivering competitive performance for machine learning workloads.

AWS Machine Learning Integration and Ecosystem

The AWS ecosystem offers seamless integration between AI services and other cloud components, making it easier to build end-to-end intelligent applications. Amazon SageMaker integrates directly with Amazon S3 for data storage, AWS Lambda for serverless computing, and Amazon CloudWatch for monitoring and logging. This tight integration streamlines the machine learning pipeline from data ingestion to model deployment.

AWS also provides extensive support for MLOps practices through services like SageMaker Pipelines for workflow automation, SageMaker Model Monitor for detecting model drift, and SageMaker Feature Store for managing and sharing features across teams. These capabilities help organizations maintain model quality and compliance in production environments.

The platform’s marketplace includes thousands of pre-trained models and algorithms developed by AWS and third-party vendors. This machine learning marketplace allows organizations to quickly deploy proven solutions for common use cases such as fraud detection, demand forecasting, and personalization, significantly reducing development time and costs.

Microsoft Azure AI Capabilities

Microsoft Azure has rapidly expanded its AI and machine learning offerings, leveraging the company’s extensive experience in enterprise software and deep research in artificial intelligence. Azure’s strength lies in its seamless integration with Microsoft’s ecosystem, making it particularly attractive for organizations already using Office 365, Dynamics, or other Microsoft products.

Azure Machine Learning serves as the platform’s comprehensive service for building, training, and deploying machine learning models. The platform provides a drag-and-drop designer for visual model building, supports popular ML frameworks, and includes automated machine learning capabilities. Azure ML Studio offers a collaborative workspace where data scientists and developers can work together efficiently.

One of Azure’s standout offerings is the Azure OpenAI Service, which provides API access to OpenAI’s powerful models including GPT-4, GPT-3.5, and DALL-E. This service enables organizations to implement cutting-edge generative AI capabilities for content creation, code generation, and conversational AI applications. The integration ensures enterprise-grade security and compliance while accessing these advanced models.

Azure Cognitive Services Overview

Azure Cognitive Services comprises pre-built AI services organized into five main categories: vision, speech, language, decision, and search. These services allow developers to add intelligent features to applications without requiring deep machine learning expertise. Each category includes multiple specialized services addressing specific use cases.

For computer vision, Azure offers services like Computer Vision API for image analysis, Face API for facial recognition and verification, and Custom Vision for training custom image classification models. Azure Form Recognizer (now part of Document Intelligence) extracts information from documents and forms with high accuracy, supporting both pre-built and custom models.

Azure Cognitive Services for Language includes Text Analytics for sentiment analysis and entity recognition, Question Answering for building conversational bots, and Translator for multi-language support. Speech Services provide speech-to-text, text-to-speech, and speech translation capabilities with support for numerous languages and dialects. These services compete directly with similar offerings from AWS and Google Cloud.

Azure AI Pricing Structure

Azure pricing for AI services is competitive and generally structured around consumption-based models with options for committed use discounts. Azure Machine Learning charges for compute resources, storage, and specific features like automated ML and designer usage. The platform offers various compute tiers from CPU-based instances to GPU-accelerated virtual machines optimized for deep learning.

Cognitive Services typically use tiered pricing where costs decrease as usage volume increases. Free tiers are available for most services, allowing developers to experiment and build proof-of-concept applications before committing to production pricing. For example, Computer Vision API offers free monthly transactions before charging per thousand API calls.
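The tiered model described above can be sketched as a small calculator. This assumes graduated tiers (each block of calls is billed at its own tier's rate) and uses invented tier boundaries and prices, so check the provider's current pricing page before relying on any figure.

```python
def tiered_cost(calls: int, tiers: list[tuple[float, float]]) -> float:
    """Cost of `calls` API calls under graduated tiers.

    `tiers` is a list of (upper_bound_in_calls, price_per_1000_calls) pairs
    in ascending order; use float("inf") as the bound of the last tier.
    """
    cost = 0.0
    lower = 0
    for upper, price_per_thousand in tiers:
        if calls <= lower:
            break
        billable = min(calls, upper) - lower  # calls that fall inside this tier
        cost += billable / 1000 * price_per_thousand
        lower = upper
    return round(cost, 2)


# Hypothetical tiers, for illustration only.
HYPOTHETICAL_TIERS = [
    (5_000, 0.00),         # free monthly allowance
    (1_000_000, 1.00),     # first paid tier
    (float("inf"), 0.60),  # volume discount beyond one million calls
]

print(tiered_cost(3_000, HYPOTHETICAL_TIERS))      # stays inside the free tier
print(tiered_cost(2_000_000, HYPOTHETICAL_TIERS))  # spans all three tiers
```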

The Azure OpenAI Service pricing differs from standard Cognitive Services, with costs based on token usage for different model types. GPT-4 models command premium pricing compared to GPT-3.5 models, reflecting their enhanced capabilities. Azure also offers reserved capacity options for predictable workloads, allowing organizations to secure lower rates through upfront commitments.
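Token-based pricing is easy to estimate once you know your traffic. The sketch below assumes prompt and completion tokens are billed at separate per-1,000-token rates; the rates and model labels are hypothetical placeholders, not Azure list prices.

```python
def token_cost(prompt_tokens: int, completion_tokens: int,
               prompt_rate_per_1k: float, completion_rate_per_1k: float) -> float:
    """Cost in USD, billing prompt and completion tokens separately."""
    cost = (prompt_tokens / 1000 * prompt_rate_per_1k
            + completion_tokens / 1000 * completion_rate_per_1k)
    return round(cost, 4)


# Hypothetical per-1,000-token rates for a premium and a standard model.
RATES = {
    "premium_model": {"prompt": 0.03, "completion": 0.06},
    "standard_model": {"prompt": 0.0015, "completion": 0.002},
}

# Same workload priced against both models.
for model, rate in RATES.items():
    print(model, token_cost(1_000_000, 250_000, rate["prompt"], rate["completion"]))
```

Running the same workload through both rate cards is a quick way to see whether the premium model's quality gain justifies the price gap for a given use case.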

Google Cloud AI and ML Platform

Google Cloud Platform brings the company’s extensive AI research and infrastructure expertise to its cloud AI services. Google pioneered many modern machine learning techniques and open-sourced TensorFlow, one of the most widely used deep learning frameworks. This deep technical foundation gives Google Cloud unique advantages in AI capabilities and innovation.

Vertex AI is Google’s unified machine learning platform that consolidates previous services like AI Platform and AutoML into a single comprehensive environment. Vertex AI provides tools for the entire ML lifecycle, from data preparation and feature engineering to model training, deployment, and monitoring. The platform supports both custom model development and AutoML for users with varying levels of machine learning expertise.

Google Cloud’s pre-trained APIs deliver powerful capabilities across multiple domains. Vision API offers advanced image analysis including object detection, OCR, and explicit content detection. Natural Language API provides entity recognition, sentiment analysis, and content classification. Speech-to-Text and Text-to-Speech APIs leverage Google’s cutting-edge research in voice technology, supporting over 125 languages.
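As an illustration of how little code a pre-trained API needs, the sketch below builds the JSON body for a Vision API `images:annotate` request without sending it. It follows the publicly documented request shape as best understood here; treat the field names as something to verify against the current API reference. The `build_annotate_request` helper is a hypothetical name, and authentication and the HTTP call are deliberately left out.

```python
import base64
import json


def build_annotate_request(image_bytes: bytes, feature_types: list[str],
                           max_results: int = 5) -> dict:
    """Build a request body asking for several analyses of a single image."""
    return {
        "requests": [{
            # Image content is sent as base64-encoded text.
            "image": {"content": base64.b64encode(image_bytes).decode("ascii")},
            "features": [{"type": t, "maxResults": max_results}
                         for t in feature_types],
        }]
    }


# Stand-in bytes; a real call would read an actual image file.
body = build_annotate_request(
    b"not-a-real-image",
    ["OBJECT_LOCALIZATION", "TEXT_DETECTION", "SAFE_SEARCH_DETECTION"],
)
print(json.dumps(body, indent=2))
```

The three feature types correspond to the object detection, OCR, and explicit content detection capabilities mentioned above, requested in a single round trip.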

Google Cloud AI Specializations

Google Cloud particularly excels in areas where Google’s consumer products have driven innovation. Google Translation API benefits from decades of translation research and massive multilingual datasets, offering superior quality for many language pairs. Video Intelligence API provides sophisticated video analysis capabilities, including shot change detection, explicit content detection, and automatic video transcription.

Document AI combines optical character recognition with machine learning to extract structured information from documents, forms, and invoices. The service includes specialized processors for common document types and allows organizations to train custom processors for specialized needs. This service competes directly with Amazon Textract and Azure Form Recognizer.

For conversational AI, Google offers Dialogflow, a comprehensive platform for building chatbots and voice assistants. Dialogflow leverages Google’s natural language capabilities and integrates seamlessly with Google Assistant, providing developers with powerful tools for creating sophisticated conversational experiences across multiple channels.

Google Cloud AI Pricing and Infrastructure

Google Cloud pricing for AI services is generally competitive with AWS and Azure, often featuring aggressive pricing for compute resources and storage. Vertex AI charges separately for training, prediction, and storage, with pricing varying based on the machine types and accelerators used. Google’s custom Tensor Processing Units (TPUs) offer cost-effective alternatives to GPUs for certain machine learning workloads.

Pre-trained API pricing typically follows a tiered structure based on usage volume, with free monthly quotas available for most services. For example, Vision API includes free monthly units before charging per thousand images processed. Google Cloud also offers sustained use discounts that automatically apply to long-running workloads, potentially reducing costs compared to competitors.

Google’s network infrastructure provides exceptional performance for data-intensive AI applications. The company’s private global network ensures low latency and high throughput between regions, which is particularly valuable for distributed machine learning training and inference at scale. Additionally, Google’s commitment to sustainability means their data centers often have lower environmental impact than alternatives.

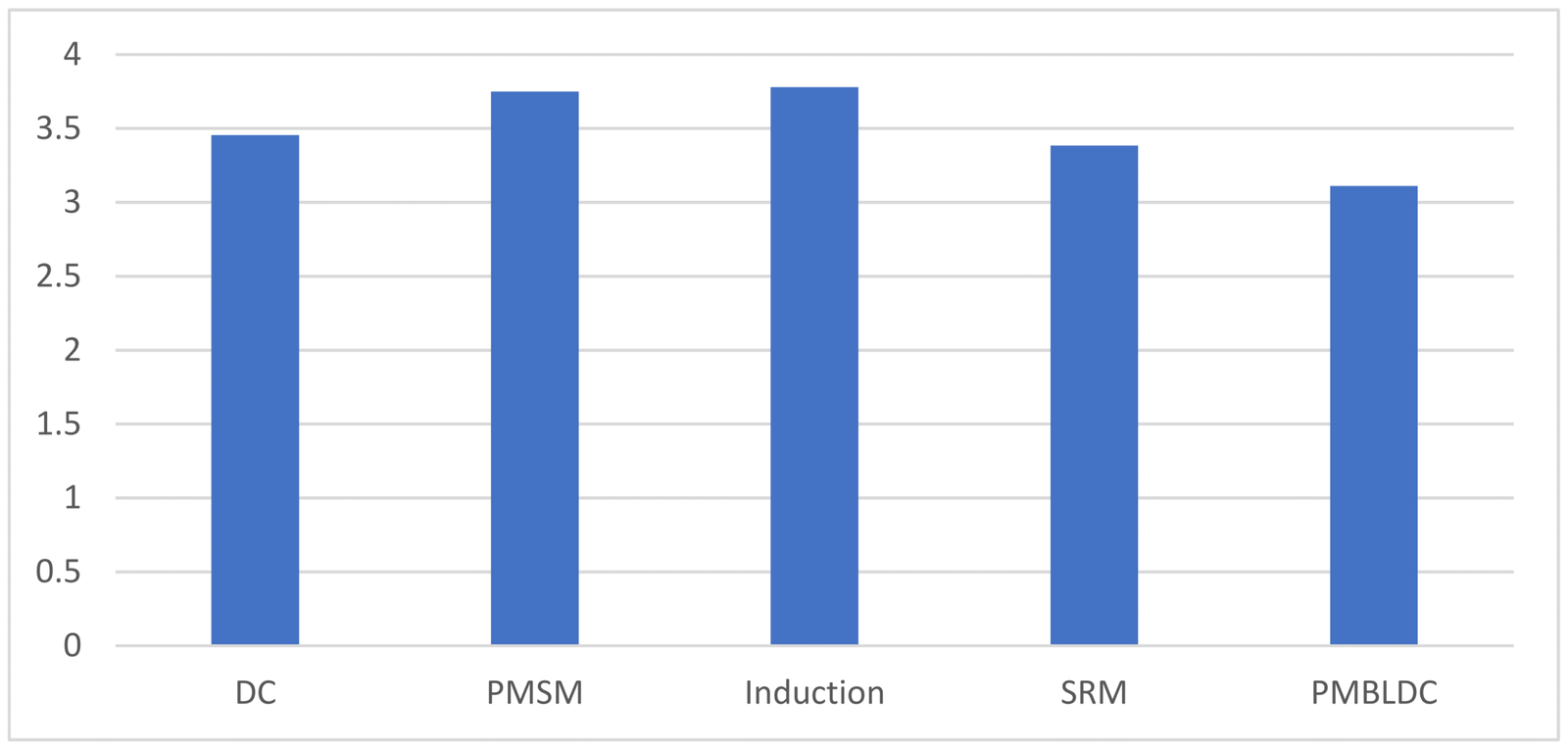

Comparative Analysis: AWS vs Azure vs Google Cloud

When comparing cloud AI services across AWS, Azure, and Google Cloud, several key factors emerge that differentiate these platforms. Each provider has unique strengths that appeal to different organizational needs, technical requirements, and existing infrastructure investments. Understanding these distinctions is crucial for making an informed decision.

Market position plays a significant role in this comparison. AWS maintains the largest overall cloud market share and offers the most extensive catalog of services across all categories. Azure leverages Microsoft’s dominant position in enterprise software and provides superior integration with existing Microsoft tools. Google Cloud, while third in market share, leads in AI innovation and offers competitive pricing with advanced networking capabilities.

Service maturity varies across platforms. AWS has the longest history in cloud computing and generally offers the most comprehensive documentation and community support. Azure has rapidly caught up in recent years, particularly with its Azure OpenAI Service, which offers managed access to OpenAI’s GPT models. Google Cloud’s services often reflect cutting-edge research but may have smaller user communities and fewer third-party integrations.
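One way to turn these qualitative differences into a decision is a weighted scoring matrix. The sketch below is a generic illustration: the criteria, weights, and 1-to-5 scores are invented placeholders, and the providers are deliberately anonymous so that nothing here reads as a rating of AWS, Azure, or Google Cloud.

```python
def rank_providers(scores: dict[str, dict[str, float]],
                   weights: dict[str, float]) -> list[tuple[str, float]]:
    """Return (provider, weighted average score) pairs, best first."""
    total_weight = sum(weights.values())
    totals = {
        provider: sum(weights[c] * s[c] for c in weights) / total_weight
        for provider, s in scores.items()
    }
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Hypothetical weights: this imaginary team cares most about compliance.
weights = {"pricing": 3, "services": 2, "compliance": 5}

# Hypothetical 1-5 scores your own evaluation would supply.
scores = {
    "provider_a": {"pricing": 4, "services": 5, "compliance": 3},
    "provider_b": {"pricing": 3, "services": 4, "compliance": 5},
    "provider_c": {"pricing": 5, "services": 3, "compliance": 3},
}

ranking = rank_providers(scores, weights)
print(ranking)
```

Changing the weights changes the winner, which is the point: the right platform depends on what your organization values, not on a universal ranking.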

AI Service Feature Comparison

The breadth and depth of cloud AI services differ across providers. AWS offers the widest range of specialized services with options like Amazon Fraud Detector, Amazon Forecast, and Amazon Personalize addressing specific business use cases. These purpose-built services reduce development time but may lock organizations into AWS-specific implementations.

Azure Cognitive Services provides a well-organized portfolio that balances breadth and depth. The platform’s standout feature is the Azure OpenAI Service, which gives Azure a significant advantage for organizations prioritizing generative AI capabilities. Azure also excels in multimodal AI scenarios where vision, speech, and language services work together seamlessly.

Google Cloud offers fewer specialized services but maintains high quality in core capabilities. The platform’s strengths lie in areas where Google has consumer product experience: search, translation, speech recognition, and image analysis. For organizations building custom machine learning models, Vertex AI provides arguably the most streamlined and modern development environment among the three platforms.

Integration and Developer Experience

Developer experience significantly impacts productivity and time-to-market for AI projects. AWS provides extensive SDKs, CLI tools, and comprehensive documentation, but the sheer number of services can create complexity. The platform’s naming conventions and service organization sometimes confuse newcomers, though experienced users appreciate the granular control.

Azure benefits from familiarity for organizations already using Microsoft development tools like Visual Studio and GitHub. The platform’s integration with Active Directory simplifies identity management, and Azure DevOps provides robust CI/CD capabilities for MLOps workflows. Azure’s unified portal experience is generally considered more user-friendly than AWS’s console.

Google Cloud offers a clean, intuitive interface and excellent documentation. The platform’s APIs follow consistent design patterns, making it easier to learn multiple services. Google’s commitment to open-source tools like TensorFlow and Kubernetes gives developers flexibility to avoid vendor lock-in. However, some users report that Google Cloud’s ecosystem has fewer third-party tools and integrations compared to AWS and Azure.

Machine Learning Frameworks and Tools

All three cloud platforms support popular machine learning frameworks, but their approaches and optimizations differ. AWS, Azure, and Google Cloud recognize that data scientists have framework preferences and provide flexibility while offering their own optimized implementations and managed services.

TensorFlow receives first-class support on all platforms, being open-sourced by Google and widely adopted in the industry. Google Cloud naturally offers the tightest integration with TensorFlow, including optimized performance on TPUs. PyTorch, increasingly popular in research and industry, is fully supported across all three platforms with GPU-accelerated instances and container support.

Each provider also maintains proprietary frameworks and tools. AWS developed Amazon SageMaker with optimized built-in algorithms. Azure provides ML.NET for .NET developers and integrates deeply with the ONNX (Open Neural Network Exchange) standard. Google created TensorFlow Extended (TFX) for production ML pipelines and offers JAX for high-performance research.

AutoML and No-Code Solutions

Automated machine learning democratizes AI by enabling non-experts to build effective models. AWS offers SageMaker Autopilot, which automatically explores different algorithms and hyperparameters to find optimal models. The service provides explainability features showing which features contribute most to predictions, addressing the “black box” concern.

Azure AutoML is integrated into Azure Machine Learning Studio and supports classification, regression, time-series forecasting, and natural language processing tasks. The service includes guardrails to prevent overfitting and provides transparent model explanations. Azure’s visual designer also allows users to build ML pipelines through drag-and-drop interfaces without writing code.

Vertex AI AutoML on Google Cloud covers vision, natural language, tabular, and video data. The service is known for producing high-quality models with minimal configuration, leveraging Google’s neural architecture search technology. Vertex AI also offers AutoML Tables specifically optimized for structured data, competing directly with automated ML offerings from AWS and Azure.
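To give a feel for what automated hyperparameter exploration involves, here is a deliberately simple random search. It is not how SageMaker Autopilot, Azure AutoML, or Vertex AI AutoML work internally; those services use more sophisticated strategies. The objective function is a toy stand-in for "train a model and return its validation score", and all names are hypothetical.

```python
import random


def random_search(objective, space, trials=50, seed=0):
    """Sample `trials` random configurations and keep the best-scoring one."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    best_params, best_score = None, float("-inf")
    for _ in range(trials):
        params = {name: rng.choice(values) for name, values in space.items()}
        score = objective(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params):
    """Toy stand-in for training and evaluating a model.

    Constructed so the score peaks at learning_rate=0.1 and max_depth=6.
    """
    return (1.0
            - abs(params["learning_rate"] - 0.1)
            - 0.01 * abs(params["max_depth"] - 6))


SPACE = {
    "learning_rate": [0.001, 0.01, 0.1, 0.3],
    "max_depth": [2, 4, 6, 8, 10],
}

best_params, best_score = random_search(toy_validation_score, SPACE)
print(best_params, best_score)
```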

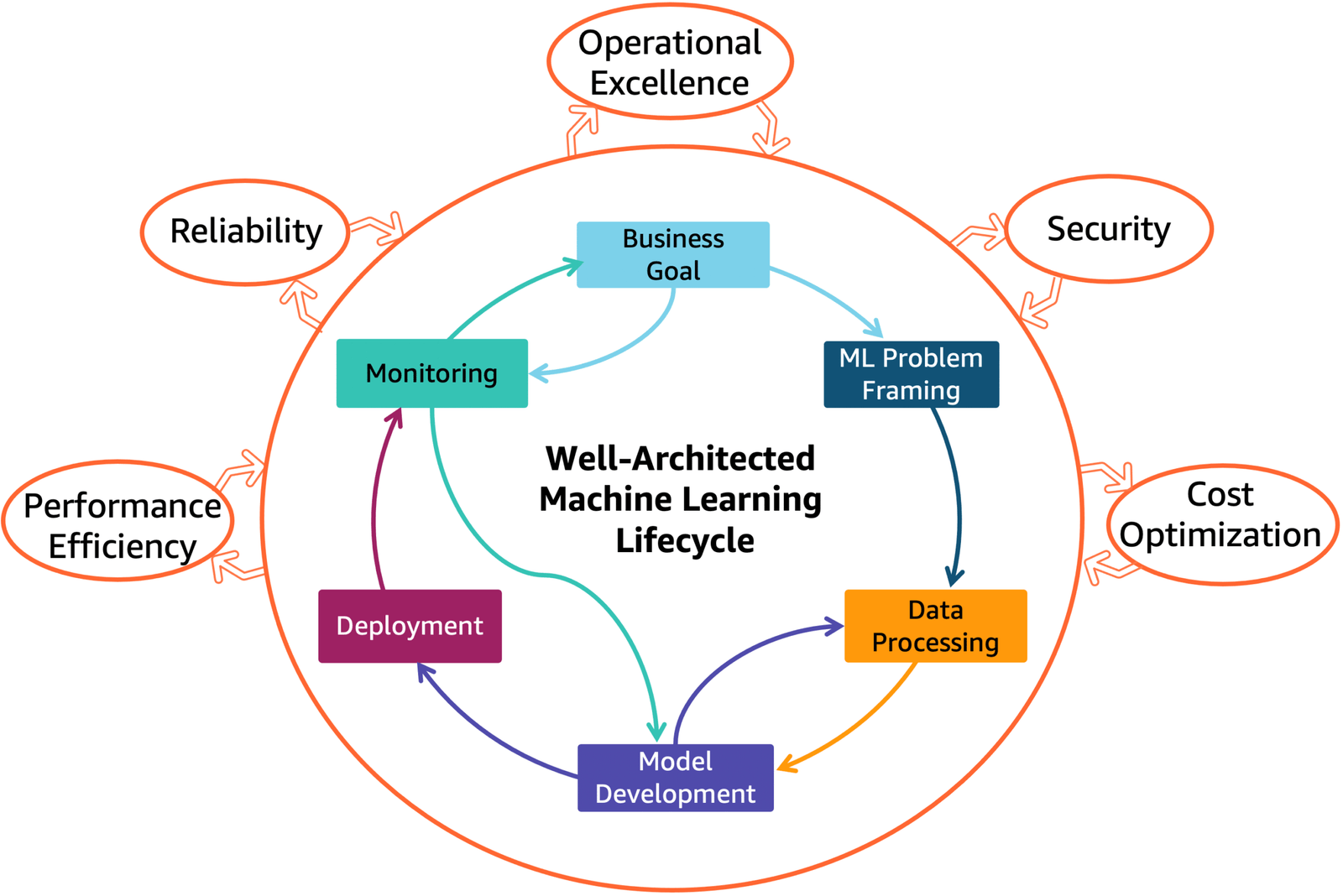

MLOps and Production Deployment

Successfully deploying and maintaining machine learning models in production requires robust MLOps practices. All three platforms provide tools for versioning, monitoring, and retraining models, but their approaches reflect different philosophies.

AWS SageMaker offers comprehensive MLOps capabilities through SageMaker Pipelines for workflow orchestration, Model Registry for version control, and Model Monitor for detecting data drift and model performance degradation. The platform integrates with AWS DevOps tools like CodePipeline and CloudFormation for infrastructure as code.

Azure Machine Learning provides similar capabilities with Azure ML Pipelines, model registry, and data drift detection. The platform’s integration with Azure DevOps and GitHub Actions enables sophisticated CI/CD workflows. Azure’s responsible AI dashboard includes features for assessing model fairness, explainability, and error analysis.

Vertex AI Pipelines on Google Cloud uses the Kubeflow Pipelines SDK, offering portability across environments. The platform includes Vertex AI Model Monitoring for continuous evaluation and Vertex AI Feature Store for centralized feature management and serving. Google Cloud’s commitment to Kubernetes means MLOps workflows can often be migrated between environments more easily.
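Drift detection ultimately compares the data a model sees in production with the data it was trained on. The sketch below computes the Population Stability Index (PSI), one common statistic for this. It is a generic illustration, not the method any of the three monitoring services is confirmed to use, and the equal-width binning and small floor for empty bins are simplifying assumptions.

```python
import math


def population_stability_index(baseline, live, bins=10):
    """PSI between two numeric samples, using equal-width bins from `baseline`."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(live)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


training_feature = [float(v) for v in range(100)]
shifted_feature = [v + 30.0 for v in training_feature]  # simulated drift

print(population_stability_index(training_feature, training_feature))
print(population_stability_index(training_feature, shifted_feature))
```

Identical distributions give a PSI of zero, and larger values indicate a bigger shift. A frequently quoted rule of thumb treats values above roughly 0.25 as significant drift, though the right threshold depends on the feature and the model.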

Cost Optimization Strategies

Managing costs effectively is crucial when working with cloud AI services, as compute-intensive training and high-volume inference can quickly accumulate expenses. Each platform offers various mechanisms for cost optimization, from instance selection to commitment-based pricing.

Right-sizing compute resources is the first step in cost optimization. AWS offers various instance families including EC2 P4d instances with NVIDIA A100 GPUs for demanding training workloads and lighter Inf1 instances with AWS Inferentia chips for cost-effective inference. Choosing appropriate instance types based on workload characteristics can reduce costs significantly.

Reserved instances and committed use discounts provide substantial savings for predictable workloads. Azure offers reserved VM instances with discounts up to 72% compared to pay-as-you-go pricing. Google Cloud’s committed use contracts provide similar savings and automatically apply to matching usage. AWS Savings Plans offer flexibility by applying discounts across various services.
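Whether a commitment pays off depends on how much of the term the resource actually runs. The sketch below assumes the simplest possible model, in which a commitment is billed for every hour of the term at the discounted rate whether or not the resource is used. Real reservation and savings-plan terms vary, and the hourly rate is a placeholder.

```python
def breakeven_utilization(discount: float) -> float:
    """Fraction of the term a resource must run for a commitment to break even."""
    return 1.0 - discount


def cheaper_option(on_demand_hourly: float, discount: float,
                   expected_hours: float, term_hours: float) -> tuple[str, float]:
    """Compare paying on demand for `expected_hours` against committing for the term."""
    committed = on_demand_hourly * (1.0 - discount) * term_hours  # paid regardless of use
    on_demand = on_demand_hourly * expected_hours
    if committed < on_demand:
        return "commitment", round(committed, 2)
    return "on-demand", round(on_demand, 2)


HOURS_PER_YEAR = 8760

# With a 72% discount, the commitment wins once utilization exceeds about 28%.
print(breakeven_utilization(0.72))
print(cheaper_option(3.00, 0.72, expected_hours=2000, term_hours=HOURS_PER_YEAR))
print(cheaper_option(3.00, 0.72, expected_hours=6000, term_hours=HOURS_PER_YEAR))
```

The maximum discount is usually tied to the longest term and specific instance types, so the break-even point for a typical workload is often higher than this best case suggests.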

Spot instances and preemptible VMs enable even greater cost savings for fault-tolerant workloads. AWS Spot Instances can reduce costs by up to 90% for interruptible training jobs. Azure Spot VMs and Google Cloud Preemptible VMs offer similar economics. Implementing checkpointing strategies allows training to resume after interruptions, making these options viable for many machine learning workflows.
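The checkpointing idea can be illustrated without any cloud SDK. The toy loop below saves its state every ten steps and resumes from the last checkpoint after a simulated interruption. The `train` function, the JSON checkpoint format, and the fake "loss" update are all illustrative assumptions; a real job would save model and optimizer state to durable storage such as an object store.

```python
import json
import os
import tempfile


def train(total_steps, checkpoint_path, interrupt_at=None):
    """Toy training loop that resumes from `checkpoint_path` if it exists.

    Returns the final state, or None if the run was interrupted.
    """
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(checkpoint_path):
        with open(checkpoint_path) as f:
            state = json.load(f)  # resume where the last checkpoint left off

    while state["step"] < total_steps:
        if interrupt_at is not None and state["step"] == interrupt_at:
            return None  # simulated spot/preemptible reclamation
        state["step"] += 1
        state["loss"] *= 0.9  # stand-in for a real optimization step
        if state["step"] % 10 == 0:
            # Write to a temp file and rename, so an interruption during the
            # write never leaves a corrupt checkpoint behind.
            tmp_path = checkpoint_path + ".tmp"
            with open(tmp_path, "w") as f:
                json.dump(state, f)
            os.replace(tmp_path, checkpoint_path)
    return state


with tempfile.TemporaryDirectory() as workdir:
    ckpt = os.path.join(workdir, "checkpoint.json")
    first_attempt = train(100, ckpt, interrupt_at=37)  # stops; last save was step 30
    resumed = train(100, ckpt)                         # picks up from step 30
    print(first_attempt, resumed["step"])
```

Only the steps since the last checkpoint are repeated after an interruption, so the checkpoint interval is a trade-off between write overhead and wasted compute.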

Monitoring and Budget Management

Effective cost monitoring prevents unexpected bills and identifies optimization opportunities. All three platforms provide cost management tools, but their sophistication and ease of use vary.

AWS Cost Explorer offers detailed analysis of spending patterns with customizable reports and forecasting. AWS Budgets can trigger alerts when costs approach thresholds or anomalous spending is detected. For AI workloads specifically, SageMaker provides usage metrics showing costs by experiment, model, and endpoint.

Azure Cost Management provides similar capabilities with spending analysis, budgets, and recommendations. The service includes Azure Advisor, which suggests specific optimizations based on actual usage patterns. Azure’s cost alerts can be configured at subscription, resource group, or individual resource levels.

Google Cloud Cost Management tools include detailed billing reports, budgets, and cost allocation by labels. Recommender actively suggests ways to reduce costs, such as removing idle resources or right-sizing VMs. Google’s sustained use discounts automatically apply, simplifying cost optimization compared to manually managing reserved instances.
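The logic behind budget alerts is simple enough to sketch in a few lines. The example below checks which alert thresholds have been crossed and projects month-end spend from the current run rate. It is a generic illustration with made-up figures and hypothetical function names, not the API of AWS Budgets, Azure Cost Management, or Google Cloud budgets.

```python
def triggered_alerts(spend_to_date: float, budget: float,
                     thresholds=(0.5, 0.8, 1.0)) -> list[float]:
    """Return the budget fractions that current spend has reached or passed."""
    used = spend_to_date / budget
    return [t for t in thresholds if used >= t]


def forecast_month_end(spend_to_date: float, day_of_month: int,
                       days_in_month: int) -> float:
    """Linear run-rate projection of total spend for the month."""
    return spend_to_date / day_of_month * days_in_month


# Made-up figures: 4,200 spent against a 5,000 budget on day 20 of 30.
print(triggered_alerts(4200, 5000))
print(forecast_month_end(4200, day_of_month=20, days_in_month=30))
```

In this example only the 50% and 80% alerts have fired, yet the run-rate projection already exceeds the budget, which shows why forecast-based alerts are worth enabling alongside actual-spend alerts.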

Security and Compliance Considerations

Security and compliance are paramount when deploying AI services in the cloud, particularly for regulated industries like healthcare, finance, and government. All three major cloud providers invest heavily in security, but their certifications, features, and regional availability differ.

Data encryption is standard across AWS, Azure, and Google Cloud, with encryption at rest and in transit. Each platform offers various key management options, from platform-managed keys to customer-managed keys using dedicated hardware security modules. AWS Key Management Service (KMS), Azure Key Vault, and Google Cloud Key Management Service provide centralized key management with audit logging.

Network isolation protects sensitive workloads from unauthorized access. AWS offers Virtual Private Cloud (VPC) with security groups and network ACLs. Azure uses Virtual Networks (VNet) with network security groups. Google Cloud provides VPC Service Controls for creating security perimeters around cloud resources, offering particularly strong protection for multi-tenant environments.

Compliance Certifications

Compliance certifications vary by region and service, with Azure generally offering the broadest range of certifications globally. Azure maintains over 90 compliance offerings, including HIPAA, HITRUST, FedRAMP, ISO 27001, SOC 1/2/3, and numerous country-specific certifications. This extensive compliance portfolio makes Azure particularly attractive for highly regulated industries.

AWS also maintains comprehensive compliance certifications, though the specific services covered vary. Amazon SageMaker is HIPAA-eligible, PCI DSS compliant, and covered by various regional certifications. AWS’s Artifact service provides on-demand access to compliance reports and certifications, simplifying audit processes.

Google Cloud has expanded its compliance certifications significantly in recent years. The platform now supports HIPAA, FedRAMP, ISO 27001, and industry-specific standards. Google’s Assured Workloads feature allows organizations to configure environments that meet specific compliance requirements automatically, reducing configuration complexity.

AI Ethics and Responsible AI

Responsible AI practices are increasingly important for organizations deploying machine learning models at scale. Each cloud provider offers tools and frameworks for addressing bias, fairness, and transparency in AI systems.

AWS provides SageMaker Clarify for detecting bias in training data and models. The service can identify potential bias before training and monitor predictions for unfair outcomes. Amazon’s AI Service Cards document the intended use cases and limitations of pre-trained models, promoting transparency.

Azure offers a comprehensive Responsible AI dashboard integrated into Azure Machine Learning. The dashboard includes tools for model explainability, fairness assessment, error analysis, and counterfactual what-if analysis. Azure also provides the Fairlearn toolkit for bias mitigation and fairness constraints during training.

Google Cloud emphasizes responsible AI through its Vertex AI Model Monitoring, which includes fairness metrics alongside performance metrics. The platform’s Explainable AI features help data scientists understand model predictions. Google also publishes extensive responsible AI practices documentation and offers specialized AI ethics training.
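Fairness tooling on all three platforms builds on a handful of simple metrics. The sketch below hand-rolls one of them, the demographic parity difference, which is the gap between the highest and lowest positive-prediction rate across groups. It is an illustration with invented data rather than the implementation used by SageMaker Clarify, Fairlearn, or Vertex AI.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rate (0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Invented predictions for two groups of four applicants each.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(selection_rates(predictions, groups))
print(demographic_parity_difference(predictions, groups))
```

A large gap does not prove a model is unfair, but it flags where a closer review of the training data and features is warranted.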

Use Cases and Industry Applications

Different industries and use cases may favor specific cloud AI platforms based on their unique requirements, existing technology investments, and regulatory constraints. Understanding which platform excels in particular scenarios helps organizations make informed decisions.

Healthcare and life sciences organizations often prefer Azure due to its extensive health-specific compliance certifications and purpose-built services. Azure Health Bot and Text Analytics for Health provide specialized capabilities for medical applications. Microsoft’s partnerships with healthcare organizations and proven track record in regulated environments increase confidence for sensitive workloads.

Financial services companies frequently choose AWS for its maturity, comprehensive services, and strong track record in the financial sector. Services like Amazon Fraud Detector address industry-specific needs. AWS’s extensive compliance certifications, including PCI DSS for payment processing, and its large ecosystem of fintech partners make it attractive for banks and financial institutions.

Retail and e-commerce businesses benefit from AWS’s specialized services like Amazon Personalize for recommendation engines and Amazon Forecast for demand prediction. These services leverage Amazon’s retail expertise. However, Google Cloud’s superior data analytics capabilities and competitive pricing also appeal to retailers with significant data processing requirements.

Media and Entertainment Applications

Media and entertainment companies increasingly rely on cloud AI for content analysis, recommendation systems, and automated workflows. Each platform offers relevant capabilities, but strengths vary.

AWS dominates in media services overall, with AWS Elemental for video processing and Amazon Rekognition for content moderation and analysis. The platform’s extensive content delivery network capabilities and media-specific partnerships make it popular for streaming services and content producers.

Google Cloud excels in video analysis through Video Intelligence API, which provides sophisticated scene detection and content classification. The platform’s strength in advertising technology and analytics appeals to media companies monetizing through digital advertising. Google’s research in video compression and streaming also influences its cloud offerings.

Azure Media Services provides competitive capabilities with strong integration into Microsoft’s broader ecosystem. For media companies using Microsoft’s creative tools or enterprise software, Azure’s seamless integration can streamline workflows and reduce integration complexity.

Manufacturing and IoT

Manufacturing and Internet of Things (IoT) applications benefit from AI-powered predictive maintenance, quality control, and supply chain optimization. Each cloud platform offers IoT-specific services alongside AI capabilities.

AWS IoT services integrate well with SageMaker for building predictive maintenance models. AWS Panorama brings computer vision to edge devices for quality inspection on manufacturing floors. Amazon’s extensive partner network includes numerous industrial IoT vendors, facilitating integration with existing equipment.

Azure IoT Hub and Azure Digital Twins provide robust IoT infrastructure with strong integration into Azure AI services. Microsoft’s partnerships with industrial equipment manufacturers and its presence in enterprise IT make Azure appealing for manufacturing environments with existing Microsoft investments.

Google Cloud retired its dedicated IoT Core service in 2023, so device connectivity on the platform now typically runs through partner solutions or general-purpose services such as Pub/Sub. What remains is strong data analytics capability, which is essential for processing IoT sensor data at scale. Google’s leadership in data processing and machine learning makes it suitable for manufacturers prioritizing advanced analytics and AI-driven insights.

Performance Benchmarks and Latency

Performance characteristics significantly impact user experience and operational efficiency for AI applications. Training speed, inference latency, and throughput vary across platforms based on hardware, software optimization, and network architecture.

Training performance depends heavily on accelerator choice and framework optimization. AWS’s P4d instances, each with eight NVIDIA A100 GPUs, are built for large-scale deep learning training. Google’s TPU v4 pods offer competitive performance, particularly for TensorFlow and JAX workloads, and can deliver better performance-per-dollar for certain model architectures. Because results depend on the model, batch size, and software stack, compare platforms on your own workload rather than on published peak figures.
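
To make the performance-per-dollar comparison concrete, here is a minimal sketch in Python. The option names, hourly prices, and throughput figures are hypothetical placeholders, not published numbers from any provider; replace them with prices from the current price lists and throughput measured on your own model.

```python
from dataclasses import dataclass


@dataclass
class AcceleratorOption:
    name: str
    hourly_price_usd: float    # hypothetical price per instance-hour
    samples_per_second: float  # hypothetical throughput measured on your model


def cost_per_epoch(option: AcceleratorOption, samples_per_epoch: int) -> float:
    """Return the USD cost of one training epoch at the measured throughput."""
    seconds = samples_per_epoch / option.samples_per_second
    return option.hourly_price_usd * seconds / 3600


# Illustrative values only; neither row describes a real instance type.
options = [
    AcceleratorOption("gpu-instance", hourly_price_usd=30.0, samples_per_second=10_000),
    AcceleratorOption("tpu-slice", hourly_price_usd=24.0, samples_per_second=9_000),
]
SAMPLES_PER_EPOCH = 36_000_000

for opt in options:
    print(f"{opt.name}: ${cost_per_epoch(opt, SAMPLES_PER_EPOCH):.2f} per epoch")

cheapest = min(options, key=lambda o: cost_per_epoch(o, SAMPLES_PER_EPOCH))
print("Lowest cost per epoch:", cheapest.name)
```

In this made-up example the slower option still wins on cost because its hourly price is lower by a larger margin than its throughput, which is why raw speed alone is a poor basis for choosing an accelerator.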

Inference latency is critical for real-time applications such as fraud checks, voice assistants, and recommendations. AWS Inferentia chips target low-latency inference at lower cost than comparable GPU-based inference. Azure supports ONNX Runtime, which accelerates inference across a range of hardware. Google Cloud’s private global network can reduce latency for geographically distributed users.
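
Latency is best compared with percentiles rather than averages, because a small number of slow requests dominates user experience. The sketch below uses only the Python standard library; `fake_inference` is a hypothetical stand-in for a real call to whichever endpoint you are evaluating, and the run counts are arbitrary defaults.

```python
import statistics
import time
from typing import Callable, Dict, List


def summarize(samples_ms: List[float]) -> Dict[str, float]:
    """Return p50, p95, p99, and max for a list of latency samples in milliseconds."""
    # quantiles(n=100) returns 99 cut points; index k-1 is the k-th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {"p50": cuts[49], "p95": cuts[94], "p99": cuts[98], "max": max(samples_ms)}


def measure_latency_ms(call: Callable[[], object], runs: int = 200, warmup: int = 10) -> Dict[str, float]:
    """Time repeated invocations of `call` after a short warm-up."""
    for _ in range(warmup):
        call()  # warm caches and connections before timing
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        call()
        samples.append((time.perf_counter() - start) * 1000.0)
    return summarize(samples)


def fake_inference() -> int:
    # Hypothetical placeholder: swap in a request to your deployed model.
    return sum(i * i for i in range(1000))


stats = measure_latency_ms(fake_inference)
print({name: round(value, 3) for name, value in stats.items()})
```

Running the same harness from the regions where your users are located gives a fairer comparison than a single measurement taken next to the data center.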

Geographic distribution of data centers affects latency for applications serving international users. All three providers operate dozens of regions worldwide. AWS emphasizes multiple availability zones within each region, Azure advertises one of the largest region counts, and Google Cloud leverages its private global network for efficient inter-region communication. Region counts change frequently, so check each provider’s current list against the locations of your users.

Scalability and Throughput

Scalability requirements vary from prototypes handling dozens of requests to production systems processing millions. All three platforms support massive scale, but their approaches differ.

AWS scales through services like Lambda for serverless inference and SageMaker endpoints with auto-scaling, subject to account quotas that can usually be raised on request. The platform’s maturity means most scaling patterns are well-documented, and numerous third-party tools exist for optimization.

Azure provides comparable scalability with Azure Functions for serverless deployment and Azure Kubernetes Service (AKS) for containerized workloads. Azure Traffic Manager adds DNS-based global load balancing across multiple regions.

Google Cloud excels in handling massive scale through its experience running consumer services. Cloud Run offers excellent serverless inference capabilities, and Google Kubernetes Engine (GKE) benefits from Google’s Kubernetes expertise. GKE’s Autopilot mode automates much of cluster management.
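
Auto-scaling on all three platforms follows the same basic idea: compare an observed metric with a target and adjust capacity proportionally. The sketch below implements the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, which applies to EKS, AKS, and GKE alike. The function name, the default limits, and the example numbers are illustrative assumptions, and real autoscalers add tolerances and cooldown periods that are omitted here.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 100,
) -> int:
    """Proportional scaling: ceil(current_replicas * current_metric / target_metric)."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * current_metric / target_metric)
    # Clamp to the configured bounds so a metric spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))


# Four replicas each serving 90 requests/s against a target of 60 requests/s.
print(desired_replicas(4, current_metric=90, target_metric=60))
# Ten replicas each serving 20 requests/s against the same target scale down.
print(desired_replicas(10, current_metric=20, target_metric=60))
```

The upper bound matters as much as the formula: it is the main guard against a traffic spike or a misbehaving client turning into an unexpected bill.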

Migration and Multi-Cloud Strategies

Organizations increasingly adopt multi-cloud strategies to avoid vendor lock-in, optimize costs, and leverage best-of-breed services. Successfully implementing multi-cloud AI requires careful planning around data portability, model compatibility, and operational complexity.

Containerization using Docker and Kubernetes provides a path to portability across cloud platforms. All three providers offer managed Kubernetes services: AWS EKS, Azure AKS, and Google GKE. Deploying machine learning models in containers allows organizations to move workloads between clouds, though provider-specific services may complicate migration.

Open-source frameworks reduce lock-in by enabling model portability. TensorFlow, PyTorch, and scikit-learn models can generally move between platforms, though provider-specific optimizations may not transfer. ONNX format provides additional portability, particularly relevant for Azure users given Microsoft’s strong ONNX support.

Data gravity often determines cloud strategy more than application portability. Moving large datasets between clouds incurs egress fees and time delays. Organizations may choose to keep data on one platform while accessing it from applications running elsewhere, accepting the latency and bandwidth costs.
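
A quick back-of-the-envelope calculation shows why data gravity carries so much weight. The sketch below estimates the egress fee and transfer time for moving a dataset between clouds. The per-gigabyte price, link speed, and efficiency factor are assumptions chosen for illustration, not quotes from any provider, and real pricing is usually tiered.

```python
def egress_cost_usd(size_gb: float, price_per_gb_usd: float) -> float:
    """Flat-rate estimate of the egress fee; real price lists are usually tiered."""
    return size_gb * price_per_gb_usd


def transfer_hours(size_gb: float, bandwidth_gbps: float, efficiency: float = 0.8) -> float:
    """Hours needed to move size_gb over a link, assuming a usable fraction of bandwidth."""
    gigabits = size_gb * 8  # 1 gigabyte = 8 gigabits
    seconds = gigabits / (bandwidth_gbps * efficiency)
    return seconds / 3600


# Hypothetical scenario: 50 TB dataset, $0.09 per GB, 1 Gbps link at 80% efficiency.
DATASET_GB = 50_000
cost = egress_cost_usd(DATASET_GB, price_per_gb_usd=0.09)
hours = transfer_hours(DATASET_GB, bandwidth_gbps=1.0)
print(f"Estimated egress fee: ${cost:,.0f}")
print(f"Estimated transfer time: {hours:.1f} hours ({hours / 24:.1f} days)")
```

Under these assumed figures a one-time move costs thousands of dollars and takes several days, which is why many teams leave the data in place and move only models or aggregated results.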

Hybrid Cloud Considerations

Hybrid cloud deployments combine on-premises infrastructure with cloud services, common in regulated industries or organizations with significant existing investments. Each platform offers hybrid capabilities but with different approaches.

AWS Outposts brings AWS services on-premises, allowing organizations to run SageMaker and other services in their data centers. This approach maintains consistency between cloud and on-premises environments but requires managing physical infrastructure.

Azure Stack and Azure Arc provide the most mature hybrid capabilities among the three platforms. Azure Arc extends Azure management to any infrastructure, including competitors’ clouds. For organizations with significant on-premises investments, particularly in Microsoft technologies, Azure’s hybrid story is compelling.

Google Anthos enables running Google Cloud services on-premises and on competitor clouds. The Kubernetes-based approach provides consistency across environments. However, Anthos adoption has been slower than Azure’s hybrid offerings, partly due to its relative complexity and Google Cloud’s smaller overall market presence.

Conclusion

Choosing between AWS, Azure, and Google Cloud for AI and machine learning services ultimately depends on your organization’s specific requirements, existing technology investments, and strategic priorities. AWS offers the broadest service catalog and most mature ecosystem, making it ideal for organizations requiring comprehensive cloud capabilities and extensive third-party integrations. Azure excels in enterprise scenarios, particularly for organizations already invested in the Microsoft ecosystem, and provides unique advantages through the Azure OpenAI Service for accessing cutting-edge generative AI models.

Google Cloud stands out for its AI innovation, superior data analytics capabilities, competitive pricing, and user-friendly interfaces, appealing to organizations prioritizing technical excellence and modern development practices. While all three platforms provide robust, enterprise-grade AI services with strong security and compliance features, success depends less on choosing the “best” platform and more on selecting the one that aligns with your team’s expertise, business requirements, and long-term strategic vision for artificial intelligence adoption.