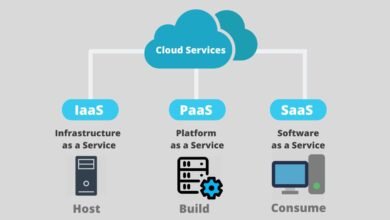

The convergence of cloud-native technologies and artificial intelligence has revolutionized how organizations build, deploy, and scale AI applications. Cloud-native AI development represents a paradigm shift that combines the flexibility of cloud infrastructure with the computational demands of machine learning workloads. As businesses increasingly adopt AI-driven solutions, the tools and methodologies for cloud-native AI have become essential for developers, data scientists, and DevOps teams.

Traditional AI development often faces challenges related to scalability, resource management, and deployment complexity. Cloud-native architectures address these limitations by leveraging containerization, orchestration platforms, and microservices-based designs that enable seamless scaling and resource optimization. This approach transforms how AI models are developed, trained, and deployed across distributed environments.

The cloud-native ecosystem offers numerous advantages for AI workloads, including elastic resource allocation, automated scaling, and improved collaboration between cross-functional teams. Organizations can now build AI applications that adapt dynamically to changing demands while maintaining high availability and performance. The integration of MLOps practices with cloud-native tools has further streamlined the entire machine learning lifecycle, from data preparation to model monitoring.

Modern cloud-native AI development relies on a robust toolkit that includes container orchestration platforms like Kubernetes, containerization technologies such as Docker, and specialized frameworks designed for machine learning operations. These tools work in concert to create reproducible, scalable, and maintainable AI systems. Whether you’re deploying deep learning models for image recognition or building conversational AI assistants, cloud-native approaches provide the foundation for enterprise-grade solutions.

This comprehensive guide explores the essential tools and best practices that define successful cloud-native AI development. We’ll examine the architectural principles, deployment strategies, and operational frameworks that enable organizations to harness the full potential of AI in cloud environments. From core concepts to implementing production-ready solutions, this article provides actionable insights for building scalable, efficient, and secure AI infrastructures that drive business value.

Cloud-Native AI Development

What is Cloud-Native AI

Cloud-native AI refers to the practice of building and deploying artificial intelligence applications using cloud-native principles and technologies. This approach leverages containerization, dynamic orchestration, and microservices architectures to create AI systems that are inherently scalable, resilient, and portable across different cloud environments. Unlike traditional monolithic AI deployments, cloud-native AI systems are designed to fully exploit cloud infrastructure capabilities.

The fundamental distinction of cloud-native AI development lies in its architectural approach. Applications are decomposed into smaller, independent services that can be developed, deployed, and scaled individually. This modular design allows teams to update specific AI components without affecting the entire system, enabling faster iteration cycles and reduced deployment risks. Containerization ensures that AI models run consistently across development, testing, and production environments.

Core Principles of Cloud-Native Architecture

Cloud-native architectures for AI workloads are built on several foundational principles. First, containerization packages AI models and their dependencies into isolated, portable units that run consistently across any infrastructure. Second, orchestration through platforms like Kubernetes automates deployment, scaling, and management of containerized applications. Third, microservices decompose complex AI systems into loosely coupled services that communicate through well-defined APIs.

Immutability represents another crucial principle where deployed containers are never modified in place; instead, new versions are deployed to replace old ones. This approach enhances reliability and simplifies rollback procedures when issues arise. Declarative configuration allows teams to specify desired system states rather than step-by-step procedures, enabling infrastructure-as-code practices. These principles collectively create AI systems that are more maintainable, scalable, and resilient.

Benefits of Cloud-Native AI Development

Organizations adopting cloud-native AI development experience significant advantages in scalability and resource efficiency. Horizontal scaling capabilities allow AI applications to handle varying workloads by automatically adjusting the number of running instances. This elasticity optimizes costs by allocating resources only when needed, particularly important for computationally intensive machine learning workloads that may have sporadic demand patterns.

Faster deployment cycles represent another compelling benefit. Cloud-native tools enable continuous integration and continuous deployment (CI/CD) pipelines that automate testing and deployment processes. Data scientists can push model updates to production more frequently with reduced risk, accelerating the time-to-market for AI innovations. The standardized environments created through containerization eliminate the notorious “it works on my machine” problem that plagues traditional deployments.

Improved collaboration across teams emerges naturally from cloud-native practices. Data scientists, developers, and operations teams work within shared frameworks and toolchains, breaking down organizational silos. Version control for models, reproducible experiments, and automated testing creates transparency and accountability throughout the AI lifecycle. This collaborative environment enhances productivity and reduces errors in production deployments.

Essential Tools for Cloud-Native AI Development

Kubernetes: The Orchestration Foundation

Kubernetes has become the de facto standard for orchestrating containerized AI workloads in cloud-native environments. This open-source platform automates deployment, scaling, and management of containerized applications, making it ideal for the complex requirements of AI and machine learning systems. Kubernetes provides robust mechanisms for resource allocation, load balancing, and self-healing that are essential for production AI applications.

For AI workloads, Kubernetes supports GPU scheduling through device plugins, which lets pods request GPUs as schedulable resources and helps keep expensive accelerators busy. The platform’s declarative configuration model allows teams to define desired states for AI deployments, and Kubernetes continuously reconciles the cluster toward those states. Horizontal pod autoscaling adjusts the number of running instances based on CPU utilization or custom metrics, helping maintain performance as demand varies.
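The scaling rule behind horizontal pod autoscaling can be written down in a few lines. The sketch below follows the ratio formula given in the Kubernetes documentation (desired replicas = ceil(current replicas × current metric / target metric), with a default tolerance of 0.1). The function name, the replica bounds, and the example numbers are illustrative assumptions, and the real controller also handles details such as stabilization windows and pods that are not ready.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the HPA ratio rule (simplified sketch)."""
    ratio = current_metric / target_metric
    # Inside the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds (hypothetical defaults here).
    return max(min_replicas, min(max_replicas, proposed))


# Four replicas averaging 90% CPU against a 60% target.
print(desired_replicas(4, current_metric=90.0, target_metric=60.0))  # 6
```

The same function applies to custom metrics such as requests per second per pod, as long as the current and target values use the same unit.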

Kubeflow extends Kubernetes specifically for machine learning workflows. This toolkit provides components for every stage of the ML lifecycle, including Jupyter notebooks for development, pipelines for workflow orchestration, and serving infrastructure for model deployment. Kubeflow Pipelines enable data scientists to create reproducible, portable ML workflows that can run anywhere Kubernetes is available, promoting consistency across environments.

Docker and Containerization Technologies

Docker revolutionized application deployment by introducing lightweight, portable containers that package applications with their dependencies. For AI development, Docker containers solve the dependency management nightmare that often accompanies complex machine learning frameworks. A Docker image encapsulates the ML model, runtime environment, libraries, and configuration files, ensuring consistent behavior across different infrastructures.

Creating Docker images for AI applications follows best practices that optimize performance and security. Using minimal base images reduces container size and attack surface. Multi-stage builds separate development dependencies from production runtime, creating leaner final images. Layer caching accelerates build times by reusing unchanged layers. These optimizations are particularly important for AI models that may require frequent updates and redeployments.

Docker Compose facilitates multi-container development environments where AI applications comprise multiple interconnected services. Data scientists can define entire stacks, including model serving containers, database services, and monitoring tools in a single configuration file. This approach simplifies local development and testing before deploying to Kubernetes in production, creating seamless transitions between development and production environments.

MLOps Platforms and Frameworks

MLOps platforms bridge the gap between machine learning development and production operations, applying DevOps principles to the ML lifecycle. These platforms provide integrated tools for experiment tracking, model versioning, deployment automation, and monitoring. MLflow, an open-source platform, offers comprehensive experiment tracking that records parameters, metrics, and artifacts for every training run, enabling reproducibility and comparison across experiments.

Kubeflow represents a complete MLOps solution built specifically for Kubernetes. It provides pre-built components for distributed training, hyperparameter tuning, and model serving. The platform’s pipeline functionality creates directed acyclic graphs (DAGs) that define ML workflows, enabling version control and automated execution. Kubeflow integrates with popular ML frameworks, including TensorFlow, PyTorch, and scikit-learn, providing flexibility for diverse use cases.

Cloud provider-managed MLOps platforms offer additional convenience and integration. Amazon SageMaker provides a fully managed service for building, training, and deploying machine learning models at scale. Azure Machine Learning delivers similar capabilities within the Azure ecosystem, while Vertex AI (the successor to Google’s AI Platform) serves GCP users. These managed services abstract infrastructure complexity, allowing teams to focus on model development rather than operational concerns.

Model Serving and Inference Tools

Model serving represents a critical component of cloud-native AI systems, handling the deployment and execution of trained models in production. TensorFlow Serving provides high-performance serving for TensorFlow models with features like model versioning, request batching, and GPU acceleration. Its gRPC and REST APIs enable easy integration with various client applications, making it suitable for diverse deployment scenarios.

Seldon Core offers a more framework-agnostic approach to model serving on Kubernetes. This platform supports multiple ML frameworks and provides advanced deployment patterns, including A/B testing, canary deployments, and multi-armed bandits. Seldon Core wraps models in containers and manages their lifecycle within Kubernetes clusters, providing consistent serving infrastructure regardless of the underlying ML framework used.

KServe (formerly KFServing) extends Kubernetes with serverless inference capabilities for machine learning models. It provides autoscaling based on demand, reducing costs during low-traffic periods while ensuring responsiveness during peaks. KServe supports multiple frameworks through a standardized prediction interface and integrates seamlessly with Kubeflow for end-to-end ML pipelines. The platform handles model versioning, traffic splitting, and explainability features out of the box.

Monitoring and Observability Tools

Monitoring AI applications requires specialized tools that track both infrastructure metrics and model-specific performance indicators. Prometheus, the standard monitoring solution for Kubernetes, collects time-series metrics from applications and infrastructure. For AI workloads, custom metrics track prediction latency, throughput, and resource utilization. Prometheus’s flexible query language enables complex analytics and alerting based on these metrics.

Grafana complements Prometheus by providing visualization dashboards that display metrics in real-time. Teams can create comprehensive dashboards showing model performance, infrastructure health, and business metrics side by side. For ML models, specific visualizations track accuracy drift, prediction distributions, and error rates over time, enabling proactive identification of model degradation or data drift issues.

Specialized ML monitoring platforms like Evidently AI and Fiddler focus specifically on model performance and data quality. These tools detect concept drift, data drift, and model bias that may not be apparent from infrastructure metrics alone. They provide model-specific insights, including feature importance changes, prediction distribution shifts, and fairness metrics. Integrating these specialized tools with general monitoring infrastructure creates comprehensive observability for cloud-native AI systems.
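To make data drift concrete, here is a minimal sketch of one common drift statistic, the Population Stability Index (PSI), computed for a single numeric feature. It is not taken from any of the tools named above. The bin count, the epsilon floor, and the sample data are assumptions chosen for illustration.

```python
import math


def population_stability_index(reference, current, bins=10, eps=1e-6):
    """PSI between a reference sample and a current sample of one feature."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to 1.0 if constant.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the reference range land in the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


training_feature = [i / 100 for i in range(1000)]      # synthetic reference data
shifted_feature = [x + 5.0 for x in training_feature]  # synthetic drifted data
print(population_stability_index(training_feature, training_feature))  # 0.0
print(population_stability_index(training_feature, shifted_feature) > 0.25)  # True
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift, but the threshold is a convention rather than a guarantee and is worth tuning per feature.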

Best Practices for Cloud-Native AI Development

Infrastructure Design and Architecture

Designing robust cloud-native AI infrastructure begins with proper resource planning and segmentation. Separate environments for development, staging, and production ensure that experimental work doesn’t impact production systems. Namespace isolation in Kubernetes provides logical separation between projects and teams, enhancing security and resource management. Resource quotas prevent individual workloads from consuming excessive cluster resources.

Infrastructure as Code (IaC) practices use tools like Terraform or Pulumi to define and manage cloud resources programmatically. This approach creates reproducible environments and enables version control for infrastructure changes. For AI workloads, IaC ensures consistent provisioning of GPU nodes, storage systems, and networking configurations. Teams can rapidly recreate entire environments for disaster recovery or multi-region deployments.

High availability considerations are crucial for production AI applications. Deploying models across multiple availability zones ensures continued operation during infrastructure failures. Load balancers distribute inference requests across multiple model replicas, preventing single points of failure. Regular backup procedures for model artifacts and training data protect against data loss. These architectural decisions create resilient AI systems that maintain availability even during disruptions.

Containerization Best Practices

Creating optimized Docker images for AI models requires attention to several key factors. Using official framework base images from trusted repositories ensures security and compatibility. Minimizing image layers by combining related commands reduces image size and build time. Multi-stage builds separate build dependencies from runtime requirements, creating production images that contain only necessary components.

Security scanning of container images identifies vulnerabilities in dependencies before deployment. Tools like Trivy and Grype scan images for known security issues and can be integrated into CI/CD pipelines to block vulnerable images from reaching production. Regular image updates and patching processes address newly discovered vulnerabilities. For AI applications, these practices protect sensitive model intellectual property and training data.

Version tagging strategies maintain clarity about deployed model versions. Semantic versioning communicates the nature of changes between releases, while immutable tags prevent confusion about image contents. Tagging images with both version numbers and git commit hashes creates traceability between code and deployed artifacts. This versioning discipline is essential for MLOps practices that require reproducibility and rollback capabilities.
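A tagging scheme like the one described can be generated in a CI step. The sketch below is one possible convention, not a standard: it validates a plain `MAJOR.MINOR.PATCH` version and emits a version tag plus a version-and-commit tag. The repository name and commit hash are hypothetical.

```python
import re

SEMVER = re.compile(r"\d+\.\d+\.\d+")
COMMIT = re.compile(r"[0-9a-f]{7,40}")


def image_tags(repository: str, version: str, commit_sha: str) -> list[str]:
    """Build image references carrying both the version and the git commit."""
    if not SEMVER.fullmatch(version):
        raise ValueError(f"expected MAJOR.MINOR.PATCH, got {version!r}")
    if not COMMIT.fullmatch(commit_sha):
        raise ValueError(f"expected a hex commit hash, got {commit_sha!r}")
    short_sha = commit_sha[:7]
    return [
        f"{repository}:{version}",              # human-readable release tag
        f"{repository}:{version}-{short_sha}",  # traceable back to the commit
    ]


for tag in image_tags("registry.example.com/fraud-model", "1.4.2",
                      "3f9c2ab7d1e04c55a8b6"):
    print(tag)
```

Rejecting free-form tags such as `latest` at this point is a deliberate choice: it keeps every deployed image traceable to one version and one commit.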

CI/CD for Machine Learning

Continuous integration for machine learning extends traditional software CI practices with ML-specific testing. Automated tests verify model performance against benchmark datasets, ensuring that code changes don’t degrade accuracy. Unit tests validate data preprocessing pipelines and feature engineering logic. Integration tests confirm that models integrate correctly with serving infrastructure and downstream services.

Continuous deployment pipelines automate the path from model training to production serving. Successful training runs trigger automated deployment to staging environments where additional validation occurs. Gradual rollout strategies, like canary deployments, expose new models to small percentages of traffic before full deployment. Automated monitoring watches for performance degradation during rollouts, enabling automatic rollback if issues arise.
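The rollback decision during a canary rollout can be reduced to a small gate function. This is a simplified sketch under assumed thresholds (minimum sample size, allowed error-rate ratio), not the logic of any particular rollout tool. A production gate would typically also compare latency and model-quality metrics.

```python
def canary_decision(baseline_errors: int, baseline_total: int,
                    canary_errors: int, canary_total: int,
                    min_requests: int = 500, max_error_ratio: float = 1.5,
                    min_baseline_rate: float = 0.001) -> str:
    """Return 'wait', 'promote', or 'rollback' for a canary model version."""
    # Too little canary traffic to judge either way.
    if canary_total < min_requests:
        return "wait"
    baseline_rate = baseline_errors / baseline_total if baseline_total else 0.0
    # Floor the baseline so a near-zero error rate does not make the gate
    # impossibly strict.
    baseline_rate = max(baseline_rate, min_baseline_rate)
    canary_rate = canary_errors / canary_total
    if canary_rate > baseline_rate * max_error_ratio:
        return "rollback"
    return "promote"


print(canary_decision(50, 10_000, 2, 100))     # wait
print(canary_decision(50, 10_000, 5, 1_000))   # promote
print(canary_decision(50, 10_000, 20, 1_000))  # rollback
```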

GitOps practices store all configuration in version-controlled repositories and use automated processes to synchronize declared states with running systems. For AI workloads, GitOps manages Kubernetes manifests, model configurations, and deployment parameters. Changes to models or infrastructure follow standard code review processes, creating audit trails and enabling collaborative development. Tools like ArgoCD or Flux implement GitOps workflows for Kubernetes environments.

Model Management and Versioning

Model versioning maintains clear records of every model iteration, including training data, hyperparameters, code versions, and resulting metrics. Model registries like MLflow Model Registry or Kubeflow’s Model Registry provide centralized storage for model artifacts with associated metadata. Teams can compare models across experiments, promote successful models to production, and maintain lineage information that traces models back to their source data and code.

A/B testing infrastructure enables data-driven decisions about model updates. Traffic splitting routes specified percentages of requests to different model versions, allowing direct comparison of their performance on real production traffic. Automated analysis determines which version performs better based on predefined success metrics. This approach reduces risk when deploying new models and provides empirical evidence for model improvement.
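Traffic splitting is usually handled by the serving layer or service mesh, but the underlying idea is simple enough to sketch. The example below assigns each request key to a model version by hashing it into weighted buckets, so the same user consistently sees the same version. The variant names and weights are hypothetical.

```python
import hashlib


def assign_variant(request_key: str, weights: dict[str, int]) -> str:
    """Deterministically map a key (for example a user ID) to a model version."""
    total = sum(weights.values())
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    # Use the first 8 bytes of the hash as a stable pseudo-random bucket.
    bucket = int.from_bytes(digest[:8], "big") % total
    cumulative = 0
    for variant, weight in weights.items():
        cumulative += weight
        if bucket < cumulative:
            return variant
    raise ValueError("weights must be positive integers")


split = {"model-a": 90, "model-b": 10}  # roughly 90% / 10% of keys
counts = {name: 0 for name in split}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", split)] += 1
print(counts)
```

Hashing a stable key rather than drawing a random number per request keeps each user's experience consistent, which makes per-variant metrics easier to interpret.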

Model governance establishes processes and policies for managing models throughout their lifecycle. Documentation requirements ensure that each model has clear descriptions of its purpose, training data, limitations, and expected performance. Approval workflows require stakeholder sign-off before production deployment. Audit logs track who deployed which models and when. These governance practices create accountability and support regulatory compliance requirements.

Resource Optimization and Scaling

Resource allocation for AI workloads balances performance requirements with cost efficiency. Right-sizing involves selecting appropriate instance types and quantities based on actual workload characteristics. GPU-accelerated instances provide superior performance for training and inference of deep learning models, while CPU instances may suffice for simpler models or preprocessing tasks. Profiling tools identify bottlenecks and guide resource allocation decisions.

Autoscaling policies adjust resources dynamically based on workload demands. Horizontal scaling adds or removes model serving replicas based on request rates or queue lengths. Vertical scaling adjusts CPU and memory allocations for individual pods. For AI applications, custom metrics like inference latency or GPU utilization trigger scaling actions. Proper autoscaling configuration maintains performance during traffic spikes while minimizing costs during quiet periods.

Batch inference strategies process multiple predictions simultaneously, improving throughput and reducing per-prediction costs. Request batching collects individual requests over a short time window and processes them together. Offline batch processing handles large-scale inference jobs efficiently by leveraging spot instances or other low-cost compute options. These strategies optimize resource utilization for AI workloads with diverse performance requirements.
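Request batching can be illustrated with a small simulation. The sketch below groups requests by arrival time: a batch closes when it reaches a maximum size or when a request arrives after the wait window has passed. The parameter names and timings are assumptions. Serving frameworks implement this with queues and timers rather than a pre-sorted list.

```python
def micro_batches(arrivals, max_batch_size=4, max_wait_ms=10.0):
    """Group (arrival_ms, payload) pairs, sorted by time, into batches."""
    batches, current, window_start = [], [], None
    for arrival_ms, payload in arrivals:
        # The window for the open batch has expired: flush it first.
        if current and arrival_ms - window_start > max_wait_ms:
            batches.append(current)
            current = []
        if not current:
            window_start = arrival_ms  # first request opens a new window
        current.append(payload)
        if len(current) == max_batch_size:
            batches.append(current)
            current = []
    if current:
        batches.append(current)
    return batches


requests = [(0, "a"), (2, "b"), (3, "c"),
            (30, "d"), (31, "e"), (32, "f"), (33, "g"), (34, "h")]
print(micro_batches(requests))
# [['a', 'b', 'c'], ['d', 'e', 'f', 'g'], ['h']]
```

The wait window is the trade-off knob: a longer window yields fuller batches and better throughput at the cost of added latency for the first request in each batch.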

Security and Compliance

Security for cloud-native AI systems encompasses multiple layers from infrastructure to application. Network policies in Kubernetes restrict communication between pods, implementing zero-trust principles. Service mesh technologies like Istio provide mutual TLS authentication between services and fine-grained access control. These measures protect sensitive model intellectual property and customer data processed by AI applications.

Data privacy considerations are paramount when handling training data and inference requests. Encryption protects data at rest and in transit. Access control policies limit who can view or modify sensitive data. For applications handling personal information, privacy-preserving techniques like differential privacy or federated learning may be appropriate. Compliance with regulations like GDPR or HIPAA requires careful attention to data handling practices throughout the ML lifecycle.

Model security addresses threats specific to AI systems. Adversarial example detection identifies inputs designed to fool models. Model inversion attack prevention protects against attempts to extract training data from deployed models. Regular security audits assess the attack surface of AI applications. Rate limiting and authentication prevent abuse of model serving endpoints. These measures protect both the AI system and its users from security threats.
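Rate limiting for a model endpoint is often implemented at a gateway or ingress, but the token bucket idea behind many limiters fits in a few lines. This is a minimal single-client sketch. The rate and capacity values are assumptions, and the clock is passed in explicitly so the behavior is easy to test (a real service would typically use `time.monotonic()`).

```python
class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate_per_sec` tokens/second."""

    def __init__(self, rate_per_sec: float, capacity: float):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.last = 0.0

    def allow(self, now: float) -> bool:
        # Refill according to the time elapsed since the previous call.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


bucket = TokenBucket(rate_per_sec=1.0, capacity=2)
print([bucket.allow(t) for t in (0.0, 0.0, 0.0, 1.0, 1.0)])
# [True, True, False, True, False]
```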

Implementation Strategies

Setting Up Your Cloud-Native AI Environment

Establishing a cloud-native AI development environment begins with selecting appropriate cloud infrastructure. Public cloud providers like AWS, Azure, and GCP offer managed Kubernetes services (EKS, AKS, GKE) that simplify cluster management. These managed services handle control plane operations, security patches, and scaling, allowing teams to focus on AI workloads rather than cluster administration.

Installing essential tooling creates a functional development environment. kubectl enables command-line interaction with Kubernetes clusters. Helm simplifies the deployment of complex applications through packaged charts. Cloud-specific CLI tools (aws-cli, az, gcloud) facilitate resource management and authentication. Container runtime tools like Docker Desktop enable local development and testing before deploying to shared clusters.

Configuring namespaces and resource quotas establishes organizational structure within clusters. Separate namespaces for data science teams, development, and production workloads provide isolation and prevent resource conflicts. Role-based access control (RBAC) policies define who can deploy and modify resources in each namespace. These foundational configurations create a secure, organized environment for cloud-native AI development.

Deploying Your First AI Model

Deploying an AI model to Kubernetes begins with containerizing the model and its serving logic. Creating a Docker image packages the trained model file, serving framework (like Flask or FastAPI), and dependencies. The Dockerfile defines build instructions, starting from a lightweight Python base image, installing required libraries, copying the model file, and exposing the serving endpoint.
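The serving logic that goes into such an image can be quite small. The sketch below uses only Python's standard library instead of Flask or FastAPI so that it runs without extra dependencies. The "model" is a placeholder linear function, and the `/predict` and `/healthz` paths, the port, and the payload shape are all assumptions made for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

WEIGHTS = [0.4, -0.2, 0.1]  # placeholder parameters standing in for a model file
BIAS = 0.05


def predict(features):
    """Score one feature vector with the placeholder linear model."""
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


class InferenceHandler(BaseHTTPRequestHandler):
    def _send(self, status, body):
        payload = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)

    def do_GET(self):
        # Health endpoint that a Kubernetes probe could call.
        if self.path == "/healthz":
            self._send(200, {"status": "ok"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self):
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            features = json.loads(self.rfile.read(length))["features"]
            self._send(200, {"prediction": predict(features)})
        except (ValueError, KeyError, TypeError) as exc:
            self._send(400, {"error": str(exc)})


def serve(port: int = 8080):
    """Start the server; the container's entrypoint would call this."""
    HTTPServer(("0.0.0.0", port), InferenceHandler).serve_forever()
```

Calling `serve()` starts the endpoint, after which a POST to `/predict` with a body such as `{"features": [1.0, 1.0, 1.0]}` returns a JSON prediction.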

Building and pushing the Docker image to a container registry makes it available for Kubernetes deployment. Cloud provider registries (Amazon ECR, Azure ACR, and Google Artifact Registry, which replaces the older GCR) offer secure, high-performance storage for container images. For private registries, image pull secrets give Kubernetes the credentials it needs to pull images. Scanning the image before deployment checks it for known critical vulnerabilities.

Creating Kubernetes deployment manifests defines how the application runs in the cluster. YAML configuration files specify the container image, resource requirements (CPU, memory, GPU), replica count, and environment variables. Service definitions create stable network endpoints for accessing deployed models. LoadBalancer or Ingress resources expose services to external clients. Applying these manifests with kubectl deploys the AI model and makes it available for inference requests.
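Manifests are normally written as YAML, but Kubernetes also accepts JSON, so the same structure can be sketched as Python dictionaries. The example below builds a Deployment and a Service for a model server. The image name, port, environment variable, and resource figures are hypothetical, and `nvidia.com/gpu` assumes the NVIDIA device plugin is installed in the cluster.

```python
import json


def inference_deployment(name: str, image: str, replicas: int = 2,
                         gpus: int = 0) -> dict:
    """Deployment manifest for a model-serving container."""
    labels = {"app": name}
    limits = {"cpu": "2", "memory": "4Gi"}
    if gpus:
        limits["nvidia.com/gpu"] = gpus  # GPUs are requested through limits
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": 8080}],
        "env": [{"name": "MODEL_PATH", "value": "/models/model.bin"}],
        "resources": {"requests": {"cpu": "1", "memory": "2Gi"},
                      "limits": limits},
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels},
                         "spec": {"containers": [container]}},
        },
    }


def inference_service(name: str) -> dict:
    """ClusterIP Service giving the model pods a stable endpoint."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": "ClusterIP", "selector": {"app": name},
                 "ports": [{"port": 80, "targetPort": 8080}]},
    }


manifests = {
    "apiVersion": "v1",
    "kind": "List",
    "items": [
        inference_deployment("sentiment-model",
                             "registry.example.com/sentiment-model:1.4.2",
                             replicas=3, gpus=1),
        inference_service("sentiment-model"),
    ],
}
print(json.dumps(manifests, indent=2))
```

Saved to a file, this output could be applied with `kubectl apply -f`. Exposing the Service externally would still need a LoadBalancer type or an Ingress, as described above.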

Building ML Pipelines

Machine learning pipelines automate workflows from data ingestion through model deployment. Kubeflow Pipelines provides a platform for defining, orchestrating, and managing ML workflows. Pipeline components represent individual steps like data preprocessing, model training, evaluation, and deployment. These components can be reused across different pipelines, promoting consistency and reducing development time.

Pipeline definitions in Kubeflow use the Python SDK to create directed acyclic graphs (DAGs) representing workflow dependencies. Each component runs in its own container, ensuring isolation and reproducibility. Parameters allow customization of pipeline behavior without modifying code. Caching mechanisms reuse outputs from previous runs when inputs haven’t changed, accelerating development iterations.

Orchestrating complex workflows involves coordinating multiple components with dependencies. Training components may run only after data preprocessing completes. Model deployment waits for a successful evaluation. Conditional logic enables branching based on results, such as deploying only if evaluation metrics meet thresholds. These workflow patterns create robust, automated ML pipelines that require minimal manual intervention.
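The dependency ordering and conditional deployment described above can be sketched without any pipeline framework. The example below uses Python's standard `graphlib` module rather than the Kubeflow Pipelines SDK. The step bodies, the accuracy value, and the threshold are placeholders chosen for illustration.

```python
from graphlib import TopologicalSorter


def run_pipeline(accuracy_threshold: float = 0.9) -> list[str]:
    """Run placeholder steps in dependency order; deploy only if good enough."""
    state = {}
    executed = []

    def preprocess():
        state["rows"] = 1000          # stand-in for cleaned data

    def train():
        state["model"] = "model-v1"   # stand-in for a trained artifact

    def evaluate():
        state["accuracy"] = 0.93      # placeholder metric, not a real result

    def deploy():
        state["deployed"] = state["model"]

    steps = {"preprocess": preprocess, "train": train,
             "evaluate": evaluate, "deploy": deploy}
    # Each key lists the steps that must finish before it can start.
    dependencies = {"train": {"preprocess"},
                    "evaluate": {"train"},
                    "deploy": {"evaluate"}}

    for name in TopologicalSorter(dependencies).static_order():
        # Conditional branch: skip deployment when the metric is too low.
        if name == "deploy" and state["accuracy"] < accuracy_threshold:
            continue
        steps[name]()
        executed.append(name)
    return executed


print(run_pipeline(0.90))  # ['preprocess', 'train', 'evaluate', 'deploy']
print(run_pipeline(0.95))  # ['preprocess', 'train', 'evaluate']
```

In a real pipeline each step would run in its own container, and the orchestrator would handle retries and caching.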

Monitoring and Maintenance

Implementing comprehensive monitoring for cloud-native AI applications requires collecting metrics at multiple levels. Infrastructure metrics track CPU, memory, GPU utilization, and network performance across cluster nodes. Application metrics measure request rates, response times, and error rates for serving endpoints. Model-specific metrics include prediction accuracy, confidence scores, and feature distributions that indicate model performance.

Alerting rules notify teams when metrics exceed acceptable thresholds or anomalies occur. Prometheus Alertmanager routes notifications to appropriate channels like Slack, email, or PagerDuty. Alerts for high error rates, elevated latency, or resource exhaustion enable rapid response to issues. ML-specific alerts trigger when model accuracy degrades or data drift is detected, prompting model retraining or investigation.

Regular maintenance activities ensure continued system health and performance. Updating base images and dependencies addresses security vulnerabilities. Retraining models with fresh data maintains accuracy as real-world patterns evolve. Capacity planning reviews resource utilization trends and adjusts cluster size accordingly. Performance optimization identifies bottlenecks and implements improvements. These ongoing activities keep cloud-native AI systems running smoothly over time.

Advanced Patterns and Techniques

Distributed Training

Distributed training accelerates model development by parallelizing computation across multiple GPUs or machines. Data parallelism replicates the model across devices, with each device processing different data batches. Gradients are synchronized across devices to update model weights consistently. For many model architectures, throughput scales close to linearly with the number of devices until the communication overhead of gradient synchronization starts to dominate, which can substantially reduce training time.
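The gradient synchronization at the heart of data parallelism can be shown with a toy model in plain Python. In this sketch each simulated worker computes gradients on its own shard, and an averaging step stands in for the all-reduce that real frameworks perform over the network. The linear model, learning rate, and data are assumptions made for illustration.

```python
def gradient(w, b, batch):
    """Mean-squared-error gradient for y ~ w*x + b over one batch."""
    n = len(batch)
    dw = sum(2 * (w * x + b - y) * x for x, y in batch) / n
    db = sum(2 * (w * x + b - y) for x, y in batch) / n
    return dw, db


def all_reduce_mean(local_grads):
    """Average per-worker gradients (stand-in for a network all-reduce)."""
    k = len(local_grads)
    return tuple(sum(g[i] for g in local_grads) / k for i in range(2))


def data_parallel_step(w, b, data, workers, lr=0.01):
    # Every worker holds the same (w, b) but sees a different shard.
    shards = [data[i::workers] for i in range(workers)]
    local_grads = [gradient(w, b, shard) for shard in shards]
    dw, db = all_reduce_mean(local_grads)
    # All replicas apply the same averaged update and stay in sync.
    return w - lr * dw, b - lr * db


data = [(float(x), 3.0 * x + 1.0) for x in range(8)]
print(all_reduce_mean([gradient(0.0, 0.0, data[i::4]) for i in range(4)]))
print(gradient(0.0, 0.0, data))  # same values as the averaged shard gradients
```

With equally sized shards, the averaged gradient equals the full-batch gradient, which is why the replicas behave like one model trained on a larger batch.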

Model parallelism splits large models across multiple devices when they cannot fit in a single device’s memory. Different layers or components of the model run on different devices, with activations passed between them during forward and backward passes. This technique enables training of massive models that would be impossible on single devices. Pipeline parallelism further optimizes this by processing multiple micro-batches simultaneously.

Kubernetes facilitates distributed training through specialized operators. The Training Operator (formerly tf-operator, pytorch-operator) manages distributed training jobs for popular frameworks. It handles worker pod creation, configuration, and coordination. Gang scheduling ensures all workers for a training job start simultaneously, preventing deadlocks. These tools abstract the complexity of distributed training, making it accessible to more users.

Edge AI and Hybrid Deployments

Edge AI extends cloud-native principles to edge computing environments where low latency or offline operation is required. Lightweight Kubernetes distributions like K3s bring Kubernetes to resource-constrained edge devices. Model optimization techniques like quantization and pruning reduce model size and computational requirements, enabling deployment to edge hardware.
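Quantization is easiest to understand on a handful of numbers. The sketch below applies symmetric 8-bit quantization to a list of weights: one scale factor maps floats to integers in [-127, 127], and multiplying back recovers approximate values. Real toolchains quantize per tensor or per channel and often calibrate activations as well. The weights here are made up.

```python
def quantize_int8(weights):
    """Symmetric int8 quantization: returns (integer values, scale)."""
    max_abs = max(abs(w) for w in weights) or 1.0  # avoid a zero scale
    scale = max_abs / 127.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map the stored integers back to approximate float weights."""
    return [q * scale for q in quantized]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 100]
print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2 + 1e-12)  # True
```

Each weight now needs one byte instead of four (for 32-bit floats), at the cost of a rounding error bounded by half the scale.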

Hybrid deployments distribute AI workloads across cloud and edge infrastructure based on requirements. Latency-sensitive predictions run on edge devices close to data sources, while model training and heavy processing occur in the cloud. Cloud-native tools facilitate this architecture through consistent deployment patterns and management interfaces across environments.

Edge device management platforms coordinate deployments to distributed fleets of devices. GitOps workflows push configuration and model updates to edge locations. Monitoring aggregates metrics from edge devices into cloud platforms for centralized visibility. Bandwidth-efficient update mechanisms use differential updates or compressed models. These patterns enable scalable management of AI applications across geographically distributed infrastructure.

Multi-Cloud and Hybrid Cloud Strategies

Multi-cloud strategies distribute AI workloads across multiple cloud providers, avoiding vendor lock-in and leveraging best-of-breed services. Containerization and Kubernetes provide portability, enabling applications to run on any cloud. Abstraction layers like Crossplane manage infrastructure across providers through unified APIs. These approaches create flexibility to optimize for cost, performance, or compliance requirements.

Hybrid cloud architectures combine on-premises infrastructure with public cloud resources. Organizations may keep sensitive training data on-premises while using cloud GPU resources for model training. Multi-cluster management tools, such as Kubernetes cluster federation, coordinate deployments across clusters regardless of location. Service meshes extend networking across environments, enabling communication between components that run in different clusters.

Managing multi-cloud AI deployments requires careful consideration of data gravity and network latency. Placing model serving close to data sources minimizes latency. Replicating models across regions improves availability and reduces response times for geographically distributed users. Comparing spot instance pricing across clouds can lower costs for interruptible workloads. Together, these considerations lead to efficient, resilient multi-cloud AI architectures.

Common Challenges and Solutions

Managing Computational Resources

Computational demands of AI workloads create resource management challenges. GPU scarcity and high costs require efficient utilization strategies. GPU sharing through time-slicing or multi-instance GPUs (MIG) allows multiple workloads to use the same device. Resource quotas prevent individual experiments from monopolizing cluster capacity. Priority classes ensure critical production workloads preempt less important jobs when resources are scarce.

Cost optimization balances performance requirements with budget constraints. Spot instances offer significant discounts for interruptible workloads like model training. Autoscaling adjusts resource allocation dynamically, avoiding overprovisioning. Storage tiering uses faster expensive storage for active projects and cheaper storage for archived data. These strategies control costs while maintaining necessary performance for AI development.

Handling Data Pipeline Complexity

Data pipelines for machine learning involve complex transformations, validations, and orchestration. Pipeline frameworks like Apache Airflow or Kubeflow Pipelines provide workflow orchestration that handles dependencies, retries, and error handling. Modular pipeline components enable the reuse and testing of individual transformation steps. Version control for pipeline definitions tracks changes and enables reproducibility.
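The core of what an orchestrator does (dependency ordering plus retries) can be sketched with the standard library alone. This is a toy stand-in, not the Airflow or Kubeflow Pipelines API; the step names and the simulated transient failure are hypothetical.

```python
from graphlib import TopologicalSorter  # Python 3.9+


def run_pipeline(steps, dependencies, max_retries=2):
    """Run steps in dependency order, retrying each failed step.

    steps: name -> callable taking the dict of upstream results.
    dependencies: name -> set of step names that must run first.
    """
    results = {}
    for name in TopologicalSorter(dependencies).static_order():
        for attempt in range(1, max_retries + 2):
            try:
                results[name] = steps[name](results)
                break
            except Exception:
                if attempt == max_retries + 1:
                    raise  # retries exhausted: fail the whole pipeline
    return results


attempts = {"validate": 0}


def extract(_results):
    return [3, 1, None, 2]


def validate(results):
    attempts["validate"] += 1
    if attempts["validate"] == 1:
        raise RuntimeError("transient failure")  # simulated flaky step
    return [x for x in results["extract"] if x is not None]


def transform(results):
    return sorted(results["validate"])


steps = {"extract": extract, "validate": validate, "transform": transform}
dependencies = {
    "extract": set(),
    "validate": {"extract"},
    "transform": {"validate"},
}
results = run_pipeline(steps, dependencies)
print(results["transform"])
```

Because each step is an ordinary function of its upstream results, individual transformations can be unit-tested and reused without running the whole pipeline.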

Data versioning ensures reproducibility by tracking datasets used for model training. Tools like DVC (Data Version Control) integrate with Git to version large datasets efficiently. Linking models to specific data versions creates traceability from predictions back to source data. This versioning discipline is essential for debugging model issues and complying with regulatory requirements.
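The underlying idea of linking a model to an exact dataset version can be illustrated with a content hash. This is a minimal sketch of the principle, not DVC's API or storage format; the model name, file name, and commit placeholder are hypothetical.

```python
import hashlib
import tempfile
from pathlib import Path


def dataset_fingerprint(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of the file contents, read in chunks to bound memory use."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def model_record(model_name: str, data_path: Path, code_commit: str) -> dict:
    """Metadata tying a trained model to the data and code that produced it."""
    return {
        "model": model_name,
        "data_sha256": dataset_fingerprint(data_path),
        "code_commit": code_commit,
    }


with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "train.csv"
    data_file.write_bytes(b"feature,label\n1.0,0\n2.0,1\n")
    record = model_record("churn-classifier", data_file, "<git commit sha>")

print(record)
```

Storing such a record next to the model artifact means any change to the training data yields a different fingerprint, which makes it possible to trace a prediction back to the data that shaped the model.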

Ensuring Model Reproducibility

Reproducibility challenges arise from non-deterministic operations, dependency variations, and environmental differences. Containerization addresses environment variations by packaging all dependencies with consistent versions. Setting random seeds controls non-deterministic algorithms. Recording complete experiment configurations, including hyperparameters, data versions, and code commits, enables exact reproduction of training runs.
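A minimal sketch of seeding and configuration recording follows, using only the standard library. The "training" function is a stand-in for a real stochastic training loop, and the configuration values are placeholders. In practice each framework in use needs its own seed as well (for example `numpy.random.seed` or `torch.manual_seed`), and some GPU operations remain non-deterministic even then.

```python
import hashlib
import json
import random


def run_experiment(config: dict) -> float:
    """Stand-in for training: all randomness flows from the configured seed."""
    rng = random.Random(config["seed"])  # isolated from global random state
    weights = [rng.gauss(0, 1) for _ in range(3)]
    return sum(w * w for w in weights)   # stand-in metric


def config_id(config: dict) -> str:
    """Stable short identifier derived from the full configuration."""
    canonical = json.dumps(config, sort_keys=True).encode()
    return hashlib.sha256(canonical).hexdigest()[:12]


config = {
    "seed": 42,
    "learning_rate": 0.01,
    "data_version": "<dataset hash>",  # placeholder
    "code_commit": "<git sha>",        # placeholder
}

first = run_experiment(config)
second = run_experiment(config)
print(config_id(config), first == second)
```

Keying results by `config_id` makes it obvious when two runs that should be identical are not, which usually points to an unrecorded source of variation.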

Experiment tracking platforms like MLflow automatically record the information needed for reproducibility. Each training run captures metrics, parameters, artifacts, and environment details. Comparing experiments identifies which changes improved model performance. Programmatic access to tracked experiments enables automated analysis and reporting. These practices transform ad-hoc experimentation into systematic, reproducible machine learning development.

Addressing Security Concerns

Security vulnerabilities in dependencies threaten AI applications. Regular scanning identifies known vulnerabilities in container images and Python packages. Automated updates apply security patches, while testing ensures compatibility. Software composition analysis tracks all dependencies, including transitive ones, providing visibility into the entire supply chain.

Model stealing and adversarial attacks represent AI-specific security threats. Rate limiting and authentication prevent unauthorized access to model endpoints. Adversarial training improves model robustness against malicious inputs. Monitoring detects unusual inference patterns that may indicate attacks. Implementing defense-in-depth strategies provides multiple security layers protecting AI systems.
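Rate limiting in front of a model endpoint is often implemented with a token bucket. The sketch below is one simple, single-process version; the rate and capacity are illustrative, and a production system would typically enforce limits per client at an API gateway rather than in application code.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        elapsed = now - self.updated
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# A fake clock keeps the demonstration deterministic.
fake_now = [0.0]
bucket = TokenBucket(rate=1.0, capacity=2, clock=lambda: fake_now[0])

decisions = [bucket.allow() for _ in range(3)]  # burst of three requests
fake_now[0] += 1.0                              # one second later
decisions.append(bucket.allow())
print(decisions)
```

Throttling of this kind raises the cost of the large query volumes that model extraction attacks depend on, while leaving normal interactive use unaffected.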

Future Trends in Cloud-Native AI

Serverless AI and Function-as-a-Service

Serverless computing is extending to AI workloads, enabling pay-per-inference pricing models. Function-as-a-Service (FaaS) platforms like AWS Lambda or Google Cloud Functions now support ML inference workloads. Containerized functions package models and serving logic, automatically scaling from zero to thousands of requests. This approach eliminates infrastructure management and optimizes costs for variable workloads.

Event-driven AI architectures use serverless functions to process streams of events. Image processing, natural language analysis, or anomaly detection is triggered automatically when new data arrives. Event streaming platforms like Kafka or managed cloud messaging services coordinate processing. These architectures enable real-time AI applications without maintaining always-on infrastructure.
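The event-driven pattern can be sketched in-process: handlers register for an event type and run only when a matching event arrives. The event names, the threshold-based "model", and the in-memory queue are all hypothetical stand-ins; in a real system the queue would be Kafka or a managed messaging service, and each handler would be a deployed function.

```python
from collections import deque

handlers = {}


def on(event_type):
    """Decorator registering a function as a handler for one event type."""
    def register(fn):
        handlers.setdefault(event_type, []).append(fn)
        return fn
    return register


@on("sensor.reading")
def detect_anomaly(event):
    # Stand-in for a model call: flag values far from an expected baseline.
    return {"id": event["id"], "anomaly": abs(event["value"] - 20.0) > 5.0}


def drain(events):
    """Dispatch each queued event to its handlers and collect the results."""
    queue = deque(events)
    results = []
    while queue:
        event = queue.popleft()
        for handler in handlers.get(event["type"], []):
            results.append(handler(event))
    return results


events = [
    {"type": "sensor.reading", "id": 1, "value": 21.0},
    {"type": "sensor.reading", "id": 2, "value": 31.5},
    {"type": "heartbeat", "id": 3},  # no handler registered: ignored
]
outcomes = drain(events)
print(outcomes)
```

No code runs for event types without a handler, which mirrors how serverless platforms consume no compute when nothing triggers a function.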

AI-Optimized Hardware and Accelerators

Specialized AI accelerators continue evolving beyond traditional GPUs. Tensor Processing Units (TPUs), developed by Google, optimize neural network operations. Custom silicon from cloud providers like AWS (Inferentia, Trainium) and Azure provides cost-effective alternatives for specific workloads. Cloud-native platforms are abstracting hardware differences, allowing applications to leverage optimal accelerators without code changes.

Heterogeneous computing environments mix different accelerator types based on workload characteristics. Training uses high-memory GPUs, inference uses efficient custom silicon, and preprocessing runs on CPUs. Kubernetes device plugins and resource managers coordinate workload placement across diverse hardware. This specialization improves both performance and cost efficiency for AI applications.

Integration with Emerging Technologies

Quantum computing integration represents a future frontier for cloud-native AI. Hybrid quantum-classical algorithms may enhance specific AI workloads like optimization or simulation. Cloud providers are making quantum computing accessible through APIs, enabling experimentation without hardware ownership. As quantum technology matures, cloud-native orchestration will coordinate quantum and classical resources.

The convergence of 5G networks and edge computing enables new AI applications. Ultra-low latency supports real-time inference for autonomous systems, augmented reality, and industrial automation. Cloud-native architectures that span edge and cloud distribute computation between the two. These technologies expand AI application domains beyond traditional data center deployments.


Conclusion

Cloud-native AI development has transformed how organizations build, deploy, and scale artificial intelligence applications. By leveraging containerization, orchestration platforms like Kubernetes, and MLOps practices, teams can create AI systems that are scalable, resilient, and maintainable. The tools and best practices discussed in this article (from Docker and Kubeflow to monitoring solutions and security frameworks) provide a comprehensive toolkit for modern AI development.

As the field continues evolving with serverless computing, specialized accelerators, and edge deployment patterns, the fundamental principles of cloud-native architecture remain essential. Organizations that master these tools and methodologies position themselves to harness AI’s full potential while maintaining operational excellence. Success in cloud-native AI requires continuous learning, experimentation with emerging technologies, and commitment to security, reproducibility, and collaboration across teams. The future of AI development lies firmly in cloud-native approaches that enable rapid innovation, efficient resource utilization, and enterprise-grade reliability for production systems that drive real business value.