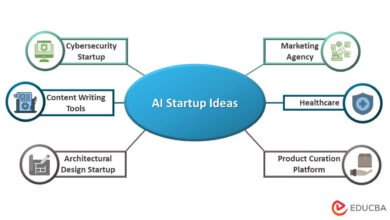

The rapid adoption of artificial intelligence and machine learning technologies has revolutionized how organizations process data and make decisions. However, this digital transformation brings unprecedented security challenges that demand immediate attention. As businesses increasingly deploy AI models in cloud environments, the need for comprehensive cloud security for AI has become paramount. The convergence of cloud computing and artificial intelligence creates a unique threat landscape where sensitive data, proprietary algorithms, and valuable machine learning models face sophisticated attacks from cybercriminals.

Organizations leveraging cloud-based AI solutions must navigate a complex security ecosystem that extends beyond traditional cybersecurity measures. The stakes are high: a single security breach can expose confidential customer information, compromise intellectual property worth millions, and wipe out years of competitive advantage. From data poisoning attacks that corrupt training datasets to model theft that lets competitors replicate proprietary innovations, the threats are both diverse and evolving. A widely cited projection from Cybersecurity Ventures put global cybercrime damages at $10.5 trillion annually by 2025, and attackers are increasingly using AI tools to scale and automate their campaigns.

Cloud security for AI encompasses multiple layers of protection, including data encryption, access control mechanisms, model integrity verification, and continuous threat monitoring. Organizations must implement robust security frameworks that protect AI assets throughout their entire lifecycle, from development and training to deployment and inference. The challenge intensifies as AI systems handle increasingly sensitive data, including personally identifiable information (PII), financial records, healthcare data, and confidential business intelligence. Moreover, the distributed nature of cloud infrastructure means that security vulnerabilities can exist at multiple points, from API endpoints to storage repositories.

This guide explores the critical aspects of securing AI in cloud environments and provides actionable strategies for protecting your most valuable digital assets. Whether you’re deploying machine learning models on AWS, Azure, or Google Cloud Platform, implementing proper security measures is no longer optional; it is essential for survival in today’s threat-laden digital landscape. We’ll examine industry best practices, emerging threats, compliance requirements, and practical solutions that enable organizations to harness the power of AI while maintaining robust security postures.

Cloud Security Challenges in AI Systems

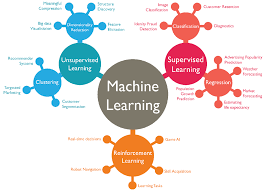

The integration of artificial intelligence with cloud computing introduces unique security vulnerabilities that traditional cybersecurity approaches often fail to address adequately. Unlike conventional applications, AI systems process massive volumes of data, employ complex algorithms, and make autonomous decisions that can have far-reaching consequences. The cloud infrastructure hosting these systems provides scalability and flexibility, but simultaneously creates expanded attack surfaces that malicious actors can exploit.

The Unique Vulnerability Landscape

AI models deployed in cloud environments face threats that differ fundamentally from traditional software applications. These systems are vulnerable to adversarial attacks where attackers manipulate input data to cause incorrect predictions or classifications. For instance, subtle changes to images can fool facial recognition systems, while carefully crafted text can bypass content moderation algorithms. The machine learning pipeline—from data collection and preprocessing to model training and deployment—presents multiple potential entry points for attackers.
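To make the idea of an adversarial input concrete, here is a minimal sketch of a fast-gradient-sign-style perturbation against a toy linear classifier. The weights, input, and epsilon are invented for illustration. Real attacks target deep networks and compute gradients through the model, but for a linear score the gradient with respect to the input is simply the weight vector, so the attack reduces to nudging each feature by a small, fixed amount.

```python
# Toy linear classifier: score = w . x + b, predict 1 if score > 0.
# Weights and inputs below are hypothetical values for illustration only.
WEIGHTS = [0.8, -0.5, 0.3]
BIAS = -0.1


def score(x):
    """Linear decision score for input vector x."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def predict(x):
    return 1 if score(x) > 0 else 0


def sign(v):
    return (v > 0) - (v < 0)


def fgsm_perturb(x, epsilon):
    """Fast-gradient-sign-style step that pushes the score toward the other class.

    For a linear model, d(score)/dx == WEIGHTS, so each feature moves by
    epsilon in the direction that lowers the score when the current
    prediction is 1 (and raises it when the prediction is 0).
    """
    direction = -1 if predict(x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for xi, w in zip(x, WEIGHTS)]


original = [0.3, 0.2, 0.1]
adversarial = fgsm_perturb(original, epsilon=0.15)
print(predict(original), predict(adversarial))
print(max(abs(a - o) for a, o in zip(adversarial, original)))
```

No feature changes by more than 0.15, yet the predicted class flips, which is exactly why small, hard-to-spot input changes are dangerous.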

Data poisoning represents one of the most insidious threats to AI security. Attackers can inject malicious data into training datasets, causing models to learn incorrect patterns or behaviors. This contamination can remain undetected for extended periods, gradually degrading model performance or creating backdoors that activate under specific conditions. The distributed nature of cloud storage means training data often resides across multiple locations, increasing the complexity of maintaining data integrity.

Infrastructure and Access Control Challenges

Cloud-based AI deployments rely on complex infrastructure involving multiple components: data lakes, compute clusters, API gateways, and model serving platforms. Each component requires proper configuration and security hardening, yet many organizations struggle with misconfigured cloud resources that inadvertently expose sensitive information. Industry breach reports consistently rank misconfiguration among the leading causes of cloud security incidents, and AI systems, with their many interconnected components, offer more places for such mistakes to occur.

Access control becomes considerably more complicated in AI environments where numerous stakeholders (data scientists, engineers, DevOps teams, and business users) require varying levels of system access. Implementing least privilege principles while maintaining operational efficiency presents significant challenges. Additionally, API security for AI services demands rigorous authentication and authorization mechanisms to prevent unauthorized model access or data exfiltration.

Model Theft and Intellectual Property Risks

AI model theft poses severe financial and competitive risks for organizations that invest heavily in developing proprietary algorithms. Attackers use several techniques to steal models or the information embedded in them: model extraction attacks build functional replicas through repeated querying, while model inversion attacks reconstruct sensitive training data from model outputs. The value of trained models, which represent months of computation time, expert knowledge, and competitive advantage, makes them extremely attractive targets for industrial espionage.
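Model extraction is easiest to see in the simplest possible case: a hypothetical prediction API that returns the raw score of a linear model. With only d + 1 queries (the zero vector plus one unit vector per feature), an attacker recovers every weight exactly. The "secret" model below is invented; real models need far more queries and yield approximations, which is why rate limiting and query monitoring matter.

```python
# Hypothetical "victim" model hidden behind an API; the attacker never sees these values.
_SECRET_WEIGHTS = [2.5, -1.0, 0.75, 4.0]
_SECRET_BIAS = 0.5


def prediction_api(x):
    """Simulated black-box endpoint that returns a raw linear score."""
    return sum(w * xi for w, xi in zip(_SECRET_WEIGHTS, x)) + _SECRET_BIAS


def extract_linear_model(query, n_features):
    """Recover weights and bias of a linear scorer with n_features + 1 queries."""
    zero = [0.0] * n_features
    bias = query(zero)  # score(0) = b
    weights = []
    for i in range(n_features):
        unit = zero.copy()
        unit[i] = 1.0
        weights.append(query(unit) - bias)  # score(e_i) - b = w_i
    return weights, bias


stolen_weights, stolen_bias = extract_linear_model(prediction_api, n_features=4)
print(stolen_weights, stolen_bias)
```

Returning only a class label or a rounded confidence instead of raw scores makes this particular attack harder, although it does not rule out extraction in general.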

Cloud environments can inadvertently facilitate model theft when organizations fail to implement proper model encryption and monitoring. Exposed API endpoints, inadequate logging, and insufficient rate limiting enable attackers to systematically probe models and extract valuable information. The economic impact extends beyond immediate losses, as stolen models can be sold on dark web markets or used by competitors to neutralize technological advantages.

Essential Data Protection Strategies for AI in the Cloud

Protecting sensitive data in cloud-based AI systems requires implementing comprehensive security measures that address data throughout its entire lifecycle. From collection and storage to processing and disposal, every stage presents unique security challenges that organizations must address systematically.

Implementing End-to-End Encryption

Data encryption serves as the foundational layer of protection for AI systems. Organizations must implement encryption at rest for all stored data, including training datasets, model parameters, and inference results. Modern cloud platforms like AWS, Azure, and Google Cloud offer native encryption services, but organizations must configure these properly and maintain control over encryption keys. Key management is critical: storing encryption keys separately from the data they protect, typically in a managed key management service (KMS) or hardware security module (HSM), ensures that compromising the storage layer alone does not expose plaintext.

Encryption in transit protects data moving between cloud components, from client applications to backend services. Enforcing TLS (version 1.2 or later; SSL and early TLS versions are deprecated) for all network communications ensures that intercepted data remains unreadable to attackers. For particularly sensitive AI applications, organizations can consider homomorphic encryption, which allows computations on encrypted data without decrypting it, though this approach currently imposes significant performance overhead.
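For Python clients that call model-serving endpoints, the standard-library `ssl` module can enforce encrypted, verified connections. The sketch below builds a client context that checks certificates and hostnames and refuses protocol versions older than TLS 1.2. Treating TLS 1.2 as the floor is an assumption; many organizations now require TLS 1.3.

```python
import ssl


def make_client_tls_context(minimum=ssl.TLSVersion.TLSv1_2):
    """Build a client-side TLS context for calls to AI service endpoints."""
    # create_default_context() enables certificate and hostname verification
    # and loads the system's trusted CA certificates.
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse legacy protocol versions below the chosen minimum.
    ctx.minimum_version = minimum
    return ctx


context = make_client_tls_context()
print(context.minimum_version, context.verify_mode == ssl.CERT_REQUIRED)
```

The context can then be passed to `urllib.request.urlopen(url, context=context)` or `http.client.HTTPSConnection(host, context=context)`.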

Data Anonymization and Privacy-Preserving Techniques

Data anonymization techniques help organizations leverage sensitive information for AI training while minimizing privacy risks. Differential privacy adds carefully calibrated noise to query results, to model updates during training, or to individual records, bounding how much any single person’s data can influence the output while preserving the statistical properties machine learning needs. This approach enables organizations to share and analyze data without exposing personal information, though stronger privacy guarantees (more noise) generally cost some model accuracy.
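The core of differential privacy shows up clearly in the Laplace mechanism for a counting query. Adding or removing one record changes a count by at most 1 (sensitivity 1), so adding Laplace noise with scale 1/ε makes that single released count ε-differentially private. The records and ε value below are illustrative, and this is a teaching sketch: production systems should use a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy (sensitivity = 1)."""
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(scale=1.0 / epsilon, rng=rng)


# Hypothetical patient records; the query counts patients over 65.
patients = [{"age": a} for a in (34, 71, 66, 45, 80, 52, 69)]
rng = random.Random(7)
noisy = private_count(patients, lambda p: p["age"] > 65, epsilon=0.5, rng=rng)
print(round(noisy, 2))  # true count is 4; the released value is perturbed
```

Smaller ε means more noise and stronger privacy, and every additional query against the same data spends more of the overall privacy budget.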

Tokenization and pseudonymization replace sensitive data elements with surrogate values, allowing AI systems to process information without accessing underlying sensitive details. For healthcare and financial AI applications, these techniques help meet requirements under regulations like HIPAA and GDPR while maintaining functionality, although GDPR still treats pseudonymized data as personal data. Organizations should implement data masking in development and testing environments to prevent unnecessary exposure of production data.
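Pseudonymization can be as simple as replacing an identifier with a keyed hash. The sketch below uses HMAC-SHA256 from the standard library, so the same patient ID always maps to the same token (joins and deduplication keep working), while anyone without the key cannot recompute the mapping. The key, field names, and token format are hypothetical; in practice the key would live in a secrets manager or KMS.

```python
import hashlib
import hmac


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a sensitive identifier with a deterministic keyed token."""
    digest = hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return "tok_" + digest[:32]  # truncated for readability; length is a design choice


# Hypothetical key; in production, fetch it from a secrets manager, never hardcode it.
KEY = b"example-only-key-do-not-use"

record = {"patient_id": "MRN-001234", "diagnosis_code": "E11.9"}
safe_record = {**record, "patient_id": pseudonymize(record["patient_id"], KEY)}
print(safe_record)
```

Using a keyed HMAC rather than a plain hash matters: identifiers such as record numbers are often guessable, and an unkeyed hash of a guessable value can be reversed by brute force.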

Secure Data Storage and Access Patterns

Cloud storage security for AI data demands careful attention to access controls, retention policies, and backup strategies. Organizations should segment data based on sensitivity levels, applying appropriate security controls to each category. Object storage services like Amazon S3 or Azure Blob Storage require tightly scoped access policies, access logging, and versioning to prevent unauthorized access or accidental deletion.

Implementing data loss prevention (DLP) tools helps organizations monitor and control data movement within cloud environments. These solutions can detect attempts to exfiltrate sensitive training data or model outputs, triggering alerts or automatically blocking suspicious transfers. Regular security audits of data access patterns help identify anomalies that might indicate compromised credentials or insider threats.
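Commercial DLP tools use far richer detectors, but a common building block is pattern matching plus validation. The toy scanner below flags outbound text containing digit runs that look like payment card numbers and pass the Luhn checksum, which filters out most arbitrary long numbers such as order IDs. The regular expression and the sample text are assumptions for illustration.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Luhn checksum used by payment card numbers."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_card_numbers(text: str) -> list[str]:
    """Return card-like numbers in text that pass the Luhn check."""
    hits = []
    for match in CARD_CANDIDATE.finditer(text):
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            hits.append(digits)
    return hits


outbound = "model output: customer card 4111 1111 1111 1111, order id 1234567890123"
print(find_card_numbers(outbound))
```

A real DLP pipeline would act on hits (alert, redact, or block the transfer) and combine many such detectors.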

Training Data Integrity and Validation

Maintaining training data integrity requires implementing validation mechanisms that detect data poisoning attempts. Organizations should establish baseline data quality metrics and continuously monitor for statistical anomalies that might indicate contaminated datasets. Data provenance tracking creates audit trails showing data origins, transformations, and access history, enabling organizations to trace security incidents back to their sources.
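One simple baseline metric is the class-label distribution. The sketch compares an incoming training batch against a trusted baseline using total variation distance and flags it when the gap exceeds a threshold. A sudden shift can indicate label-flipping poisoning, but it can also reflect a legitimate change in the data, so flagged batches need human review rather than automatic rejection. The labels and the 0.1 threshold are hypothetical.

```python
from collections import Counter


def label_distribution(labels):
    """Fraction of examples per label."""
    counts = Counter(labels)
    total = len(labels)
    return {label: n / total for label, n in counts.items()}


def total_variation(p, q):
    """Total variation distance: half the L1 distance between two distributions."""
    keys = set(p) | set(q)
    return 0.5 * sum(abs(p.get(k, 0.0) - q.get(k, 0.0)) for k in keys)


def check_batch(baseline_labels, batch_labels, threshold=0.1):
    """Return (distance, flagged) for a new batch versus a trusted baseline."""
    distance = total_variation(label_distribution(baseline_labels),
                               label_distribution(batch_labels))
    return distance, distance > threshold


baseline = ["legit"] * 90 + ["fraud"] * 10       # hypothetical golden dataset
clean_batch = ["legit"] * 88 + ["fraud"] * 12
suspect_batch = ["legit"] * 70 + ["fraud"] * 30  # e.g. many labels flipped

print(check_batch(baseline, clean_batch))
print(check_batch(baseline, suspect_batch))
```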

Input validation at ingestion points prevents malicious data from entering training pipelines. Implementing schema validation, range checks, and anomaly detection helps filter out potentially harmful inputs before they affect model training. Organizations should also maintain golden datasets—verified, clean reference data—for periodic model retraining and integrity verification.
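Schema validation and range checks at ingestion can be expressed compactly. The schema below (field names, types, and ranges) is a made-up example for a tabular fraud dataset. Rejected records are returned together with the reasons, so they can be quarantined and reviewed instead of silently dropped.

```python
# Hypothetical schema: field -> (expected type, min, max); None means unbounded.
SCHEMA = {
    "amount": (float, 0.0, 1_000_000.0),
    "account_age_days": (int, 0, 36_500),
    "label": (int, 0, 1),
}


def validate_record(record):
    """Return a list of problems; an empty list means the record passes."""
    problems = []
    extra = set(record) - set(SCHEMA)
    if extra:
        problems.append(f"unexpected fields: {sorted(extra)}")
    for field, (ftype, lo, hi) in SCHEMA.items():
        if field not in record:
            problems.append(f"missing field: {field}")
            continue
        value = record[field]
        # bool is a subclass of int, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, ftype):
            problems.append(f"{field}: expected {ftype.__name__}, got {type(value).__name__}")
            continue
        if (lo is not None and value < lo) or (hi is not None and value > hi):
            problems.append(f"{field}: {value} outside [{lo}, {hi}]")
    return problems


def split_batch(records):
    """Separate records into accepted and quarantined (with reasons)."""
    accepted, quarantined = [], []
    for r in records:
        issues = validate_record(r)
        if issues:
            quarantined.append((r, issues))
        else:
            accepted.append(r)
    return accepted, quarantined


batch = [
    {"amount": 42.5, "account_age_days": 300, "label": 0},
    {"amount": -10.0, "account_age_days": 5, "label": 1},          # negative amount
    {"amount": 99.0, "account_age_days": 12, "label": 7},          # invalid label
    {"amount": 15.0, "account_age_days": 40, "label": 0, "x": 1},  # unexpected field
]
ok, bad = split_batch(batch)
print(len(ok), len(bad))
```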

Securing AI Models: Protection, Monitoring, and Access Control

AI model security encompasses protecting model integrity, preventing unauthorized access, and monitoring for suspicious activities throughout the model lifecycle. Organizations must treat trained models as critical intellectual property requiring security measures comparable to source code or trade secrets.

Model Encryption and Secure Storage

Model encryption protects the parameters, weights, and architectures that constitute trained AI models. Organizations should encrypt models both at rest in model registries and in transit between training infrastructure and deployment environments. Trusted execution environments (TEEs), also called secure enclaves, add hardware-based protection during inference by keeping model weights encrypted in memory and isolated from the host operating system, which helps shield them even from privileged system administrators.

Model versioning systems should implement access controls that track who modifies models, when changes occur, and what specific alterations are made. This audit trail proves invaluable for security investigations and compliance requirements. Organizations deploying models across multiple cloud regions must ensure consistent encryption and access control policies across all locations.

Implementing Robust Access Controls

Access control for AI models requires implementing zero-trust architecture principles, where every access request undergoes continuous verification regardless of network location. Role-based access control (RBAC) assigns permissions based on job functions, while attribute-based access control (ABAC) makes decisions based on multiple contextual factors, including time, location, and device security posture.
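The difference between RBAC and ABAC is easy to show in code. In this hypothetical policy, the role grants the baseline permission (RBAC), and attributes of the request (device compliance, time of day, network zone) must also pass (ABAC). Role names, actions, attributes, and the hours window are all invented. Real deployments would typically express such rules in a policy engine or in the cloud provider’s IAM conditions rather than in application code.

```python
from dataclasses import dataclass

# RBAC layer: which roles may perform which actions (hypothetical roles/actions).
ROLE_PERMISSIONS = {
    "data_scientist": {"model:read", "dataset:read"},
    "ml_engineer": {"model:read", "model:deploy"},
    "analyst": {"model:invoke"},
}


@dataclass
class AccessRequest:
    role: str
    action: str
    device_compliant: bool
    hour_utc: int
    network_zone: str  # e.g. "corporate" or "public"


def is_allowed(req: AccessRequest) -> bool:
    # 1. RBAC: the role must hold the permission at all.
    if req.action not in ROLE_PERMISSIONS.get(req.role, set()):
        return False
    # 2. ABAC: contextual attributes must also satisfy the policy.
    if not req.device_compliant:
        return False
    if req.action == "model:deploy":
        # Deployments only from the corporate network during business hours.
        return req.network_zone == "corporate" and 8 <= req.hour_utc < 18
    return True


print(is_allowed(AccessRequest("ml_engineer", "model:deploy", True, 10, "corporate")))
print(is_allowed(AccessRequest("ml_engineer", "model:deploy", True, 23, "corporate")))
print(is_allowed(AccessRequest("analyst", "model:deploy", True, 10, "corporate")))
```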

Multi-factor authentication (MFA) should be mandatory for accessing production AI models and training infrastructure. For API-based model access, organizations must implement strong authentication and authorization, such as OAuth 2.0 access tokens or API keys with enforced rotation policies. Rate limiting does not stop extraction attacks outright, but it makes replicating a model through excessive querying slower, more expensive, and easier to detect.
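A token bucket is a common way to implement per-client rate limiting in front of an inference API. Each API key gets a bucket that refills at a fixed rate up to a burst capacity, and requests that find the bucket empty are rejected (typically with HTTP 429). The rate, capacity, and key names are assumptions, and the clock is injectable so the behavior can be demonstrated deterministically.

```python
import time


class TokenBucket:
    """Allow up to `capacity` requests in a burst, refilling at `rate` tokens/second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill proportionally to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class RateLimiter:
    """One bucket per API key, created on first use."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate, self.capacity, self.clock = rate, capacity, clock
        self.buckets = {}

    def allow(self, api_key):
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.rate, self.capacity, self.clock)
        return self.buckets[api_key].allow()


# Simulated clock so the example is deterministic.
fake_now = [0.0]
limiter = RateLimiter(rate=2.0, capacity=5, clock=lambda: fake_now[0])

burst = [limiter.allow("key-123") for _ in range(7)]
print(burst)  # first 5 allowed, then rejected
fake_now[0] += 1.0  # one second later: 2 tokens refilled
print(limiter.allow("key-123"), limiter.allow("key-123"), limiter.allow("key-123"))
```

This in-memory version is not thread-safe and only works within one process; a horizontally scaled inference service would keep bucket state in a shared store or rely on the API gateway’s built-in throttling.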

Model Monitoring and Anomaly Detection

Continuous monitoring of AI model behavior helps detect security incidents, performance degradation, or adversarial attacks. Organizations should establish baseline performance metrics and implement alerting systems that trigger when models exhibit unusual behavior—sudden accuracy drops, unexpected prediction distributions, or abnormal resource consumption patterns.

Model validation before deployment helps ensure models haven’t been tampered with during training or storage. Implementing cryptographic signatures for models enables verification that deployed versions match approved, tested models. Organizations should also monitor for model drift, where performance degrades over time, which might indicate data quality issues or ongoing adversarial attacks.
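A minimal form of this check is to record the SHA-256 digest of each approved model artifact in the registry and refuse to load any file whose digest differs. The sketch below does this with the standard library. It is a simplification of signing: a digest proves integrity only if the recorded digest itself is trustworthy, which is why production pipelines sign the artifact or its manifest with an asymmetric key (for example through Sigstore or a KMS signing key). The file name and the `registry` dict are hypothetical.

```python
import hashlib
import hmac
import os
import tempfile


def sha256_file(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 so large model files are not loaded into memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_model(path, approved_digest):
    """True only if the artifact matches the digest recorded at approval time."""
    # Constant-time comparison: a good habit for security-relevant checks.
    return hmac.compare_digest(sha256_file(path), approved_digest)


# Demo: create a fake model artifact, "approve" it, then tamper with it.
with tempfile.TemporaryDirectory() as tmp:
    model_path = os.path.join(tmp, "fraud_model_v3.bin")
    with open(model_path, "wb") as f:
        f.write(b"\x00weights-v3")
    registry = {"fraud_model_v3": sha256_file(model_path)}  # hypothetical registry entry

    before = verify_model(model_path, registry["fraud_model_v3"])
    with open(model_path, "ab") as f:
        f.write(b"backdoor")
    after = verify_model(model_path, registry["fraud_model_v3"])

print(before, after)
```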

Protecting Against Adversarial Attacks

Adversarial robustness requires implementing defenses against attacks designed to manipulate model behavior. Adversarial training, where models are exposed to crafted malicious inputs during training, improves resilience against such attacks. Input validation and sanitization at inference endpoints help detect and filter adversarial examples before they reach models.

Organizations should implement model output validation that checks predictions for reasonableness before acting on them. For critical applications, ensemble approaches using multiple models can provide redundancy—if models disagree significantly, the system can flag potential adversarial manipulation. Regular penetration testing specifically targeting AI models helps identify vulnerabilities before attackers exploit them.
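The ensemble idea can be sketched as a majority vote that also reports how strongly the models agree. Below, the outputs of three hypothetical classifiers for the same input are combined, and any input on which too few models agree is flagged for review instead of being acted on automatically. The labels and the agreement threshold are assumptions to tune per application.

```python
from collections import Counter


def ensemble_decision(predictions, min_agreement=1.0):
    """Return (majority_label, agreement, flagged) for one input.

    predictions: labels produced by independent models for the same input.
    flagged is True when the share of models agreeing with the majority is
    below min_agreement, a possible sign of adversarial or out-of-distribution input.
    """
    label, votes = Counter(predictions).most_common(1)[0]
    agreement = votes / len(predictions)
    return label, agreement, agreement < min_agreement


print(ensemble_decision(["approve", "approve", "approve"]))
print(ensemble_decision(["approve", "deny", "approve"], min_agreement=1.0))
```

Ensembles help most when their members differ (architectures, training data, or feature sets), since an adversarial example crafted against one model is less likely to fool all of them the same way.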

Cloud Infrastructure Security for AI Workloads

Securing the underlying cloud infrastructure supporting AI workloads requires comprehensive approaches that address compute resources, networking, and orchestration platforms. The complexity of modern AI deployments, often involving distributed training across multiple nodes and microservices architectures for inference, demands careful security architecture.

Securing Compute Resources and Containers

AI workloads typically run on specialized compute resources, including GPU clusters, TPUs, or custom AI accelerators. Organizations must secure these resources through proper network segmentation, ensuring training infrastructure remains isolated from production systems. Container security becomes critical because many AI deployments use Docker containers and Kubernetes for orchestration. Image scanning, runtime protection, and Pod Security Standards (enforced through Pod Security Admission, which replaced the PodSecurityPolicy API removed in Kubernetes 1.25) help prevent compromised containers from affecting AI systems.

Virtual machine security for AI compute instances requires hardened operating systems, minimal software installations, and regular patching schedules. Organizations should implement immutable infrastructure approaches where compute resources are replaced rather than updated, reducing the window for exploitation. Secrets management systems like HashiCorp Vault or AWS Secrets Manager prevent hardcoding credentials in AI application code or configuration files.

Network Security and Segmentation

Network security for cloud-based AI demands implementing microsegmentation that limits lateral movement if attackers compromise individual components. Virtual private clouds (VPCs) should isolate AI workloads from other applications, with carefully controlled ingress and egress points. Web application firewalls (WAFs) protect API endpoints serving AI models, filtering malicious requests and preventing common attack patterns.

DDoS protection becomes essential for public-facing AI services that might be targeted to disrupt operations or mask other attacks. Cloud providers offer native DDoS mitigation services that organizations should configure appropriately. Implementing intrusion detection systems (IDS) and intrusion prevention systems (IPS) specifically tuned for AI traffic patterns helps identify suspicious activities.

Identity and Access Management for AI Services

Identity and access management (IAM) for cloud AI services requires granular permission models that enforce least privilege principles. Organizations should create service-specific roles with minimal required permissions rather than granting broad administrative access. Service accounts for automated AI workflows must have credentials rotated regularly and activities logged comprehensively.

Federated identity solutions enable organizations to manage access centrally while leveraging cloud provider services. Conditional access policies that consider device health, location, and risk assessments add additional security layers. Organizations should regularly review and audit IAM permissions, removing unused accounts and overly permissive policies that accumulate over time.
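Part of such a review can be automated. The sketch scans IAM policy documents, written here as Python dicts in the JSON shape AWS uses (`Statement`, `Effect`, `Action`, `Resource`), and flags `Allow` statements that use wildcard actions or resources. It is deliberately simplistic: it ignores `NotAction`, `NotResource`, and `Condition` blocks, and the sample policy (including the account ID and endpoint name) is invented.

```python
def _as_list(value):
    """IAM fields may be a single string or a list of strings."""
    if value is None:
        return []
    return [value] if isinstance(value, str) else list(value)


def find_overly_permissive(policy):
    """Return human-readable findings for wildcard Allow statements."""
    findings = []
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may appear without a list
        statements = [statements]
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        for action in _as_list(stmt.get("Action")):
            if action == "*" or action.endswith(":*"):
                findings.append(f"statement {i}: wildcard action {action!r}")
        if "*" in _as_list(stmt.get("Resource")):
            findings.append(f"statement {i}: wildcard resource '*'")
    return findings


policy = {
    "Version": "2012-10-17",
    "Statement": [
        {   # scoped: invoke one inference endpoint only
            "Effect": "Allow",
            "Action": "sagemaker:InvokeEndpoint",
            "Resource": "arn:aws:sagemaker:us-east-1:111122223333:endpoint/fraud-model",
        },
        {   # overly broad: full S3 access on every resource
            "Effect": "Allow",
            "Action": ["s3:*"],
            "Resource": "*",
        },
    ],
}
findings = find_overly_permissive(policy)
for finding in findings:
    print(finding)
```

Cloud providers also offer managed analyzers for this job (for example AWS IAM Access Analyzer), which understand conditions and cross-account access far better than a hand-written scan.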

Supply Chain Security for AI Components

AI supply chain security addresses risks from third-party libraries, pre-trained models, and external data sources. Organizations should implement software composition analysis to identify vulnerabilities in open-source libraries used for machine learning. Downloading pre-trained models from public repositories introduces risks—models might contain backdoors or have been trained on poisoned data.

Organizations must establish vendor risk management processes for cloud AI services and tools. This includes reviewing security certifications, data handling practices, and establishing incident response procedures. Model provenance verification ensures that models come from trusted sources and haven’t been tampered with during distribution.

Compliance, Governance, and Regulatory Considerations

Cloud security for AI must align with increasingly complex regulatory requirements governing data privacy, algorithmic fairness, and system transparency. Organizations operating globally face multiple, sometimes conflicting, regulatory frameworks that demand careful navigation.

Data Privacy Regulations and AI

The EU’s General Data Protection Regulation (GDPR) sets strict requirements for processing personal data, including transparency about automated decision-making. Where AI makes solely automated decisions with legal or similarly significant effects on individuals, organizations must provide meaningful information about the logic involved and safeguards such as the right to obtain human review. They must also be able to honor data subject rights such as erasure requests, which can require removing records from training data and retraining affected models. The California Consumer Privacy Act (CCPA), as amended by the CPRA, imposes comparable obligations on businesses handling California residents’ data, and a growing number of other U.S. state privacy laws follow similar patterns.

AI-specific regulations are emerging globally. The EU’s AI Act, which entered into force in 2024 with obligations phasing in over the following years, establishes a risk-based framework for AI system deployment. High-risk applications, including those used in healthcare, employment, and law enforcement, face stringent requirements for documentation, testing, and human oversight. Organizations must maintain comprehensive records of AI system development, validation, and deployment decisions to demonstrate compliance.

Industry-Specific Compliance Requirements

Healthcare AI applications must comply with HIPAA (Health Insurance Portability and Accountability Act) requirements for protecting patient data. This includes signing business associate agreements (BAAs) with cloud providers, maintaining audit logs of all data access, and ensuring patient data never leaves authorized processing environments. HITECH Act provisions make business associates, including cloud service providers that handle protected health information, directly responsible for HIPAA Security Rule compliance and impose breach notification obligations.

Financial services AI faces requirements including the Sarbanes-Oxley Act (SOX) for controls over financial reporting, the PCI DSS industry standard for payment card data, and emerging guidelines on algorithmic trading and credit decisioning. Financial regulators increasingly scrutinize AI model risk management, demanding documentation of model development, validation, and monitoring processes. Organizations must demonstrate that AI systems don’t introduce discriminatory bias in lending or insurance decisions.

Governance Frameworks for AI Security

Implementing AI governance frameworks helps organizations systematically address security, ethical, and operational concerns. Frameworks should define roles and responsibilities for AI development, establish model approval processes, and create mechanisms for ongoing monitoring and accountability. AI ethics committees review proposed AI applications for potential risks and ensure alignment with organizational values.

Model inventory management provides visibility into all AI models deployed across organizations, including ownership, data sources, risk classifications, and security controls. This inventory supports security assessments, compliance audits, and incident response activities. Organizations should implement change management processes for AI systems that require security reviews before deploying model updates or infrastructure changes.

Audit Trails and Documentation

Comprehensive logging and monitoring create audit trails demonstrating compliance with security policies and regulatory requirements. Organizations must log all access to sensitive data, model training activities, deployment events, and prediction requests. These logs should be stored in tamper-evident systems with retention periods set by the applicable regulations, which can run to six or seven years.
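Tamper evidence can be approximated with a hash chain: each log entry stores the SHA-256 hash of the previous entry, so editing or deleting any past entry breaks the chain from that point on. This is a minimal sketch with hypothetical event fields. Managed options, such as write-once (object lock) storage or AWS CloudTrail log file integrity validation, are usually preferable to a home-grown scheme.

```python
import hashlib
import json

GENESIS = "0" * 64


def _entry_hash(event, prev_hash):
    # Canonical JSON (sorted keys) so the same event always hashes the same way.
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log, event):
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _entry_hash(event, prev)})


def verify(log):
    """Return the index of the first broken entry, or None if the chain is intact."""
    prev = GENESIS
    for i, entry in enumerate(log):
        if entry["prev"] != prev or entry["hash"] != _entry_hash(entry["event"], prev):
            return i
        prev = entry["hash"]
    return None


audit_log = []
append(audit_log, {"actor": "svc-train", "action": "dataset:read", "target": "claims-2024"})
append(audit_log, {"actor": "alice", "action": "model:deploy", "target": "fraud-v3"})
append(audit_log, {"actor": "bob", "action": "model:invoke", "target": "fraud-v3"})
print(verify(audit_log))  # None: chain intact

audit_log[1]["event"]["actor"] = "mallory"  # tamper with a past entry
print(verify(audit_log))  # 1: first entry whose hash no longer matches
```

An attacker who can rewrite the whole log could recompute every hash, so the latest hash should be anchored somewhere the attacker cannot modify, such as a separate account or write-once storage.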

Documentation requirements for AI systems extend beyond traditional software documentation, including dataset descriptions, model architecture decisions, hyperparameter choices, and validation results. Organizations must document security controls, risk assessments, and incident response procedures. This documentation supports regulatory audits, security assessments, and forensic investigations following security incidents.

Best Practices for Implementing Cloud AI Security

Successfully implementing cloud security for AI requires adopting proven best practices that address technical, organizational, and procedural aspects of security management. These practices help organizations build robust security postures that evolve with emerging threats and business requirements.

Security-by-Design Approach

Security-by-design principles embed security considerations throughout the AI development lifecycle rather than adding them as afterthoughts. Organizations should conduct threat modeling during project planning, identifying potential attack vectors and implementing preventive controls. Security requirements should influence architecture decisions, data collection strategies, and deployment approaches from inception.

Secure development practices for AI include code reviews focusing on security vulnerabilities, automated security testing in CI/CD pipelines, and secure coding standards tailored to machine learning frameworks. Organizations should establish security champions within AI development teams who receive specialized training and promote security awareness among colleagues.

Continuous Security Validation and Testing

Penetration testing for AI systems should extend beyond traditional application security testing to include attacks specific to machine learning—model inversion, membership inference, and adversarial example generation. Organizations should engage security firms with AI expertise to conduct regular assessments, identifying vulnerabilities before attackers exploit them.

Red team exercises simulate real-world attack scenarios against AI systems, testing both technical controls and incident response procedures. These exercises help organizations understand security weaknesses, refine detection capabilities, and improve response times. Results should drive continuous improvement of security controls and team training.

Incident Response Planning for AI Security

Incident response plans for AI security incidents must address scenarios beyond traditional cybersecurity breaches. Organizations need procedures for responding to data poisoning discoveries, model theft attempts, or adversarial attacks affecting production systems. Response plans should define roles, communication protocols, containment strategies, and recovery procedures specific to AI assets.

Organizations should establish incident response teams with expertise in both cybersecurity and machine learning. These teams need access to tools for analyzing compromised models, identifying poisoned training data, and assessing the scope of security incidents. Regular tabletop exercises test incident response procedures and identify gaps requiring remediation.

Security Automation and Orchestration

Security automation helps organizations scale security operations to match the pace of AI development and deployment. Automated security scanning in development pipelines identifies vulnerabilities before code reaches production. Security orchestration tools coordinate responses across multiple security systems, accelerating incident detection and remediation.

Organizations should implement automated compliance checking that validates AI systems against security policies and regulatory requirements continuously rather than through periodic manual audits. Infrastructure as code approaches with embedded security controls ensure consistent security configurations across all cloud environments.

Continuous Training and Awareness

Security awareness training for AI practitioners must address threats specific to machine learning systems. Data scientists and ML engineers need education on secure data handling, model security best practices, and recognizing social engineering attempts targeting proprietary algorithms. Organizations should provide role-specific training that makes security relevant to daily responsibilities.

Cross-functional collaboration between security teams and AI development groups prevents security from becoming an afterthought or bottleneck. Regular security reviews, shared threat intelligence, and joint planning sessions build organizational cultures where security and innovation coexist productively. Organizations should celebrate security successes and learn publicly from security incidents to promote continuous improvement.


Conclusion

Cloud security for AI represents a critical imperative for organizations leveraging artificial intelligence to drive innovation and competitive advantage. As AI systems increasingly handle sensitive data and make consequential decisions, implementing comprehensive security measures becomes essential rather than optional. The unique challenges posed by AI—from data poisoning and model theft to adversarial attacks—demand security approaches extending beyond traditional cybersecurity frameworks.

Organizations must protect AI assets throughout their entire lifecycle, implementing encryption, access controls, continuous monitoring, and robust governance frameworks. Success requires embedding security-by-design principles, maintaining continuous vigilance through testing and validation, and fostering collaboration between security professionals and AI practitioners. The regulatory landscape continues evolving with new requirements for AI transparency, data privacy, and algorithmic accountability, making compliance an ongoing journey rather than a destination.

By adopting the strategies and best practices outlined in this guide, organizations can harness the transformative power of cloud-based AI while maintaining strong security postures that protect valuable data, models, and ultimately, stakeholder trust. The investment in comprehensive AI security not only prevents costly breaches but enables organizations to innovate confidently, knowing their most valuable digital assets remain protected against emerging threats in an increasingly complex threat landscape.