Data Visualization Best Practices for AI Insights

Transform AI data into actionable insights with proven data visualization best practices. Learn techniques, tools, and strategies for effective.

In today’s data-driven landscape, artificial intelligence has revolutionized how organizations extract meaningful patterns from vast datasets. However, the true power of AI insights remains locked without effective data visualization best practices. As businesses generate unprecedented volumes of information, the ability to transform complex AI-generated analytics into clear, actionable visual representations has become a critical competitive advantage. The intersection of data visualization and artificial intelligence is reshaping how decision-makers interpret predictive models, identify anomalies, and communicate findings across organizational hierarchies.

Machine learning algorithms can process millions of data points in seconds, but humans need visual context to understand these discoveries. From predictive analytics dashboards to real-time monitoring systems, visualization serves as the essential bridge between raw computational output and human comprehension. Organizations that invest in robust visualization frameworks often report faster decision-making cycles and improved stakeholder engagement. Whether you’re working with natural language processing results, anomaly detection outputs, or neural network predictions, following proven data visualization techniques helps your AI investments deliver measurable business impact.

This comprehensive guide explores fundamental principles that transform overwhelming AI data into compelling visual narratives. You’ll discover how to select appropriate chart types for different AI models, implement interactive dashboards that reveal hidden patterns, and establish data governance practices that maintain visualization integrity. We’ll examine industry-leading AI visualization tools, discuss common pitfalls in presenting algorithmic insights, and provide actionable frameworks for creating user-centric visual experiences. By mastering these best practices, data professionals can ensure their AI-driven insights don’t just inform—they inspire action and drive organizational transformation in an increasingly competitive digital environment.

AI Data Visualization Fundamentals

- Data visualization for AI insights involves more than creating charts: it translates complex algorithmic outputs into formats that support human decision-making. AI-powered analytics generate multidimensional datasets that need carefully chosen visualization approaches to reveal their value. The fundamental principle is to match each data type with an appropriate visual representation while maintaining clarity and accessibility.

- Machine learning models produce various output types, including classification probabilities, regression predictions, clustering results, and feature importance rankings. Each requires distinct visualization strategies to communicate effectively. For instance, confusion matrices effectively display classification model performance, while scatter plots with color coding reveal clustering patterns in unsupervised learning results. These foundational relationships between AI output types and visual formats form the cornerstone of effective data visualization practices.

- The complexity of AI data demands that visualizations balance technical accuracy with interpretability. Deep learning models may involve hundreds of parameters and layers, yet stakeholders need simplified views showing model confidence, prediction accuracy, and decision boundaries. Data visualization frameworks must accommodate both technical audiences requiring detailed model diagnostics and executive stakeholders seeking high-level insights. This dual requirement makes AI visualization uniquely challenging compared to traditional business intelligence reporting.
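
As a small illustration of pairing an output type with a visual format, the sketch below tabulates a confusion matrix from classifier labels using only the Python standard library. The sample labels and the helper names `confusion_matrix` and `render` are illustrative assumptions rather than part of any particular toolkit; a plotting library could color the same matrix as a heatmap.

```python
from collections import Counter

def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]

def render(matrix, labels):
    """Format the matrix as aligned plain text for a quick inspection."""
    cells = [str(c) for row in matrix for c in row] + [str(l) for l in labels]
    width = max(len(c) for c in cells) + 2
    lines = [" " * width + "".join(str(l).rjust(width) for l in labels)]
    for label, row in zip(labels, matrix):
        lines.append(str(label).rjust(width) + "".join(str(c).rjust(width) for c in row))
    return "\n".join(lines)

# Hypothetical labels from a spam classifier.
actual    = ["spam", "spam", "ham", "ham", "ham",  "spam"]
predicted = ["spam", "ham",  "ham", "ham", "spam", "spam"]
labels = ["ham", "spam"]

matrix = confusion_matrix(actual, predicted, labels)
print(render(matrix, labels))
```

Off-diagonal cells are the misclassifications, which is why this layout tends to be more informative than a single accuracy number.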

Choosing the Right Visualization Types for AI Models

Selecting appropriate visualization types for AI insights directly impacts how effectively audiences comprehend and act upon machine learning results. Different AI models generate distinct output formats that align with specific chart types and graphical representations. Classification models benefit from ROC curves, precision-recall graphs, and confusion matrices that display prediction accuracy across categories. These visualizations allow data scientists to identify model performance issues and optimization opportunities quickly.

- Regression models require different visualization approaches focusing on predicted versus actual values, residual patterns, and confidence intervals. Scatter plots with trend lines effectively communicate regression relationships, while residual plots reveal whether models meet statistical assumptions. For time series forecasting, line charts with confidence bands show predictions alongside historical data, enabling stakeholders to assess forecast reliability at a glance.

- Clustering algorithms in unsupervised learning present unique visualization challenges that call for dimensionality reduction. t-SNE and UMAP plots project high-dimensional data into two dimensions, and coloring the points by cluster assignment reveals group separations and overlap patterns. Distances between well-separated groups in these projections should be read with caution, because both methods prioritize local neighborhoods over global geometry. Dendrograms display hierarchical clustering relationships, showing how data points group at different similarity thresholds. Heatmaps effectively visualize correlation matrices and feature relationships within clusters.

- Neural networks and deep learning models demand specialized visualization techniques, including activation maps, saliency maps, and layer output visualizations. These approaches reveal what features the model considers important for predictions, supporting interpretability requirements. Feature importance charts using bar graphs or horizontal rankings communicate which variables most influence model decisions, essential information for stakeholders evaluating AI system trustworthiness and regulatory compliance.
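
The ROC curves mentioned earlier in this section are built by sweeping a decision threshold over the model's scores. The following is a minimal sketch of that computation in plain Python; the function names and sample data are assumptions for illustration, and it expects both classes to be present in `y_true`.

```python
def roc_points(y_true, scores):
    """Return (false positive rate, true positive rate) pairs, one per threshold."""
    positives = sum(y_true)
    negatives = len(y_true) - positives
    # Sort by descending score so each step lowers the threshold.
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    for i, (score, label) in enumerate(ranked):
        if label == 1:
            tp += 1
        else:
            fp += 1
        # Emit a point only after the last item of a group of tied scores.
        is_last = i == len(ranked) - 1
        if is_last or ranked[i + 1][0] != score:
            points.append((fp / negatives, tp / positives))
    return points

def auc(points):
    """Trapezoidal area under (x, y) points that are sorted by x."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Hypothetical labels (1 = positive) and model scores.
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]

points = roc_points(y_true, scores)
print(points)
print(round(auc(points), 3))
```

Plotting `points` as a step or line chart gives the familiar ROC curve; the area under it summarizes ranking quality in one number.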

Data Preparation and Quality Assurance for Visualizations

- Data quality fundamentally determines visualization effectiveness when working with AI insights. Before creating any visual representation, thorough data preprocessing ensures accuracy and reliability. Missing values, outliers, and inconsistencies in AI-generated datasets can distort visual narratives and lead to flawed business decisions. Implementing systematic data cleaning protocols, including duplicate removal, null value handling, and outlier detection, creates trustworthy foundations for visualization projects.

- AI models often produce confidence scores, probability distributions, and uncertainty metrics alongside predictions. Incorporating these uncertainty measures into visualizations prevents overconfident interpretations. Error bars, confidence intervals, and shaded regions communicate prediction reliability, helping stakeholders understand when AI insights require additional validation. This transparency builds trust in AI-powered analytics and supports more informed decision-making processes.

- Data transformation techniques play a crucial role in preparing AI outputs for visualization. Normalization and standardization ensure different metric scales are displayed proportionally in charts. Feature engineering may create derived variables that enhance visualization clarity, such as calculating percentage changes or creating categorical bins from continuous variables. Aggregation strategies determine appropriate detail levels—displaying individual predictions versus aggregated trends—based on audience needs and visualization purposes.

- Version control and data lineage tracking maintain visualization integrity across AI model iterations. As models retrain with new data, visualizations must update consistently while maintaining historical comparability. Documenting data sources, transformation logic, and calculation methods enables reproducibility and supports regulatory compliance requirements. Data governance frameworks establish standards for visualization accuracy, update frequencies, and approval workflows, ensuring AI insights maintain organizational credibility over time.
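
To make the point about error bars and confidence intervals concrete, here is a minimal sketch that turns repeated measurements (for example, accuracy across cross-validation folds) into a center value plus an interval suitable for an error bar. The fold values are invented for illustration, and the normal approximation with `z=1.96` is an assumption; with very few samples a t-based interval would be more appropriate.

```python
from math import sqrt
from statistics import mean, stdev

def mean_with_interval(samples, z=1.96):
    """Return (mean, lower, upper) using a normal-approximation interval."""
    center = mean(samples)
    # Standard error of the mean: sample standard deviation / sqrt(n).
    half_width = z * stdev(samples) / sqrt(len(samples))
    return center, center - half_width, center + half_width

# Hypothetical accuracy from five cross-validation folds.
fold_accuracy = [0.80, 0.82, 0.78, 0.84, 0.76]

center, low, high = mean_with_interval(fold_accuracy)
print(f"accuracy {center:.3f} (approx. 95% interval {low:.3f} to {high:.3f})")
```

The `low` and `high` values map directly onto the ends of an error bar or the edges of a shaded band.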

Interactive Dashboards and Real-Time Monitoring

- Interactive dashboards transform static AI visualizations into dynamic exploration tools, enabling users to investigate data insights from multiple perspectives. Drill-down capabilities allow stakeholders to navigate from high-level KPI summaries to granular transaction details, supporting both strategic overview and tactical investigation needs. Filter controls, date range selectors, and parameter adjustments empower users to customize views without requiring technical expertise or data science knowledge.

- Real-time monitoring dashboards display AI model performance as new data flows through systems, essential for production machine learning applications. Streaming data visualizations update continuously, showing prediction accuracy, processing latency, and anomaly detection alerts. Color-coded indicators using traffic light systems—green, yellow, red—communicate system health at a glance, enabling rapid response to degrading model performance or data quality issues.

- Natural language querying represents a breakthrough in dashboard accessibility, allowing non-technical users to ask questions conversationally and receive appropriate visualizations automatically. AI-powered analytics platforms interpret user intent, select relevant data, and generate suitable chart types without manual configuration. This democratizes data exploration, reducing dependency on specialized analysts and accelerating insight discovery across organizations.

- Responsive design ensures interactive dashboards function effectively across devices, from desktop monitors to mobile phones. Touch-optimized controls and adaptive layouts maintain usability regardless of screen size, supporting field teams and remote workers accessing AI insights on various devices. Progressive disclosure techniques present essential information prominently while making detailed analyses available through expansion or navigation, preventing information overload while maintaining comprehensive data access.
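
The traffic-light monitoring idea from this section can be sketched as a rolling accuracy window mapped onto a status color. The class name, window size, and the 0.90 and 0.80 thresholds below are hypothetical choices; real thresholds would come from the service levels agreed for each model.

```python
from collections import deque

class RollingAccuracy:
    """Accuracy over the most recent `window` predictions."""

    def __init__(self, window=100):
        # A bounded deque drops the oldest outcome automatically.
        self.outcomes = deque(maxlen=window)

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def value(self):
        if not self.outcomes:
            return None  # nothing observed yet
        return sum(self.outcomes) / len(self.outcomes)

def health_status(accuracy, warn_below=0.90, critical_below=0.80):
    """Map an accuracy value onto a traffic-light color."""
    if accuracy is None:
        return "gray"  # no data yet
    if accuracy < critical_below:
        return "red"
    if accuracy < warn_below:
        return "yellow"
    return "green"

monitor = RollingAccuracy(window=4)
for predicted, actual in [(1, 1), (0, 1), (1, 1), (0, 0), (1, 1)]:
    monitor.record(predicted, actual)

status = health_status(monitor.value())
print(monitor.value(), status)
```

Pairing each color with a text label or icon in the dashboard keeps the indicator readable for users who cannot distinguish red from green.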

Color Theory and Visual Design Principles

- Color selection significantly impacts how audiences interpret data visualizations and extract AI insights. Sequential color schemes using single-hue gradients effectively represent continuous data like probability scores or prediction confidence levels. Diverging color palettes with contrasting hues highlight deviations from neutral points, ideal for displaying positive versus negative sentiment analysis results or gains versus losses in predictive models.

- Categorical data requires qualitative color palettes with distinct, easily distinguishable hues. Limiting a chart to about seven colors or fewer prevents visual confusion and keeps categories easy to tell apart. Colorblind-safe palettes using combinations like blue-orange or purple-green help visualizations remain readable for the roughly 8% of men who have some form of color vision deficiency. Testing visualizations with colorblind simulation tools validates accessibility before distribution.

- Visual hierarchy guides viewer attention to the most important data insights first. Typography choice, including font weights, sizes, and styles, emphasizes key findings while subordinating supporting details. White space—often undervalued—reduces clutter and improves comprehension by creating visual breathing room around elements. Contrast ratios between text and backgrounds must meet WCAG accessibility standards, ensuring readability for users with varying visual capabilities.

- Consistency across visualization suites creates professional, cohesive analytics experiences. Establishing design systems with standardized color palettes, font selections, and layout templates accelerates dashboard creation while maintaining brand identity. However, consistency shouldn’t become rigidity—flexibility to emphasize unique aspects of specific AI insights through thoughtful design variations prevents monotonous presentations and maintains audience engagement across multiple visualizations.
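
The WCAG contrast requirement mentioned in this section can be checked programmatically. The sketch below follows the WCAG 2.x definitions of relative luminance and contrast ratio; the function names are illustrative, and the 4.5:1 figure in the comments is the level AA minimum for normal-size text.

```python
def _linear(channel_8bit):
    """Convert an 8-bit sRGB channel to linear light (WCAG 2.x formula)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB'."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linear(r) + 0.7152 * _linear(g) + 0.0722 * _linear(b)

def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1 (identical) to 21."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)

# Two similar grays on white: one passes the 4.5:1 AA threshold, one does not.
for text_color in ("#767676", "#777777"):
    ratio = contrast_ratio(text_color, "#FFFFFF")
    print(text_color, round(ratio, 2), "pass" if ratio >= 4.5 else "fail")
```

Running a check like this over a design system's palette catches low-contrast label colors before they reach a dashboard.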

Implementing Predictive Analytics Visualizations

- Predictive analytics represents one of the most valuable applications of AI technology, and effective visualization techniques communicate future projections compellingly. Forecast charts combining historical actuals with predicted values using distinct visual treatments—solid lines for past, dashed lines for future—clearly differentiate known from estimated data. Confidence bands surrounding predictions communicate uncertainty ranges, helping stakeholders understand best-case and worst-case scenarios.

- Scenario comparison visualizations allow decision-makers to evaluate multiple predictive models simultaneously. Side-by-side bar charts or small multiples display how different assumptions or model configurations produce varying outcomes. What-if analysis dashboards with adjustable input parameters show prediction sensitivity, revealing which factors most significantly impact forecasted results. These interactive capabilities transform static predictions into dynamic planning tools supporting strategic decision-making.

- Lead scoring and customer churn predictions benefit from specialized visualization approaches like waterfall charts showing factors contributing to individual predictions. Feature contribution graphs break down how each variable influences a specific prediction, providing transparency into AI model reasoning. This interpretability proves crucial when predictive insights drive high-stakes decisions affecting customer relationships or resource allocation.

- Time-to-event predictions common in survival analysis and maintenance planning require Kaplan-Meier curves or cumulative incidence plots. These statistical visualizations display probability distributions over time, showing when equipment failures or customer conversions are most likely to occur. Incorporating risk scores through color intensity or marker sizes adds additional dimensionality, helping prioritize interventions for the highest-risk cases first.
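
The Kaplan-Meier curve referred to above is a running product of survival fractions at each observed event time. Here is a minimal standard-library sketch; the function name and the sample durations are assumptions for illustration, and dedicated survival analysis libraries provide confidence intervals and plotting on top of the same estimate.

```python
def kaplan_meier(durations, observed):
    """Return [(time, survival probability)] at each time with an event.

    durations: time until the event or until observation stopped.
    observed:  1 if the event happened, 0 if the record was censored.
    """
    events_at = {}   # time -> number of events at that time
    leaving_at = {}  # time -> number of records leaving the risk set
    for t, happened in zip(durations, observed):
        leaving_at[t] = leaving_at.get(t, 0) + 1
        if happened:
            events_at[t] = events_at.get(t, 0) + 1

    at_risk = len(durations)
    survival = 1.0
    curve = []
    for t in sorted(leaving_at):
        d = events_at.get(t, 0)
        if d:
            # Multiply by the fraction of at-risk records that survive time t.
            survival *= 1 - d / at_risk
            curve.append((t, survival))
        at_risk -= leaving_at[t]
    return curve

# Hypothetical machine lifetimes in months; the third record is censored.
durations = [1, 2, 2, 3]
observed = [1, 1, 0, 1]

curve = kaplan_meier(durations, observed)
print(curve)
```

Drawing `curve` as a step function gives the usual Kaplan-Meier plot; censored records lower the number at risk without causing a drop in the curve.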

Automated Insight Generation and Natural Language

- AI-powered insight generation automatically identifies significant patterns within data and presents them through natural language summaries alongside visualizations. These automated narratives highlight key findings like “Revenue increased 23% compared to last quarter, primarily driven by North American markets,” reducing the time required for manual analysis. Natural language generation (NLG) transforms statistical findings into readable explanations accessible to non-technical stakeholders.

- Anomaly detection visualizations benefit enormously from automated annotation systems that flag unusual patterns and explain their significance. When machine learning algorithms identify outliers or unexpected trends, callout boxes or highlighting draw attention to these critical points within charts. Contextual explanations describe why values are anomalous—comparing against historical norms, seasonal patterns, or peer benchmarks—supporting rapid investigation and response.

- Smart recommendations embedded within dashboards suggest next-best actions based on current AI insights. If predictive models indicate rising customer churn risk, visualizations might include recommended retention strategies or customer segments requiring immediate attention. These actionable insights transform passive data consumption into active decision support, increasing analytics business value and user engagement.

- Narrative flow in AI reporting follows logical sequences, guiding audiences from context through analysis to conclusions. Storytelling frameworks structure visualizations into beginning (situation overview), middle (detailed analysis), and end (recommendations) sequences. Progressive disclosure reveals complexity gradually, starting with high-level summaries before allowing deeper dives into technical details. This approach maintains engagement across diverse audiences from executives seeking quick insights to analysts requiring comprehensive technical information.
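
The automated annotations described in this section can be approximated very simply: compare each new value against historical norms and generate a short sentence for any that deviate strongly. The sketch below uses a z-score rule; the threshold of 3 standard deviations, the function name, and the sample data are assumptions, and production systems would usually account for seasonality as well.

```python
from statistics import mean, stdev

def annotate_anomalies(history, recent, threshold=3.0):
    """Return [(index, note)] for recent values far from the historical mean."""
    mu, sigma = mean(history), stdev(history)
    if sigma == 0:
        return []  # no variation in history, so z-scores are undefined
    notes = []
    for index, value in enumerate(recent):
        z = (value - mu) / sigma
        if abs(z) >= threshold:
            side = "above" if z > 0 else "below"
            notes.append((
                index,
                f"{value:g} is {abs(z):.1f} standard deviations {side} "
                f"the historical mean of {mu:g}",
            ))
    return notes

# Hypothetical daily order counts.
history = [100, 102, 98, 101, 99]
recent = [101, 110, 97]

notes = annotate_anomalies(history, recent)
for index, note in notes:
    print(f"point {index}: {note}")
```

Each note can be attached to the corresponding chart point as a callout, so the explanation travels with the highlighted value.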

Model Interpretability and Explainability Visuals

- Model interpretability has become essential as AI systems increasingly influence critical business decisions and face regulatory scrutiny. SHAP (SHapley Additive exPlanations) plots visualize feature contributions to individual predictions, showing how each variable pushes a prediction higher or lower. Waterfall charts display the cumulative effect of features, starting from a base value (the model’s expected output over a reference dataset) and adding positive or negative contributions until reaching the final prediction.

- Partial dependence plots reveal how machine learning models respond to specific variables while holding others constant. These visualizations show whether relationships are linear, curvilinear, or contain thresholds where model behavior changes dramatically. Two-dimensional partial dependence plots explore interaction effects between variable pairs, uncovering complex relationships that single-variable analyses might miss.

- Decision tree visualizations make ensemble methods like random forests and gradient boosting more interpretable. While displaying entire ensembles proves impractical, visualizing representative trees or averaged decision paths provides insights into model logic. Path highlighting techniques show which decision branches specific observations follow, explaining individual predictions through transparent decision sequences.

- Global feature importance rankings using bar charts or bubble plots communicate which variables matter most across all predictions. However, these aggregated views can mask local variations where different features dominate for specific subpopulations. Local interpretable model-agnostic explanations (LIME) address this by visualizing feature importance for individual predictions or small data regions, revealing how AI models adapt reasoning across diverse scenarios.
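
To show where the numbers in a SHAP-style waterfall chart come from, the sketch below computes exact Shapley values for a toy model by averaging each feature's marginal contribution over every feature ordering. This is an illustration under simplifying assumptions: `toy_model`, its feature names, and the single all-zero baseline are hypothetical, and the exhaustive enumeration is only practical for a handful of features. Real explanation libraries use approximations and background datasets instead.

```python
from itertools import permutations
from math import factorial

def shapley_values(predict, instance, baseline):
    """Exact Shapley values of `predict` at `instance` relative to `baseline`."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    for order in permutations(names):
        current = dict(baseline)
        previous = predict(current)
        for name in order:
            # Switch one feature from its baseline value to the instance value.
            current[name] = instance[name]
            value = predict(current)
            totals[name] += value - previous
            previous = value
    n_orders = factorial(len(names))
    return {name: total / n_orders for name, total in totals.items()}

def toy_model(x):
    # Hypothetical churn score with an interaction between the two features.
    return 0.1 + 0.3 * x["late_payments"] + 0.2 * x["support_calls"] * x["late_payments"]

baseline = {"late_payments": 0, "support_calls": 0}
instance = {"late_payments": 1, "support_calls": 2}

contributions = shapley_values(toy_model, instance, baseline)
base_value = toy_model(baseline)
print(base_value, contributions, toy_model(instance))
```

The contributions sum to the difference between the prediction and the base value, which is exactly the property a waterfall chart relies on when it stacks bars from the base value up to the final prediction.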

Handling High-Dimensional Data and Complexity

High-dimensional datasets common in AI applications, with hundreds or thousands of features, present significant visualization challenges that call for dimensionality reduction techniques. Principal Component Analysis (PCA) projects high-dimensional data onto the two or three directions of greatest variance, producing coordinates suitable for scatter plots. Labeling each axis with the share of variance its component explains helps users understand how much information the reduced representation retains.

- t-SNE and UMAP algorithms provide alternative dimensionality reduction approaches, particularly effective for visualizing cluster structures and nonlinear relationships. These techniques optimize local neighborhood preservation, revealing how similar observations cluster while dissimilar ones separate. Interactive 3D visualizations allow rotation and zoom, enabling exploration of spatial relationships that static 2D projections might obscure.

- Parallel coordinates plots display high-dimensional data without dimensionality reduction by representing each feature as a vertical axis and each observation as a polyline connecting values across axes. Brushing and linking techniques highlight selected observations across multiple visualizations, supporting multivariate pattern discovery. While initially complex for unfamiliar users, parallel coordinates prove powerful for identifying feature combinations characterizing specific clusters or outcomes.

- Heatmaps effectively visualize correlation matrices, confusion matrices, and other matrix-shaped relationships through color intensity. Hierarchical clustering applied to rows and columns reorders features to group related variables, revealing block structures and redundancies. Dendrograms alongside heatmap edges show clustering relationships, supporting feature selection decisions and identifying multicollinearity issues affecting model performance.
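
The correlation heatmap described above starts from a matrix of pairwise Pearson coefficients. A minimal sketch of that matrix, computed with the standard library, is shown here; the column names and values are made up, and the code assumes no column is constant (a constant column has zero variance and no defined correlation).

```python
from math import sqrt

def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)

def correlation_matrix(columns):
    """Return (names, matrix) where matrix[i][j] correlates names[i] with names[j]."""
    names = list(columns)
    matrix = [[pearson(columns[a], columns[b]) for b in names] for a in names]
    return names, matrix

# Hypothetical customer features.
columns = {
    "tenure":     [1, 2, 3, 4, 5],
    "spend":      [2, 4, 6, 8, 10],
    "complaints": [5, 4, 3, 2, 1],
}

names, matrix = correlation_matrix(columns)
for name, row in zip(names, matrix):
    print(name.ljust(12), [round(value, 2) for value in row])
```

Because the values run from -1 to 1 around a meaningful zero, a diverging color palette is the natural choice when this matrix is drawn as a heatmap.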

Performance Optimization for Large-Scale Visualizations

- Performance optimization becomes critical when visualizing AI insights from big data sources containing millions of observations. Data aggregation reduces point counts while preserving trend accuracy—displaying daily summaries instead of transaction-level details maintains visualization responsiveness without sacrificing essential patterns. Progressive rendering loads high-priority visible elements first, then populates additional details asynchronously, maintaining interface responsiveness during initial page loads.

- Server-side rendering shifts computational burden from client browsers to dedicated servers, particularly beneficial for complex statistical visualizations or machine learning model outputs requiring intensive calculations. Caching strategies store pre-computed aggregations and common queries, dramatically reducing response times for frequently accessed dashboards. Incremental updates refresh only changed data portions rather than regenerating entire visualizations, crucial for real-time monitoring applications.

- Level-of-detail (LOD) techniques adapt visualization complexity based on zoom level or screen resolution. Displaying simplified representations at overview scales and revealing full detail upon zooming balances performance with information richness. Canvas-based rendering handles larger point counts more efficiently than SVG, at the cost of some built-in interactivity, because individual marks are no longer separate DOM elements that can receive events. Selecting appropriate rendering technologies based on data volumes and interaction requirements optimizes user experience.

- Query optimization ensures that database operations supporting visualizations execute efficiently. Indexing frequently filtered columns, partitioning large tables, and pre-computing complex aggregations in materialized views prevent query bottlenecks. Asynchronous loading patterns display preliminary results immediately while background processes fetch additional details, keeping the interface responsive even with computationally intensive AI analytics.
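
The aggregation strategy from the start of this section (daily summaries instead of transaction-level points) can be sketched as follows. The record format of ISO timestamp plus value and the function name are assumptions; in practice this step would often run in the database or as a cached pre-computation rather than in application code.

```python
from collections import defaultdict
from datetime import datetime

def daily_summary(records):
    """Collapse (iso_timestamp, value) records into one summary per day."""
    buckets = defaultdict(list)
    for timestamp, value in records:
        day = datetime.fromisoformat(timestamp).date().isoformat()
        buckets[day].append(value)
    return {
        day: {
            "count": len(values),
            "mean": sum(values) / len(values),
            # Keeping min and max preserves spikes that a mean would hide.
            "min": min(values),
            "max": max(values),
        }
        for day, values in sorted(buckets.items())
    }

# Hypothetical transaction amounts.
records = [
    ("2024-03-01T09:15:00", 120.0),
    ("2024-03-01T17:40:00", 80.0),
    ("2024-03-02T11:05:00", 50.0),
]

summary = daily_summary(records)
for day, stats in summary.items():
    print(day, stats)
```

A chart can then draw the daily mean as a line with a min-to-max band, showing one mark per day regardless of how many transactions occurred.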

Collaboration and Sharing Best Practices

- Collaboration features transform individual data visualization efforts into team-wide knowledge resources. Annotation capabilities allow users to add contextual notes directly on charts, capturing insights and questions for colleagues reviewing the same analytics. Version control tracks dashboard changes over time, supporting rollback if modifications introduce issues and documenting the evolution of analytical approaches.

- Role-based access control ensures sensitive AI insights reach appropriate audiences while maintaining data security. Different stakeholder groups may require distinct visualization levels—executives need strategic summaries, while operations teams require tactical details. Personalization features let users customize default views, favorite specific dashboards, and configure alert thresholds matching their responsibilities.

- Export capabilities supporting various formats—PDF, PowerPoint, Excel, images—enable integration of visualizations into broader reporting workflows and presentations. However, exported static versions lose interactivity benefits; providing shareable dashboard links maintains dynamic features while extending access beyond platform users. Embedding options integrate AI visualizations into external applications, portals, or business intelligence ecosystems.

- Documentation standards ensure visualizations remain interpretable long after creation. Clearly labeled axes, legend descriptions, and methodology notes prevent misinterpretation. Data dictionaries define metrics, calculations, and business rules underlying visualizations, supporting consistency across teams and preventing duplicate, conflicting analyses. Change logs document modifications to data sources or calculation logic, maintaining transparency about why historical trends might shift after updates.

Mobile-First Visualization Design

- Mobile devices increasingly serve as primary data access points, demanding visualization designs optimized for smaller screens and touch interactions. Responsive layouts automatically adjust chart dimensions, font sizes, and control placements based on device characteristics, maintaining usability across form factors. Touch-optimized controls with larger hit targets accommodate finger-based interaction, replacing hover-dependent features that desktop mice easily activate but touchscreens struggle with.

- Simplified mobile views prioritize essential KPIs and key visualizations while making detailed analyses available through deliberate navigation. Progressive disclosure techniques prevent overwhelming small screens with excessive information, presenting summary insights prominently and allowing drill-downs for users requiring deeper analysis. Gesture support, including pinch-to-zoom and swipe navigation, creates intuitive mobile experiences matching user expectations from consumer applications.

- Offline capabilities enable mobile visualizations to function with limited connectivity—common in field operations, travel scenarios, or areas with unreliable networks. Data caching stores recent dashboard states locally, allowing users to review AI insights even without active connections. Sync mechanisms update cached data when connectivity returns, balancing fresh information with offline availability.

- Performance considerations intensify on mobile devices with limited processing power and battery life compared to desktop systems. Lightweight visualizations with reduced animation complexity and fewer data points maintain acceptable responsiveness on mid-range smartphones. Lazy loading defers rendering off-screen content until users scroll to it, conserving resources and accelerating initial load times, both critical for mobile user retention.

Testing and Validation Strategies

- Visualization testing ensures AI insights communicate accurately and effectively before releasing dashboards to broader audiences. Data validation compares visualization outputs against known correct results using test datasets with verified answers. Edge case testing evaluates how visualizations handle unusual data conditions—extreme values, missing data, zero counts—ensuring graceful degradation rather than confusing displays or errors.

- User testing with representative stakeholders reveals whether visualizations actually communicate intended insights. Think-aloud protocols, where participants describe their interpretation while exploring dashboards, uncover misunderstandings, confusing elements, or missing context. A/B testing compares alternative visualization designs and measures which one more effectively drives desired user behaviors like identifying key trends or taking recommended actions.

- Cross-browser compatibility testing ensures visualizations function correctly across common browsers and versions. JavaScript frameworks powering interactive features may behave differently across browsers; thorough testing prevents surprises when users access dashboards through various platforms. Performance testing under realistic concurrent user loads validates that AI visualization systems maintain responsiveness during peak usage periods.

- Accessibility audits using automated tools and manual evaluation confirm visualizations meet WCAG standards. Keyboard navigation testing ensures all interactive features work without a mouse, supporting users with motor impairments. Screen reader compatibility verification confirms that alternative text appropriately describes visual content for blind users. Color contrast validation prevents low-vision users from struggling to distinguish important elements.
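
The edge case testing described at the start of this section can be as simple as a table of awkward inputs and the output each should produce. The sketch below tests a hypothetical label formatter, `format_rate`, against zero counts and missing values; both the helper and the choice of "n/a" as the fallback text are assumptions for illustration.

```python
import math

def format_rate(numerator, denominator):
    """Format a ratio for a chart label, degrading gracefully on bad input."""
    if any(v is None or math.isnan(v) for v in (numerator, denominator)):
        return "n/a"  # missing data
    if denominator == 0:
        return "n/a"  # zero count: the rate is undefined
    return f"{numerator / denominator:.1%}"

# Each entry pairs an input with the label a viewer should see.
edge_cases = [
    ((1, 4), "25.0%"),
    ((0, 10), "0.0%"),
    ((3, 0), "n/a"),
    ((None, 5), "n/a"),
    ((float("nan"), 5), "n/a"),
]

failures = [
    (args, expected, format_rate(*args))
    for args, expected in edge_cases
    if format_rate(*args) != expected
]
print("all edge cases pass" if not failures else failures)
```

Keeping the cases in a plain list makes it easy to add a new row whenever a dashboard surfaces an input nobody anticipated.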

Ethical Considerations and Bias Awareness

- Ethical visualization practices acknowledge how presentation choices influence interpretation and decision-making. Y-axis manipulation—starting at nonzero values or using logarithmic scales without clear labeling—can exaggerate or minimize trends deceptively. Transparent scaling and consistent axis ranges across related charts maintain integrity, allowing fair comparisons without distorting perceptions.

- Bias detection in AI visualizations requires vigilance about how machine learning models might perpetuate historical discrimination. Demographic breakdowns displaying model performance across protected characteristics reveal whether predictions work equally well for all groups. Disparate impact visualizations show if AI systems produce systematically different outcomes for specific populations, highlighting potential fairness concerns requiring investigation.

- Data source transparency builds trust by clearly disclosing where information originates, collection methods, and potential limitations. Sample size indicators prevent overconfident conclusions from small datasets with high uncertainty. Update timestamps show information currency, crucial when AI insights inform time-sensitive decisions, where stale data might mislead.

- Privacy protection through aggregation and anonymization ensures visualizations don’t inadvertently reveal sensitive individual information. K-anonymity principles guarantee sufficient group sizes before displaying demographic breakdowns, preventing the identification of specific individuals. Differential privacy techniques add statistical noise, protecting privacy while maintaining overall pattern accuracy, enabling useful insights without compromising confidentiality commitments.
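
Two ideas from this section, per-group outcome comparisons and minimum group sizes, combine naturally in code. The sketch below computes a positive-outcome rate per group, suppresses any group with fewer than `k` members before display, and reports the ratio between the lowest and highest displayed rates. The group labels, the value `k=5`, and the function names are illustrative assumptions. Note that this is simple small-cell suppression in the spirit of k-anonymity, not a full k-anonymity or differential privacy mechanism.

```python
from collections import defaultdict

def group_rates(records, k=5):
    """Positive-outcome rate per group; None marks groups smaller than k."""
    totals = defaultdict(lambda: [0, 0])  # group -> [positives, members]
    for group, outcome in records:
        totals[group][0] += outcome
        totals[group][1] += 1
    return {
        group: (positives / members if members >= k else None)
        for group, (positives, members) in totals.items()
    }

def impact_ratio(rates):
    """Lowest displayed rate divided by the highest, or None if not comparable."""
    shown = [rate for rate in rates.values() if rate is not None]
    if len(shown) < 2 or max(shown) == 0:
        return None
    return min(shown) / max(shown)

# Hypothetical (group, approved) records.
records = (
    [("A", 1)] * 6 + [("A", 0)] * 4
    + [("B", 1)] * 3 + [("B", 0)] * 7
    + [("C", 1)] * 2
)

rates = group_rates(records)
ratio = impact_ratio(rates)
print(rates, ratio)
```

A bar chart of `rates` with suppressed groups shown as "insufficient data" makes disparities visible without exposing outcomes for very small groups.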

Tool Selection and Technology Stack

- AI visualization tool selection balances functionality, ease of use, cost, and integration capabilities. Enterprise platforms like Tableau, Power BI, and Qlik offer comprehensive features, strong governance, and extensive connectivity options suitable for large organizations with diverse data sources. Natural language querying, automated insights, and collaboration features make these platforms accessible to non-technical users while supporting advanced customization for specialists.

- Open-source libraries, including D3.js, Plotly, and Matplotlib, provide maximum flexibility and customization control for developers building bespoke visualization solutions. React-based frameworks like Recharts integrate seamlessly into modern web applications, supporting component-based architectures. Python ecosystems combining Pandas, Seaborn, and Dash enable data scientists to create sophisticated interactive dashboards using familiar tools.

- Specialized AI visualization platforms like TensorBoard for deep learning or MLflow for model lifecycle management offer purpose-built features for specific AI workflows. These domain-focused tools provide model performance tracking, hyperparameter tuning visualizations, and experiment comparison capabilities that general business intelligence platforms lack.

- Integration capabilities determine how easily visualization tools connect to AI infrastructure. API support enables programmatic dashboard updates and embedding into existing applications. Cloud-native platforms offering serverless deployment models reduce infrastructure management overhead while scaling automatically with usage. Data connector breadth affects whether tools can access diverse data sources without requiring intermediate ETL processes.

Future Trends in AI Data Visualization

- Augmented reality (AR) and virtual reality (VR) represent emerging frontiers for immersive data visualization. Three-dimensional spatial representations of complex AI insights leverage human spatial reasoning capabilities, potentially revealing patterns obscured in traditional two-dimensional displays. Collaborative VR spaces enable distributed teams to explore data together, pointing and annotating shared virtual dashboards regardless of physical locations.

- Voice-activated analytics, building on natural language processing advances, allow hands-free data exploration. Users query AI insights conversationally while driving, cooking, or performing other tasks, with systems responding through audio summaries and automatically generated visualizations appearing on nearby screens. Conversational AI assistants proactively surface relevant insights based on user contexts and preferences.

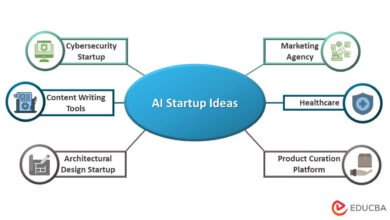

- Automated visualization generation using generative AI will increasingly suggest optimal chart types and layouts based on data characteristics and communication goals. AI assistants might create entire dashboard suites from simple prompts like “show me factors affecting customer churn,” selecting appropriate visualizations, organizing layouts effectively, and highlighting key insights without manual configuration.

- Real-time personalization adapts visualization complexity and focus areas based on individual user roles, expertise levels, and current tasks. Executives might see strategic summaries, while analysts viewing the same data encounter detailed technical analyses. Machine learning observes user interaction patterns to refine presentations, displaying the most relevant metrics prominently and de-emphasizing rarely accessed details.

Conclusion

Mastering data visualization best practices for AI insights has become indispensable for organizations seeking a competitive advantage through artificial intelligence. Effective visualizations bridge the gap between complex machine learning algorithms and human decision-makers, transforming raw AI outputs into compelling narratives that drive action. By selecting appropriate chart types, implementing interactive dashboards, maintaining data quality, and following ethical design principles, practitioners ensure their AI investments generate measurable business value.

The convergence of advancing visualization technologies, expanding AI capabilities, and growing data volumes demands continuous learning and adaptation. Organizations prioritizing visualization excellence empower stakeholders at all levels to leverage AI insights confidently, fostering data-driven cultures that respond rapidly to opportunities and challenges. As artificial intelligence continues evolving, those who skillfully communicate its discoveries through thoughtful data visualization will lead their industries into an increasingly intelligence-augmented future where human expertise and machine insights combine synergistically for unprecedented outcomes.