Edge Computing vs Cloud Computing for AI Applications

Edge computing vs cloud computing for AI: Compare latency, scalability, security & costs. Discover which approach suits your real-time AI

The landscape of artificial intelligence applications is rapidly evolving, with organizations facing critical decisions about where and how to deploy their AI workloads. The choice between edge computing and cloud computing for AI applications has become one of the most significant architectural decisions in modern technology infrastructure. As businesses increasingly rely on real-time data processing and intelligent automation, understanding the fundamental differences, advantages, and limitations of these two computing paradigms is essential for making informed strategic decisions.

Edge computing processes data locally on devices or nearby servers, bringing computational power closer to where data is generated. This approach contrasts sharply with traditional cloud computing models, where data is transmitted to centralized, remote data centers for processing. For AI applications, this distinction becomes particularly crucial as it directly impacts factors like latency, bandwidth consumption, data privacy, and overall system performance.

The rise of Internet of Things (IoT) devices, autonomous systems, and real-time analytics has intensified the debate between edge and cloud deployment strategies. Organizations must now consider various factors, including data processing requirements, latency sensitivity, scalability needs, and security concerns, when architecting their AI systems. Modern AI workloads range from simple sensor data analysis to complex machine learning models, each with unique computational and infrastructure requirements.

Real-time AI processing has become increasingly critical across industries, from manufacturing and healthcare to transportation and retail. Applications requiring instant decision-making, such as autonomous vehicles, industrial automation, and medical monitoring systems, demand ultra-low latency that traditional cloud-based approaches may struggle to deliver. Conversely, applications requiring massive computational resources for training complex neural networks often benefit from the scalability and power of cloud infrastructure.

The convergence of edge computing and cloud AI technologies is creating hybrid architectures that leverage the strengths of both approaches. These hybrid models enable organizations to optimize their AI deployments based on specific use cases, balancing factors like performance, cost, and operational complexity. Understanding these trade-offs and identifying the optimal deployment strategy for different AI applications has become a critical competency for technology leaders and architects in today’s data-driven economy.

Edge Computing for AI Applications

Edge computing represents a distributed computing paradigm that brings data processing capabilities closer to the source of data generation. In the context of AI applications, this means running machine learning models and artificial intelligence algorithms directly on local devices, sensors, or nearby edge servers rather than relying on distant cloud data centers. This fundamental shift in computational architecture addresses several critical challenges associated with traditional centralized processing models.

Core Principles of Edge AI

Edge AI operates on the principle of decentralized intelligence, where AI models are deployed and executed at the network edge. This approach involves several key components: edge devices equipped with AI-capable processors, local data storage systems, and specialized software frameworks optimized for resource-constrained environments. The edge computing architecture typically includes IoT devices, edge gateways, and micro data centers strategically positioned to minimize the distance between data generation and processing.

The implementation of edge AI solutions requires careful consideration of hardware capabilities, power consumption, and thermal management. Modern edge devices leverage specialized AI chips, such as neural processing units (NPUs), graphics processing units (GPUs), and field-programmable gate arrays (FPGAs), to achieve optimal performance while maintaining energy efficiency. These hardware optimizations enable complex AI algorithms to run effectively on devices with limited computational resources compared to traditional cloud infrastructure.

Real-Time Processing Capabilities

One of the most significant advantages of edge computing for AI is its ability to deliver real-time processing capabilities. By eliminating the need to transmit data to remote servers, edge AI systems can process information and make decisions within milliseconds. This ultra-low latency is crucial for applications such as autonomous driving, industrial safety systems, and medical monitoring devices, where delays can have serious consequences.

Real-time AI processing at the edge enables immediate responses to changing conditions and events. For example, in manufacturing environments, edge AI systems can detect equipment anomalies and trigger automatic shutdowns before failures occur. Similarly, in retail environments, edge-based computer vision systems can analyze customer behavior and adjust displays or recommendations instantly without waiting for cloud processing delays.
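The manufacturing example can be sketched in a few lines. This is a minimal illustration, assuming a rolling z-score stands in for whatever anomaly model a real deployment would use; the class name `VibrationMonitor`, the window size, and the threshold are hypothetical choices, and the shutdown itself is represented only by the returned flag.

```python
from collections import deque
from statistics import mean, pstdev


class VibrationMonitor:
    """Flags readings far from the recent rolling average (hypothetical helper)."""

    def __init__(self, window: int = 20, z_threshold: float = 3.0):
        self.readings = deque(maxlen=window)  # recent normal readings only
        self.z_threshold = z_threshold

    def is_anomalous(self, value: float) -> bool:
        """Return True if value deviates strongly from the recent window."""
        anomalous = False
        if len(self.readings) >= 5:  # wait for a little history first
            mu = mean(self.readings)
            sigma = pstdev(self.readings)
            if sigma > 0 and abs(value - mu) / sigma > self.z_threshold:
                anomalous = True
        if not anomalous:
            # Only normal readings update the baseline.
            self.readings.append(value)
        return anomalous
```

Because the check runs on the device, the decision to stop a machine never waits on a network round trip. A caller would feed each sensor reading to `is_anomalous` and trigger the shutdown path when it returns True.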

Privacy and Security Benefits

Edge computing offers enhanced privacy and security by keeping sensitive data local to the source. Instead of transmitting raw data across networks to cloud servers, edge devices process information locally and only share aggregated results or insights. This approach reduces the amount of sensitive data in transit and minimizes exposure to data breaches during transmission.

The data privacy advantages of edge computing are particularly important in regulated industries such as healthcare, finance, and government applications. By processing patient data, financial transactions, or sensitive government information locally, organizations can maintain better control over their data and ensure compliance with regulations such as GDPR, HIPAA, and other data protection standards.

Cloud Computing Architecture for AI Workloads

Cloud computing provides a centralized approach to AI applications, leveraging vast computational resources, storage capabilities, and specialized services offered by major cloud providers. This model enables organizations to access powerful infrastructure without significant upfront investments in hardware, making advanced artificial intelligence capabilities accessible to businesses of all sizes.

Scalability and Resource Flexibility

Cloud AI platforms offer virtually unlimited scalability, allowing organizations to dynamically adjust computational resources based on demand. This elasticity is particularly valuable for machine learning workloads that experience varying computational requirements during different phases of the AI lifecycle. Training complex neural networks, for instance, may require massive parallel processing capabilities that can be provisioned on demand in cloud environments.

The cloud computing architecture supports both horizontal and vertical scaling, enabling AI applications to handle everything from small experimental workloads to enterprise-scale production systems. Cloud providers offer specialized AI services, including pre-trained models, AutoML platforms, and managed machine learning services that accelerate the development and deployment of AI solutions.

Advanced AI Services and Tools

Major cloud platforms provide comprehensive ecosystems of AI development tools, frameworks, and pre-built services that significantly reduce the complexity of implementing AI applications. These platforms offer specialized services for natural language processing, computer vision, speech recognition, and predictive analytics, allowing developers to integrate sophisticated AI capabilities without building models from scratch.

Cloud-based AI services also provide access to cutting-edge research and continuously updated models. Cloud providers invest heavily in AI research and development, making the latest advancements in artificial intelligence available to their customers through managed services and APIs. This approach enables organizations to leverage state-of-the-art AI capabilities without maintaining specialized research teams or infrastructure.

Global Accessibility and Collaboration

Cloud computing enables global accessibility to AI applications and data, facilitating collaboration across distributed teams and locations. This accessibility is crucial for organizations with international operations or remote teams working on AI projects. Cloud platforms provide consistent access to AI resources and data from anywhere in the world, supporting modern distributed work environments.

The collaborative capabilities of cloud platforms extend to AI model development and deployment workflows. Teams can share datasets, collaborate on model development, version control their AI assets, and deploy models globally through cloud infrastructure. This global reach is particularly valuable for applications serving international user bases or requiring data aggregation from multiple geographic regions.

Performance Comparison: Latency and Speed Analysis

The performance characteristics of edge computing versus cloud computing for AI applications differ significantly across multiple dimensions, with latency being one of the most critical factors influencing deployment decisions. Understanding these performance trade-offs is essential for architects and decision-makers when selecting the optimal infrastructure for their AI workloads.

Latency Characteristics and Impact

Edge AI typically delivers sub-millisecond to low-millisecond latency for real-time processing scenarios, as data doesn’t need to travel across networks to distant servers. This ultra-low latency is achieved by processing data locally on edge devices or nearby edge servers, eliminating network transmission delays that can range from 20 to 100 milliseconds or more in cloud-based systems.

Because inference runs directly on the device, responses do not wait on a round trip to a centralized server. This performance advantage is particularly crucial for applications requiring immediate responses, such as autonomous vehicles, industrial control systems, and medical monitoring devices.

Cloud computing latency varies significantly based on factors including geographic distance to data centers, network conditions, and current server loads. While cloud providers have established global networks of data centers to minimize latency, the fundamental physics of data transmission over long distances creates inherent delays that local processing avoids.
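A rough latency budget makes the comparison concrete. The sketch below assumes that propagation delay in optical fiber (roughly 200 km per millisecond) sets the floor for a round trip; the function names and the example inference times are illustrative assumptions, not measurements.

```python
# Light in optical fiber travels at roughly two thirds of its vacuum speed,
# which works out to about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on network round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


def end_to_end_ms(inference_ms: float, network_rtt_ms: float = 0.0) -> float:
    """Total response time: model inference plus any network round trip."""
    return inference_ms + network_rtt_ms


# Illustrative numbers only (assumptions, not measurements):
# a slower local chip with no network hop ...
edge_total = end_to_end_ms(inference_ms=8.0)
# ... versus a faster server behind a 40 ms round trip.
cloud_total = end_to_end_ms(inference_ms=3.0, network_rtt_ms=40.0)
```

Even when the cloud server runs the model faster, the network round trip can dominate the total. Real round-trip times are higher than the propagation floor because of routing, queuing, and protocol overhead.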

Throughput and Processing Capacity

Cloud AI platforms excel in high-throughput scenarios where massive amounts of data need to be processed simultaneously. The virtually unlimited computational resources available in cloud environments enable processing of large datasets, training of complex machine learning models, and handling of concurrent requests that would overwhelm typical edge devices.

Edge computing throughput is constrained by the computational capabilities of individual edge devices, which typically have limited processing power compared to cloud infrastructure. However, edge deployments can achieve high aggregate throughput through distributed processing across multiple edge nodes, effectively parallelizing workloads across the edge network.

Bandwidth Utilization and Network Efficiency

Edge AI significantly reduces bandwidth consumption by processing data locally and transmitting only results or aggregated insights to central systems. This approach is particularly valuable in scenarios involving high-volume sensor data, video streams, or other bandwidth-intensive data sources that would be expensive or impractical to transmit continuously to the cloud.

Cloud computing approaches require substantial bandwidth for uploading raw data, which can become a bottleneck for applications generating large volumes of data. Network costs and limitations can significantly impact the total cost of ownership and performance of cloud-based AI solutions, especially in remote locations or areas with limited network infrastructure.
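The bandwidth difference can be estimated with simple arithmetic. In the sketch below, the frame size, frame rate, and event size are assumed values for a single camera, chosen only to show the order of magnitude; `stream_mbps` and `monthly_gb` are hypothetical helper names.

```python
def stream_mbps(bytes_per_message: int, messages_per_second: float) -> float:
    """Sustained bandwidth of a message stream in megabits per second."""
    return bytes_per_message * 8 * messages_per_second / 1_000_000


def monthly_gb(mbps: float, days: int = 30) -> float:
    """Data volume in gigabytes for a stream running continuously."""
    bytes_per_second = mbps * 1_000_000 / 8
    return bytes_per_second * 86_400 * days / 1_000_000_000


# Assumed workload: one camera sending 50 KB compressed frames at 30 fps ...
raw_upload = stream_mbps(bytes_per_message=50_000, messages_per_second=30)
# ... versus an edge device sending one 200-byte detection event per second.
edge_events = stream_mbps(bytes_per_message=200, messages_per_second=1)
```

Under these assumptions the raw stream needs 12 Mbps per camera, about 3,888 GB per month, while the event stream is thousands of times smaller.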

Scalability and Resource Management

The scalability characteristics of edge computing and cloud computing for AI applications present fundamentally different approaches to resource management, each with distinct advantages and limitations that organizations must carefully consider when designing their AI infrastructure.

Horizontal vs. Vertical Scaling Approaches

Cloud computing offers exceptional scalability through both horizontal and vertical scaling mechanisms. Horizontal scaling allows AI applications to leverage additional servers and computational resources as demand increases, while vertical scaling enables upgrading individual resources such as CPU, memory, or storage capacity. This flexibility makes cloud platforms ideal for AI workloads with unpredictable or highly variable resource requirements.

Edge computing scalability operates through distributed horizontal scaling, where additional edge devices or nodes are deployed to handle increased workloads. This approach requires careful planning and coordination to ensure consistent performance and data management across the distributed edge infrastructure. While individual edge nodes have limited scalability, the collective capacity of edge networks can be substantial.

Resource Allocation and Optimization

Cloud AI platforms provide sophisticated resource allocation mechanisms, including auto-scaling capabilities that automatically adjust computational resources based on demand patterns. These platforms offer specialized AI-optimized instances, GPU clusters, and tensor processing units (TPUs) that can be dynamically allocated to different machine learning workloads based on performance requirements and cost constraints.
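As a sketch of how demand-based auto-scaling can work, the function below applies a proportional target-tracking rule: scale the replica count by the ratio of observed to target utilization, then clamp it. This is the same general form that the Kubernetes Horizontal Pod Autoscaler documents; the function name, parameter names, and limits here are illustrative assumptions rather than any provider's API.

```python
import math


def desired_replicas(
    current_replicas: int,
    utilization_pct: int,
    target_pct: int,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Proportional scaling: grow or shrink so utilization moves toward target."""
    raw = math.ceil(current_replicas * utilization_pct / target_pct)
    # Clamp to the configured bounds so a spike cannot scale without limit.
    return max(min_replicas, min(max_replicas, raw))
```

For example, four replicas running at 90% utilization against a 60% target would scale out to six, while the same four replicas at 30% would scale in to two.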

Edge computing resource management requires more strategic planning, as resources are typically pre-allocated and cannot be easily adjusted on demand. Organizations must carefully balance the computational capabilities of edge devices against expected workloads, often over-provisioning to handle peak demands or implementing load balancing across multiple edge nodes.
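Because edge capacity is fixed at deployment time, sizing is usually done up front. The sketch below assumes a simple model where each node sustains a known request rate and a percentage of headroom is added for peaks; `edge_nodes_needed` and all figures are hypothetical.

```python
def edge_nodes_needed(
    peak_requests_per_s: int,
    per_node_requests_per_s: int,
    headroom_pct: int = 20,
) -> int:
    """Number of edge nodes to provision for peak load plus headroom."""
    # Work in integers to avoid floating-point rounding at exact boundaries.
    numerator = peak_requests_per_s * (100 + headroom_pct)
    denominator = per_node_requests_per_s * 100
    return -(-numerator // denominator)  # ceiling division
```

With a peak of 450 requests per second, nodes that each handle 60, and 20% headroom, this yields nine nodes. Unlike the cloud case, those nine nodes are paid for whether or not the peak ever arrives.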

Cost Implications of Scaling

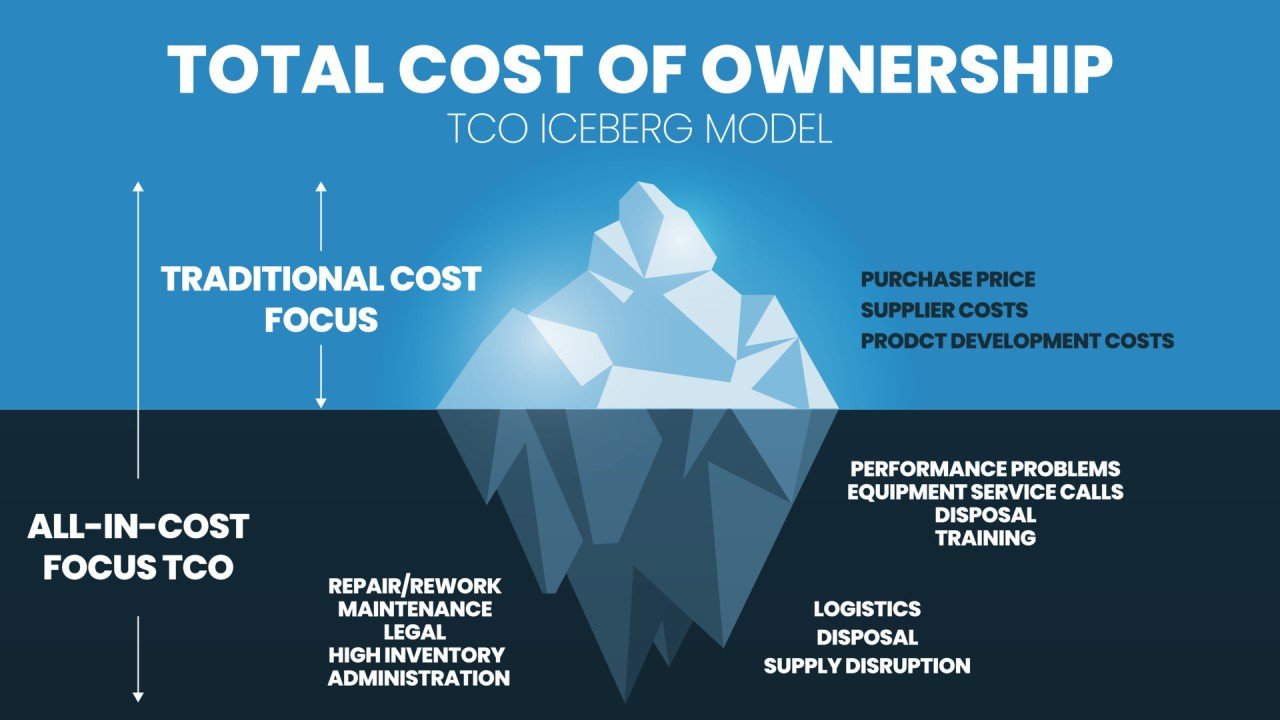

The cost structures of scaling edge versus cloud AI deployments differ significantly. Cloud computing typically follows a pay-as-you-use model, where costs scale roughly linearly with resource consumption. This approach ties spending to actual use and eliminates upfront capital expenditures, but can become expensive for consistently high-utilization workloads.

Edge computing scaling involves higher upfront capital costs for purchasing and deploying edge hardware, but generally results in lower operational costs for high-utilization scenarios. The total cost of ownership for edge deployments often decreases as utilization increases, making this approach more economical for applications with consistent, high-volume processing requirements.
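The trade-off between the two cost structures can be expressed as a break-even point. The sketch below assumes a deliberately simple linear model: cloud cost is an hourly rate times usage, and edge cost is an upfront purchase plus a smaller hourly operating cost. All prices are made-up illustrative values.

```python
from typing import Optional


def cloud_cost(hours: float, rate_per_hour: float) -> float:
    """Pay-as-you-use: cost grows linearly with hours consumed."""
    return rate_per_hour * hours


def edge_cost(hours: float, capex: float, opex_per_hour: float) -> float:
    """Upfront hardware purchase plus ongoing power and maintenance."""
    return capex + opex_per_hour * hours


def break_even_hours(
    capex: float, cloud_rate_per_hour: float, edge_opex_per_hour: float
) -> Optional[float]:
    """Hours of use after which edge becomes cheaper, or None if it never does."""
    saving_per_hour = cloud_rate_per_hour - edge_opex_per_hour
    if saving_per_hour <= 0:
        return None
    return capex / saving_per_hour
```

With an assumed 6,000 in hardware, a cloud rate of 2.00 per hour, and edge operating costs of 0.50 per hour, edge breaks even after 4,000 hours of use. A workload running around the clock reaches that point in under six months, while an occasional workload may never reach it.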

Security and Privacy Considerations

Security and privacy represent critical decision factors when choosing between edge computing and cloud computing for AI applications. Each approach presents unique security advantages and challenges that organizations must carefully evaluate based on their specific requirements, regulatory constraints, and risk tolerance.

Data Privacy and Compliance

Edge AI offers significant privacy advantages by keeping sensitive data local to its source, reducing the risk of data exposure during transmission and storage in external systems. Local processing minimizes the risk of data breaches and enhances data privacy and security. This approach is particularly valuable for applications handling personal information, medical records, financial data, or other sensitive information subject to strict regulatory requirements.

Cloud computing requires transmitting data to external servers, creating potential privacy concerns and regulatory compliance challenges. However, major cloud providers invest heavily in security infrastructure and compliance certifications, often providing more robust security measures than many organizations can implement independently. Cloud platforms typically offer advanced encryption, access controls, and audit capabilities that enhance overall security posture.

Attack Surface and Vulnerability Management

Edge computing presents a distributed attack surface that can be challenging to secure and maintain. Each edge device represents a potential entry point for attackers, requiring comprehensive security measures across the entire edge network. However, the distributed nature of edge computing can also limit the impact of security breaches, as compromising individual devices doesn’t necessarily provide access to the entire system.

Cloud AI platforms consolidate security management into centralized systems managed by specialized security teams. While this creates larger, more attractive targets for attackers, cloud providers typically implement enterprise-grade security measures, including advanced threat detection, automated patching, and professional security monitoring that individual organizations might struggle to replicate.

Identity and Access Management

Cloud computing platforms provide sophisticated identity and access management systems that enable fine-grained control over who can access AI applications and data. These systems support role-based access controls, multi-factor authentication, and integration with enterprise identity systems, facilitating secure collaboration and governance.
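Role-based access control reduces to a mapping from roles to permissions and a check against it. The sketch below is a generic illustration, not any cloud provider's IAM API; the role names and permission strings are hypothetical.

```python
from typing import Iterable

# Hypothetical roles and permissions for an AI platform.
ROLE_PERMISSIONS = {
    "data-scientist": {"dataset:read", "model:train", "model:read"},
    "ml-engineer": {"model:read", "model:deploy"},
    "auditor": {"model:read", "audit-log:read"},
}


def is_allowed(roles: Iterable[str], permission: str) -> bool:
    """True if any of the user's roles grants the requested permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

A user holding only the `data-scientist` role could train a model but not deploy it, and an unknown role grants nothing.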

Edge AI deployments often rely on simpler security models due to resource constraints and the distributed nature of edge devices. However, emerging standards and technologies for edge security, including hardware-based security modules and blockchain-based authentication, are improving the security capabilities of edge deployments.

Use Cases and Industry Applications

The choice between edge computing and cloud computing for AI applications often depends on specific industry requirements, use case characteristics, and operational constraints. Different sectors have embraced these technologies in varying ways, each leveraging the unique advantages of edge or cloud architectures to solve domain-specific challenges.

Manufacturing and Industrial IoT

Manufacturing environments increasingly rely on edge AI for real-time quality control, predictive maintenance, and process optimization. Edge AI technology enables real-time processing of sensor data with low latency and high reliability, which is essential for ensuring safety and efficiency. Factory floor applications require immediate responses to equipment failures, quality defects, or safety hazards, making the ultra-low latency of edge computing critical for operational effectiveness.

Cloud computing complements edge deployments in manufacturing by providing centralized analytics, model training, and enterprise-wide optimization capabilities. Historical data from multiple facilities can be aggregated in the cloud for comprehensive analysis, supply chain optimization, and strategic decision-making that benefits from the computational power and storage capacity of cloud platforms.

Healthcare and Medical Devices

Healthcare applications demonstrate the complementary nature of edge and cloud AI architectures. Patient monitoring devices, medical imaging equipment, and diagnostic tools benefit from edge computing for immediate analysis and alert generation. Real-time processing of vital signs, ECG data, or medical images enables rapid diagnosis and treatment decisions that can be life-saving.

Cloud AI supports healthcare applications through large-scale data analysis, population health studies, and training of sophisticated machine learning models using vast datasets from multiple healthcare providers. The scalability and computational power of cloud platforms enable medical research and the development of advanced diagnostic algorithms that improve patient care across the healthcare system.

Autonomous Vehicles and Transportation

The autonomous vehicle industry exemplifies applications requiring edge AI for safety-critical decision-making. Self-driving cars must process sensor data from cameras, lidar, and radar systems in real-time to make split-second decisions about navigation, obstacle avoidance, and emergency responses. The latency requirements for these applications make cloud-based processing impractical for critical safety functions.

Cloud computing supports autonomous vehicle ecosystems through high-definition mapping, route optimization, traffic pattern analysis, and continuous model improvement based on fleet-wide data collection. The cloud infrastructure enables the massive data processing required for improving autonomous driving algorithms and coordinating transportation networks.

Smart Cities and Urban Infrastructure

Smart city initiatives leverage both edge and cloud computing to optimize urban services and infrastructure. Edge AI enables real-time traffic management, intelligent lighting systems, environmental monitoring, and public safety applications that require immediate responses to changing conditions. Edge deployments in smart cities reduce bandwidth costs and improve system reliability by processing data locally.

Cloud AI platforms support smart city applications through city-wide analytics, resource planning, and predictive modeling that inform policy decisions and infrastructure investments. The ability to analyze data from multiple sources across the urban environment enables a comprehensive understanding of city dynamics and evidence-based urban planning.

Cost Analysis and ROI Considerations

The financial implications of edge computing versus cloud computing for AI applications require a comprehensive analysis of both direct costs and indirect economic impacts. Organizations must consider initial investments, operational expenses, and long-term total cost of ownership when making infrastructure decisions.

Initial Investment and Setup Costs

Edge computing typically requires significant upfront capital expenditure for purchasing specialized hardware, edge devices, and local infrastructure. These costs include AI-capable processors, storage systems, networking equipment, and environmental controls necessary for edge deployments. Organizations must also invest in edge management software and monitoring systems to effectively operate distributed edge infrastructure.

Cloud computing minimizes initial investment by providing AI capabilities through subscription-based models. Organizations can begin using sophisticated AI services immediately without purchasing hardware, setting up data centers, or hiring specialized infrastructure teams. This approach enables faster time-to-market and reduces financial risk associated with technology investments.

Operational Expenses and Ongoing Costs

Cloud AI platforms follow usage-based pricing models where costs scale with computational consumption, data storage, and network utilization. While this ties spending to actual use and eliminates infrastructure management overhead, expenses can accumulate rapidly for high-utilization applications or those processing large volumes of data continuously.

Edge computing operational costs are typically more predictable but require ongoing investments in maintenance, updates, and technical support for distributed hardware. Organizations must budget for device replacement cycles, software licensing, and specialized personnel to manage edge infrastructure. However, edge deployments can provide better cost control for applications with consistent, high-volume processing requirements.

Total Cost of Ownership Analysis

Long-term total cost of ownership calculations must consider both direct infrastructure costs and indirect factors such as productivity improvements, risk mitigation, and competitive advantages enabled by different deployment models. Edge AI implementations often provide significant value through reduced latency, improved reliability, and enhanced data privacy that can translate into business advantages.

Cloud computing’s total cost of ownership benefits from economies of scale, reduced management overhead, and access to continuously improving AI services without additional investment. The ability to leverage cloud providers’ research and development investments in AI technology can provide significant value over time.

Return on Investment Factors

ROI analysis for AI applications must consider the specific value propositions of edge versus cloud deployments. Real-time processing capabilities of edge computing can enable new business models, improve customer experiences, and create competitive differentiation that generates substantial returns. Applications requiring immediate responses often justify higher infrastructure costs through improved operational efficiency or revenue generation.

Cloud computing ROI often comes from accelerated development cycles, reduced technical complexity, and the ability to scale AI capabilities rapidly as business needs evolve. The flexibility to experiment with different AI technologies without significant upfront investment can accelerate innovation and market responsiveness.

Hybrid Approaches and Future Trends

The evolution of AI applications is driving convergence between edge computing and cloud computing through hybrid architectures that leverage the strengths of both approaches. These integrated solutions enable organizations to optimize their AI deployments based on specific requirements while maintaining flexibility for future technology evolution.

Edge-Cloud Integration Strategies

Modern hybrid architectures implement intelligent workload distribution where different types of processing occur at the most appropriate location. Real-time inference and immediate decision-making happen at the edge, while machine learning model training, complex analytics, and long-term data storage utilize cloud resources. This approach optimizes both performance and cost by matching computational requirements with infrastructure capabilities.
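The placement logic described above can be sketched as a small rule-based router. This is an illustration under stated assumptions: the `Workload` fields, the 50 ms default round-trip figure, and the rule order are hypothetical, and a production scheduler would weigh cost and capacity as well.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    max_latency_ms: float       # response-time budget for this workload
    data_must_stay_local: bool  # e.g. regulated or privacy-sensitive data


def place(workload: Workload, cloud_rtt_ms: float = 50.0) -> str:
    """Pick 'edge' or 'cloud' for a workload using two simple rules."""
    if workload.data_must_stay_local:
        return "edge"
    if workload.max_latency_ms < cloud_rtt_ms:
        # The network round trip alone would exceed the latency budget.
        return "edge"
    return "cloud"
```

Under these rules, a defect-detection model with a 10 ms budget lands on the edge, while a nightly retraining job with no tight deadline goes to the cloud.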

Federated learning represents an advanced hybrid approach where AI models are trained collaboratively across edge devices while maintaining data privacy. Edge nodes contribute to model improvement without sharing raw data, combining the privacy benefits of edge processing with the collective intelligence possible through cloud coordination.
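The coordination step in federated learning can be illustrated with weighted averaging of model parameters. The source does not specify an aggregation rule, so this sketch assumes a FedAvg-style scheme in which each edge node's parameters are weighted by its local sample count; the function name and the flat-list parameter representation are simplifications.

```python
from typing import List, Sequence, Tuple


def federated_average(
    client_updates: Sequence[Tuple[Sequence[float], int]],
) -> List[float]:
    """Combine per-client model weights into one global model.

    Each entry is (weights, num_samples). Only weights leave the device;
    the raw training data stays on the edge node.
    """
    total_samples = sum(n for _, n in client_updates)
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]
```

For instance, averaging `[1.0, 2.0]` from a node with 100 samples and `[3.0, 4.0]` from a node with 300 samples gives `[2.5, 3.5]`, pulled toward the node that saw more data.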

Emerging Technologies and Innovations

5G networks are transforming the edge-cloud paradigm by enabling ultra-low latency connections between edge devices and cloud resources. This technology makes previously impractical hybrid approaches feasible by providing the high-bandwidth, low-latency connectivity necessary for seamless integration between edge and cloud processing.

Edge AI hardware continues advancing with more powerful and energy-efficient processors specifically designed for artificial intelligence workloads. These developments are expanding the capabilities of edge devices, enabling more sophisticated AI applications to run locally while maintaining the benefits of edge processing.

Industry Standardization and Interoperability

The development of industry standards for edge computing and cloud integration is facilitating more seamless hybrid deployments. Standards organizations are working to establish common frameworks for edge-cloud communication, data formats, and security protocols that enable interoperability between different vendors and platforms.

Container technologies and orchestration platforms are simplifying the deployment and management of AI applications across hybrid environments. These technologies enable consistent application behavior whether running on edge devices or cloud infrastructure, reducing complexity and improving operational efficiency.

Predictions for Future Development

The future of AI applications will likely see increased intelligence in workload distribution, where systems automatically optimize processing location based on real-time conditions, cost considerations, and performance requirements. Machine learning algorithms will manage the edge-cloud infrastructure itself, continuously optimizing for efficiency and performance.

Artificial intelligence capabilities will become increasingly distributed, with specialized AI functions embedded throughout the technology stack from edge devices to cloud services. This distributed intelligence will enable more responsive and efficient systems that can adapt to changing conditions and requirements automatically.

Conclusion

The choice between edge computing and cloud computing for AI applications represents a strategic decision that significantly impacts performance, cost, security, and scalability of artificial intelligence deployments. Edge AI excels in scenarios requiring real-time processing, enhanced privacy, and reduced bandwidth consumption, making it ideal for autonomous systems, industrial automation, and latency-sensitive applications.

Cloud AI provides superior scalability, computational power, and access to advanced AI services, making it optimal for large-scale data processing, complex machine learning model development, and applications requiring massive computational resources. The future of AI infrastructure lies in hybrid approaches that intelligently combine both paradigms, leveraging edge computing for immediate decision-making and local processing while utilizing cloud computing for centralized analytics, model training, and global coordination. Organizations should evaluate their specific requirements for latency, privacy, scalability, and cost to determine the optimal balance between edge and cloud deployments, recognizing that the most effective AI strategies often involve integrating both approaches to maximize the benefits of each computing paradigm.