The artificial intelligence revolution has fundamentally transformed how enterprises approach digital transformation, creating unprecedented demand for robust, scalable infrastructure. As organizations worldwide race to implement AI deployment strategies, they’re discovering that traditional on-premises infrastructure and pure public cloud solutions each present significant limitations. This realization has made hybrid cloud the preferred architecture for enterprise AI deployment at many organizations, because it balances control, flexibility, and scalability.

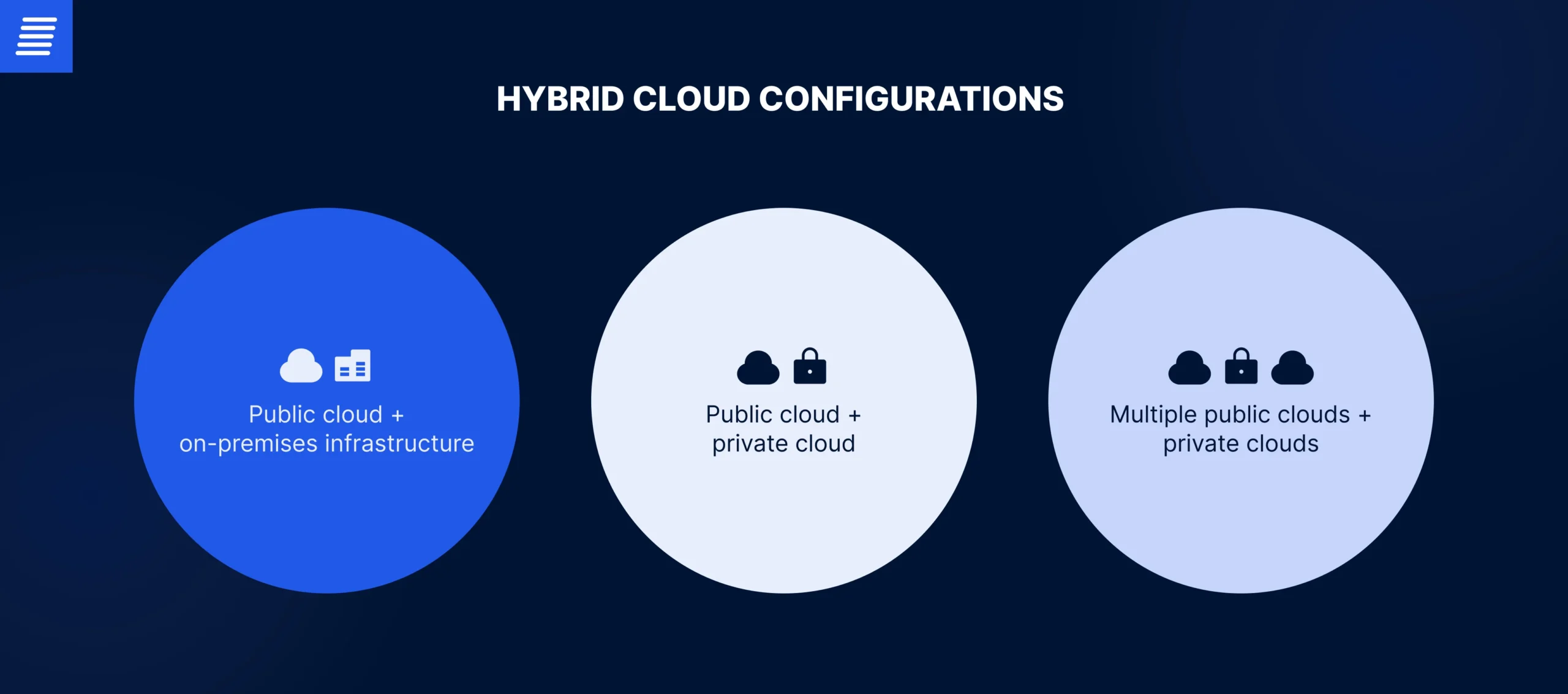

Hybrid cloud infrastructure enables organizations to seamlessly distribute AI workloads across on-premises data centers, private clouds, and public cloud platforms, creating a unified ecosystem that maximizes resource utilization while maintaining data sovereignty and regulatory compliance. The integration of artificial intelligence and machine learning models within hybrid environments presents unique opportunities for businesses to accelerate innovation, reduce operational costs, and achieve competitive advantages in their respective industries.

Recent industry surveys report that 85% of cloud buyers have either deployed or are actively implementing hybrid cloud architectures, with AI workloads a primary driver of this adoption. Organizations are recognizing that enterprise AI requires large-scale compute, high-throughput data processing, and flexible scaling, which a single environment often cannot provide efficiently.

The complexity of modern AI deployment extends beyond mere infrastructure considerations. Enterprises must navigate challenges including data integration, security compliance, model training optimization, inference latency requirements, and cost management. Hybrid cloud solutions address these multifaceted challenges by enabling organizations to strategically position workloads based on specific requirements—training computationally intensive models in high-performance on-premises environments while leveraging public cloud resources for inference scaling and global distribution.

This comprehensive guide explores how hybrid cloud solutions are revolutionizing enterprise AI deployment, examining architectural patterns, implementation strategies, security considerations, cost optimization techniques, and real-world use cases that demonstrate the transformative potential of this approach.

Hybrid Cloud Architecture for AI Workloads

Hybrid cloud architecture represents a computing environment that combines on-premises infrastructure, private cloud resources, and public cloud services through sophisticated orchestration and management layers. For enterprise AI deployment, this architecture provides the foundational flexibility required to accommodate diverse workload characteristics, data gravity considerations, and regulatory requirements that define modern artificial intelligence implementations.

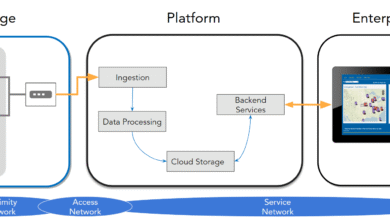

The fundamental architecture of hybrid cloud solutions for AI comprises several interconnected layers. The infrastructure layer includes on-premises GPU clusters, edge computing devices, private cloud platforms, and public cloud instances, each optimized for specific workload types. The data layer ensures seamless data movement and synchronization across environments while maintaining security and compliance. The orchestration layer manages workload placement, resource allocation, and automated scaling based on predefined policies and real-time performance metrics.

AI workloads exhibit distinct characteristics that make hybrid cloud infrastructure particularly advantageous. Model training demands massive computational power and high-bandwidth access to large datasets, making it a strong candidate for on-premises or private cloud environments equipped with specialized hardware such as NVIDIA GPUs; Google TPUs, which are offered as a Google Cloud service, fill the same role on the public cloud side. Model inference, by contrast, must scale rapidly with variable request volumes, which suits public cloud platforms with globally distributed regions and auto-scaling capabilities.

Data locality and sovereignty considerations significantly influence hybrid cloud architecture design for AI applications. Organizations operating in regulated industries must ensure sensitive data remains within specific geographical boundaries or controlled environments. Hybrid cloud solutions enable these organizations to perform data preprocessing and feature engineering in compliant environments while leveraging public cloud resources for less sensitive computational tasks, maintaining regulatory compliance without sacrificing innovation velocity.
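The placement reasoning in the last two paragraphs can be illustrated as a small rule-based policy. This is a minimal sketch, not a production scheduler: the `Environment` and `Workload` types, the environment names, and the tie-breaking rules are all hypothetical, and real orchestrators weigh many more signals. Residency and compliance are treated as hard constraints and checked first; the workload type only decides among the environments that remain.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Environment:
    name: str
    region: str             # where data placed here physically resides
    compliant: bool         # certified for regulated or personal data
    elastic: bool           # can scale out on demand (typical of public cloud)
    has_accelerators: bool  # dedicated GPUs/TPUs available for training


@dataclass(frozen=True)
class Workload:
    kind: str                   # "training" or "inference"
    sensitive: bool             # touches regulated or personal data
    allowed_regions: frozenset  # residency constraint for the data involved


def eligible(w: Workload, env: Environment) -> bool:
    """Hard constraints: residency and compliance are never traded off."""
    if env.region not in w.allowed_regions:
        return False
    return env.compliant or not w.sensitive


def place(w: Workload, envs: list) -> Optional[Environment]:
    candidates = [e for e in envs if eligible(w, e)]
    if not candidates:
        return None  # no legal placement: escalate instead of violating policy
    if w.kind == "training":
        # Prefer accelerator-equipped, fixed (owned) capacity for long training runs.
        return min(candidates, key=lambda e: (not e.has_accelerators, e.elastic))
    # Prefer elastic capacity for bursty inference traffic.
    return min(candidates, key=lambda e: not e.elastic)


# Hypothetical example environments.
ENVS = [
    Environment("onprem-gpu", "eu-central", compliant=True, elastic=False, has_accelerators=True),
    Environment("private-cloud", "eu-central", compliant=True, elastic=True, has_accelerators=False),
    Environment("public-eu", "eu-west", compliant=False, elastic=True, has_accelerators=True),
]
```

With these example environments, sensitive training data pinned to `eu-central` lands on the on-premises GPU cluster, while non-sensitive inference allowed in `eu-west` goes to the elastic public region.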

The network layer in a hybrid cloud infrastructure plays a critical role in AI deployment success. High-throughput, low-latency connections between on-premises and cloud environments enable seamless data transfer for model training updates, real-time inference requests, and monitoring data collection. Modern hybrid cloud solutions incorporate software-defined networking, dedicated interconnects, and edge caching to minimize latency and optimize bandwidth utilization.
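Bandwidth is easy to underestimate, so a quick calculation helps when sizing interconnects. The sketch below assumes decimal units (1 TB = 8e12 bits) and an assumed effective throughput of 80% of the nominal link speed; the function name and the efficiency factor are illustrative, not measured values.

```python
def transfer_hours(dataset_tb: float, link_gbps: float, efficiency: float = 0.8) -> float:
    """Hours to move dataset_tb terabytes over a link of link_gbps gigabits/second.

    Assumes decimal units (1 TB = 8e12 bits) and that only `efficiency` of the
    nominal bandwidth is achieved after protocol overhead and contention.
    """
    bits = dataset_tb * 8e12
    bits_per_second = link_gbps * 1e9 * efficiency
    return bits / bits_per_second / 3600


for gbps in (1, 10, 100):
    print(f"100 TB over {gbps:>3} Gbps: {transfer_hours(100, gbps):7.1f} h")
```

Under these assumptions, moving 100 TB takes about 28 hours over a 10 Gbps link but under 3 hours over a 100 Gbps interconnect, which is one reason large training datasets are usually processed where they already live.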

Key Benefits of Hybrid Cloud for Enterprise AI Deployment

Hybrid cloud solutions deliver significant benefits for organizations pursuing ambitious AI deployment strategies, addressing challenges that have historically limited artificial intelligence adoption at enterprise scale. The advantages span cost, performance, security and compliance, and operational flexibility.

Cost optimization is one of the most compelling benefits of hybrid cloud infrastructure for AI workloads. Organizations can place expensive GPU capacity on-premises for continuous, predictable workloads such as ongoing model training, while using spot instances and serverless computing in public clouds for burst capacity and development environments. In favorable cases, this approach can reduce infrastructure costs by 30-60% compared to pure public cloud or fully on-premises solutions, because organizations avoid over-provisioning fixed resources while retaining access to cloud-scale capacity when needed.
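A simple break-even calculation shows why steady workloads favor owned hardware. In this sketch, an owned GPU costs the same whether busy or idle (capital cost amortized over an assumed lifetime, plus yearly operating cost), while a cloud GPU is billed only for the hours it is used. All prices are hypothetical placeholders rather than vendor quotes, and the model ignores staffing, data transfer, and hardware refresh risk.

```python
HOURS_PER_YEAR = 8760


def break_even_utilization(capex, lifetime_years, annual_opex, cloud_rate_per_hour):
    """Fraction of the year a GPU must be busy for owning it to beat renting it."""
    annual_owned_cost = capex / lifetime_years + annual_opex
    annual_cloud_cost_at_full_use = cloud_rate_per_hour * HOURS_PER_YEAR
    return annual_owned_cost / annual_cloud_cost_at_full_use


def cheaper_option(expected_utilization, **costs):
    """Pick the cheaper home for a GPU that is expected to be busy this fraction of the time."""
    threshold = break_even_utilization(**costs)
    return "on-premises" if expected_utilization > threshold else "cloud"
```

With a hypothetical $30,000 GPU amortized over 4 years, $3,000 per year in power and support, and a $3.00 per hour cloud rate, the break-even point is about 40% utilization: a training cluster that is busy most of the day belongs on-premises, while a GPU used a few hours a week is cheaper to rent.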

Performance optimization through hybrid cloud architecture lets organizations position workloads close to data sources and end users, reducing latency and improving user experience. Enterprise AI solutions that require real-time decisions, such as fraud detection, autonomous systems, or recommendation engines, benefit from edge computing components integrated into hybrid architectures. These edge nodes run local inference with pre-trained models, delivering sub-100-millisecond response times that are difficult to guarantee when every request must make a round trip to a distant cloud region.

Security and compliance advantages of hybrid cloud solutions address critical concerns that prevent many organizations from fully embracing public cloud platforms for sensitive AI workloads. By maintaining control over data processing environments, enterprises can implement defense-in-depth security strategies, ensuring proprietary algorithms and sensitive training data never leave controlled infrastructure. This capability proves essential for organizations in healthcare, financial services, defense, and other highly regulated sectors where data privacy and intellectual property protection are paramount.

Flexibility and vendor independence provided by hybrid cloud infrastructure prevent lock-in to single cloud providers while enabling organizations to leverage best-of-breed services across multiple platforms. An enterprise might use AWS for its comprehensive machine learning services, Google Cloud for its specialized AI accelerators, and Azure for its enterprise application integration, all orchestrated through a unified hybrid cloud management platform. This multi-cloud flexibility within a hybrid framework maximizes innovation potential while maintaining strategic control.

Business continuity and disaster recovery capabilities inherent in hybrid cloud architecture significantly enhance resilience for critical AI deployment scenarios. Organizations can implement active-active configurations where AI models run simultaneously across on-premises and cloud environments, ensuring continuous availability even during infrastructure failures. Automated failover mechanisms redirect inference requests to healthy environments within seconds, maintaining service availability crucial for revenue-generating AI applications.
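The failover behavior described above can be sketched as a router that spreads requests across all healthy environments (active-active) and removes an environment from rotation after repeated failed health checks. The `Backend` and `FailoverRouter` names, the round-robin policy, and the failure threshold are illustrative assumptions; in practice this logic usually lives in a load balancer or service mesh rather than in application code.

```python
from dataclasses import dataclass


@dataclass
class Backend:
    name: str                 # e.g. an on-premises cluster or a cloud region
    healthy: bool = True
    consecutive_failures: int = 0


class FailoverRouter:
    """Active-active routing: round-robin over healthy backends only.

    A backend leaves the rotation after `failure_threshold` consecutive failed
    health checks and rejoins after its next successful check.
    """

    def __init__(self, backends, failure_threshold=3):
        self.backends = {b.name: b for b in backends}
        self.failure_threshold = failure_threshold
        self._counter = 0

    def record_check(self, name, ok):
        backend = self.backends[name]
        if ok:
            backend.consecutive_failures = 0
            backend.healthy = True
        else:
            backend.consecutive_failures += 1
            if backend.consecutive_failures >= self.failure_threshold:
                backend.healthy = False

    def route(self):
        healthy = [b for b in self.backends.values() if b.healthy]
        if not healthy:
            raise RuntimeError("no healthy backend available")
        choice = healthy[self._counter % len(healthy)]
        self._counter += 1
        return choice.name
```

Requiring several consecutive failures before failing over avoids flapping on a single dropped health check, at the cost of a few seconds of detection delay.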

Essential Components of Hybrid Cloud AI Infrastructure

Building an effective hybrid cloud infrastructure for enterprise AI deployment requires carefully orchestrated integration of specialized hardware, software platforms, networking components, and management tools. These essential components enable organizations to design architectures that optimize performance, cost, and operational efficiency.

GPU computing resources form the computational foundation for AI workloads in hybrid environments. Modern enterprise AI solutions leverage NVIDIA A100, H100, or AMD MI300 accelerators for training deep learning models, requiring careful capacity planning to balance on-premises investments against cloud-based GPU access. Organizations typically maintain baseline GPU capacity on-premises for consistent workloads while establishing cloud burst capacity for peak demands and experimental projects. The hybrid approach maximizes utilization of capital expenditure investments while maintaining access to the latest-generation accelerators through cloud providers.
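One common way to split capacity between owned and rented GPUs is to size the on-premises baseline at a chosen percentile of historical demand and send everything above it to the cloud. The sketch below uses the nearest-rank percentile; the demand samples, the 75th-percentile choice, and the function names are hypothetical, and a real plan would also account for growth and hardware lead times.

```python
import math


def nearest_rank_percentile(values, p):
    """Nearest-rank percentile: the smallest sample covering p% of observations."""
    if not values:
        raise ValueError("need at least one sample")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[rank - 1]


def plan_capacity(hourly_gpu_demand, percentile=75):
    """Size owned GPUs at a demand percentile; everything above it bursts to cloud."""
    baseline = nearest_rank_percentile(hourly_gpu_demand, percentile)
    burst_gpu_hours = sum(max(0, d - baseline) for d in hourly_gpu_demand)
    served_on_prem = sum(min(d, baseline) for d in hourly_gpu_demand)
    utilization = served_on_prem / (baseline * len(hourly_gpu_demand))
    return {
        "baseline_gpus": baseline,
        "burst_gpu_hours": burst_gpu_hours,
        "on_prem_utilization": utilization,
    }
```

For a hypothetical demand trace of `[8, 10, 12, 9, 30, 11, 10, 40]` GPUs per hour, this plans 12 owned GPUs running at 87.5% utilization, with 46 GPU-hours of peak demand sent to the cloud.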

Container orchestration platforms like Kubernetes have become essential for deploying and managing AI workloads across hybrid environments. Kubernetes enables consistent application deployment, scaling, and management regardless of underlying infrastructure, abstracting complexity from data science teams. Enterprise platforms such as Red Hat OpenShift or SUSE Rancher add features suited to hybrid deployments, including multi-cluster management, security policy enforcement, and integrated monitoring. Packaging models as container images helps ensure that a model developed on a local workstation runs the same way on an on-premises cluster or in a public cloud region.
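For illustration, the following sketch builds a Kubernetes Deployment manifest for a GPU-backed inference service as a plain Python dictionary. The `nvidia.com/gpu` resource name assumes the NVIDIA device plugin is installed on the cluster; the image name, port, and the `pool` node label used to pin pods to a particular node pool are hypothetical. Because `kubectl apply` accepts JSON as well as YAML, the same generated manifest could be applied to an on-premises cluster or a cloud-managed one.

```python
import json


def inference_deployment(name, image, replicas=2, gpus=1, node_pool=None):
    """Return a Deployment manifest (as a dict) for a GPU-backed inference server."""
    container = {
        "name": name,
        "image": image,
        "ports": [{"containerPort": 8080}],  # hypothetical serving port
        # GPUs are requested through limits; requires the NVIDIA device plugin.
        "resources": {"limits": {"nvidia.com/gpu": gpus}},
    }
    pod_spec = {"containers": [container]}
    if node_pool:
        # Hypothetical node label used to target a specific cluster node pool.
        pod_spec["nodeSelector"] = {"pool": node_pool}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": pod_spec,
            },
        },
    }


if __name__ == "__main__":
    manifest = inference_deployment("scorer", "registry.example.com/scorer:1.0", node_pool="onprem-gpu")
    print(json.dumps(manifest, indent=2))
```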

Data management and storage systems must accommodate the volume, velocity, and variety of data that AI initiatives generate. Hybrid data lakes combining on-premises object storage with cloud-native services such as Amazon S3 or Azure Blob Storage enable unified data access patterns while reducing storage costs through intelligent tiering. High-performance parallel file systems such as Lustre or IBM Storage Scale (formerly GPFS) accelerate model training by delivering the sustained throughput needed to keep expensive GPUs fully utilized, preventing I/O bottlenecks that waste computational capacity.
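The tiering idea can be reduced to a rule that maps time since last access to a storage class. This is a minimal sketch: the tier names and the 30- and 180-day thresholds are assumptions chosen for illustration, and managed services (for example, Amazon S3 lifecycle rules or Azure Blob access tiers) implement similar policies natively with their own parameters.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical policy: (minimum days since last access, tier), in ascending order.
TIER_POLICY = [(0, "hot"), (30, "warm"), (180, "archive")]


def choose_tier(last_access: datetime, now: Optional[datetime] = None) -> str:
    """Return the coldest tier whose idle-time threshold the object has passed."""
    if now is None:
        now = datetime.now(timezone.utc)
    idle_days = (now - last_access).days
    tier = TIER_POLICY[0][1]
    for min_days, name in TIER_POLICY:
        if idle_days >= min_days:
            tier = name
    return tier


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
print(choose_tier(now - timedelta(days=45), now))  # warm
```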

MLOps platforms bridge the gap between data science experimentation and production AI deployment in hybrid environments. Tools like MLflow, Kubeflow, or vendor-specific solutions from Databricks and Domino Data Lab provide experiment tracking, model versioning, automated retraining pipelines, and deployment automation. These platforms enable data scientists to develop models locally or in cloud notebooks while operations teams deploy them across hybrid infrastructure with appropriate governance, monitoring, and compliance controls.
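To make the governance step concrete, here is a toy in-memory model registry with a promotion gate. It is a hypothetical sketch, not the API of MLflow, Kubeflow, or any other tool named above: `Registry`, `promote`, and the accuracy threshold are invented for illustration. It shows the core idea that a new version reaches production only if it clears a metric check, and that the previous production version is archived rather than deleted so it can be restored.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelVersion:
    name: str
    version: int
    metrics: dict
    stage: str = "staging"   # staging -> production -> archived


@dataclass
class Registry:
    """Hypothetical in-memory registry; not the API of any real MLOps tool."""
    versions: dict = field(default_factory=dict)  # (name, version) -> ModelVersion

    def register(self, name: str, metrics: dict) -> ModelVersion:
        version = 1 + sum(1 for (n, _) in self.versions if n == name)
        mv = ModelVersion(name, version, dict(metrics))
        self.versions[(name, version)] = mv
        return mv

    def promote(self, name: str, version: int, min_accuracy: float) -> bool:
        """Promote only if the candidate clears the accuracy gate."""
        candidate = self.versions[(name, version)]
        if candidate.metrics.get("accuracy", 0.0) < min_accuracy:
            return False
        for (n, _), mv in self.versions.items():
            if n == name and mv.stage == "production":
                mv.stage = "archived"   # keep for rollback instead of deleting
        candidate.stage = "production"
        return True

    def production(self, name: str) -> Optional[ModelVersion]:
        for (n, _), mv in self.versions.items():
            if n == name and mv.stage == "production":
                return mv
        return None
```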

Network infrastructure components ensure seamless connectivity between distributed elements of a hybrid cloud architecture. Dedicated interconnects like AWS Direct Connect, Azure ExpressRoute, or Google Cloud Interconnect provide high-bandwidth, low-latency connections between on-premises data centers and cloud providers. Software-defined WAN solutions optimize traffic routing, prioritizing latency-sensitive inference requests while using more cost-effective paths for bulk data transfers. Edge computing nodes integrated through 5G networks extend hybrid architectures to remote locations, enabling real-time AI deployment for IoT and mobile applications.

Data Security and Governance in Hybrid AI Environments

Security and governance represent critical success factors for enterprise AI deployment in hybrid cloud environments, requiring comprehensive strategies that address data protection, regulatory compliance, intellectual property security, and ethical AI principles. Organizations must implement defense-in-depth approaches that secure AI workloads throughout their entire lifecycle.

Data encryption forms the foundation of security in hybrid cloud solutions, protecting information at rest, in transit, and increasingly during processing. Modern AI deployment architectures encrypt data end to end, relying on hardware-accelerated cryptography to minimize the performance impact. Sensitive training datasets stored in on-premises repositories remain encrypted with customer-managed keys, and encrypted channels protect data as it moves to cloud environments for preprocessing or augmentation. Confidential computing technologies such as Intel SGX enclaves and AMD SEV encrypted virtual machines keep data encrypted in memory while it is processed, helping protect both inference inputs and proprietary models from cloud provider access.

Access control and identity management in hybrid AI environments require sophisticated approaches that span multiple administrative domains. Organizations implement federated identity systems that provide single sign-on across on-premises and cloud resources while enforcing role-based access control policies consistently. Data scientists receive appropriate permissions for development environments, while production AI deployment remains restricted to approved operations personnel. Service accounts used by automated ML pipelines follow the principle of least privilege, accessing only specific resources required for their functions.
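Role-based, least-privilege access can be expressed as a deny-by-default permission check. In this sketch the role names, resources, and actions are hypothetical; in practice the mapping would come from the organization's identity provider and each platform's IAM policies rather than from a Python dictionary. Note that the automated pipeline's service account can read features and write to the model registry but cannot deploy to production.

```python
# Hypothetical role -> set of (resource, action) grants. Anything not listed is denied.
ROLE_GRANTS = {
    "data_scientist": {("dev", "read"), ("dev", "write"), ("dev", "deploy")},
    "ml_operator": {("prod", "read"), ("prod", "deploy")},
    "retrain_pipeline_sa": {("feature_store", "read"), ("model_registry", "write")},
}


def is_allowed(roles, resource: str, action: str) -> bool:
    """Deny by default; allow only an explicit grant from one of the caller's roles."""
    return any((resource, action) in ROLE_GRANTS.get(role, set()) for role in roles)
```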

Regulatory compliance considerations significantly influence hybrid cloud architecture design for AI workloads, particularly for organizations subject to GDPR, HIPAA, SOC 2 audits, or regional data residency requirements. Hybrid cloud solutions enable organizations to keep sensitive personal information within specific geographic boundaries or certified facilities while using global cloud resources for anonymized or aggregated data processing. Audit logging captures all data access and model inference requests, giving compliance teams the traceability required for regulatory reporting and breach investigation.
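One way to make audit logs tamper-evident is to chain entries with cryptographic hashes, so altering any earlier record invalidates every hash after it. The sketch below illustrates that technique using SHA-256 from the Python standard library; the `AuditLog` class and the event fields are hypothetical. A hash chain only detects tampering if the latest hash is also stored somewhere an attacker cannot rewrite, such as a separate write-once log.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


class AuditLog:
    """Append-only log where each entry's hash covers the previous entry's hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        body = json.dumps(event, sort_keys=True)  # canonical serialization
        return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = self._digest(prev, event)
        self.entries.append({"event": dict(event), "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the whole chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or entry["hash"] != self._digest(prev, entry["event"]):
                return False
            prev = entry["hash"]
        return True
```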

AI model security addresses unique threats specific to enterprise AI deployment, including adversarial attacks, model extraction, data poisoning, and intellectual property theft. Organizations implement model versioning and integrity checking to detect unauthorized modifications to production models. Input validation and anomaly detection protect inference APIs from adversarial examples designed to manipulate model behavior. Watermarking techniques embed unique identifiers in models, enabling organizations to prove ownership and detect unauthorized redistribution of proprietary machine learning models.
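Integrity checking of model artifacts often comes down to comparing a file's cryptographic digest against the value recorded when the model was approved. The sketch below assumes the expected SHA-256 digest is kept in a trusted location, such as a signed registry entry; the function names are illustrative. It reads the file in chunks so large model files do not need to fit in memory.

```python
import hashlib
import hmac


def sha256_file(path, chunk_size=1 << 20):
    """Stream a file through SHA-256 in 1 MiB chunks."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def verify_model(path, expected_sha256):
    """True only if the artifact on disk matches the approved digest."""
    actual = sha256_file(path)
    # compare_digest performs a constant-time comparison.
    return hmac.compare_digest(actual, expected_sha256.lower())
```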

Governance frameworks for AI in hybrid cloud infrastructure establish policies, procedures, and controls that ensure responsible development and deployment of artificial intelligence systems. Model documentation requirements capture training data characteristics, performance metrics, known limitations, and ethical considerations before production deployment. Human-in-the-loop approval processes review high-risk AI decisions, ensuring alignment with organizational values and regulatory requirements. Regular bias testing and fairness audits identify and remediate discriminatory patterns in AI deployment outcomes.

Cost Optimization Strategies for Hybrid AI Infrastructure

Financial optimization of hybrid cloud solutions for enterprise AI deployment requires strategies that balance capital expenditure on on-premises infrastructure against operational expenditure on cloud resources. Organizations implementing these strategies can, in some cases, achieve 40-70% cost reductions compared to unoptimized approaches while maintaining or improving performance and scalability.

Workload placement optimization represents the most impactful cost reduction strategy for hybrid cloud infrastructure. Organizations analyze AI workload characteristics—computational intensity, data transfer volumes, latency requirements, and duration—to determine optimal execution environments. Long-running model training jobs with consistent GPU utilization run most economically on owned hardware, while short-duration experiments and variable inference workloads leverage cloud resources. Advanced placement algorithms automatically schedule workloads based on real-time pricing, resource availability, and performance requirements, continuously optimizing the cost-performance tradeoff.
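Automated placement can be framed as picking the lowest-cost option that satisfies a job's hard constraints. The sketch below is a simplified, hypothetical version: the `Option` fields, environment names, and prices are placeholders, and it ignores factors a real scheduler would model, such as spot-instance interruption risk and queue wait times.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Option:
    env: str
    gpu_hour_cost: float   # effective cost per GPU-hour (hypothetical)
    free_gpus: int         # GPUs currently available in this environment
    transfer_cost: float   # one-off cost to move the job's data there
    latency_ms: float      # latency to the job's data or users


def cheapest_option(options, gpus: int, hours: float, max_latency_ms: float) -> Optional[Option]:
    """Filter by hard constraints, then minimize total expected cost."""
    feasible = [o for o in options
                if o.free_gpus >= gpus and o.latency_ms <= max_latency_ms]
    if not feasible:
        return None
    return min(feasible, key=lambda o: o.gpu_hour_cost * gpus * hours + o.transfer_cost)


# Placeholder numbers for illustration only.
OPTIONS = [
    Option("on-prem", gpu_hour_cost=1.2, free_gpus=4, transfer_cost=0.0, latency_ms=5),
    Option("cloud-spot", gpu_hour_cost=0.9, free_gpus=64, transfer_cost=150.0, latency_ms=30),
    Option("cloud-on-demand", gpu_hour_cost=3.0, free_gpus=64, transfer_cost=150.0, latency_ms=30),
]
```

With these numbers, an 8-GPU, 10-hour job goes to spot capacity because only 4 GPUs are free on-premises, while a 4-GPU, 100-hour job stays on-premises because the data transfer cost outweighs the cheaper cloud rate.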

Resource right-sizing and auto-scaling prevent over-provisioning that wastes budget on underutilized infrastructure. Enterprise AI solutions implement monitoring that tracks actual GPU utilization, memory consumption, and throughput metrics, identifying opportunities to reduce instance sizes or consolidate workloads. Auto-scaling policies expand inference services during peak demand and contract them during quiet hours; this elasticity is far cheaper to obtain from cloud resources than from on-premises capacity provisioned for the peak. Serverless platforms such as AWS Lambda or Google Cloud Functions bill only for invocations and execution time, eliminating charges during zero-usage periods and dramatically reducing costs for sporadic, lightweight inference workloads.
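The scaling decision behind the Kubernetes Horizontal Pod Autoscaler follows a documented formula: desired replicas = ceil(current replicas * current metric / target metric), with no change when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that core calculation with min/max bounds; it omits the real controller's stabilization windows and scaling-rate policies, and the GPU-utilization numbers in the example are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=20, tolerance=0.1):
    """Core HPA-style calculation, clamped to [min_replicas, max_replicas]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid churn
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% GPU utilization against a 60% target -> scale out to 6.
print(desired_replicas(4, 90, 60))
```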

Data transfer cost management addresses significant expenses often overlooked in hybrid cloud architecture planning. Ingress data transfer to cloud providers is typically free, but egress charges accumulate rapidly when retrieving training data or inference results. Organizations minimize these costs by implementing intelligent caching at the edge, preprocessing data locally before cloud transfer, and designing workflows that keep iterative processing within a single environment. Cross-region replication uses compression and delta updates to minimize bandwidth consumption, while dedicated network connections typically offer lower per-gigabyte transfer rates and more predictable pricing than transfer over the public internet.
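Egress charges are usually tiered by monthly volume, so a small calculator makes the effect of local preprocessing visible. The tier sizes and per-gigabyte prices below are illustrative placeholders, not any provider's current rates.

```python
from typing import Optional, Sequence, Tuple

# Illustrative tiers: (GB in this tier, or None for "everything else"; price per GB).
EXAMPLE_TIERS = [(100, 0.0), (10_240, 0.09), (None, 0.085)]


def monthly_egress_cost(gb: float, tiers: Sequence[Tuple[Optional[float], float]]) -> float:
    """Bill `gb` of monthly egress across volume tiers, applied in order."""
    remaining = gb
    cost = 0.0
    for size, price_per_gb in tiers:
        if remaining <= 0:
            break
        billed = remaining if size is None else min(remaining, size)
        cost += billed * price_per_gb
        remaining -= billed
    if remaining > 0:
        raise ValueError("tiers do not cover the full volume")
    return cost
```

Under these assumed prices, 5,000 GB of monthly egress costs about $441, while shrinking the transfer to 1,000 GB by preprocessing on-premises brings it to about $81.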

Reserved capacity and commitment discounts dramatically reduce costs for predictable AI deployment workloads. Cloud providers offer discounts, commonly in the range of 40-70%, for one- or three-year commitments to specific instance types or usage volumes. Organizations analyze historical usage patterns to identify baseline capacity requirements suitable for reserved instances, supplementing with on-demand resources for peak demands. Hybrid strategies combine on-premises capacity for guaranteed baseline loads with reserved cloud instances for predictable growth and spot instances for cost-effective burst capacity.
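Commitment sizing can be checked by computing the blended cost of committed plus on-demand capacity over a month of hourly usage. In this sketch, committed instances are billed every hour at a discounted rate whether used or not, and usage above the commitment is billed on demand; the rates, the 40% discount, and the usage profile are hypothetical.

```python
def blended_monthly_cost(hourly_usage, on_demand_rate, committed_instances, discount):
    """hourly_usage: number of instances in use for each hour of the month."""
    committed_rate = on_demand_rate * (1 - discount)
    # Committed capacity is paid for every hour, used or not.
    committed_cost = committed_instances * committed_rate * len(hourly_usage)
    # Anything above the commitment is billed at the on-demand rate.
    overflow_hours = sum(max(0, u - committed_instances) for u in hourly_usage)
    return committed_cost + overflow_hours * on_demand_rate


def best_commitment(hourly_usage, on_demand_rate, discount):
    """Brute-force the commitment level with the lowest blended cost."""
    return min(range(max(hourly_usage) + 1),
               key=lambda c: blended_monthly_cost(hourly_usage, on_demand_rate, c, discount))


# Hypothetical month: 10 instances for 500 hours, 20 instances for 220 hours.
usage = [10] * 500 + [20] * 220
print(best_commitment(usage, on_demand_rate=2.0, discount=0.4))
```

For this profile, committing to the 10-instance baseline is cheapest. That matches a simple rule of thumb: an additional committed instance pays off only if it would be busy more than (1 - discount) of the hours, here 60%, and the instances needed only at peak are busy about 31% of the time.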

Storage optimization strategies address the massive data volumes required for enterprise AI solutions. Intelligent tiering automatically moves infrequently accessed training datasets to lower-cost storage tiers while keeping active data on high-performance systems. Data lifecycle policies delete temporary artifacts from failed experiments, preventing obsolete files from accumulating on expensive storage. Deduplication and compression can reduce storage footprints by 50-80% for datasets with significant redundancy, such as text corpora, logs, and versioned snapshots, though already-compressed images and video gain little; modern systems keep access transparent to training pipelines.
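Deduplication can be illustrated with a content-addressed store that splits files into chunks, names each chunk by its SHA-256 digest, and keeps identical chunks only once. This sketch uses fixed-size chunks for simplicity (production systems often use content-defined chunking so that an insertion does not shift every later chunk boundary); the class name and chunk size are illustrative.

```python
import hashlib


class DedupStore:
    """Content-addressed store: identical chunks are stored once."""

    def __init__(self, chunk_size=4096):
        self.chunk_size = chunk_size
        self.chunks = {}   # sha256 hex digest -> chunk bytes
        self.files = {}    # file name -> ordered list of chunk digests

    def put(self, name: str, data: bytes) -> None:
        refs = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # store only if unseen
            refs.append(digest)
        self.files[name] = refs

    def get(self, name: str) -> bytes:
        return b"".join(self.chunks[d] for d in self.files[name])

    def stored_bytes(self) -> int:
        return sum(len(c) for c in self.chunks.values())
```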

Implementation Best Practices and Migration Strategies

Successfully implementing hybrid cloud solutions for enterprise AI deployment requires methodical approaches that minimize risk, ensure business continuity, and accelerate time-to-value. Organizations following proven best practices navigate complexity more effectively while avoiding common pitfalls that derail AI initiatives.

Phased migration approaches prove most effective for transitioning existing AI deployment to hybrid cloud infrastructure. Organizations typically begin with non-critical experimental workloads, developing operational expertise and validating architecture decisions before migrating production systems. This crawl-walk-run methodology identifies integration challenges early, allowing iterative refinement of processes, tools, and governance frameworks. Each phase establishes measurable success criteria—performance benchmarks, cost targets, security validations—ensuring the migration delivers expected benefits before expanding scope.

Infrastructure as Code (IaC) practices enable consistent, repeatable deployment of hybrid cloud architecture components across on-premises and cloud environments. Tools like Terraform, Pulumi, or AWS CloudFormation define infrastructure declaratively, with version control systems tracking changes and enabling rollback when issues arise. IaC eliminates configuration drift between environments, ensuring development, staging, and production systems maintain identical configurations. Automated testing validates infrastructure changes before deployment, preventing outages from misconfiguration.
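Configuration drift detection boils down to comparing the declared configuration with what is actually running. Tools like Terraform perform this comparison against real infrastructure (for example, during `terraform plan`); the sketch below only illustrates the comparison itself on nested dictionaries, and the configuration keys shown are hypothetical.

```python
def detect_drift(desired: dict, actual: dict, prefix: str = "") -> list:
    """Return human-readable differences between declared and observed config."""
    diffs = []
    for key in sorted(set(desired) | set(actual)):
        path = f"{prefix}{key}"
        if key not in actual:
            diffs.append(f"missing: {path}")
        elif key not in desired:
            diffs.append(f"unexpected: {path}")
        elif isinstance(desired[key], dict) and isinstance(actual[key], dict):
            # Recurse into nested sections, extending the dotted path.
            diffs.extend(detect_drift(desired[key], actual[key], prefix=path + "."))
        elif desired[key] != actual[key]:
            diffs.append(f"changed: {path} ({desired[key]!r} -> {actual[key]!r})")
    return diffs
```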

Observability and monitoring systems provide critical visibility into distributed enterprise AI solutions spanning hybrid environments. Unified monitoring platforms aggregate metrics, logs, and traces from all infrastructure components, enabling operations teams to identify performance bottlenecks, predict capacity constraints, and troubleshoot failures. AI-specific monitoring tracks model accuracy degradation, prediction latency, and training job progress, alerting data science teams when retraining becomes necessary. Cost monitoring dashboards provide real-time visibility into cloud spending, enabling rapid response to budget overruns.
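Accuracy degradation alerts typically compare a rolling metric on recently labeled predictions against the accuracy measured at deployment. In the sketch below, the window size, the tolerated five-point drop, and the class name are assumptions; it also assumes ground-truth labels eventually arrive, which for many applications happens only after a delay.

```python
from collections import deque


class AccuracyMonitor:
    """Rolling accuracy over the last `window` labeled predictions."""

    def __init__(self, baseline_accuracy, window=500, max_drop=0.05):
        self.baseline = baseline_accuracy
        self.max_drop = max_drop
        self.outcomes = deque(maxlen=window)  # True = correct prediction

    def record(self, prediction, label):
        self.outcomes.append(prediction == label)

    def rolling_accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        # Only alert on a full window to avoid noise from small samples.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.max_drop
```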

Skills development and organizational change management often determine AI deployment success more than technical considerations. Organizations invest in training programs that develop cloud-native skills among infrastructure teams while educating data scientists about hybrid architecture capabilities and constraints. Cross-functional collaboration between data science, engineering, security, and operations teams ensures holistic consideration of requirements during architecture design. Champions programs identify and empower advocates who drive adoption, share knowledge, and overcome organizational resistance to change.

Vendor partnership strategies leverage expertise from cloud providers, technology vendors, and system integrators to accelerate hybrid cloud solutions implementation. Many organizations lack internal expertise in specialized areas like container orchestration, MLOps tooling, or network optimization. Strategic partnerships provide access to specialists who implement proven patterns, avoiding costly trial-and-error approaches. Vendor-neutral consulting firms offer unbiased architecture recommendations, ensuring enterprise AI deployment aligns with organizational requirements rather than specific product capabilities.

Real-World Use Cases and Success Stories

Hybrid cloud solutions are enabling transformative enterprise AI deployment across industries, delivering measurable business outcomes that demonstrate the strategic value of this architectural approach. Examining real-world implementations provides actionable insights for organizations planning their own AI initiatives.

Financial services institutions leverage hybrid cloud infrastructure to deploy fraud detection systems that analyze transaction patterns in real time while maintaining regulatory compliance. Banks process sensitive customer data in on-premises environments certified for PCI DSS compliance, training machine learning models that identify fraudulent patterns. These models deploy to edge nodes in payment processing systems, evaluating transactions in milliseconds to approve legitimate purchases while blocking suspicious activity. Cloud resources handle batch analysis of historical transactions for model retraining, scaling elastically during month-end processing peaks.

Healthcare organizations implement enterprise AI solutions for medical imaging analysis within hybrid architectures that protect patient privacy. Hospitals maintain HIPAA-compliant on-premises infrastructure where CT scans, MRIs, and X-rays are stored and initially processed to remove identifying information. De-identified images are transferred to cloud-based training environments where deep learning models learn to detect cancers, fractures, and other conditions. Validated models deploy back to hospital systems for local inference, providing radiologists with AI-assisted diagnosis without exposing patient data to public cloud environments.

Manufacturing companies deploy hybrid cloud solutions for predictive maintenance systems that minimize equipment downtime and optimize production efficiency. IoT sensors on factory equipment stream telemetry to edge computing nodes that perform real-time anomaly detection using pre-trained machine learning models. Edge nodes identify developing failures immediately, triggering maintenance interventions before catastrophic breakdowns occur. Historical sensor data transfers to cloud data lakes for comprehensive analysis, training improved models that detect previously unknown failure patterns. This hybrid approach delivers both real-time responsiveness and continuous improvement.
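The article does not specify which anomaly detection models run on the edge nodes; as a simple stand-in, the sketch below flags a sensor reading whose z-score against a rolling window of recent readings exceeds a threshold. The window length, the 3-standard-deviation threshold, and the class name are assumptions. A detector this small runs comfortably on constrained edge hardware, leaving heavier model training to the cloud.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flag readings far from the mean of the recent window."""

    def __init__(self, window=60, threshold=3.0):
        self.readings = deque(maxlen=window)
        self.threshold = threshold

    def update(self, value):
        """Return True if `value` is anomalous versus prior readings, then record it."""
        anomalous = False
        if len(self.readings) >= 2:
            mean = statistics.fmean(self.readings)
            std = statistics.stdev(self.readings)
            if std > 0 and abs(value - mean) / std > self.threshold:
                anomalous = True
        # Simplification: anomalies also enter the window and widen it.
        self.readings.append(value)
        return anomalous
```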

Retail enterprises utilize hybrid cloud architecture for personalization engines that recommend products while managing seasonal demand fluctuations. Customer interaction data—purchases, browsing history, search queries—feeds into on-premises data warehouses protected by stringent privacy controls. Machine learning pipelines in secure environments generate customer embeddings and preference models. These models deploy to cloud-based inference services that handle millions of concurrent personalization requests during holiday shopping peaks, scaling automatically to maintain sub-second response times. The hybrid approach balances privacy protection with scalability requirements.

Autonomous vehicle developers rely on enterprise AI deployment in hybrid environments to train and validate perception systems. Massive datasets collected from test vehicles—petabytes of sensor data including cameras, LiDAR, and radar—remain in on-premises high-performance computing clusters optimized for data ingestion and preprocessing. Training compute-intensive neural networks occurs on cloud GPU clusters, leveraging the latest accelerators without capital expenditure on rapidly obsolescing hardware. Validated models deploy to vehicles through edge computing architectures, enabling real-time decision-making with redundancy and failover mechanisms that ensure safety.

Future Trends in Hybrid Cloud AI Technology

The evolution of hybrid cloud solutions for enterprise AI deployment continues accelerating, driven by technological innovations, changing business requirements, and emerging use cases. Understanding these trends enables organizations to make strategic architecture decisions that remain relevant as the landscape evolves.

Edge AI integration is transforming hybrid cloud architecture by distributing intelligence closer to data sources and decision points. Next-generation edge devices incorporate specialized AI accelerators, enabling sophisticated machine learning models to run locally with minimal latency and bandwidth consumption. 5G networks provide the connectivity infrastructure that seamlessly integrates edge nodes into broader hybrid architectures, enabling real-time model updates and federated learning approaches where distributed devices collaboratively train models without centralizing sensitive data.

AI-specific silicon is changing the performance and economics of enterprise AI solutions within hybrid environments. Custom processors such as Google’s TPUs and AWS Inferentia can deliver large gains in energy efficiency and throughput for the workloads they are designed for, and neuromorphic chips are an emerging direction. Organizations designing hybrid cloud infrastructure increasingly incorporate specialized accelerators strategically, using GPUs for flexible development and experimentation while deploying purpose-built inference processors in production environments optimized for specific model architectures.

Federated learning and privacy-preserving AI techniques address fundamental challenges in AI deployment across distributed hybrid architectures. These approaches enable organizations to train models on decentralized datasets without consolidating sensitive information, maintaining privacy and regulatory compliance while leveraging diverse data sources. Techniques like differential privacy, homomorphic encryption, and secure multi-party computation allow collaborative model development between organizations or across geographical boundaries that was previously impractical due to data sovereignty concerns.
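Many federated learning systems use an aggregation step called Federated Averaging (FedAvg). In this step, the server combines client model weights into an average weighted by each client's number of training examples, and the raw training data never leaves the clients. The sketch below shows only that server-side step on flat weight lists; the function name and data layout are illustrative. A real deployment would add secure aggregation or differential privacy, because shared weights can still leak information about the underlying data.

```python
def federated_average(client_updates):
    """client_updates: list of (num_examples, weights) with weights a list of floats.

    Returns the example-weighted mean of the client weights (the FedAvg server step).
    """
    total = sum(n for n, _ in client_updates)
    if total == 0:
        raise ValueError("no training examples reported")
    dim = len(client_updates[0][1])
    averaged = [0.0] * dim
    for n, weights in client_updates:
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged
```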

Automated machine learning (AutoML) and AI-assisted development tools are democratizing enterprise AI solutions by reducing the specialized expertise required for implementation. These platforms automatically explore model architectures, hyperparameter configurations, and feature engineering approaches, accelerating development while improving performance. Integration with hybrid cloud infrastructure enables AutoML systems to leverage massive computational resources for exploration while deploying optimized models to cost-effective production environments. This democratization expands AI adoption beyond tech-forward organizations to traditional industries.

Sustainability and green AI considerations increasingly influence hybrid cloud architecture decisions as organizations prioritize environmental responsibility. Data centers powered by renewable energy, liquid cooling systems that improve energy efficiency, and carbon-aware workload scheduling that shifts computation to regions with cleaner electricity sources are becoming standard practices. Enterprise AI deployment strategies consider carbon footprint alongside traditional performance and cost metrics, with hybrid cloud solutions enabling optimization across multiple dimensions, including sustainability objectives.
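Carbon-aware scheduling can be sketched as choosing the region and start time whose forecast grid carbon intensity is lowest over the job's duration, subject to a deadline. The region names and intensity values (in gCO2/kWh) below are made up; real forecasts would come from a grid-data provider, and data residency rules would still restrict which regions are eligible.

```python
def greenest_window(forecast, duration_hours, deadline_hour):
    """forecast: list of (region, [hourly carbon intensity, ...]).

    Returns (region, start_hour, average_intensity) for the contiguous window with
    the lowest average intensity that finishes by deadline_hour, or None.
    """
    best = None
    for region, intensities in forecast:
        last_start = min(deadline_hour, len(intensities)) - duration_hours
        for start in range(0, last_start + 1):
            window = intensities[start:start + duration_hours]
            avg = sum(window) / duration_hours
            if best is None or avg < best[2]:
                best = (region, start, avg)
    return best


# Hypothetical 6-hour forecasts for two regions.
FORECAST = [
    ("region-a", [400, 380, 300, 200, 210, 390]),
    ("region-b", [250, 260, 240, 230, 500, 520]),
]
```

With this forecast, a 2-hour job that may finish as late as hour 6 runs in region-a starting at hour 3; with a deadline of hour 4, region-b starting at hour 2 becomes the greener choice.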


Conclusion

Hybrid cloud solutions have emerged as a leading architecture for enterprise AI deployment, addressing complex requirements that pure on-premises or public cloud approaches struggle to satisfy on their own. By strategically combining on-premises infrastructure, private cloud resources, and public cloud services, organizations can achieve the balance of performance, cost-efficiency, security, and scalability that transformative artificial intelligence initiatives require.

The implementation of hybrid cloud architecture enables enterprises to maintain control over sensitive data and proprietary algorithms while accessing virtually unlimited computational resources for training sophisticated machine learning models and scaling inference services globally. As AI deployment continues evolving with emerging technologies like edge computing, federated learning, and specialized accelerators, hybrid approaches provide the flexibility to integrate innovations without wholesale infrastructure replacement. Organizations that embrace hybrid cloud solutions position themselves to navigate the AI revolution successfully, accelerating innovation while maintaining governance, compliance, and financial discipline essential for sustainable competitive advantage in an increasingly AI-driven business landscape.