The rise of artificial intelligence applications has transformed how we work, create, and solve problems daily. From ChatGPT to Google Gemini, millions of users interact with AI chatbots to generate content, answer questions, and automate tasks. However, behind these convenient tools lies a significant concern that deserves your attention: privacy issues with popular AI apps are becoming increasingly severe. As these platforms grow more integrated into our digital lives, the amount of personal data collected and processed by AI companies reaches unprecedented levels. Research indicates that leading AI service providers collect between 7 and 22 different types of personal information, including chat history, behavioral data, location information, and device identifiers.

This data collection extends far beyond what users typically understand or consent to, creating vulnerabilities that cybersecurity experts and regulatory bodies worldwide are now scrutinizing. The EU AI Act and emerging data privacy regulations demonstrate that governments recognize the urgent need to protect citizens from invasive AI data collection practices. Users must understand exactly what happens to their data when they interact with generative AI platforms, how it’s stored, who has access to it, and what safeguards exist to protect sensitive information. This comprehensive guide explores the most pressing privacy concerns in AI apps, examines the specific data practices of major AI platforms, and provides actionable strategies to safeguard your personal information in an increasingly AI-driven world.

The Growing Landscape of AI Apps and Data Collection

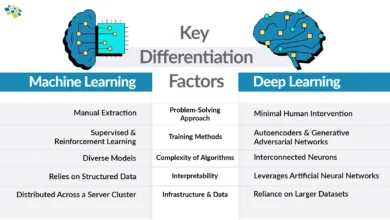

Artificial intelligence applications have experienced explosive growth since 2023, with millions downloading AI chatbot apps monthly. The free and accessible nature of platforms like ChatGPT, Google Gemini, and Microsoft Copilot has made AI technology mainstream. However, this accessibility comes at a cost: user data. Every interaction with these AI platforms generates valuable information that companies collect, analyze, and often use to improve their models. The business model underlying most popular AI apps relies on converting user interactions into training data and insights.

When you enter a prompt into an AI chatbot, your question and its context become data points, and depending on the platform, usage signals such as typing patterns or the time spent reviewing responses can be collected as well. This shapes how technology companies monetize their services: rather than charging users directly, many AI service providers extract value from personal information. The explosive expansion of generative AI services has created a landscape where privacy protection lags significantly behind innovation. Many AI app developers prioritize feature development and market competition over transparent data privacy practices. This imbalance puts users at a disadvantage, particularly those unaware of how much data their daily AI interactions generate.

Major AI Platforms and Their Privacy Practices

ChatGPT: OpenAI’s Comprehensive Data Collection Approach

ChatGPT, developed by OpenAI, is the market leader in conversational AI applications. The platform offers both free and premium tiers, making it accessible to hundreds of millions of people globally. However, ChatGPT’s privacy policy reveals extensive data collection practices. The application collects approximately 10 different types of personal information, including everything users type, uploaded files, feedback, and detailed behavioral data. By default, OpenAI may use conversations from consumer accounts, on both free and paid individual plans, to train and improve its models; business tiers such as ChatGPT Team and Enterprise are excluded from training by default. This means your proprietary business information, creative work, or sensitive discussions could end up in training datasets for models that anyone, including competitors, can use.

OpenAI also collects location data, network activity, contact information, and device identifiers, and may share this information with third-party vendors and service providers. Users can opt out of model training through ChatGPT’s Data Controls and delete past chats, but deletion is not immediate: OpenAI says deleted conversations are removed from its systems within 30 days, and security or legal obligations can extend retention beyond that. ChatGPT’s privacy documentation also relies heavily on qualifiers such as “may,” which leaves its actual data practices ambiguous. For organizations handling sensitive information, ChatGPT’s default data collection poses significant compliance risks under regulations like GDPR and CCPA.

Google Gemini: The Data Collection Leader

Google Gemini, Google’s advanced AI chatbot, takes data collection to unprecedented levels among mainstream AI applications. Research by Surfshark and other cybersecurity firms found that Gemini collects 22 different types of personal data, the most of any chatbot in that analysis. This includes chat history, search history, location data, device information, usage patterns, and behavioral analytics, along with connections to other Google services such as Gmail, YouTube, and Chrome. Because Google’s services are integrated, AI conversations can connect to your broader digital footprint: depending on your settings and connected apps, Gemini can draw on your Google account data, including search history, location history, and activity from related services.

This interconnected approach allows Google to build comprehensive user profiles that extend far beyond individual AI interactions. Gemini’s privacy policy indicates that human reviewers read and annotate conversations to improve AI models. Google explicitly warns users: “Don’t enter confidential information in conversations or data you wouldn’t want a reviewer to see.” This acknowledgment that human eyes review your private conversations raises serious concerns about sensitive information exposure. Google retains Gemini conversations for up to 18 months by default, though users can adjust this to 3 or 36 months. The platform shares data with third parties in some cases and integrates AI capabilities directly into Android Messages, Gmail, and the Google Assistant, expanding the scope of potentially monitored communications.

DeepSeek: Chinese AI with Critical Security Concerns

DeepSeek, the Chinese AI application that rapidly became the third most-downloaded free app globally, presents distinct privacy concerns. Security researchers at NowSecure identified several alarming flaws in DeepSeek’s mobile app. The app uses hardcoded encryption keys, a critical security flaw that undermines data protection. Most troubling, it transmits some user and device data without encryption to servers in China, a failure of basic data security standards. DeepSeek’s privacy policy also states that user data is stored on servers in the People’s Republic of China, raising regulatory flags under GDPR and other international privacy regulations.

The geopolitical dimension adds complexity, as Chinese companies typically must comply with government data requests. While DeepSeek’s performance capabilities impressed users, the security and privacy risks should concern anyone with sensitive information. The platform collects 11 types of data, placing it in the middle range compared to competitors, but the encryption vulnerabilities and overseas data retention significantly amplify privacy exposure.

Microsoft Copilot, Meta AI, and Others

Microsoft Copilot collects 13 types of user data and integrates with Microsoft’s broader ecosystem. Unlike ChatGPT’s consumer default, Microsoft states that prompts in Microsoft 365 Copilot are not used to train its foundation models. However, Copilot still collects extensive usage and behavioral data. Meta AI has ranked at or near the bottom of some independent privacy assessments, and Meta’s broader data practices across its social platforms raise further questions. Smaller platforms like Poe, Jasper, and Perplexity take varying approaches to data collection: some share user information with third parties for targeted advertising, while others maintain more restrictive practices. These variations mean users must evaluate each platform individually rather than assuming uniform privacy standards across AI applications.

Specific Privacy Risks and Data Vulnerabilities

Data Breaches in AI Applications

Recent data breaches in AI companion apps illustrate concrete privacy risks. In 2025, two AI companion applications, Chattee Chat and GiMe Chat, exposed over 43 million intimate messages and 600,000 images and videos after their developers left a server completely unsecured. With no basic security protections in place, anyone with a link could access private conversations. The breach demonstrated that AI data security remains inadequate across many platforms. Users had shared deeply personal conversations, and some had spent thousands of dollars in the apps, trusting the platforms to keep those interactions confidential. The exposure created risks of blackmail, identity theft, and public embarrassment. This incident is just one documented case; many AI data breaches likely go unreported or undiscovered.

Training Data Usage and Model Development

One of the most significant privacy concerns in AI involves how platforms use conversation data for model training. Unless you opt out, information you enter into ChatGPT or Gemini may be added to datasets used to train more advanced models. Your proprietary business strategies, personal health information, or creative intellectual property could theoretically be absorbed into a model and resurface in later outputs. Even anonymized datasets can reveal personal information through de-anonymization techniques, for example by matching leftover details such as ZIP code, birth date, and sex against public records. Generative AI companies argue that this data usage is necessary for model improvement, but users typically don’t understand or consent to this level of data exploitation.
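De-anonymization is easier to grasp with a concrete case. The sketch below shows a classic linkage attack in Python. Everything in it is hypothetical: the records, names, and the choice of ZIP code, birth date, and sex as "quasi-identifiers" are invented for illustration. It shows how a dataset with names stripped out can still be matched back to individuals when a few ordinary attributes line up with a public record.

```python
# Hypothetical "anonymized" export: names removed, but ordinary attributes kept.
anonymized_chats = [
    {"zip": "02138", "birth_date": "1965-07-31", "sex": "F", "topic": "medication side effects"},
    {"zip": "94110", "birth_date": "1990-01-15", "sex": "M", "topic": "startup pitch deck"},
]

# Hypothetical public dataset (think of a voter roll) that includes names.
public_records = [
    {"name": "Alice Example", "zip": "02138", "birth_date": "1965-07-31", "sex": "F"},
    {"name": "Bob Example", "zip": "94110", "birth_date": "1990-01-15", "sex": "M"},
    {"name": "Carol Example", "zip": "94110", "birth_date": "1988-03-02", "sex": "F"},
]

# Attributes that are harmless alone but identifying in combination.
QUASI_IDENTIFIERS = ("zip", "birth_date", "sex")


def quasi_key(record):
    """Build a lookup key from the quasi-identifier fields of a record."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(anonymized, public):
    """Return (name, topic) pairs for rows that match exactly one public record."""
    index = {}
    for person in public:
        index.setdefault(quasi_key(person), []).append(person["name"])
    matches = []
    for row in anonymized:
        names = index.get(quasi_key(row), [])
        if len(names) == 1:  # a unique match re-identifies the "anonymous" row
            matches.append((names[0], row["topic"]))
    return matches


if __name__ == "__main__":
    print(link(anonymized_chats, public_records))
    # → [('Alice Example', 'medication side effects'), ('Bob Example', 'startup pitch deck')]
```

The sketch only reports unique matches, because an attribute combination shared by several people does not single anyone out. Larger real-world datasets work on the same principle.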

Location Tracking and Behavioral Profiling

Several popular AI apps, including Google Gemini, Microsoft Copilot, and Perplexity, collect precise location data. This enables detailed behavioral profiling that reveals movement patterns, frequent locations, and daily routines. When combined with conversation content, location data can be used to build comprehensive profiles that predict user interests, preferences, and vulnerabilities. That information is valuable for targeted advertising and manipulation, and it becomes a security threat if exposed. Location tracking by AI apps also extends beyond the platform itself and feeds into broader corporate data collection ecosystems.

Third-Party Data Sharing

Research reveals that about 30% of the popular AI chatbots analyzed share user data with third parties. Copilot, Poe, and Jasper, for example, disclose data sharing, including for targeted advertising, in fine print that few users read. Third-party recipients may include advertising networks, analytics providers, and data brokers who independently monetize user information. This sharing extends exposure beyond the primary AI platform to an unknown number of secondary and tertiary data handlers.

Regulatory Non-Compliance

The EU AI Act, which entered into force in 2024, establishes comprehensive requirements for AI system governance, including data governance obligations that work alongside the GDPR’s privacy protections. It is far from clear that all popular AI apps meet these standards. The Act explicitly prohibits AI practices that exploit vulnerable groups or use manipulative techniques, and aggressive data collection and unclear consent mechanisms may put some applications at odds with EU rules. Regulatory investigations into Google Gemini, DeepSeek, and other platforms suggest enforcement actions will intensify throughout 2025 and beyond.

Regulatory Framework and Legal Protections

The European Union AI Act

The EU AI Act, which entered into force on 1 August 2024, is the world’s first comprehensive regulatory framework specifically governing artificial intelligence systems. Its obligations phase in gradually: bans on prohibited practices have applied since February 2025, and most requirements for high-risk systems follow in 2026. The legislation applies to companies offering AI systems in the European Union and sorts AI applications into categories by risk level. High-risk AI applications require enhanced documentation, conformity assessments, and data governance. The framework prohibits specific practices, including social scoring and, with narrow exceptions, real-time remote biometric identification in publicly accessible spaces for law enforcement. Providers of high-risk AI systems must implement robust risk management and maintain detailed documentation of data flows and algorithmic processes. Together with the GDPR’s rules on automated decision-making, the Act gives people in the EU explicit rights regarding AI-driven decisions, including human oversight of significant automated decisions.

GDPR and International Privacy Laws

The General Data Protection Regulation (GDPR) established foundational privacy protections for people in the EU. Its reach is also extraterritorial: it applies to any organization processing the personal data of people in the EU, regardless of where the company is located. This means American AI companies like OpenAI and Google must comply with GDPR when serving European users. Similar frameworks have emerged globally: California’s CCPA, China’s PIPL, and newer state-level laws create a fragmented but expanding regulatory landscape. As of early 2025, 82% of the global population is covered by at least one data privacy law.

Emerging U.S. State-Level Regulations

The United States lacks comprehensive federal AI privacy legislation, but state-level action is accelerating. Utah’s Artificial Intelligence Policy Act (2024), one of the first state laws aimed specifically at AI, centers on transparency and requires disclosure when consumers interact with generative AI. Texas, Montana, Oregon, California, and other states have enacted privacy laws with specific implications for AI data handling. As more states implement privacy regulations, AI companies face increasing compliance complexity, particularly those operating nationally or globally.

Practical Steps to Protect Your Privacy

Disable Data Collection Features

Most AI platforms offer settings to limit data collection. In ChatGPT, open Data Controls in settings and opt out of model training. In Google Gemini, turn off “Gemini Apps Activity,” or if you keep it on, set auto-delete to the shortest option, 3 months. Even with activity turned off, Google keeps recent conversations for up to 72 hours. Microsoft Copilot users can limit tracking through privacy settings. These adjustments don’t eliminate all data collection, but they reduce exposure significantly. Review privacy settings regularly, since AI companies frequently update their policies.

Use Temporary or Incognito Modes

Several AI platforms offer temporary chat modes that keep conversations out of your saved history. ChatGPT’s Temporary Chat, for example, does not appear in your history, is not used for model training, and is deleted from OpenAI’s systems within 30 days. Using these modes limits long-term storage of your conversations. However, even temporary chats may be retained briefly for safety and abuse monitoring.

Avoid Sensitive Information

Never share proprietary business information, personal health data, financial details, or identifying information in public AI applications. Treat AI interactions as potentially public communications. For sensitive use cases, consider self-hosted AI models or enterprise AI solutions with dedicated privacy and security provisions.
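If you need an AI assistant’s help with text that contains personal details, one precaution is to scrub obvious identifiers before you paste it in. Below is a minimal Python sketch of that idea. Its limits are deliberate: the regular expressions are illustrative and cover only common email formats, US-style phone numbers, and 16-digit card numbers. They will miss names, addresses, account numbers, and many other identifiers, so treat the script as a first pass, not a guarantee.

```python
import re

# Illustrative patterns only: they catch common email, US-style phone, and
# 16-digit card formats, and will miss names, addresses, and much else.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "CARD": re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]?\d{3}[-.\s]?\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace each match with a placeholder such as [EMAIL].

    Patterns run one after another on the updated text. The placeholders
    contain no digits or '@', so later patterns cannot re-match them.
    """
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    prompt = "Email jane.doe@example.com or call 555-123-4567 about card 4111 1111 1111 1111."
    print(redact(prompt))
    # → Email [EMAIL] or call [PHONE] about card [CARD].
```

Pattern-based scrubbing is fast and runs locally. Anything that doesn’t fit a pattern still gets through, so read the redacted text before sending it.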

Review Privacy Policies Regularly

While dense and complex, AI privacy policies contain crucial information about data practices. Allocate time quarterly to review privacy updates from AI platforms you use. Watch for changes in data retention, third-party sharing, or access policies. Subscribe to privacy news sources that track AI company policy changes.

Consider Privacy-Focused Alternatives

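Platforms like Claude (by Anthropic) and Grok (by xAI) are often cited as more privacy-friendly alternatives, but check their current defaults before relying on them. Anthropic historically did not train on consumer conversations without permission; since late 2025 it asks consumer users to choose whether their chats can be used for training. Grok has scored better than several major competitors in some independent privacy rankings. Regional platforms with strong regulatory compliance may also offer better privacy protections than global giants.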

Use VPN Services and Privacy Tools

Virtual Private Networks encrypt your internet traffic and mask your IP address, which limits IP-based location tracking by AI platforms. They do not stop apps from using device location permissions or from identifying you through your logged-in account. Password managers help you maintain unique credentials for each service, and privacy-focused browsers and DNS services add further layers of protection. However, these tools complement careful privacy practices rather than replace them.

The Future of Privacy in AI

The trajectory of privacy in AI remains uncertain but increasingly regulated. Governments globally recognize the importance of data protection in AI systems. More sophisticated regulatory frameworks will emerge, raising privacy standards and enforcement. AI companies face mounting pressure to adopt transparent data practices and implement privacy-by-design principles. However, the competitive pressure to develop advanced models may continue incentivizing aggressive data collection despite regulatory constraints. Consumer awareness increasingly influences business practices; users demanding stronger privacy protections drive platform changes. The intersection of AI innovation and privacy protection will define the technology sector’s future. Organizations and users who prioritize privacy concerns in AI today position themselves advantageously as regulations tighten and norms evolve.


Conclusion

Privacy issues with popular AI apps represent one of the defining challenges of 2025 as artificial intelligence becomes embedded throughout daily life. Platforms like ChatGPT, Google Gemini, and DeepSeek collect unprecedented volumes of personal data, creating vulnerabilities that extend beyond individual users to organizations, economies, and societies. While regulatory frameworks like the EU AI Act and GDPR establish protective standards, enforcement remains inconsistent, and many AI companies continue aggressive data collection practices.

Users must adopt a proactive stance, disabling optional data collection features, avoiding sensitive information in public AI platforms, and regularly reviewing privacy policies. The responsibility for privacy protection cannot rest solely on users; AI companies, policymakers, and regulators must collaborate to establish meaningful standards that balance innovation with fundamental rights to privacy. The coming years will determine whether AI systems evolve toward privacy-respecting models or entrench invasive data collection as an industry norm. Your awareness and choices today contribute to shaping that future.